Minnesota 'Acting as a Ministry of Truth' With Anti-Deep Fake Law, Says Lawsuit

The broad ban on AI-generated political content is clearly an affront to the First Amendment.

A new lawsuit takes aim at a Minnesota law banning the "use of deep fake technology to influence an election." The measure—enacted in 2023 and amended this year—makes it a crime to share AI-generated content if a person "knows or acts with reckless disregard about whether the item being disseminated is a deep fake" and the sharing is done without the depicted individual's consent, intended to "injure a candidate or influence the result of an election," and either within 90 days before a political party nominating convention or after the start of the absentee voting period prior to a presidential nomination primary, any state or local primary, or a general election.

Christopher Kohls, a content creator who goes by Mr. Reagan, and Minnesota state Rep. Mary Franson (R–District 12B) argue that the law is an "impermissible and unreasonable restriction of protected speech."

You are reading Sex & Tech, from Elizabeth Nolan Brown.

Minnesota's Deep Fake Law

Violating Minnesota's deep fake law is punishable by up to 90 days' imprisonment and/or a fine of up to $1,000. Penalties increase if the offender has a prior conviction for the same offense within the past five years or if the deep fake is determined to have been shared with an "intent to cause violence or bodily harm." The law also allows the Minnesota attorney general, county or city attorneys, individuals depicted in the deep fake, or any candidate "who is injured or likely to be injured by dissemination" to sue for injunctive relief "against any person who is reasonably believed to be about to violate or who is in the course of violating" the law.

If a candidate for office is found guilty of violating this law, they must forfeit the nomination or office and are henceforth disqualified "from being appointed to that office or any other office for which the legislature may establish qualifications."

There are obviously a host of constitutional problems with this measure, which defines "deep fake" very broadly: "any video recording, motion-picture film, sound recording, electronic image, or photograph, or any technological representation of speech or conduct substantially derivative thereof" that is realistic enough for a reasonable person to believe it depicts speech or conduct that did not occur, and that was produced through "technical means" rather than "the ability of another individual to physically or verbally impersonate such individual."

Under this definition, any sort of AI-generated content that carries a political message or depicts a candidate could be criminal, even if the image is absurd (for instance, that image of a pregnant Kamala Harris being embraced by Donald Trump that generated controversy last month) or benign (like using AI to generate a picture of a candidate in front of an American flag) and even if the content is clearly labeled as parody or fantasy. The law does not state that a so-called deep fake must be shared with an intent to deceive nor that it had to actually deceive anyone, merely that a reasonable person might possibly be fooled by it.

What's more, the law bans not just the creation of such an image or its publication by the person who created it but any dissemination of the image if the person sharing it is "reckless" about determining its origin. That could leave anyone who shares such an image on social media liable.

And the law singles out a certain type of content production—that created by technical means—while still allowing parody and impersonation created by human "ability."

All of this creates a perfect storm of First Amendment violation.

Parody, satire, and fantasy, even about political candidates, are protected by the First Amendment. Heck, even lying about a candidate is protected by the First Amendment. So it's hard to see how creating or sharing less-than-100%-accurate images, videos, or audio could, on its own, be criminalized.

This isn't to say that the use of politics-related deep fakes could never be criminal. Using them in a commercial capacity could perhaps factor into a fraud case or a campaign finance violation, for instance. But the crime here cannot simply be the creation or sharing of AI-generated content depicting a political candidate or commenting on a political issue.

Suit: Minnesota "acting as a Ministry of Truth"

The possibility of being punished for protected speech lies at the heart of the Kohls and Franson lawsuit.

"Mr. Reagan earns a livelihood from his content, and Rep. Franson communicates with her constituents and party members on social media," according to a press release from their lawyers at the Hamilton Lincoln Law Institute (HLLI) and Upper Midwest Law Center (UMLC). "Both hope to continue posting and sharing videos and political memes online, including those created in part with AI."

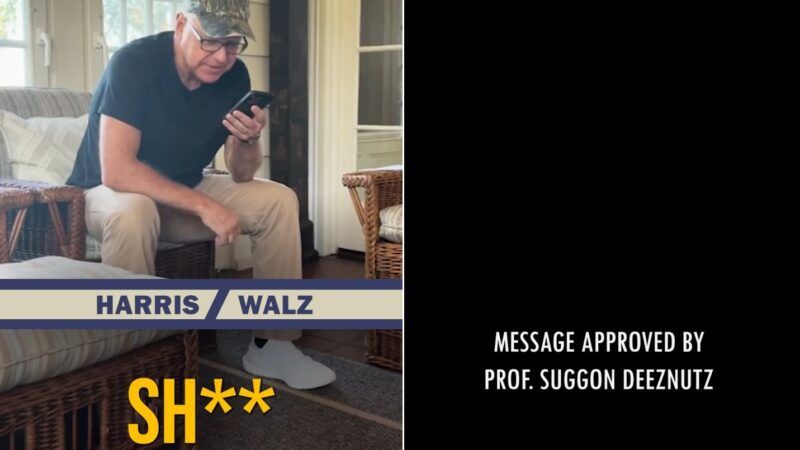

Kohls has already come under fire for a parody Harris campaign video he created. The video, which was labeled as a parody, was shared by Elon Musk, and Franson reshared Musk's post. Both Kohls and Franson worry that their actions could run afoul of Minnesota's deep fake law.

"While [Franson] tweets her own commentary on events, many of her posts are 'memes,' images or video clips created by others and either 'retweeted' into her X feed or uploaded with a new tweet," states the complaint. "These memes are often computer-edited or AI-created images and videos featuring the likeness of real politicians for comedic or satirical effect. For example, on September 16, Rep. Franson retweeted an account that posted [laughing emojis] in response to an image by the Babylon Bee, a satire publication, captioned 'Oh No! Tim Walz Just Waved So Hard His Arms Flew Off!' The accompanying image features the likeness of Gov. Walz at a political rally, except his forearms have flown off their bones. In fact, this never happened. The image has been altered for humorous effect."

"The law is supposed to promote democratic elections, but it instead undermines the will of the people by disqualifying candidates for protected speech or subjecting them to lawfare by political opponents," HLLI Senior Attorney Frank Bednarz said.

The suit, filed in the U.S. District Court for the District of Minnesota, seeks a declaration that the Minnesota deep fake law is unconstitutional and can't be enforced.

The law "constitutes an impermissible and unreasonable restriction of protected speech because it burdens substantially more speech than is necessary to further the government's legitimate interests in ensuring fair and free elections," states the complaint. It further argues that the law represents an impermissible content-based restriction on speech, that it is overbroad, and that it "is not narrowly tailored to any government interest. The state has no significant legitimate interest in acting as a Ministry of Truth."

More Sex & Tech News

• Some people won't be satisfied until we must show ID to use any part of the internet at all.

• A study from the group Pregnancy Justice claims that in the year following the repeal of Roe v. Wade, there was a spike in pregnancy-related prosecutions. "That period yielded at least 210 prosecutions, with the majority of cases coming from Alabama (104) and Oklahoma (68)," reports News Nation. "That's the highest number the group has identified over any 12-month period in research projects that have looked back as far as 1973."

• A judge has declared Georgia's six-week abortion ban to be unconstitutional. "Women are not some piece of collectively owned community property the disposition of which is decided by majority vote," wrote Fulton County Judge Robert McBurney in his ruling. "Forcing a woman to carry an unwanted, not-yet-viable fetus to term violates her constitutional rights to liberty and privacy, even taking into consideration whatever bundle of rights the not-yet-viable fetus may have."
