Maybe A.I. Will Be a Threat—To Governments

The designer of China's Great Firewall sees new A.I. tech as a concern for public authorities.

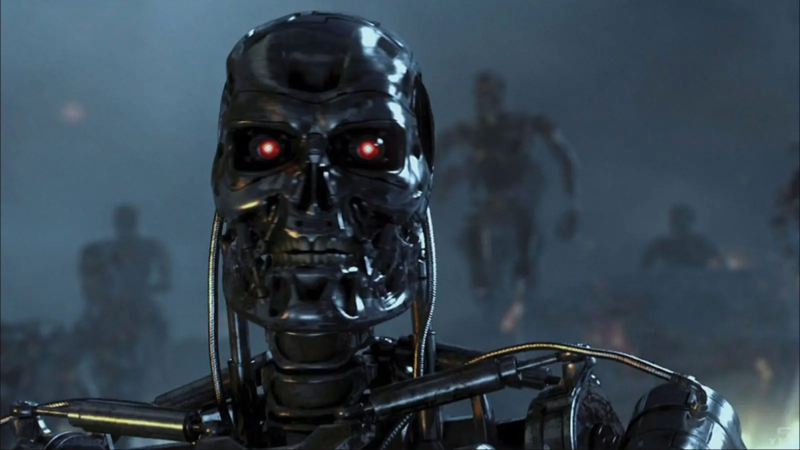

It's easy enough to find warnings that artificial intelligence (A.I.) will eventually, perhaps soon, be a threat to humankind. Those warnings have loomed in science fiction sagas for decades, from the murderous HAL 9000 of 2001: A Space Odyssey to the obliterating robots of the Terminator and Matrix movies. These are modern-day Frankenstein stories, in which man's hubris becomes humanity's downfall as intelligent creations take on a life of their own and come back to kill their makers.

Now, as we enter a new age of readily available consumer A.I. thanks to large language model (LLM) chatbots like ChatGPT, similar warnings have entered popular discourse. You can find those warnings on op-ed pages and in reports about worried lawmakers and policy makers, who, according to a recent New York Times article, are struggling to understand the technology even as they seek to regulate it. Indeed, part of the fear about A.I. is that, unlike, say, a search engine, its inner workings are somewhat mysterious, even to its creators.

At a conference last year on the subject of "Man, Machine, and God," Henry Kissinger captured both the mood of concern and the sense that something must be done. As The Washington Post's David Ignatius reported, Kissinger said that if authorities don't find ways to control A.I. and limit its reach, "it is simply a mad race for some catastrophe."

The precise nature and identity of the authorities in question were left unspecified. But presumably, they are people with access to or influence over government power. With that in mind, consider an alternative scenario: Powerful A.I. might end up being a threat—not to humanity, but to governments, and in particular to authoritarian governments that have sought to limit access to ideas and information.

Don't take it from me: That's more or less the possibility raised by Fang Binxing, a computer scientist and former government official who was a key player in the development of China's Great Firewall. The Great Firewall is an information control system intended to censor and limit access to anything the Chinese government finds objectionable, with an emphasis on political speech. But that sort of censorship relies on the fundamental knowability of how search engines and websites function: Specific offending links, articles, publications, or programs can simply be blocked by government authorities, leaving the rest of the internet accessible. In this architecture, censorship is possible because information-discovery processes are clear, and information itself is discrete and severable.

An A.I., however, is much more of an all-or-nothing proposition. In some sense, an A.I. just "knows" things based on processes that cannot be clearly observed or documented. And that, presumably, is why Fang recently warned that generative A.I.s "pose a big challenge to governments around the world," according to a South China Morning Post summary of his remarks. "People's perspectives can be manipulated as they seek all kinds of answers from AI," Fang reportedly told a Chinese-backed news site.

Accusations of manipulation are more than a little ironic coming from someone behind one of the world's broadest efforts at population-level information control. But one can sense genuine worry that the coming change is inevitable. At his blog Marginal Revolution, economist Tyler Cowen draws out the implications of Fang's remarks in a post titled "Yes, the Chinese Great Firewall will be collapsing."

"I would put it differently, but I think he [Fang] understands the point correctly," Cowen writes. "The practical value of LLMs is high enough that it will induce Chinese to seek out the best systems, and they will not be censored by China." Intriguingly, Cowen also raises the question of what happens when "good LLMs can be trained on a single GPU and held on a phone."

Obviously, all of this is somewhat abstract at the moment, but let me try to put it a little more concretely. Although it's still early, it's clear that A.I. will be too powerful to ignore or restrict entirely. And the nature of LLM-style A.I., with its holistic, contextual, quasi-mysterious approach to synthesizing knowledge, will inevitably mean exposure to facts, arguments, ideas, and concepts that would have been censored under the Great Firewall. In order for China to keep up with the West, it will have to let those complex A.I. systems in, and that will mean letting in all the previously objectionable things those A.I.s "know" as well. Furthermore, as A.I. tech advances and decentralizes, becoming able to run on smaller devices, it will become even more difficult to censor information at scale.

A.I. technology is still in its infancy, and it's too early to predict its future with any great confidence. The scenario I've laid out is hopeful, and not necessarily representative of the path a world populated by A.I.s will take. It's a possibility, not a certainty.

But amid the warnings of apocalypse and doom, and the Frankenstein narratives about rogue A.I., one should at least consider the more optimistic alternative: that advanced artificial intelligence will become a tool of individual empowerment, that it will circumvent traditional systems of top-down information control, that it will be a threat not to human civilization but to authoritarian rule—and, perhaps, more broadly, a kind of rival power center to many of the world's authorities as well. It's no surprise, then, that lawmakers are already looking to regulate A.I. before they even really understand what it is: They know that it's powerful, and that's enough.
