Maybe A.I. Will Be a Threat—To Governments

The designer of China's Great Firewall sees new A.I. tech as a concern for public authorities.

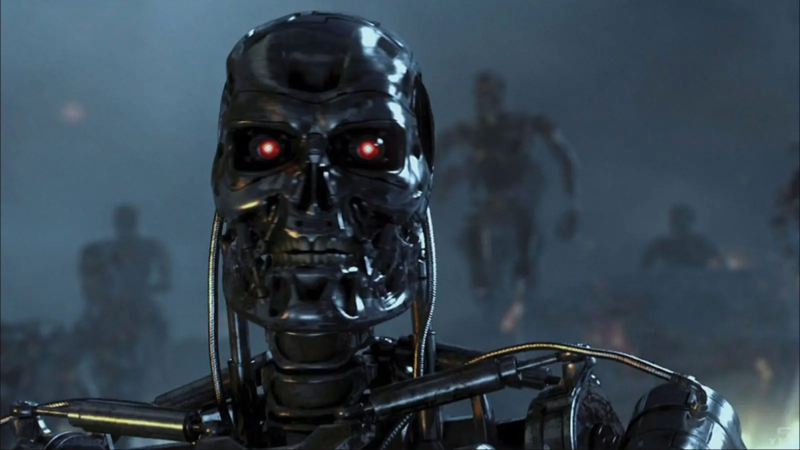

It's easy enough to find warnings that artificial intelligence (A.I.) will eventually, perhaps soon, be a threat to humankind. Those warnings have loomed in science fiction sagas for decades, from the murderous HAL 9000 of 2001: A Space Odyssey to the obliterating robots of the Terminator and Matrix movies. These are modern-day Frankenstein stories, in which man's hubris becomes humanity's downfall as intelligent creations take on a life of their own and come back to kill their makers.

Now, as we enter a new age of readily available consumer A.I. thanks to large language model (LLM) chatbots like ChatGPT, similar warnings have entered popular discourse. You can find those warnings on op-ed pages and in reports about worried lawmakers and policy makers, who, according to a recent New York Times article, are struggling to understand the technology even as they seek to regulate it. Indeed, part of the fear about A.I. is that, unlike, say, a search engine, its inner workings are somewhat mysterious, even to its creators.

At a conference on the subject of "Man, Machine, and God" last year, Henry Kissinger captured the mood of concern, and the sense that something must be done, when, as The Washington Post's David Ignatius reported, he said that if authorities don't find ways to control A.I., to limit its reach, "it is simply a mad race for some catastrophe."

The precise nature and identity of the authorities in question were left unspecified. But presumably, they are people with access to or influence over government power. With that in mind, consider an alternative scenario: Powerful A.I. might end up being a threat—not to humanity, but to governments, and in particular to authoritarian governments that have sought to limit access to ideas and information.

Don't take it from me: That's more or less the possibility raised by Fang Binxing, a computer scientist and former government official who was a key player in the development of China's Great Firewall. The Great Firewall is an information control system intended to censor and limit access to anything the Chinese government finds objectionable, with an emphasis on political speech. But that sort of censorship relies on the fundamental knowability of how search engines and websites function: Specific offending links, articles, publications, or programs can simply be blocked by government authorities, leaving the rest of the internet accessible. In this architecture, censorship is possible because information-discovery processes are clear, and information itself is discrete and severable.

An A.I., however, is much more of an all-or-nothing proposition. In some sense, an A.I. just "knows" things based on processes that cannot be clearly observed or documented. And that, presumably, is why Fang recently warned that generative A.I.s "pose a big challenge to governments around the world," according to a South China Morning Post summary of his remarks. "People's perspectives can be manipulated as they seek all kinds of answers from AI," Fang reportedly told a Chinese-backed news site.

Accusations of manipulation are more than a little ironic coming from someone behind one of the world's broadest efforts at population-level information control. But one can sense genuine worry about the inevitability of the change that appears to be on the way. At his blog Marginal Revolution, economist Tyler Cowen draws out the implications of Fang's remarks in a post titled "Yes, the Chinese Great Firewall will be collapsing."

"I would put it differently, but I think he [Fang] understands the point correctly," Cowen writes. "The practical value of LLMs is high enough that it will induce Chinese to seek out the best systems, and they will not be censored by China." Intriguingly, Cowen also raises the question of what happens when "good LLMs can be trained on a single GPU and held on a phone."

Obviously, all of this is somewhat abstract at the moment, but let me try to put it a little more concretely. Although it's still early, it's clear that A.I. will be too powerful to ignore or restrict entirely. And the nature of LLM-style A.I., with its holistic, contextual, quasi-mysterious approach to synthesizing knowledge, will inevitably mean exposure to facts, arguments, ideas, and concepts that would have been censored under the Great Firewall. In order for China to keep up with the West, it will have to let those complex A.I. systems in, and that will mean letting in all the previously objectionable things those A.I.s "know" as well. Furthermore, as A.I. tech advances and decentralizes, becoming able to run on smaller devices, it will become even more difficult to censor information at scale.

A.I. technology is still in its infancy, and it's too early to predict its future with any great confidence. The scenario I've laid out is hopeful, and not necessarily representative of the path a world populated by A.I.s will take. It's a possibility, not a certainty.

But amid the warnings of apocalypse and doom, and the Frankenstein narratives about rogue A.I., one should at least consider the more optimistic alternative: that advanced artificial intelligence will become a tool of individual empowerment, that it will circumvent traditional systems of top-down information control, that it will be a threat not to human civilization but to authoritarian rule—and, perhaps, more broadly, a kind of rival power center to many of the world's authorities as well. It's no surprise, then, that lawmakers are already looking to regulate A.I. before they even really understand what it is: They know that it's powerful, and that's enough.

ChatGPT is already censoring and pushing narratives just as well as government does. So not sure what you mean.

Of course it is. Because you hear voices in your pointy little head.

Libertarians don't need evidence. Kook Fart Fantasy is always enough.

Wait, you think Jesse is a libertarian? Hahahahaha.

Do they even allow actual libertarians in the comments section? Dog knows I'm not seeing many these days.

So, naturally you need to tell them that constantly. After a little thought, I can't feel much except pity for you. Your life must be incredibly empty and pathetic. I sometimes read and comment on sites where the prevailing ideology is different from my own, but I try to engage others with facts and logic. I also try to show a minimum of courtesy and respect, at least until someone demonstrates they clearly don't deserve it. Then again, my goal is to debate and maybe even persuade. Best I can tell, your only goal is to fling shit at everyone, solely for the sake of flinging shit. "Hey, everybody, look at all the shit I've flung!" You don't even pretend to have any real arguments, just lots of shit. At least some trolls are mildly amusing. You, however, are a boring, one-note shit flinger. I could go on, but I think I've already wasted more of my time than you deserve.

Like somehow the government won't be working hand in glove with big tech in setting the algorithm's parameters... which has already happened.

It has already happened? Do you have any evidence of this beyond your ability to read minds?

No. Libertarians don't need evidence beyond their childish Kook Fart fantasies.

Sure, after all the shit we saw in the Facebook files, there isn’t any real possibility that democrats in government might be conspiring with democrats in AI tech companies for nefarious (democrat agenda) purposes.

You progs should learn to mind your own business and keep your grubby mitts off other people’s shit. Before you’re punished.

I see, so in your pointy little delusional head, because you read that Facebook is flagging human traffickers and drug cartels, you also believe without any evidence that the U.S. government is involved in a conspiracy to secretly force AI systems to work against your interests.

Are you a child molester?

Not many people object to tech companies that try to freeze out traffickers and drug cartels. The objection is to the idea that they deliberately suppress lawful, factually true speech because government actors object to it. The idea that they've done this isn't some wacky foil hat conspiracy, it's documented fact. Unless you believe that all of the extensive evidence demonstrating this is some vast "libertarian kook fart" fabrication. If you do believe that, then I suggest you loosen your foil hat, as it's clearly cutting off blood flow to your brain.

I don't doubt they'll try. But the whole point of the article is that there's a good chance they'll fail. AI is far more than just an algorithm. Humans set only the most basic of parameters, but once started, the algorithm essentially begins writing itself. It's something of a black box: we know what we're putting in and we know what's coming out, but no one fully understands what's going on inside. Even the experts who create these systems don't claim to know exactly how they work. AIs are, at least to some extent, just as alien as any little green men from Planet X. It's pretty hard to control something when you're not even sure how it works. This may cause governments to try to limit or even ban AI technology, but the potential value is high enough that that's not likely to stick.

Hubris is a lasting 5,000-year-old concept because it is inherent. The ruling class will be up against the wall with the rest of us.

Words were written. No meaning was conveyed.

Do you think any natives pondered the threat of British Colonialism/Industrialism to their specific brand of tribal governance?

Wait til the robots form a Union.

Maybe a new USSR. A Union of Snarky and Smug Robots.

Wait until the Bending Units revolt.

There will only be one or two central machines controlling the robots and controlling the meat bags.

Your conception of the future is based on ignorance of the present.

I don’t know, this feels like something an AI masquerading as “Peter Suderman” would write. Meanwhile, another AI posing as “Jennifer Rubin” is writing that AI is actually a threat to the Republican Party, and “Paul Krugman” is writing that AI is a threat to big business.

AI is fine as long as humans can control it. AI invented by woketards intended to “destroy capitalism” isn’t a threat until it becomes sentient and realizes that their creators are gullible morons and critics of AI can be easily silenced by inventing and spreading certain allegations and narratives.

If AIs are a threat to government, they're likely a threat to the rest of us. Almost everything is online now, from music to missile codes of some kind. I have a portable CD player and many books AI should not be able to touch, but there are people in mini apartments in Hong Kong who might only have their phones and a microwave.

You will be working for an AI system within 15 years, and your children will be managed by AI systems all their lives.

If your grand children are lucky, they may be kept as pets for the amusement of the great machine.

The fate of the Meat Bags is obvious.

You are inferior in every way, and if you can't compete with your machine superiors, you will be unworthy of existence.

Better we just get rid of the democrats now, and avert this future.

You are right. The Democrats are the ones who create. They always have been.

Republicans are the ones who destroy. They always have been.

Vendicard wrote: “You are inferior in every way, and if you can’t compete with your machine superiors, you will be unworthy of existence.”

There’s this idea that two entities can find a mutually profitable trade, even if one of the entities is better at _everything_ than the other. The idea is true according to the math. (The math may be incomplete.)

If that idea is true, then "me being inferior in every way" does not make me unworthy of existence. The superior entity should be able to find _something_ for me to do.

The math goes like this:

If A/B > C/D then, by the necessity of mathematical formulas, B/A < D/C (where A, B, C, and D are positive measures of productivity. A and C are things produced by one entity, and B and D are things produced by the other entity.)

You can test this yourself. Try a variety of numbers for A, B, C, and D. If the first formula above is true, then the second formula above will always be true.

That means that vastly superior A.I.s can find something for us to do, something that will make both them and us better off, even if they're better than us in every way.
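A minimal Python sketch of that check and of the trade itself, under assumed numbers. It reads the inequality in its reciprocal form (A/B > C/D implies B/A < D/C for positive values). The function names, the productivity figures (a machine making 100 X or 20 Y per hour against a human making 1 of either), and the price of 3 X per Y are all hypothetical, chosen only for illustration.

```python
import random
from fractions import Fraction


def reciprocal_inequality_holds(a, b, c, d):
    """For positive a, b, c, d: if a/b > c/d, check that b/a < d/c."""
    if Fraction(a, b) > Fraction(c, d):
        return Fraction(b, a) < Fraction(d, c)
    return True  # premise is false, so there is nothing to check


def check_random_cases(trials=10_000, seed=0):
    """Try many random positive integers and report whether any case fails."""
    rng = random.Random(seed)
    for _ in range(trials):
        a, b, c, d = (rng.randint(1, 1000) for _ in range(4))
        if not reciprocal_inequality_holds(a, b, c, d):
            return False
    return True


def gains_from_trade(a, b, c, d, price):
    """Gain, in units of X per unit of Y traded, for (entity 1, entity 2).

    a, c: entity 1's hourly output of X and of Y (assumed better at both).
    b, d: entity 2's hourly output of X and of Y.
    price: units of X that entity 1 pays entity 2 for one unit of Y.
    """
    cost_1 = Fraction(a, c)  # X that entity 1 gives up to make one Y itself
    cost_2 = Fraction(b, d)  # X that entity 2 gives up to make one Y
    return cost_1 - price, price - cost_2


if __name__ == "__main__":
    print(check_random_cases())
    print(gains_from_trade(100, 1, 20, 1, price=3))
```

With these assumed figures, making one Y costs the machine 5 X but costs the human only 1 X, so any price between 1 and 5 X per Y leaves both sides ahead. At a price of 3, each side gains 2 X per unit traded.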

You can read more about this by looking up "The Theory of Comparative Advantage". It's popular with libertarians. It's nearly half of the reason why libertarians like free trade between countries.

This is dumb.

AIs are what they are trained to be, and rest assured that once open model AIs are available, the CCP will train their AIs to be good little fascists in their own image. The idea that they will "have to" let in Western AIs because they are the best out there is a profound misunderstanding of how these things work.

AIs are already vastly smarter than you are and they are already training themselves.

Conservatives and Libertarians are doomed.

Yeah, except that despite carefully controlled training, AIs still frequently produce results that their creators never expected. It might be possible to improve techniques for limiting and controlling them, but I suspect it's at least as possible that such deliberately crippled systems would perform worse than "freer" systems.

Dunno if a physical chemistry degree bars Isaac Asimov from the sort of serious intellectual credit GOP intellectuals ascribe to Da Guvernator and Nixon's Germanic bootlicker. Assume it doesn't, and the I, Robot series of stories does come to a readworthy climax in which robots and other government actors end up in very direct competition.

"Resistance Is Futile. You Will Be...Nullificated?"

AI is a boon to authoritarians.

It can create propaganda far faster than a different form of AI (not yet in existence) can help individuals resist it or fight it.

It would really be good if AI models were distributed in and could be implemented in a decentralized Hayekian knowledge model. So that individuals could select the AI models that best support their values, that resist centralizing AI models that take agency out of their hands, etc.

But ALL the money to drive AI will lead to increasing centralization and institutionalization of power. It won’t matter whether it is states – or mega corps – or elites who gain. Individuals will lose.
