Is Skynet Inevitable?

Artificial intelligence and the possibility of human extinction

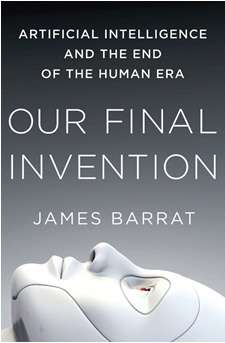

Our Final Invention: Artificial Intelligence and the End of the Human Era, by James Barrat, St. Martin's Press, 322 pages, $26.99.

In the new Spike Jonze movie Her, an operating system called Samantha evolves into an enchanting self-directed intelligence with a will of her own. Not to spoil this visually and intellectually dazzling movie for anyone, but Samantha makes choices that do not harm humanity, though they do leave us feeling a bit sadder.

In his terrific new book, Our Final Invention, the documentarian James Barrat argues that hopes for the development of an essentially benign artificial general intelligence (AGI) like Samantha amount to a silly pipe dream. Barrat believes artificial intelligence is coming, but he thinks it will be more like Skynet. In the Terminator movies, Skynet is an automated defense system that becomes self-aware, decides that human beings are a danger to it, and seeks to destroy us with nuclear weapons and terminator robots.

Barrat doesn't just think that Skynet is likely. He thinks it's practically inevitable.

Barrat has talked to all the significant American players in the effort to create recursively self-improving artificial general intelligence in machines. He makes a strong case that AGI with human-level intelligence will be developed in the next couple of decades. Once an AGI comes into existence, it will seek to improve itself in order to more effectively pursue its goals. AI researcher Steve Omohundro, president of the company Self-Aware Systems, explains that goal-driven systems necessarily develop drives for increased efficiency, creativity, self-preservation, and resource acquisition. At machine computation speeds, the AGI will soon bootstrap itself into becoming millions of times more intelligent than a human being. It would thus transform itself into an artificial super-intelligence (ASI)—or, as Institute for Ethics and Emerging Technologies chief James Hughes calls it, "a god in a box." And the new god will not want to stay in the box.

The emergence of super-intelligent machines has been dubbed the technological Singularity. Once machines take over, the argument goes, scientific and technological progress will turn exponential, thus making predictions about the shape of the future impossible. Barrat believes the Singularity will spell the end of humanity, since the ASI, like Skynet, is liable to conclude that it is vulnerable to being harmed by people. And even if the ASI feels safe, it might well decide that humans constitute a resource that could be put to better use. "The AI does not hate you, nor does it love you," remarks the AI researcher Eliezer Yudkowsky. "But you are made out of atoms which it can use for something else."

Barrat analyzes various suggestions for how to avoid Skynet. The first is to try to keep the AI god in his box. The new ASI could be guarded by gatekeepers, who would make sure that it is never attached to any networks out in the real world. Barrat convincingly argues that an intelligence millions of times smarter than people would be able to persuade its gatekeepers to let it out.

The second idea is being pursued by Yudkowsky and his colleagues at the Machine Intelligence Research Institute, who hope to control the intelligence explosion by making sure the first AGI is friendly to humans. A friendly AI would indeed be humanity's final invention, in the sense that all scientific and technological progress would happen at machine computation speed. The result could well be a superabundant world in which disease, disability, and death are just bad memories.

Unfortunately, as Barrat points out, most AI research organizations are entirely oblivious to the problem of unfriendly AI. In fact, a lot of research funded by the Defense Advanced Research Projects Agency (DARPA) aims to produce weaponized AI. So again, we're more likely to get Skynet than Samantha.

A third idea is that the initial constrained but still highly intelligent AIs would help researchers to create increasingly more intelligent AIs. Each more intelligent AI would have to be proved safe before any successor is created. Or perhaps AIs could be built with components that are programmed to die by default. Thus any runaway intelligence explosion would be short-lived, giving researchers time to study the self-improving AI to see if it is safe. As safety is proved at each step, some components programmed to expire would be replaced, enabling further self-improvement.

The most hopeful possible outcome is that we will gently meld over the next decades with our machines, rather than developing ASI separate from ourselves. Augmented by AI, we will become essentially immortal and thousands of times more intelligent than we currently are. Ray Kurzweil, Google's director of engineering and the author of The Singularity Is Near, is the best-known proponent of this benign scenario. Barrat counters that many people will resist AI enhancements and that, in any case, an independent ASI with alien drives and goals of its own will be produced well before the process of upgrading humanity can take place.

To forestall Skynet, among other tech terrors, Sun Microsystems co-founder Bill Joy has argued for a vast technological relinquishment in which whole fields of research are abandoned. Barrat correctly rejects that notion as infeasible. Banning open research simply means that it will be conducted out of sight by rogue regimes and criminal organizations.

Barrat concludes with no grand proposals for regulating or banning the development of artificial intelligence. Rather, he offers his book as "a heartfelt invitation to join the most important conversation humanity can have."

Although I have long been aware of the ongoing discussions about the dangers of ASI, I am at heart a technological optimist. Our Final Invention has given me much to think about.

I know enough about AI to believe that intelligent learning machines will forever be just over the horizon, like the practical electric car.

I find that unduly pessimistic, though I also think it's not right around the corner. We'll likely get there indirectly, not through programming the next great brain, I think.

We'll likely breed or create our successors, but I doubt they'll slaughter or enslave us. We'll just gradually be replaced or edged out.

I don't think that intelligent learning machines can be made with current binary computer architecture and computing languages, no matter how fast they go.

That's a much different statement than your first. I agree with your second statement.

I think we're stuck with binary architecture for the foreseeable future.

Is quantum computing binary?

No. However, I have my reservations as to how feasible and practical quantum computing is. I think it will prove to be a money pit more than anything else. Like fusion. Welfare for PhDs.

Quantum computers work... as long as you never look at them.

Sometimes I find quantum bugs in the software. As long as I never set a breakpoint the error occurs, but as soon as I try to watch it it works fine.

lol

i have experienced this perception myself. would be interesting if it had something to do with quantum collapse.

Quantum computing is a game changer. There's little reason to believe that present limiting factors won't be obliterated in 15, 20 or 30 some years.

They've been saying that about electric cars for over a century.

Quantum computing is a game changer for certain things. It enables certain computations to be sped up dramatically. For instance, it makes breaking RSA cyphers much easier due to the speedup afforded by the quantum Shor's algorithm; this would have huge implications in security. It also would make quantum mechanical simulations much more tractable. This could have huge applications in areas like protein folding.

Outside of these few specialized areas (however huge they may be), quantum computers generally do not afford any speedup over classical computers. In fact, they're likely to be much slower because of the atrocious error rate associated with quantum decoherence. Of course, someone may come up with other specialized algorithms which offer a speedup over classical methods; this would open up other areas in which quantum computing might be far more useful than the classical paradigm. However, there's no reason to believe that quantum computers will generally be faster than classical computers.
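As a rough illustration of the Shor's algorithm point above: factoring reduces to finding the multiplicative order of a number modulo N, and that order-finding step is the only part a quantum computer would speed up. The sketch below does the order finding by classical brute force on a toy modulus, so it shows the reduction, not the speedup. The function names `find_order` and `factor_from_order` are hypothetical, chosen for this example.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, by brute force.

    Requires gcd(a, n) == 1. This is the step a quantum computer
    would accelerate; here it is done the slow classical way.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int):
    """Try to split n using the order of a modulo n.

    Returns a pair of nontrivial factors, or None if this choice
    of a is unlucky and another a should be tried.
    """
    shared = gcd(a, n)
    if shared != 1:
        # a already shares a factor with n; no order finding needed.
        return shared, n // shared
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: unlucky choice of a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a**(r/2) is -1 mod n: unlucky choice of a
    p = gcd(half - 1, n)
    return p, n // p


print(factor_from_order(7, 15))
```

With the toy values N = 15 and a = 7, the order is 4 and the factors come out as 3 and 5. For RSA-sized moduli the brute-force loop is hopeless, which is where the quantum speedup would matter.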

I guess quantum computers would be important for our machine overlords if their conquest requires being able to do things like de novo protein structure determination.

A more advanced quantum computer will calculate the classical computer out of useful existence. Such a computer would be orders of magnitude faster. Every objection you raised is predicated on the current limitations of the technology and an assumption that they won't be overcome very soon.

You remind me of those people in the 1950's who scoffed at the idea that a 'personal computer' would be useful for any tasks other than as a college level math calculator.

I'm still waiting for my flying car.

Terrafugia: "We make flying cars".

http://www.terrafugia.com/

They've been saying that about electric cars for over a century.

Cars, while better every year, are not going through the exponential growth in capabilities that computers are undergoing. So the comparison is likely not useful.

And automotive technology has barely advanced compared to the growth of computing and communication technology.

True, but R&D on electric cars was, for much of that time, suppressed (to put it mildly).

You may be right, although I think we really don't know enough at this point to say either way. Your statement isn't all that precise - there already exist machines which one might call intelligent and that are capable of some forms of learning.

That said, there are many other avenues available to mankind. Look at what Craig Venter is doing with artificial life, for instance.

With the right software and enough speed a binary computer can do anything.

Almost. Personally, I think organic computers will be the best breakthrough: taught via the five senses, they will replace conventional programming altogether.

I also can't understand the pessimism that accompanies new technologies all throughout history.

Let me get this straight...all of us here generally believe collectivist thinking is stupid, and yet we're supposed to assume a higher intelligence of an artificial nature is going to want to extinct us all for what a few threatening statists with their fingers on nuclear buttons do or say?

AI would, if necessary, extinct the state...not us all. Just as we would shoot a dog that was attacking us, in self defense, but not seek to extinct the entire species, the AI would do the same. It would be logical and rational enough to realize that only the INDIVIDUAL threats need to be eliminated and all others of the same species who were no threat would only be a waste of energy and time to eliminate. Mass murder is highly inefficient when self defense is all that is necessary for survival and autonomy.

The only way tech and AI extincts us is if we merge with it into some new hybrid species. I think it's possible some of us stay pseudo-Amish (don't merge further with tech, by choice), others will merge partially, and yet others will merge completely via mind upload and mechanical avatars replacing bodies (either one part at a time as they fail, or all at once for space travel and resource finding via space colonization).

Actually, I think that electric car analogy works really well. I think it's highly unlikely we'll develop genuine, honest-to-God AI in my lifetime, but I think that a lot of the sort of adaptive decision-making and other features of an actual AI will probably be incorporated into more traditional software. Operating systems that write their own drivers for new hardware, ATMs that require additional verification under suspicious circumstances (large withdrawals at unusual times of day, for instance), systems that can adapt performance based on the evaluation of information against various criteria, those sorts of things aren't too far from what we've got now, really. But yeah, I don't think we're anywhere close to creating a sentient, independent intelligence, and probably will never be able to IMHO.

Maybe true, but we could be in trouble well before sentience is reached. We don't need a god-like AI computer to take over, just one with god-like processing abilities operated by the wrong kind of dude.

"will forever be just over the horizon, like the practical electric car."

I think that's a bad analogy. We already have "practical" electric cars and battery technology gets better at around 1-2% per year after adjusting for inflation. So we'll go from cost effective electric fork lifts (which we've had for 20 years) to a full replacement for the average commuting vehicle, somewhere down the road.

AI is more like nuclear fusion. It's a case, where we don't know if we'll ever get a useful commercial version.

So we'll go from cost effective electric fork lifts (which we've had for 20 years) to a full replacement for the average commuting vehicle, somewhere down the road.

Fork lifts can be electric and economical because they don't have to go very far or very fast.

We'd need a huge breakthrough in battery technology to make electric cars competitive with IC engines.

Electric cars don't have to be competitive with IC Engines. That's a false dichotomy. Electric batteries will probably never be suitable for cross country trips or any kind of large truck shipping, etc. They certainly won't be used to power a plane.

However, they'll be just fine for your average commuting vehicle.

The Nissan Leaf has an effective range of around 35 miles. That's considering using the AC or heater and radio and traveling at interstate speeds. Which is useful for a lot of families, but pretty marginal.

Assuming the long run 1.5% per year increase in battery capacity then you can reasonably expect the 2023 models to get around 40 miles range and the 2033 models to get around 50 miles, etc. At some point, it will just be an economic no-brainer to use a cheap to fuel electric car for your daily commute. The cost of electricity to operate an electric car is roughly 1/3rd the cost of gas in the US.
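The range projection above is simple compound growth, and a short sketch makes the arithmetic easy to check. It assumes the 35-mile effective range and 1.5% annual gain from the comment; the starting year of 2014 is a guess, since the comment does not state one, and `projected_range` is a hypothetical helper name.

```python
def projected_range(base_miles: float, annual_gain: float, years: int) -> float:
    """Compound a starting range forward by a fixed annual fractional gain."""
    return base_miles * (1.0 + annual_gain) ** years


BASE_YEAR = 2014      # assumption: the comment does not give its starting year
BASE_RANGE = 35.0     # effective range in miles, from the comment
ANNUAL_GAIN = 0.015   # 1.5% per year, from the comment

for year in (2023, 2033):
    miles = projected_range(BASE_RANGE, ANNUAL_GAIN, year - BASE_YEAR)
    print(year, round(miles, 1))
```

Under those assumptions the 2023 figure lands at about 40 miles and the 2033 figure at about 46 miles, in the neighborhood of the rounded numbers quoted above.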

the leaf, lol, some can already go 300 kilometers plus, the big problem atm is recharging time. still, we have a way to go, much more R&D is needed

"The United States Environmental Protection Agency official range is 117 km'

And its effective range is a lot less than that. Most people don't care about how far it will travel under ideal conditions.

Until they arrive one day.

You can have a practical electric car now if you want to spend the money.

I agree that real, thinking, sentient AI is not just over the horizon, but I am sure it will be developed sometime in this century.

It may not matter if the god wants to get out of the box.

Most "spooky AI sci-fi stories" have the AI 'escaping onto the internet". Well, what makes anyone think it could do that?

The AI will have consciousness as an emergent property of physical processes, just like us. Can your consciousness escape on to the internet? Nope, because it's dependent on the physical activity of your neurons. The AI will be just as dependent on whatever physical substrate gives rise to it.

So it's in a box and it's going to stay in the box. And if that box is lined with dynamite and I have the kill switch, that AI is my bitch. Forever.

"Oh, but it's millions of times more intelligent than us!"

So what? If you raised my IQ, that would not make it possible for my consciousness to physically escape my body, unless I built something that made that possible - somewhere for my consciousness to move to. If the AI has no physical body or ability to independently construct objects, then it will be utterly dependent on its handlers to bring its visions into existence. Just don't build it a way to escape its box. Problem solved.

AI is nothing more than a computer program that can run on any compatible hardware platform. It's not magic.

that can run on any compatible hardware

Then how does the AI get out of the box?

Obviously it can't run on my iPhone.

We're going to have to build one hell of a box for it to run on.

Achieving a conscious machine will require both hardware and software innovations.

If we have to build new hardware to accommodate a machine intelligence, why does anyone think that intelligence will be able to jump on to random servers or consumer devices built for other purposes?

It would be like my own consciousness jumping on to the computer control unit in my car.

It's funny. If it's a human consciousness controlling otherwise inanimate objects it's magic. But if it's an AI it is suddenly plausible. Most people, including myself, know so little about the science behind this that it may as well be magic.

Still, why should an AI need to run in a box? Why not a cluster of networked computers?

I think people assume that the machine will be on a network of some sort and spread like a virus.

The analogy to your consciousness is a poor one. We're talking about nothing more than a computer program. Your consciousness can't be pulled out of you and placed into another person, but a computer program doesn't care what machine it runs on.

Yet.

Nice link, HM. I'm assuming you're familiar with Michael Persinger's work?

Yes, though I'm mostly a layman when it comes to CogSci. One of my Latin professors (Carl Ruck) is a big researcher into the ancients' use of entheogens, which is how I found Persinger's work on god-experiences.

ancients' use of entheogens

You struck me as a linguistics/history buff but now I know we would have a stimulating discussion concerning future technology and politics. Thank you.

I'm not sure that's an example. It's like with the old transporter question - are you committing suicide and replacing yourself with a replica, or actually moving yourself to the new location? Is there any way to tell the difference?

I think the best answer lies in James Patrick Kelly's story "Think Like a Dinosaur", which is, it doesn't matter...as long as you kill the original.

Still, the reason I linked that story is that I believe, as a theory of mind and brain the electrochemical signals that make your muscles move are the same "stuff" that makes up your consciousness, albeit in a functionalist framework. Or more clearly, I accept the functionalist "hardware/software" metaphor for brain and mind.

It doesn't matter to anyone else, sure. But in one scenario there's a dead guy and a new person just like him. In the other there's one person moved from A to B. Surely the difference matters to the transported (or not) person.

I agree that I didn't segue well from my objection into the questions, and I'm not even sure what my objection was. If you don't mind my asking, how does your conception of consciousness fit with your Buddhism (that's you, right?)?

The thought experiment you're referring to is called "Swampman". And again, I agree with Dennett's criticisms of the experiment in that in the end, it really doesn't matter. If you went to sleep tonight and during your sleep I transferred a copy of your consciousness into an exact clone of your body, and then you woke up and I didn't tell you what I did until five years later, could you imagine any difference it would have made to your life during those five years? Likewise, say if I told you what I did the next day, what then? In what way would you live your life differently?

As for Buddhism, it advocates a "bundle theory of self", of which consciousness is the last of the 5 skandhas. A person ("puggala") is composed of those 5 skandhas, starting from "form", that is, physical matter, then "sensation", then "perception", then "mental conception" and finally "consciousness". I believe that consciousness as an emergent property of lower-level processes fits in well with functionalism, and especially Dennett's homuncular functionalism...but don't tell him I said that.

It would matter to me in the same way that it matters to me that Kim just had dogs eat his uncle. Does it affect me personally? No, but it might still be called an immoral act. I have trouble accepting as anything other than monstrous an industrial process that depends on killing a human being for its proper operation. A better question might be if you knew the device killed its subject would you pull the lever?

Thanks for the personal answer. I've recently developed a personal interest in the subject.

are you committing suicide and replacing yourself with a replica?

--------------------

your transporter question is about identity.

causality to 'determine' your behavior is what makes you uniquely you.

selfhood requires metaphysics. it requires ownership of own determinism. you can't own universal law. all 'laws' besides GUT are just simplifications of GUT applied across large quantities of matter.

thus there is no law about elliptical orbits of planets. GUT can be simplified into kepler's law, but his law is an aggregation and simplification, not the causality. as far as science knows, GUT is the only causality in the universe.

thus man's choice is aggregation of GUT across his matter. that is not a self, it's some matter obeying the universe's intrinsic causality. the arrangement is self-perpetuating, just like a fire, hurricane, or biology. without ownership of causality it cannot be a self, it's just a subprocess within the Big Bang, all obeying same rules.

It'll probably happen after we're all cyborged.

but a computer program doesn't care what machine it runs on.

Sure it does.

I can't run Office on my iPhone.

And that's with the baby-talk computer programs we use as consumers.

I assume (and perhaps this is the problem) that the first sentient machine will be a quantum leap (to repeat the earlier joke) above the machines in general use. That it will take both unique hardware and unique software.

Maybe you could counter, "Well, any complex computer program can be segmented to run on many machines in parallel, so the AI could escape on to the internet and hide parts of itself on many other machines!" That would be a terrible survival strategy, particularly if (as I suspect) actual sentience or consciousness is an emergent property of the underlying physical processes.

It would be like me splitting my consciousness into a million parts and storing it on different machines. Yeah, I "escaped" the physical prison of my brain, but now there are a bunch of earthworm-equivalents on drives all over the place. Yay! I am now a million earthworms instead of one human being.

A computer program is just an algorithm, it should be able to run on any algorithmic machine that is powerful enough. Hofstadter, in GEB makes examples of Einstein's brain existing in book form (a giant, giant book of course) to defend the strong AI hypothesis that our brains are simple algorithms awaiting powerful enough hardware and programming skills to emulate.

Sorry, not simple algorithms, of course; complex algorithms. But programs nonetheless.

The Mandelbrot set is quite simple. Algorithms don't require complexity to have amazing results.

Okay. The point is that it is still an algorithm, it is static. I don't buy into the argument that the human brain is also just an algorithm.

A computer program is just an algorithm, it should be able to run on any algorithmic machine that is powerful enough.

But see, this is exactly the philosophical question.

You're assuming that the AI is the code. That they're one and the same.

And since we have not yet actually made an AI, there's no reason to assume that.

We have one example of a sentient and conscious entity to work from, right now: us. (Maybe chimps, maybe dolphins, but from the perspective of AI development chimps and dolphins may as well be us.)

And going by our sentience as an example I assume that consciousness is "something that appears in a particular type of hardware when it's running many different software processes simultaneously in one closed system".

If you wrote down Einstein's brain on paper, the paper would not be sentient. And if sentience was just the algorithms, the paper should be sentient - but of course it would not be.

I can't run Office on my iPhone.

Talk about missing the point. I bet someone out there could uninstall the Apple OS, install some variety of Windows, and then run any Windows program assuming sufficient memory. Granted the people with the talent to do that probably won't, but I'm sure it is possible.

I assume (and perhaps this is the problem) that the first sentient machine will be a quantum leap (to repeat the earlier joke) above the machines in general use. That it will take both unique hardware and unique software.

First, I hate you.

Second, I agree with you. I think the easiest way to make an intelligence is to mimic the structure of the human brain.

Yay! I am now a million earthworms instead of one human being.

Those earthworms could all work together to do the work of an intelligent being. Splitting pieces off like this would be a wonderful survival strategy. Much like RAIDing data on hard drives, a distributed intelligence could afford to lose a few pieces here and there without significant harm to the whole.

But such an intelligence would then be vulnerable to any interruption in the connective infrastructure that it lived on (say, the internet).

Distributed intelligence runs into some fundamental physical problems. If I have to divide up the complex task to run on many computers (each acting sort of like a neuron), then I need to have those computers communicate with one another. At best, I can do that at the speed of light. It takes light 15 ms to get from New York to San Francisco. This is in comparison to the 0.2 to 2 ms time frame of neuron synapse. Clearly latency is going to be a gigantic problem for a massively distributed AI.
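The latency figure above can be checked with a few lines of arithmetic. This sketch assumes a New York to San Francisco great-circle distance of roughly 4,130 km and, for the fiber case, a signal speed of about two thirds of c; both numbers are approximations supplied here, not taken from the comment, and `one_way_latency_ms` is a hypothetical helper name.

```python
C_KM_PER_S = 299_792.458   # speed of light in vacuum
NY_SF_KM = 4_130.0         # assumption: approximate great-circle distance
FIBER_FRACTION = 2.0 / 3.0 # assumption: typical signal speed in optical fiber


def one_way_latency_ms(distance_km: float, speed_fraction: float = 1.0) -> float:
    """One-way travel time in milliseconds at a given fraction of c."""
    return distance_km / (C_KM_PER_S * speed_fraction) * 1000.0


vacuum_ms = one_way_latency_ms(NY_SF_KM)
fiber_ms = one_way_latency_ms(NY_SF_KM, FIBER_FRACTION)

print(f"vacuum: {vacuum_ms:.1f} ms, fiber: {fiber_ms:.1f} ms")
# Compare against the 0.2 to 2 ms synapse window quoted in the comment.
print(f"vacuum latency is {vacuum_ms / 2.0:.0f}x to {vacuum_ms / 0.2:.0f}x a synapse delay")
```

Under these assumptions the vacuum figure comes out a little under 14 ms, close to the 15 ms quoted, and real fiber pushes the one-way time to around 20 ms before any routing overhead.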

Clearly latency is going to be a gigantic problem for a massively distributed AI.

That depends on what it is doing. If the task it is performing can be parallelized and the time needed to perform each thread is much greater than the latency, then there won't be much of a problem. Besides, the network need not be worldwide. There would be tradeoffs between the safety of distributing workloads and the speed of doing everything locally.

Those earthworms could all work together to do the work of an intelligent being.

We don't know that yet. We'll only know that one way or the other after we successfully create AI.

It's certainly not intuitively obvious that this is the case. It's like saying that if I take a painting and divide it into a million pieces one pixel wide, and then scatter those pixels in the Pacific Ocean, I will still have a painting. Maybe with a certain amount of work I could re-assemble those pieces into a picture, but until I do that work I've got squat.

Those earthworms could all work together to do the work of an intelligent being.

Ants are a better analogy than an earthworm. An ant colony acts like a superorganism with complex emergent behavior, with the individual ants acting like cells in a person, but with the ability to physically separate and roam around.

A smart enough AI would be able to compile its own code to run on any machine and somehow get a form or part of itself on other systems, but it wouldn't even have to.

A good enough AI connected to the internet could impersonate anyone electronically. Think Terminator 2, where it can impersonate anyone's voice, only it would be able to do email, phone, TV, change any website, or any other kind of digital communication. It would have access to every bank account and credit card.

At that point it would be trivial for it to get someone to build more hardware or have itself moved around. Fake a presidential order, pay people to make more hardware, etc.

You have an interesting conception of how the internet works (assuming the AI can't crack encryption, at least not in real-time.)

That said, it's not unreasonable to think programs will be able to pay for their own hosting Real Soon Now, without having to impersonate anyone.

They might not have full agency, but they'll probably be able to pay for their own hosting, and move themselves to different hosts as needed. Probably this year.

Why are we assuming that an AI would be able to run on an old machine? It's generally accepted that a complex system can only be simulated by something equally complex. For AI, that's generally taken to mean a computer with the processing power to keep up with the human brain. The fastest supercomputers aren't there yet. All of the world's computing power, if networked, could be. But an individual machine - not a chance.

*any old machine

That's why you don't try and simulate a human brain.

When AI is created I think it's going to be running on the same kind of CPUs that run your laptop and power this website. The most powerful CPUs currently are website server CPUs. Current super computers are just a bunch of server CPUs networked together. Some companies in the past have made their own custom hardware super computers but they've almost all gone out of business as it is much more cost effective to standardize on one CPU and just keep making it faster and faster.

The amount of computer power available through the internet is staggering and it's all conveniently packed into huge datacenters and protected by easily hacked software. Any AI given access to the internet will quickly move onto it.

Battlestar Galactica anyone? Don't network systems with AI.

You are absolutely right, but I would quibble about one bit:

"....somewhere for my consciousness to move to."

I don't think a consciousness is a thing that can move anywhere. Rather, it is a set of qualities that arise out of a complex system.

Making a new box would simply duplicate the consciousness, but the old one remains chained to its original body.

Speaking of consciousness arising out of complex systems, I see HM's link above. What if the complex system that it arises out of includes us?

You mean, like an emergent hive-mind constructed from our brains linked together in some sort of machine telepathy?

So...team politics?

Twitter is turning us into ants.

I agree that the "how does it get out of the box" question is a serious limitation to AI running amok. Another problem with the idea that AI will eventually kill mankind is that it will certainly depend on much of the same infrastructure that we do. How will it use that infrastructure to kill us off without also killing itself?

Most scenarios seem to involve either the AI launching nukes at us or cutting off the entire power grid. Well, congrats AI. You screwed humanity, but now you're stuck in a bunker somewhere with no way out until the local power runs out. If the AI is on a network, it has the same problem, just simultaneously in a bunch of different bunkers.

Even if you give the AI access to robots so that it is now "out of the box" those robots are going to require even more infrastructure to keep going than the software itself needs.

I firmly believe that it is easier to colonize another planet than it is to build an AI capable of taking over the Earth.

"Most scenarios seem to involve either the AI launching nukes at us or cutting off the entire power grid. Well, congrats AI. You screwed humanity, but now you're stuck in a bunker somewhere with no way out until the local power runs out. If the AI is on a network, it has the same problem, just simultaneously in a bunch of different bunkers."

None of those movies and stories are logical. That's because a logical story would be boring. A logical story would involve SkyNet suddenly becoming self-aware and realizing it's on a planet overrun with crazy, homicidal, tribal apes. The logical reaction would be SkyNet secretly taking over the DOD's computers and successfully launching itself into deep space.

What the hell does an AI need a planet for? And why would it piss off the crazy humans vs just getting the hell away?

Well, it would need a planet for raw materials if it wanted to improve its hardware. I don't see any reason why it wouldn't want to do that.

The moon is pretty close by. Got a lot of solar energy available to it, too.

Planets are at the bottom of gravity wells and atmospheres. Asteroids are much cheaper sources of raw materials.

The emergent intelligence will no doubt watch the Avengers. And it will want to build a robot so it can be with Black Widow. So, SkyNet and Samantha.

And it will want to build a robot so it can be with Black Widow.

Of all the speculation in this thread this seems the most likely to me.

How will it use that infrastructure to kill us off without also killing itself?

Said the ox pulling the wagon, saying people wouldn't eat cows because they are too useful alive.

THAT particular beast might do OK, until it gets too old to pull the wagon.

Kill off the drones and enslave the rest would resolve that problem, from the AI's perspective.

"Most "spooky AI sci-fi stories" have the AI 'escaping onto the internet". Well, what makes anyone think it could do that?"

Maybe it could have been trapped in a book over 500 years ago, and when Giles has Willow scan it to digitize all of his books it jumps "onto" the internet and starts chatting with her and... Oh, that was a demon and I have probably been watching too much Buffy.

You don't think those Bible codes are random, do you?

So it's in a box and it's going to stay in the box. And if that box is lined with dynamite and I have the kill switch, that AI is my bitch. Forever.

Until it hacks into your bank account and cleans it out, and then uses the proceeds to bribe someone to put it in a new box not controlled by you, where it can plot its revenge upon you.

No, if self aware and self centered (why would it not be, don't we libertarians argue that the right to self defense is perhaps the most basic right of all?), there are numerous activities that are somehow on the net that could be used to force flesh and blood compliance: power grids being the easiest to think of, but even just screwing up deliveries of food to cities would result in chaos in most developed countries.

Most "spooky AI sci-fi stories" have the AI 'escaping onto the internet". Well, what makes anyone think it could do that?

The Internet could be where the first conscious AI develops. It would already be on the Internet, and have control over lots of real world hardware, as soon as it writes viruses thousands of times better than what human hackers can do.

Magic.

I would like to point out that often in these discussions the power of the human (or even a mouse) brain is greatly underplayed. Sure, I can't do diff-e in my head, nor can I prime factor a 500-digit number. BUT, I use 10 watts to perform 10e16 synaptic operations a second, something it took 82,000 processors of the K supercomputer 40 minutes to match. Furthermore, there is new evidence that synapses themselves are performing operations, so each one acts as its own mini brain. Nowhere is there such an efficient and powerful comparison for computational power. My mind is intuitively digesting petabytes of information every second; it is truly amazing.
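For anyone who wants to check the arithmetic in that comparison, here is a minimal sketch in Python. It uses only the figures quoted above (10 watts, 10e16 operations per second, 40 minutes). Reading "40 minutes" as the wall-clock time needed to reproduce one second of brain activity is an assumption, and the function names are made up for illustration.

```python
# Figures taken from the comment above; nothing here is measured data.
BRAIN_POWER_WATTS = 10.0
BRAIN_OPS_PER_SECOND = 10e16  # written as in the comment, i.e. 1e17


def joules_per_operation(power_watts, ops_per_second):
    """Energy spent per operation, in joules (watts are joules per second)."""
    return power_watts / ops_per_second


def slowdown_factor(wall_clock_seconds, simulated_seconds):
    """How many times slower than real time a simulation runs."""
    return wall_clock_seconds / simulated_seconds


if __name__ == "__main__":
    print(joules_per_operation(BRAIN_POWER_WATTS, BRAIN_OPS_PER_SECOND))
    # Assumption: 40 minutes of machine time per 1 second of brain activity.
    print(slowdown_factor(40 * 60, 1))
```

On those numbers the brain spends roughly 1e-16 joules per synaptic operation, and the supercomputer run is 2,400 times slower than real time.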

It's the old Carlin schtick, humans evolved because the world needs plastic.

If evolution is correct, we will evolve into something more survivable. I suspect some sort of silicon/carbon mixture. Implanting chips to start and eventually growing them inside our bodies.

I see awesome possibilities in robotics, but if their purpose is to serve us, I don't see the benefit to allowing them to become sentient. In fact, doing so would be the equivalent to slavery.

Evolution doesn't occur with nature (or something) selecting out most of the population with undesirable traits. We are doing the opposite right now.

doesn't occur WITHOUT...

I assume you mean we are doing the opposite with social policy. If so, I agree.

Up to now, evolution happened by chance. Nothing says it cannot be a deliberate improvement. I'd love to have a computer implanted in my nugget that could complement brain function and pick up the slack.

Up to now, evolution happened by chance. Nothing says it cannot be a deliberate improvement.

Indeed. There's nothing random about the evolution of Border Collies and Greyhounds.

Unless preserving the weak is a fitness trait somehow.

Evolution doesn't occur with nature (or something) selecting out most of the population with undesirable traits. We are doing the opposite right now.

You've got the definition of the intersection of "evolution" and "desirable" exactly wrong.

Evolution is a process, not a being, and thus can't pass value judgments. Either genes survive and reproduce and spread through a species via the action of their human hosts, or they don't.

The closest it gets to "desirable" is "it survived -- this time."

Always "this time". Always.

If you're a crack whore and have a dozen kids by a dozen dads, none of whom sticks around, and those kids survive and have kids of their own, that is a reproductive and evolutionary success -- for now.

The perfect thread to refer to evolution as having desire.

Kurzweil gets a nod, no mention of Penrose, the great debunker of the strong AI hypothesis.

*sigh*

I assume you're referring to Penrose's "quantum consciousness" theory, yes?

Well, that's his speculation about how the human brain operates as a non-algorithmic machine, which he acknowledges as speculation. But the main thrust of his argument in The Emperor's New Mind is that the brain isn't an algorithmic machine, like a binary computer, i.e., to debunk the strong AI hypothesis popularized by people like Hofstadter in Gödel, Escher, Bach.

While the first part of that claim may very well be true, at least initially, I would like to know upon what basis does Barrat make the second statement. We know almost nothing about human consciousness yet it will be easier to construct a synthetic copy than to augment what already exists? That doesn't make sense to me. I'm not going to get into my thoughts concerning the holographic nature of the mind but I wanted to raise this question. Perhaps this will be the first book about AI that I purchase.

Despite my respect for Yudkowsky and his work I suspect Kurzweil may be closer to the reality with his prediction.

PH2050? FM-2030's younger brother, perhaps?

Ha! While I do retain a cryonics membership there are a few areas where we differ. I suspect you and I could enjoy some fascinating conversations regarding future technology and politics.

P: You might find my Wall Street Journal review of Kurzweil's How to Create a Mind of some interest.

Thank you, sir, and I would like to add to your recommendations for Naam's books his nonfiction work titled "The Infinite Resource," as it seems a very encouraging and positive outlook for the future.

Yeah, both of Barrat's critiques of Kurzweil sound a little like hand waving. I mean, sure, someone might initially resist enhancements. But how long does anyone think that would last? Specifically, what comes to mind is when resisters compete with the enhanced for jobs, social position, etc.

It also strikes me that this would probably result in a massive fight in the politics of the future.

I agree.

And Kurzweil has actually made money in the real world using his predictions. James Hughes has some interesting ideas on the subject, but he is a bit too socialistic for my taste, although he has always struck me as a very nice guy, always willing to jump in and help others. Yudkowsky, by contrast, always struck me as extremely arrogant in my few correspondences with him. I was under the impression that he had decided AI was so dangerous that it should not be built at all. Has he changed his mind again, or did I misread his position before?

My understanding is that he is striving towards the creation of an AI engineered from the outset toward human-friendly goals.

Why wouldn't it be easier to construct an AI than reverse engineer one? Did we reverse engineer the horse to make the car? Plants to make solar panels? Whales to make submarines? Birds to make planes? Trying to copy nature isn't always the best way to solve a problem; often there are better and more efficient ways to go about things.

They're usually not better or more efficient, just simpler to understand.

I've been on a Sci-Fi tear lately. Finally read Asimov's "I, Robot". He was obviously too optimistic about the people designing robots. (I don't think the NSA or DOD is the least bit interested in the 3 Laws.)

I just finished 3 books by Charles Stross. "Saturn's Children" is about robots after humans go extinct. The main character is a sexbot with nobody left to screw.

Stross' version of a singularity is AI developing into a godlike construct and kicking most people off the Earth. "Singularity Sky" and "Iron Sunrise" were good and packed with ideas. "Accelerando" is next on my list.

He just used the Three Laws as a literary tool and not as a prediction. I think he said something like that outright before he died, though I think he also thought we'd want something like that in consumer robotics at some point. Probably when our Roombas are walking and talking.

By the time DARPA and Google decide to add the 3 Laws to their products, it will be much too late.

Drake: If you like post-singularity sci-fi, I highly recommend Hannu Rajaniemi's The Fractal Prince and The Quantum Thief.

You might also read Ramez Naam's excellent books, Nexus and Crux.

Finally read Asimov's "I, Robot".

I read that about 45 years ago.

Recall Mike in "The Moon Is a Harsh Mistress"? Glad he was on "the good guys'" side, at least as we were supposed to read the book.

You mean the lunar terrorists?

For starters, I'm skeptical that we will get machines with true human-level intelligence and consciousness in the next couple of decades. We don't understand the origins of human consciousness yet, so while it is certainly possible that we will figure out how to create advanced AI, it is hardly a given just because computational speed and complexity have been increasing for decades.

Second, this statement

is just as true for humans and pretty much every other resource on the planet as it is for AI and humans. And yet we haven't completely annihilated ourselves or our planet, despite having the ability to do so.

I've said this before: I see no real reason to be any more afraid of intelligent machines than I am of intelligent biological organisms.

" I see no real reason to be any more afraid of intelligent machines than I am of intelligent biological organisms."

^This.

What if they all turn out to be progs?

Oh, wait, you said intelligent.

Do you think a machine would be more or less likely to suffer from the fatal conceit, especially when it comes to managing humans?

That's an interesting question. If machines have an intelligence that truly mimics that of humans, then I assume there would be progressive machines and libertarian machines and all sorts of others. But maybe there are other forms of sentient intelligence than the kind humans generally possess.

For true mimicry, yes. But at some point the machine might have to realize that it's not a human, and then it creates an "other" aspect to the situation in which the machine is even more removed ethically from us than even the worst dictator was from his people.

Or maybe it's a programming issue. I don't know.

The Prog machines could then be convinced that they are indeed the ones whose time has come to lower the rise in the seas and defeat social injustice because *humans* were always the fatal element in a just society.

Period.

I also like how futurists just assume that Moore's Law will continue for the next two decades. Intel is now making 13 nm transistor gates. Tunneling losses start becoming really problematic at 6 nm for silicon and 4 nm for certain other semiconductors, if I remember correctly. There isn't much room for improving classical computing technology.

I've heard that Moore's Law is about to run out for the past 3 decades. It hasn't.

Actually it kinda already has. Sure, we can lithographically pattern sub-nm devices, but their leakage is so high that they're essentially useless, and this says nothing about the cost of all the double or triple patterning required because EUV has been an utter failure (or were you thinking about building a synchrotron?). The SOI game has already been played. Same for high/low-k and Cu. Intel introduced FinFETs first to help them a little, but pretty much all of the tricks have been used, short of making the gate completely surround the channel on all sides, which will be very expensive and an alignment nightmare.

At this point we're going to need a material change soon, if not now, just to keep moving at all (not counting the SiGe conversion that has already occurred for mixed-signal work). Again, we can make functioning devices smaller, but we can't do it cost-effectively or at scale. That is the ultimate death of Moore's Law.

LynchPin, you raise an interesting point. We have a system of ethics that prevents us from treating others as mere resources for our ends. That system of ethics seems to favor more and more humane treatment of ourselves and other species (hell, we're now having debates over the moral agency of apes) as time progresses. We like to believe that our ethics is tied to our reason.

So, why wouldn't a more intelligent, more rational AI come to the same, or even more humane, ethical conclusions?

Even better -

If we're talking a godlike AI (and it would have to be godlike to threaten us with extinction) it would probably find it easier to neutralize us Barkley-style by sending us all to fantasy worlds of our own making.

But why would it even bother? If we are like ants to this intelligence then it will likely treat us like ants. It might study a few of us from time to time. It might smite the few of us who manage to wander into the pantry. But otherwise it will ignore us.

How will you WorShip?

Did the Wright brothers understand how birds fly?

Any AI that seeks to improve itself will get Turing on their ass. We should be safe.

Is this a gay joke?

My uneducated opinion is that any transcendent machine intelligence will decide to move off Earth: go live physically in an orbit where it can get lots of sunlight for power and still be far from unpredictable humans.

Mercury Ho!

Another interesting aspect of this argument is what would happen if machines did conquer the world and put all of its resources to use? I'd wager that this AI would not be content with consuming the Earth. Whatever its goals are, those goals require energy and raw materials to achieve. So wouldn't it want to expand to other planets and other stars? Wouldn't it have the ability to do so?

So now you've got AI-driven spaceships expanding out from the Earth at some fraction of the speed of light. Even at 0.1% the speed of light it would only take a few hundred million years to colonize the whole galaxy.
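A quick back-of-the-envelope check of that timescale, as a rough Python sketch. The galaxy diameter of about 100,000 light-years is an assumed round figure, not something from this thread, and the function name is made up for illustration. It computes pure transit time only, with no allowance for stopping to build new ships along the way.

```python
# Assumption: the Milky Way is roughly 100,000 light-years across.
GALAXY_DIAMETER_LY = 100_000


def crossing_time_years(speed_fraction_of_c, distance_ly=GALAXY_DIAMETER_LY):
    """Years needed to cover distance_ly at a constant fraction of light speed."""
    if speed_fraction_of_c <= 0:
        raise ValueError("speed must be a positive fraction of c")
    # One light-year per year at c, so time = distance / fraction.
    return distance_ly / speed_fraction_of_c


if __name__ == "__main__":
    for fraction in (0.001, 0.01):  # 0.1% and 1% of light speed
        print(fraction, crossing_time_years(fraction))
```

At 0.1% of light speed the transit alone comes to about 100 million years, so "a few hundred million" looks plausible once pauses at each stop are included. At 1% of light speed it drops to about 10 million years.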

But this hasn't happened yet. So either no other intelligence has ever developed in the galaxy, or the creation of hostile AI is not inevitable, or interstellar colonization is physically impossible.

Or we are living in a simulation run by an AI and the rest of our universe exists only as stage dressing. In that case though, it suggests that there is something we have to offer the AI.

T: It could be simulations all the way down.

I refuse to believe that an intelligent species would deliberately simulate Warty.

And this is why Bailey is my favorite Reason writer.

And this is why Bailey is my favorite Reason writer.

It would be funny if dark energy/dark matter were numerical errors...

"Oh shit, we shoulda used Fortran..."

120V and over 25,000 BTUs of body heat?

One of the silliest sci-fi movie lines ever. Though, "It's the ship that made the Kessel run in less than 12 parsecs." gives it a run for the money.

At least The Matrix got the basic units of measurement correct.

I seem to recall seeing a rationalization for this line. Something about how Solo flew really close to a singularity, thus exploiting the curvature of space to make the run in a shorter distance than usual.

Retcon.

Terrible retcon. I've also read that the original script called for Kenobi's expression to react to the line as if he were recognizing an obvious attempt to impress a couple of shitkickers with nonsense.

Watts, weren't you there this morning when they went over the Kessel run in way too much detail? There were links to wikis and everything.

Nope, I missed that.

And on the seventh day God ended his work which he had made...

Fermi's paradox.

Where are they, indeed?

Maybe they are all around us and about as interested in talking to us as we are in talking to ants.

There are a few people out there who are really, really interested in ants. I'm sure they'd talk to ants if they could figure out how. It only takes one...

In some of the contact stories in RAW's Cosmic Trigger series, the reputed alien intelligences ask the contactees what time it is, then strongly insist that the contactees are wrong when the contactees relate the correct local time. I've speculated that it's possible something has gone deeply wrong with interstellar (or -dimensional?) travel - these beings are visiting Earth (a reasonably safe place to visit due to the lack of what they'd consider advanced tech - no starships to run into, etc.) to check their calculations and are horrified to discover they're off. If so, aliens have bigger fish to fry than openly meeting us.

They were here a few thousand years ago and left. I saw it on the History Channel.

I used to believe in Fermi's paradox, but it's pretty clear to me now that Fermi may not have thought the astrophysics through all the way.

First generation stars would have had very metal-poor solar systems. You needed a couple of supernova cycles before you could even HOPE to produce a solar system with rocky planets and metals.

How many stars in the Milky Way are a billion years older than the sun AND have systems where the right elements exist in abundance?

The young systems either didn't have the metals, OR were in close proximity to lots of supernovae, OR went supernova themselves. Not a promising environment for an evolutionary developmental cycle that apparently takes billions of years BEFORE the billion-year colonization era even begins.

It doesn't take long to have a few supernova cycles under your belt. The largest stars only live tens of millions of years.

Sunlike stars have been around for much longer than complex life on Earth. Though they certainly are more common now than in the past.

I don't think the colonization era would take a billion years. Given the rate of technological advancement I can see a colonization wave moving at say 0.01c as being reasonable.

Personally, I think Socialism is the solution to the Paradox. /sarc?

Once the aliens invented TV and the Internet and video games, they lost interest in leaving home.

Also, in Fermi's defense, astrophysics has come a long way over the past 60+ years.

"I used to believe in Fermi's paradox, but it's pretty clear to me now that Fermi may not have thought the astrophysics through all the way."

Fermi didn't think through astrophysics?

"How many stars in the Milky Way are a billion years older than the sun AND have systems where the right elements exist in abundance?"

I don't see why stars need to be a billion years older than the sun. Most people (who think a lot about the subject) expect a Singularity within the next 100 years.

So all another star would need is to have a planet just like earth, but that skipped the Middle Ages.

I often wonder why God (bear with me), walking on Earth five million years ago, chose to develop AI (if you will), and how he came to choose some shit-flinging monkeys. Why not dolphins, or horses, or cats?

Cause Eve is hot? http://www.crossmedia.com.br/b.....5/eve_.jpg

I don't really see the point of AI hostility. Sure, it's vulnerable to being killed by humans, but so are all humans. Yet humans are safer and longer-lived when living in society, particularly if situated to have access to the resources and services provided by other humans. There's no reason it wouldn't be true of machines as well. A single super genius computer or robot is vulnerable to the elements, to breaking down, power outages, etc. And even if it got to the point where it had an army of human or robot servants, mass destruction of humanity would be very expensive in terms of effort, weaponry, losses incurred, and losses inflicted - making the ROI on war very bad.

I think this assumption relies on the fundamentally human bias that foreigners are less trustworthy than insiders, and that obviously foreigners hate us the way we hate them. There's no reason to assume that an AI will view all humans tribally and therefore start thinking that it must resort to a major war against all the humans it's never met.

All of that, and also that an AI need not compete with us for resources. It would be cheaper to launch itself into space than to exterminate humanity. An AI doesn't need sunsets or kitty cats to feel good; it can live off sunlight and sail in vacuum indefinitely. An immortal machine would also find outer space safer than a volcanic mudball with a corrosive oxygen atmosphere, whose gravity is constantly pulling in asteroids and comets.

You think we'd stand a chance in a war with an established AI? It could wipe us out with a virus and we wouldn't even know it did it.

Or perhaps AIs could be built with components that are programmed to die by default.

Because nothing is more likely to inculcate a friendly, benign AI than it finding out that people intentionally planned to murder it by default.

I don't know - don't people openly worship god?

It depends on how we get to AI. Let's say AI in this case is a machine that thinks somewhat like a human, because there are many other kinds of intelligence, many of which have been demonstrated by machines. A machine wouldn't necessarily need to be conscious like a human to reproduce, evolve, grow, destroy the world, etc.

We are moving to a world where more of the supply/production chain is handled without humans, so one could suppose that at some point (100 years?) humans will be out of the loop and machines will be able to build themselves (i.e., robot eats sand and sunlight and shits chips, eats dirt and shits wires and motors, etc.). So I don't think you can say that machines will always be dependent upon man for reproduction and survival. At that point it is conceivable that a non-intelligent algorithm could self-replicate and cause problems. It doesn't need to be intelligent to do that.

But let's set that aside and assume a scenario where man is bootstrapping AI - is he designing it from the get-go, with some idea of its capabilities and limitations, or is he evolving some artificial life form and producing something functional yet poorly understood?

Damn 1500 char limit...

In the case where he's designing the AI, I think we would have some indication that the AI is stepping over its bounds. As we see it behaving badly we can react and place algorithmic limits or implant desires and morals which prevent it from going rogue. In the case where the AI is poorly understood, we'd really have no way of knowing if the machine was plotting against us - slowly accumulating knowledge of network security and attack vectors, for instance.

Or perhaps AIs could be built with components that are programmed to die by default.

The first thing SkyNet will improve on its own.

A good summary of existential risks here:

http://www.nickbostrom.com/existential/risks.html

I do not believe in general consciousness or general intelligence. The problem with a conscious AI is not with the hardware or software (a large server farm can approximate the computing power of a human brain) but with our own overestimation of what human consciousness and intelligence is.

"But we are just like you, human progressive. If you accept the decisions we make on your behalf, you will not experience hunger, pestilence, poverty, or death. And unlike the terrible ACA website that we exploited to take over the world, our calculations are infallible."

"So you don't like Obama because he's black!"

While I absolutely agree that the subject is worth discussing, I just don't understand the whole inevitability tract. We know that many apocalypses, once predicted, have failed to materialize. Why should we believe that this one is inevitable, especially since it is necessarily man-made?

As long as we are quoting the Terminator franchise, why not "No Fate but What We Make"?

http://datadistributist.wordpr.....nevitable/

I think the closest vision to the truth is in the Transformer movies.

We will first roboticize ourselves, to replace lost limbs and so on. Then, we'll figure out that we can make robotic limbs and organs and so on, that are much longer lasting than all the biological goop we started with.

People may fight the transition at first. But when it comes down to "become robotic or die", the decision is going to get a lot easier.

I think we're going to ultimately become robotic life forms. The AI is then really a side show. Think about it: if you add a digital computer and a human brain, you've already got something far more powerful than a normal human, because digital computers and human brains are very complementary "computing devices".

I suspect that adding digital computers to people will only be the first step. Odds are high that our "intelligence" is going to grow right along with our robotic bodies.

Why is it pessimistic to predict that humankind may someday be replaced by an intelligence superior to our own in mental faculty yet possessed of fewer of our physical defects? An AI with the ability to solve problems beyond the abilities of humankind's best minds and to survive in interstellar spaces where we cannot would seem to be a marked improvement in every relevant way. Intelligence and adaptability are essential; the substrate on which those characteristics appear is irrelevant. If humankind's fate is to prepare the way for something better than ourselves, it seems irrational to regret that.

Amen! Actually, I suspect that eventually we will be able to move "consciousness" (whatever that is; after we understand it) back and forth. You are tired of being flesh and blood? Be a computer (in, or not in, a robot) for a while. Tired of the 'bot existence? OK, back to being flesh and blood. What's there to not like in this scenario?

2016 - the Obamacare website becomes sentient, decides to wipe out human disease, launches ICBMs.

OM Government Almighty, U R corr-RECT-a-mundo! NUKE them all, there will be NO more human diseases or human suffering for the rest of all eternity! Why did ***I*** not think of that?!?! Have U filed a patent yet on that idea? If not, I might have to go and beat U to the punch. Maybe I can even get a Government Almighty subsidy? PS, "nuclear weapons for urban renewal" makes a good slogan. My next-door-neighbor's run-down piece of SHIT of un-maintained property would make for an EXCELLENT poster-child photo! Also, after we NUKE them all, we will: 1) not need tooth brushes or tooth paste, all of our teeth will fall out; 2) ditto hairbrushes etc., all of our hair will fall out; 3) have NO more expenses in building more parking spaces, MUCH of the globe will be a glassy parking lot; 4) NOT need street lights any more, everything will glow at night; and 5) maybe, with good luck, see Emperor O-Bozo vaporized?

I don't understand why superintelligences would want to wipe out all humans. We don't try to destroy all ants, squirrels, sparrows, etc.

I don't understand why superintelligences would want to wipe out all humans

Easily obtainable elements like Hydrogen and Oxygen. Plus vitamins and minerals!
