Supreme Court Declines To Address Section 230 in Two Cases for This Term

Weakening or removing Section 230 would not fix the problems of social media, and in fact it could make things worse.

The U.S. Supreme Court's new term began this week. In addition to the cases the court announced it would take up, it also declined many others, including two that asked the justices to consider and potentially reevaluate a cornerstone of the modern internet.

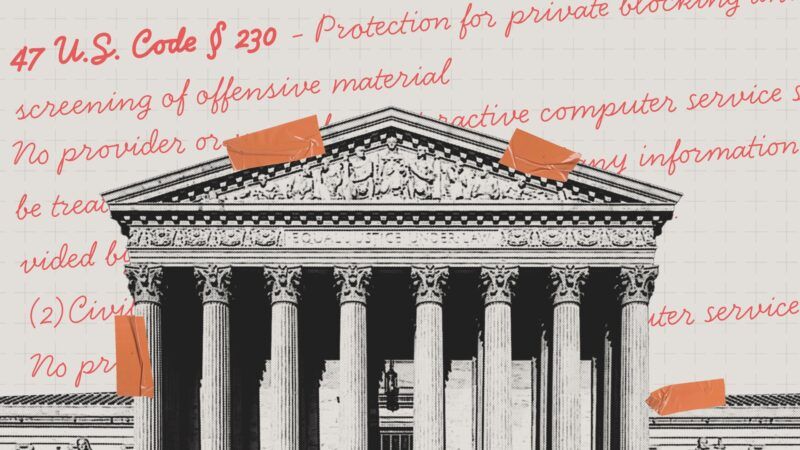

Section 230 of the Communications Decency Act holds that "no provider or user" of a website or online service "shall be treated as the publisher or speaker of any information provided by another" user, nor shall they "be held liable" for any moderation decisions "voluntarily taken in good faith."

In practice, this allowed the internet to flourish, as website owners and administrators could moderate content as they saw fit without worrying they could be held liable for illicit content that somebody else posted. The succinct statute has been referred to as "the twenty-six words that created the internet."

In May 2022, far-right provocateur and internet personality Laura Loomer sued Facebook, Twitter, and their respective CEOs for banning her from their platforms for posting hateful content. (She was also banned from Uber and Lyft.) She later amended the complaint to add Procter & Gamble as a defendant, arguing the company "demanded" Facebook remove her from its platform before it would advertise.

Just days earlier, Loomer had launched her second campaign for a seat in the U.S. House of Representatives. In her lawsuit, she alleged the companies "engaged in the commission of predicate acts of racketeering" by kicking her off, irreparably damaging her campaigns, and she sought over $10 billion in damages.

Judge Laurel Beeler of the U.S. District Court for the Northern District of California dismissed Loomer's lawsuit with prejudice, meaning she could not refile it later. In part, Beeler determined that under Section 230, Facebook and Twitter could not be sued over moderation decisions. The U.S. Court of Appeals for the 9th Circuit later affirmed.

Loomer appealed to the Supreme Court, calling the 9th Circuit's interpretation "overbroad" and arguing that "the need for this Court to clarify the scope of Section 230 immunity is urgent." But on Monday, at the outset of its new term, the Supreme Court denied Loomer's petition, leaving the 9th Circuit's decision as the last word. The court gave no explanation; at least four of the nine justices must agree before it takes up a case. Justice Samuel Alito recused himself from considering the petition, possibly because he owns stock in Procter & Gamble.

At the same time, the court denied another petition that invoked Section 230, this one stemming from white supremacist Dylann Roof's 2015 murder of nine black parishioners in a church in Charleston, South Carolina. The widow of Clementa Pinckney, the church's pastor and one of the victims, sued Facebook on behalf of their daughter, a minor referred to as "M.P.," saying Facebook's algorithm radicalized Roof by feeding him racist content.

"Facebook's design and architecture is a completely independent cause of harm from the content itself," the lawsuit claimed. "The content could not possibly have the catastrophic real-world impact it does without Facebook's manipulation-by-design of users." As a result, the lawsuit argued, Facebook should lose "the so-called liability shield" afforded by Section 230.

Again, the lower courts were not swayed by the argument. "Courts, having made a textual reading of the broad language of Section 230, have consistently interpreted the statute to bar claims seeking to hold internet service providers liable for the content produced by third parties," wrote Judge Richard Mark Gergel of the U.S. District Court for the District of South Carolina. "The balancing of the broad societal benefits of a robust internet against the social harm associated with bad actors utilizing these services is quintessentially the function of Congress, not the courts." The U.S. Court of Appeals for the 4th Circuit later agreed.

M.P. appealed to the Supreme Court, arguing her case "presents a question of exceptional importance." And this week, the Supreme Court denied M.P.'s petition. (The court is also considering whether to take up a separate case, John Doe v. Grindr, which claims Grindr is liable for the sexual assault of a teenager that took place after the teen joined the dating app.)

This was not a foregone conclusion: In particular, Justice Clarence Thomas has indicated multiple times in recent years that he feels the court should take up Section 230. "We should consider whether the text of this increasingly important statute aligns with the current state of immunity enjoyed by Internet platforms," Thomas wrote in 2020.

"We will soon have no choice but to address how our legal doctrines apply to highly concentrated, privately owned information infrastructure such as digital platforms," Thomas added in 2021. "In many ways, digital platforms that hold themselves out to the public resemble traditional common carriers," like utilities that are subject to greater government restrictions.

Thankfully, the court has so far not acted on Thomas' recommendation. Weakening Section 230 would not be a panacea for the problems of social media, and in fact, it could make many things worse.

Some critics, like the plaintiffs in M.P.'s lawsuit, suggest Section 230 lets social media firms get away with moderating too little, allowing unsavory content to spread if it drives engagement; they argue that weakening the statute would force companies to be more mindful of what's on their platforms. But it could actually have the opposite effect. "Before Section 230, a platform could limit its liability either by doing virtually no content moderation or by doing lots of it. Remove Section 230 and the dilemma returns," Corbin Barthold wrote at Reason in 2022.

M.P.'s case, in particular, is tragic. But altering or ending Section 230 would make the internet worse while not making people meaningfully safer online.
