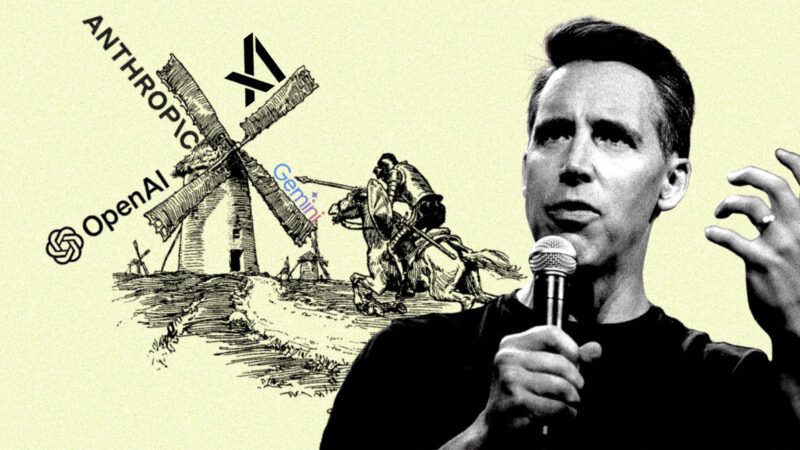

Josh Hawley and Democrat Allies Target AI With New Legal and Regulatory Regime

Two bills recently introduced by Hawley would set American AI and the economy back.

For years, Democratic lawmakers have been sounding the alarm about the potential danger of AI. An increasing number of Republicans are beginning to echo these warnings.

On Tuesday, Sen. Josh Hawley (R–Mo.) introduced the AI LEAD Act. The bill, cosponsored by Sen. Dick Durbin (D–Ill.), "would classify AI systems as products, allowing for liability claims when an AI system causes harm." Hawley said the bill will allow "parents—and any consumer—[to] sue when AI products harm them or their children." The bill makes AI developers liable for "failure to exercise reasonable care [that's] a proximate cause of harm," which includes "mental or psychological anguish, emotional distress, or distortion of a person's behavior that would be highly offensive to a reasonable person."

While the First Amendment currently shields social media platforms from a duty of care regarding the speech that their algorithms direct to users, the extent of free speech protections for AI companies is legally unsettled. Two lawsuits, Garcia v. Character Technologies and Raine v. OpenAI, both involving teenagers who took their own lives after building intimate "relationships" with chatbots, could soon clarify whether AI companies have a duty of care.

The AI LEAD Act isn't the only AI legislation Hawley introduced this week. On Monday, he and Sen. Richard Blumenthal (D–Conn.) introduced the Artificial Intelligence Risk Evaluation Act. The bill would require advanced AI developers like OpenAI and xAI to submit all relevant information about their large language models to the Energy Department for testing and approval before deployment.

The bill, if passed, would create the Advanced Artificial Intelligence Evaluation Program in the Energy Department and charge it with "systematically collect[ing] data on the likelihood of adverse AI incidents," which include threats to critical infrastructure, loss-of-control scenarios, and "a significant erosion of civil liberties, economic competition, and healthy labor markets." Ominously, the bill also requires the program to determine "the potential for controlled AI systems to reach artificial superintelligence" and to recommend measures, "including potential nationalization," to manage or prevent this from happening.

Advanced AI developers must in turn provide the underlying code, training data, model weights, interface engine, and other "detailed information regarding the…advanced artificial intelligence system" to the energy secretary upon request. Developers that deploy their systems without first complying with the program would face a fine of $1 million per day.

Dean Ball, a senior fellow at the Foundation for American Innovation, describes the bill as "a federally mandated veto point on the future of AI." Hawley said on X that "Congress can't allow American jobs…to take a back seat to AI," and that he's introducing the bill "to ensure AI works for Americans, not the other way around." While Hawley seems to believe that AI is taking American jobs, fears of imminent job substitution are overblown.

Both bills would compel AI developers and deployers to invest more time and resources bubble-wrapping their tools before making them available to the public—time and resources that could otherwise go to maintaining American AI dominance. It's unlikely that either bill will pass as written, but Republican support for these measures could signal that Congress is willing to tighten the screws on the industry, which would set America back in the global AI race.



AI might say something mean about Jimmy Kimmel? Perhaps even jawboning?

I often use an anonymous incognito browser to do YouTube searches when I don't want to pollute my algorithm... like when I need to learn how to throw in the J-bolts before the slab sets up so I don't need to break out the rotohammer... and I don't want my normal YouTube feed to be full of oiled-up shirtless men using tools...

The good news: The normie YouTube feed, untainted by my account, shows about as much Jimmy Kimmel content as it ever did. The bad news: It all treats him like Jesus, where before it just treated him like Gandhi. Oh, and it's still chockablock with MSNBC, Jon Stewart, Stephen Colbert and some dude named Seth Meyers. I don't know who that is.

When I want to see oiled-up shirtless men using tools, I log in as Robby. YMMV.

You know what's worse than Josh Hawley? A Democrat that acts like Josh Hawley!

Trump: Literally worse than Hitler

DeSantis: Literally worse than Trump!

Josh Hawley: Literally worse than DeSantis

Elon Musk: Literally worse than Josh Hawley.

Democrats who act like Josh Hawley: Literally worse than Elon Musk!

You know what's worse than Josh Hawley?

Jumping on a bicycle when the seat is missing?

Josh Hawley is an idiot.