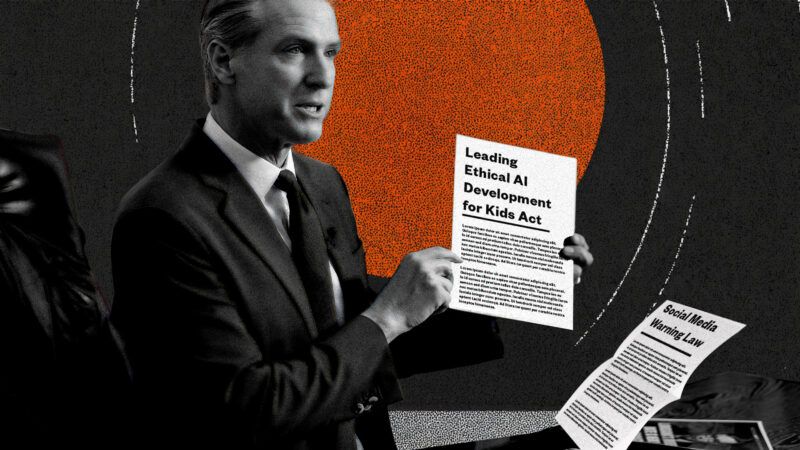

These 2 Terrible Tech Bills Are on Gavin Newsom's Desk

One limits children’s access to mental health services, the other mandates a black box warning, and both undermine users’ digital privacy.

The California state Senate recently sent two tech bills to Democratic Gov. Gavin Newsom's desk. If signed, one could make it harder for children to access mental health resources, and the other would create the most annoying Instagram experience imaginable.

The Leading Ethical AI Development (LEAD) for Kids Act prohibits "making a companion chatbot available to a child unless the companion chatbot is not foreseeably capable of doing certain things that could harm a child." The bill's introduction specifies the "things" that could harm a child as genuinely bad stuff: self-harm, suicidal ideation, violence, consumption of drugs or alcohol, and disordered eating.

Unfortunately, the bill's ambiguous language sloppily defines which outputs from an AI companion chatbot would meet these criteria. The verb preceding these buckets is not "telling," "directing," "mandating," or some other directive verb, but "encouraging."

Taylor Barkley, director of public policy for the Abundance Institute, tells Reason that "by hinging liability on whether an AI 'encourages' harm—a word left dangerously vague—the law risks punishing companies not for urging bad behavior, but for failing to block it in just the right way." Notably, the bill does not just bar operators from offering children chatbots that encourage self-harm; it also bars chatbots that are "foreseeably capable" of doing so.

Ambiguity aside, the bill also bars companion chatbots from "offering mental health therapy to the child without the direct supervision of a licensed or credentialed professional." Traditional psychotherapy performed by a credentialed professional is associated with better mental health outcomes than chatbot use, but it is expensive: nearly $140 per session on average in the U.S., according to wellness platform SimplePractice. A ChatGPT Plus subscription costs only $20 per month. And beyond being far cheaper, AI therapy chatbots have been associated with positive mental health outcomes.
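To put those two price points on the same footing, here is a rough back-of-the-envelope sketch. It uses the approximate $140 session price and the $20 monthly subscription price cited above, and it assumes one therapy session per week; that frequency is an illustrative assumption, not a figure from SimplePractice.

```python
# Rough annual cost comparison using the figures cited above.
# ASSUMPTION: one therapy session per week; real-world frequency varies widely.
SESSION_PRICE_USD = 140     # approximate U.S. average per session (SimplePractice figure)
SESSIONS_PER_YEAR = 52      # assumed: weekly sessions
CHATBOT_MONTHLY_USD = 20    # ChatGPT Plus monthly subscription price


def annual_costs(session_price: float, sessions_per_year: int,
                 monthly_subscription: float) -> tuple[float, float]:
    """Return (yearly therapy cost, yearly chatbot subscription cost) in dollars."""
    therapy = session_price * sessions_per_year
    subscription = monthly_subscription * 12
    return therapy, subscription


if __name__ == "__main__":
    therapy, chatbot = annual_costs(SESSION_PRICE_USD, SESSIONS_PER_YEAR, CHATBOT_MONTHLY_USD)
    print(f"Therapy: ${therapy:,.0f}/year; chatbot: ${chatbot:,.0f}/year")
    # prints "Therapy: $7,280/year; chatbot: $240/year"
```

Even with monthly rather than weekly sessions, the same arithmetic gives $1,680 a year for therapy, still seven times the cost of the subscription.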

While California has passed a bill that may reduce access to potential mental health resources, it has also passed one that stands to make residents' experiences on social media much more annoying. California's Social Media Warning Law would require social media platforms to show users under 17 years old a warning for 10 seconds the first time they open the app each day. The warning reads, "the Surgeon General has warned that while social media may have benefits for some young users, social media is associated with significant mental health harms and has not been proven safe for young users." Once a user has spent three hours on a given platform over the course of a day, the warning appears again for at least 30 seconds, cannot be minimized, and must be displayed "in a manner that occupies at least 75 percent of the screen."
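The display rules described above amount to a small piece of decision logic. The following is a hypothetical sketch, not the statute's text or any platform's implementation: the names `required_warning` and `WarningSpec` are invented for illustration, and the sketch models only the two triggers the article mentions (the first open of the day and three hours of cumulative use that day). Any repeat warnings or other provisions the law may contain are not modeled.

```python
from dataclasses import dataclass
from typing import Optional

# Thresholds as the article summarizes the bill; the statute itself may differ.
AGE_CUTOFF = 17                  # article: the rule applies to users under 17
FIRST_OPEN_SECONDS = 10          # shown on the first open of the app each day
HEAVY_USE_HOURS = 3.0            # cumulative daily use that triggers the second warning
HEAVY_USE_SECONDS = 30           # minimum duration of the second warning
HEAVY_USE_SCREEN_SHARE = 0.75    # second warning covers at least 75% of the screen


@dataclass(frozen=True)
class WarningSpec:
    """Hypothetical description of one warning display."""
    min_seconds: int
    can_minimize: bool           # True means the article states no restriction, not that the law allows it
    min_screen_share: float      # 0.0 where the article states no size requirement


def required_warning(age: int, first_open_today: bool, hours_used_today: float,
                     heavy_use_warning_shown: bool) -> Optional[WarningSpec]:
    """Return the warning this sketch would require right now, or None."""
    if age >= AGE_CUTOFF:
        return None  # outside the age group the article describes
    if first_open_today:
        return WarningSpec(FIRST_OPEN_SECONDS, can_minimize=True, min_screen_share=0.0)
    if hours_used_today >= HEAVY_USE_HOURS and not heavy_use_warning_shown:
        # The heavy-use warning: longer, cannot be minimized, mostly full-screen.
        return WarningSpec(HEAVY_USE_SECONDS, can_minimize=False,
                           min_screen_share=HEAVY_USE_SCREEN_SHARE)
    return None
```

For example, a 15-year-old who has spent 3.2 hours on an app that day and has not yet seen the second warning would, under this sketch, get the 30-second, non-minimizable version covering three-quarters of the screen.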

It is doubtful that this vague warning would stop many teens from doomscrolling; warning labels rarely change consumer behavior dramatically. For example, a 2018 Harvard Business School study found that graphic warnings on soda reduced the share of sugary drinks students purchased over two weeks by only 3.2 percentage points, and a 2019 RAND Corporation study found that graphic warning labels did nothing to discourage regular smokers from buying cigarettes.

But "platforms aren't cigarettes," writes Clay Calvert, a technology fellow at the American Enterprise Institute, "[they] carry multiple expressive benefits for minors." Because social media warning labels "don't convey uncontroversial, measurable pure facts," compelling them likely violates the First Amendment's protections against compelled speech, he explains.

Shoshana Weissmann, digital director and fellow at the R Street Institute, tells Reason that both bills "would probably encourage platforms to verify user age before they can access the services, which would lead to all the same security problems we've seen again and again." These security problems include the leaking of drivers' licenses from AU10TIX, the identity verification company used by tech giants X, TikTok, and Uber, as reported by 404 Media in June 2024.

Both bills could well exacerbate the very problems they seek to address (worsening mental health among minors and unhealthy social media habits) while leaving users' privacy less secure. Newsom, who has not signaled whether he will sign these measures, has the opportunity to veto both bills. Only time will tell whether he does.
