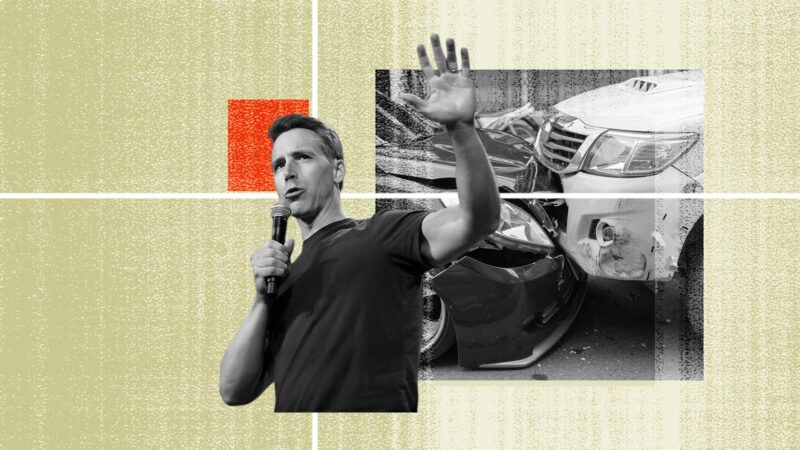

Josh Hawley's Anti–Driverless Cars Policy Would Kill a Lot of People

Tens of thousands of people die each year in crashes where human error was the cause or a contributing factor.

In a September 4 speech at the National Conservatism Conference, Sen. Joshua Hawley (R–Mo.) bemoaned the rise of artificial intelligence, listing, among other complaints, "Only humans ought to drive cars and trucks." This sentiment isn't only anti-innovation; it's also a dangerous line of thinking when it comes to the realities of road safety.

Each year, more than 40,000 Americans die in auto accidents. State-by-state statistics show that nearly 1,000 Missourians—Hawley's constituents—died in auto accidents in 2023. The vast majority of these accidents are caused by human error. Although the exact number varies, as accidents often have multiple causes, studies over the years have found that human error caused or contributed to between 90 percent and 99 percent of auto accidents. Of course, many people operate vehicles safely, but this significant death toll caused by human drivers ought to make us open to safer solutions.

While much of our discourse around artificial intelligence (AI) has focused on generative AI products like ChatGPT, autonomous vehicles represent one of the exciting and often underappreciated applications of this emerging technology. AI is important not only for the development of the fully driverless cars that Hawley seems concerned about but also for many of the technologies we now expect in cars, such as lane-departure notifications and anti-lock braking systems.

Unlike human operators, autonomous vehicles don't get drunk, drowsy, or distracted. A 2024 study published in Nature Communications found that "vehicles equipped with Advanced Driving Systems generally have a lower chance of occurring than Human-Driven Vehicles in most of the similar accident scenarios."

Autonomous vehicles are already a reality, and millions of miles of testing have proven their safety. Like any technology, they are not without error, and there have been headline-grabbing examples of malfunctions or accidents, but data show these are rare.

As of March 2025, Waymo, one of the leading companies in autonomous vehicles, had operated vehicles over 71 million miles without a human driver. According to released safety data, the vehicles had reduced accidents by more than 78 percent in every category examined, for both passengers and other road users like pedestrians or cyclists. On a national scale, that trend would save more than 30,000 American lives each year.
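The arithmetic behind that national estimate can be sketched in a few lines. This is a back-of-the-envelope check, not a forecast: it takes the roughly 40,000 annual deaths and the 78 percent reduction cited above and assumes, for illustration only, that the crash reduction would carry over one-for-one to fatalities across the whole country. The function and variable names are hypothetical.

```python
def projected_lives_saved(annual_deaths: int, reduction_percent: int) -> int:
    """Scale an annual death toll by a percentage reduction.

    Integer arithmetic keeps the result exact. The proportional
    carry-over from crashes to deaths is an assumption, not a finding.
    """
    return annual_deaths * reduction_percent // 100


# Figures cited in the article, both stated there as lower bounds.
ANNUAL_US_ROAD_DEATHS = 40_000   # "more than 40,000 Americans"
REPORTED_REDUCTION_PERCENT = 78  # "more than 78 percent"

estimate = projected_lives_saved(ANNUAL_US_ROAD_DEATHS, REPORTED_REDUCTION_PERCENT)
print(f"Projected lives saved per year: {estimate:,}")
```

Because both inputs are floors rather than exact figures, the result is best read as a rough lower bound consistent with the "more than 30,000" claim.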

This potential benefit to road safety has led many policymakers to look for ways to embrace, rather than discourage, this technological development. On the same day as Hawley's speech, Secretary of Transportation Sean P. Duffy announced plans to modernize safety standards with autonomous vehicles in mind, recognizing the important role of transportation innovation. Across the nation, both red and blue states have sought to encourage the testing and development of autonomous vehicles: 29 states have enacted legislation related to autonomous vehicles, and a further 11 have acted on the issue through executive orders. These policymakers recognize that we should see technology as a way to improve transportation safety, encouraging human flourishing and strengthening our transportation ecosystem.

When autonomous vehicles get into accidents, the accidents make the national news because they are so rare. Meanwhile, human-operated vehicle accidents are so frequent that we consider them a sad but normal occurrence. Hawley may have it backward: It's humans, not AI, who have proven to be dangerous operators of cars and trucks. We should consider the costs of the lives lost to human error if we deter the development of this important technology. Instead of trying to restrict the potential application of AI technology, we should be excited about its potential.
