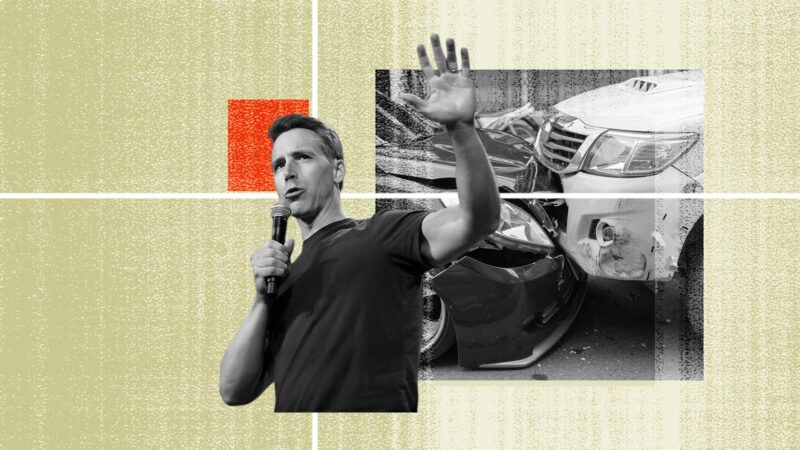

Josh Hawley's Anti–Driverless Cars Policy Would Kill a Lot of People

Tens of thousands of people die each year in crashes where human error was the cause or a contributing factor.

In a September 4 speech at the National Conservatism Conference, Sen. Joshua Hawley (R–Mo.) bemoaned the rise of artificial intelligence, declaring, among other complaints, "Only humans ought to drive cars and trucks." This sentiment isn't only anti-innovation; it's a dangerous line of thinking when it comes to the realities of road safety.

Each year, more than 40,000 Americans die in auto accidents. State-by-state statistics show that nearly 1,000 Missourians—Hawley's constituents—died in auto accidents in 2023. The vast majority of these accidents are caused by human error. Although the exact number varies, as accidents often have multiple causes, studies over the years have found that human error caused or contributed to between 90 percent and 99 percent of auto accidents. Of course, many people operate vehicles safely, but this significant death toll caused by human drivers ought to make us open to safer solutions.

While much of our discourse around artificial intelligence (AI) has focused on generative AI products like ChatGPT, autonomous vehicles represent one of the most exciting and often underappreciated applications of this emerging technology. AI is important not only for the development of the fully driverless cars that Hawley seems concerned about but also for many of the technologies we now expect in cars, such as lane-departure notifications and anti-lock braking systems.

Unlike human operators, autonomous vehicles don't get drunk, drowsy, or distracted. A 2024 study published in Nature Communications found that "[accidents of] vehicles equipped with Advanced Driving Systems generally have a lower chance of occurring than Human-Driven Vehicles in most of the similar accident scenarios."

Autonomous vehicles are already a reality, and millions of miles of testing have proven their safety. Like any technology, they are not without error, and there have been headline-grabbing examples of malfunctions or accidents, but data show these are rare.

As of March 2025, Waymo, one of the leading companies in autonomous vehicles, had operated vehicles over 71 million miles without a human driver. According to released safety data, the vehicles had reduced accidents by more than 78 percent in every category examined, for both passengers and other road users like pedestrians or cyclists. On a national scale, that trend would save more than 30,000 American lives each year.
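The national projection above can be checked with simple arithmetic. The sketch below uses only the two figures quoted in the article (roughly 40,000 annual deaths and a reduction of about 78 percent). It assumes, purely for illustration, that the reduction would apply uniformly to all road fatalities nationwide; the function and constant names are hypothetical.

```python
# Figures quoted in the article. Applying the reduction uniformly to all
# fatalities nationwide is an illustrative assumption, not a measured result.
ANNUAL_US_ROAD_DEATHS = 40_000  # "more than 40,000"
REDUCTION = 0.78                # "more than 78 percent"


def projected_lives_saved(annual_deaths: int, reduction: float) -> float:
    """Deaths avoided if the reduction applied to every fatal crash."""
    return annual_deaths * reduction


saved = projected_lives_saved(ANNUAL_US_ROAD_DEATHS, REDUCTION)
print(f"{saved:,.0f} lives per year")  # prints "31,200 lives per year"
```

Under that assumption the result clears the "more than 30,000" threshold; a smaller real-world reduction in fatal crashes specifically would lower it.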

This potential benefit to road safety has led many policymakers to look for ways to embrace, rather than discourage, this technological development. On the same day as Hawley's speech, Secretary of Transportation Sean P. Duffy announced plans to modernize safety standards with autonomous vehicles in mind, recognizing the important role of transportation innovation. Across the nation, both red and blue states have sought to encourage the testing and development of autonomous vehicles: 29 states have enacted legislation related to autonomous vehicles, and a further 11 have acted on the issue through executive orders. These policymakers recognize that we should see technology as a way to assist and improve transportation safety, encouraging human flourishing and improving our transportation ecosystem.

When autonomous vehicles get into accidents, it makes the national news because it is so rare. Meanwhile, human-operated vehicle accidents are so frequent that we consider them a sad but normal occurrence. Hawley may nearly have it backward: It's humans, not AI, who have proven to be dangerous operators of cars and trucks. We should consider the costs of the lives lost to human error if we deter the development of this important technology. Instead of trying to restrict the potential application of AI technology, we should be excited about its potential.


"Tens of thousands of people die each year in crashes where human error was the cause or a contributing factor."

...especially when the drivers are drunk or high.

or senile

or dicking around with a cell phone

Or an illegal, with a faked license. .. this is fun lets do more.

Could be distracted while high and drunk while simultaneously getting a blowjob from a Mexican illegal whore.

Apologies to ENB.

"Hawley may nearly have it backwards: It's humans, not AI, who have proven to be dangerous operators of cars and trucks."

It's a little early in the technology development and rollout stages to be making this sort of comment.

Consider where the autonomous vehicles are operated, in controlled environments even if they are on public roadways.

The majority however are mine sites with autonomous dump trucks which is not really acceptable evidence.

"...It's a little early in the technology development and rollout stages to be making this sort of comment..."

Living in a low-traffic hilly area of SF surrounded by (relatively) cheap flatlands, we have watched the development of these things with humor and concern for ~20 years (neither Bing nor Google will tell you when the first embarrassing test began; are you surprised?)

When they first rolled out, it was obvious that the engineers had badly underestimated both the data acquisition requirements and the correct processing of that; the cars were incapable of by-passing a double-parked car!

Any of you who hold a GA pilot's license know the attention and control requirements for that activity and should also know the number of decisions/minute are far, far fewer than driving on public city streets.

Dunno about you, but in city driving, I want to make eye contact to make decisions in questionable encounters; no autonomous vehicle offers that.

Who the fuck hires you people to write for Reason? Continued...

I see that your rhetorical style is appeal to emotion (a logical fallacy) using big, scary-looking numbers.

Don your critical thinking cap and put those numbers into perspective.

The population of the United States, in 2024, was approximately 340 million people. Forty thousand of that is 0.000124% of the population. It is statistically insignificant.

The population of Missouri in 2023 was approximately 6.2 million people. One thousand of that is, nominally higher than the national value, about 0.000163%.

Both values are marginally over one ten-thousandth of a percentage point. For reference, 1% of 340m is 3.4 million and 1% of 6.2m is 62,000.

FIFY

For reference, Waymo avoids conditions outside of its Operational Design Domain (ODD) which includes heavy rain, heavy snow, and ice. These conditions impair their sensors affecting their ability to detect lane markings, traffic, and road surfaces accurately. Their vehicles will pull over to a minimal risk condition, return to base, or reroute to avoid unsafe situations caused by such weather events.

Again, don your critical thinking cap. Just because there have been millions of safe miles in tested conditions doesn't mean they've been tested in every condition or terrain.

To just accept, "whelp, they're safe," based on their say so, based on performance in cherry-picked conditions, is simply appeal to authority (another logical fallacy).

[Aside: How many people will die because a robocar won't drive them to the ER or Urgent Care because it's raining outside? Often, driving in bad weather is simply unavoidable.]

Again, reference above. Waymo avoids conditions outside of its ODD.

Again, put on your critical thinking cap.

Any accident reduction was within Waymo's Operational Design Domain (i.e. cherry-picked conditions).

Applying the same math above, 30,000 Americans represent, based on 2024 population, only approximately 0.0000882% of the population. That is eight hundred-thousandths of one percent.

To my opening point, your title is deceptive and wrong.

First and foremost, prohibiting driverless cars will not kill anybody. Sure, it won't help reduce accidents and traffic fatalities like robocars marginally can in limited, optimal conditions. But Hawley's policy won't actually kill anyone.

Second, the verbiage and hyperbole ("Kill a Lot of People") are a blatant (and false) appeal to emotion. The number, while scary sounding -- ooooh! thousands of people -- is certainly not "a lot." It is a vanishingly small number of people.

"If it saves just one life!!" Right? Fuck off.

Note, I don't disagree with the overarching premise: government ought not deter innovation for stupid reasons. However, you fail to persuade with click-bait headlines, hyperbole, and logical fallacies (appeal to emotion and appeal to authority).

See.Less, in addition to being emotional you are also innumerate. Your calculations are all off, understating the percentages, by 2 orders of magnitude.

Oooh! Clever ad hominem!

More ad hominem.

Ah. Fast to ascribe a failure of education or understanding rather than simply note a procedural error. Another ad hominem! That's a hat trick!

Yes. You are correct. I neglected to multiply my quotient by 100. As such, correcting my numbers:

* 40k deaths nationwide divided by 340m Americans, then multiplied by 100, yields a result of approximately 0.012% (nominally over one one-hundredth of one percent).

* 1k deaths in Missouri (2023) divided by 6.2m Missourians, then multiplied by 100, yields a result of approximately 0.016% (nominally over one one-hundredth of one percent).

* 30k lives predicted to be "saved" by robocars divided by 340m Americans, then multiplied by 100, yields a result of approximately 0.001% (one one-thousandth of a percent).

As a percentage of the populations (national and Missouri), the numbers are still very, very tiny. Minuscule, even.

Therefore, my conclusions stand. While the numbers, as raw data points, may look scary, the reality is that they are very, very small values.

Now, do you have anything interesting or substantive in response to the content of my post?

Go try a Tesla self drive.

See less is just what you are doing, and you did it again. 30,000 is .0088% of 340,000,000. At least you are only off by a factor of 8.8 this time. If these numbers are very, very small values, what do you think of the much smaller average of 7,208 US deaths per year between 1965 and 1972 in the Vietnam War?
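The percentages disputed in this exchange can be reproduced in a few lines. This is a minimal sketch using only the round figures the commenters themselves cite (340 million Americans, 6.2 million Missourians, and 40,000, 1,000, and 30,000 deaths); the helper name `share_percent` is hypothetical.

```python
def share_percent(count: float, population: float) -> float:
    """Return count as a percentage of population."""
    return count / population * 100


US_POPULATION = 340_000_000      # commenter's 2024 estimate
MISSOURI_POPULATION = 6_200_000  # commenter's 2023 estimate

national = share_percent(40_000, US_POPULATION)       # annual US road deaths
missouri = share_percent(1_000, MISSOURI_POPULATION)  # Missouri deaths, 2023
projected = share_percent(30_000, US_POPULATION)      # projected lives saved

print(f"national:  {national:.4f}%")   # prints "national:  0.0118%"
print(f"missouri:  {missouri:.4f}%")   # prints "missouri:  0.0161%"
print(f"projected: {projected:.4f}%")  # prints "projected: 0.0088%"
```

The first two values agree with the corrected figures of roughly 0.012% and 0.016%. The third comes out near 0.0088% rather than 0.001%, which is the factor-of-8.8 gap noted in the reply.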

Speaking of logical fallacies:

"...If these numbers are very, very small values, what do you think of the much smaller average of 7,208 US deaths per year between 1965 and 1972 in the Vietnam War?..."

Misdirection is a hallmark of a dishonest POS; congratulations!

How is it misdirection to simply try to determine how See.More decides when a number of violent deaths is not important?

So if he does it, you get to also? You're REALLY bad at this.

If he does what?

You are correct when you say Waymo does not operate under all weather conditions. The Waymo Operational Design Domain lists snow, ice and hail as not part of the current domain, but Waymo also states that it is testing operation under these conditions. I expect that there are other weather related conditions where they would also decide to not operate, e.g. hurricanes and flooding. Also they only operate in areas where they have mapped. I believe that they are currently mapping in Dallas.

"...but Waymo also states that it is testing operation under these conditions..."

So let's leave operations under those conditions out of the comparisons?

Given you are a propagandist, I'm sure you have a weasel-out to exclude those from any consideration, right?

0.0045% was the homicide rate in NYC last year but Trump wants to put it under military rule.

Unless I missed it, the article also IGNORED total miles. When analyzed per mile driven, autonomous vehicles have a significantly worse safety record than humans.

Do you have a source for that?

Who pays you?

Waymo advertises 100M a year.

American drivers are estimated 3.2T a year.
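To put those two mileage figures side by side, here is a rough sketch. It takes the commenters' numbers at face value (100 million Waymo miles and 3.2 trillion human-driven miles per year) and combines them with the article's figure of about 40,000 annual deaths. Mixing these sources is an assumption made for illustration, and the constant names are hypothetical.

```python
WAYMO_MILES_PER_YEAR = 100e6  # commenter's figure ("100M a year")
US_MILES_PER_YEAR = 3.2e12    # commenter's figure ("3.2T a year")
US_ROAD_DEATHS = 40_000       # article's figure ("more than 40,000")

# Waymo mileage as a percentage of all US miles driven.
waymo_share_percent = WAYMO_MILES_PER_YEAR / US_MILES_PER_YEAR * 100

# Fatalities per 100 million miles across all US driving.
deaths_per_100m_miles = US_ROAD_DEATHS / US_MILES_PER_YEAR * 100e6

print(f"Waymo share of US miles: {waymo_share_percent:.6f}%")
print(f"US deaths per 100M miles: {deaths_per_100m_miles:.2f}")
```

On these inputs the share is about 0.003% of annual US mileage, and the overall fatality rate is about 1.25 deaths per 100 million miles. That is why per-mile comparisons, not raw crash counts, are the relevant measure.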

Oh, and do you have any data supporting your (implied) claims, or (like the low-watt bulbs promoting EVs) hoping you can skate by requiring others to supply as yet unknown specifics?

Here's a link to a study comparing the safety of autonomous vehicles to human driven, and it is based on miles driven.

https://pubmed.ncbi.nlm.nih.gov/39485678/

Minus those dangerous times Waymo doesn't operate?

Including mine-truck miles?

You're not very good at this.

You do seem to want to discuss mining trucks. So I will address autonomous mining trucks and how they relate to autonomous road vehicles.

Autonomous mining trucks have satisfied the functional requirements of the mining industry while at the same time greatly improving safety when compared to human drivers. The knowledge gained in the development of autonomous mining trucks can be applied to autonomous road automobiles, but the requirements for the two autonomous domains are quite different. Mining trucks operate in a tightly controlled environment. The roads are all private, and access to the roads is restricted to mining trucks, service vehicles for the maintenance of the mining roads, and other service vehicles required for the mining operation. The location of all people and vehicles is monitored using V2X (vehicle to everything) communication. The mining trucks have predetermined routes that they follow repeatedly. Parts of the intelligence for controlling the trucks are located both in central locations and in the trucks. The mining trucks have automatic right of way over all other traffic. The roads are maintained continuously to avoid road problems that would stop the mining trucks. The mining trucks must be extremely rugged vehicles designed to operate for long periods of time in the conditions found at a mining operation.

In contrast, the requirements for autonomous road vehicles are much more complex. All types of roads and mixtures of road traffic must be supported. Locations of all vehicles and pedestrians are not provided by a system such as V2X. Autonomous road vehicles do not have right of way over all other traffic. Autonomous road vehicles require support for higher speeds than mining trucks require. The intelligence for autonomous road vehicle operation is mostly located in the vehicle; constant communications with a central service cannot be assumed. Unexpected road conditions must be handled by the autonomous road vehicles. Traffic control and instructions must be obeyed by the autonomous road vehicle. The vehicles should be designed to operate for at least the range of conditions for which a human driver would be expected to be able to operate the vehicle.

As a side note, mining operations are beginning to use autonomous road vehicle technology for the other vehicles that operate on the private mining roads.

If you are saying that mining trucks currently fulfill a more complete set of their requirements than autonomous road vehicles fulfill the complete set of their requirements, then yes of course they do, but the requirements are different and much more challenging for the road vehicles. That's why autonomous road vehicles are being rolled out in stages where a subset of the requirements that is sufficient for a specific task and location has been met. That's why it makes sense for Waymo to be rolling out to Austin and Dallas. The weather conditions that Waymo currently doesn't handle are the same unusual conditions that Dallas drivers can't handle, and for the type of hail that we can get in Texas, don't expect any car or driver to be able to handle it.

Reply to "times Waymo doesn't operate"

The study is comparing the safety per mile driven of autonomous Waymo vehicles with human driven vehicles in the same geographic areas during the same time periods. Waymo autonomous vehicles will pull to the side of the road and pause operations when weather conditions make it unsafe to operate. In Phoenix the unsafe weather conditions are primarily monsoon storms with heavy rain and flooding, conditions where humans shouldn't be driving either. Haboobs (dust storms) can be unsafe for human driving, but with radar and lidar Waymo can continue to operate.

"...According to released safety data, the vehicles had reduced accidents by more than 78 percent in every category examined, for both passengers and other road users like pedestrians or cyclists.

Again, put on your critical thinking cap..."

I doubt she has experience with driverless cars; if she did, she'd at least attempt to account for what those of us who do regularly resort to: Simply stopping and letting that idiot-driven vehicle get out of the way entirely before proceeding.

Are you saying that while driving when you encounter a driverless car you stop your car and wait until the driverless car is out of the way? Where do you stop your car? In the middle of the road? At the side of the road? Do you block traffic while waiting? If the driverless car is traveling in the same direction as you, why don't you just pass it? It won't cause you any problems if it is receding in your rearview mirror, or the digital equivalent of the mirror.

Well, my Tesla is a safer driver than I am. That’s all I care about.

In which case, please notify the public when you're driving. Or quit driving. That's all I care about.

The vast majority of these accidents are caused by human error. Although the exact number varies, as accidents often have multiple causes, studies over the years have found that human error caused or contributed to between 90 percent and 99 percent of auto accidents.

Up to 90-99% of automobile deaths up until Mar. of 2020 and then back up to 90-99% again after Jan. 7, 2021. Trust the experts.

Until autonomous vehicles account for at least 1% of total miles driven, that is actually a condemnation of autonomous vehicle "safety".

Are you assuming the remainder of the 90-99% were the fault of vehicle autonomy?

We're assuming propagandists like you have no data to back your (implied) claims.

Prove me wrong.

Or is it that you simply refuse to look at the data?

One more diversion - what a surprise!

Another evasion, you still haven't replied to the data that I posted.

When I was driving a semi truck my trucker's GPS consistently wanted me to make U turns. These turns were legal but as we have seen can be very dangerous. It takes a real live human being to weigh the risk. As far as I know autonomous trucks are limited to the interstates and nearby distribution centers. But big trucks go everywhere from shithole cities to dirt roads in the mountains and deserts. And sometimes it rains. And sometimes it snows, a lot. It's simply not possible to maintain a perfect driving environment for these things. And if they all park on the shoulder waiting for spring we'll have bigger problems.

Gee, Mark U and aajax have been challenged on their bullshit and seem to have wandered off to lick their wounds.

Fuck the both of you; those who post here are not imbeciles. We can spot paid propagandist shits for some well-funded tech a mile off.

Fuck off and die.

I have replied to you.

After those who pay you for your shilling gave you some 'answers'? You REALLY SUCK at this; they should demand their money back.

Then why are you so unable to respond?

The issue boils down to trust. Do I trust the very sophisticated and complex computer program(s) written by programmers who don't (and probably can't) anticipate all of the contingencies occurring while I am driving more than I trust my own experience, attention, and reaction time? To date, the answer, based on personal experience, is "no."

I'm not sure you understand how this software works, but when you add "to date", I agree that for the most part today we are not at a point where we can all safely move to autonomous vehicles.

In the future, unless you are enhanced, there will be no way you could compete with autonomous software in experience, attention, and reaction time.

"In the future, unless you are enhanced, there will be no way you could compete with autonomous software in experience, attention, and reaction time."

Assertions from paid shills should be and are ignored.

I assume that as a libertarian, you're not advocating that all people be REQUIRED to travel in "driverless" cars. In fact, such cars are driven by programmers who wrote very complex and sophisticated computer programs that "drive" the cars, even though they (the programmers) cannot anticipate all of the contingent conditions in which the cars will be used.

I am not advocating that people be required to travel in "driverless" cars, but I expect in the not too distant future for owners of some roads to start requiring autonomous vehicles. How do you feel about being required to travel in autonomous elevators in some buildings? I do like taking the stairs when I can, but sometimes I don't get that choice.

If you think middle Americans freak out when you try to take their guns, just watch what happens when you try to take their steering wheels.

As I already said, they just won't be allowed on some roads. While the steering wheel may be one of the last parts to be removed, much of the replacement of the control of the car with software has already happened.

^^^Pedant missing the point.

The point is that the change is happening incrementally, and what is at first looked at with skepticism or fear soon becomes commonplace and not long after essential. Do you want to drive without antilock braking?

My antilock brakes have never decided to refuse to let the car move against my will. You're just not getting the point here.

Good thing computers are perfect at adapting, never have bugs or coding flaws, and cannot be hacked.

(I'd have added rise up and overthrow us, but at this point I for one welcome our new robot overlords.)

Yes it's also a good thing that humans are perfect at adapting, never make mistakes, and never take bribes.

The same humans who will be programming those self driving cars.

^+1 - And thereby visiting the errors on all of the products at once, and NOT LEARNING it was an error.

Fuck Josh Hawley, but come on with that headline and the appeals to emotion.

I will never understand Reason's (and Stossel's) love of automated cars. It's like the most anti-libertarian thing there is, it means you no longer control where you can go, it's entirely in the hands of government and tech companies.

How is it any different? Do you think that the government doesn't already control where you can go? Do you think that the government doesn't track your travel? Do you think that your car, if it was made recently, doesn't track you and monitor your driving?

Snooping on you is not the same thing as actually being able to remotely control your vehicle. And no one is forcing us (yet) to drive newer vehicles with advanced spying technology.

Finally someone got around to mentioning the worst problem with them. They give The Authorities the ability to control where you may or may not go, and when.

I agree that the "Authorities" should be restricted in the control that they have, but crippling the capabilities of your car is not the way to address that issue.

Be nice if politicians didn't think it was their job to control the world.

How many control 'accidents' have the politicians been in?

We elect the controlling politicians. MAGA loves them.

Right, right... De-Regulation and Abolishing is all about 'controlling politicians'. /s

Unlike human operators, autonomous vehicles don't get drunk, drowsy, or distracted.

Unlike human operators, autonomous vehicles depend on certain road conditions - like clear lane delineations. There's a stretch of road I drive every day where cars in autonomous mode clearly get confused. It's because the paint on the left side is all but gone, and the dots on the right are clearly in need of repair. Swervy swervy go the cars that don't know how to read that, as they alert the driver to go back to manual controls.

Also not a fan of when autonomous or semi-autonomous cars jam on their brakes because their algorithms identify something ahead of them that demands immediate correction, that a normal person would just ease off their accelerator in response to.

Look, at the end of the day, it's an all too common tale: a technology in its infancy, that too many people want to rush into despite its infancy, and too many people want to immediately outlaw because of its infancy.

What we really need is someone to club society over the head and say, "Slow your roll guys, let's develop this further before we start tilting one way or the other."

We went all-in on "green energy" because we were convinced that mother gaia was going to die unless the cockroaches scuttling around on her paid fealty to the sun god. Now we know that environmental claptrap was a complete boondoggle. We banned everything from bump stocks to silencers because we were convinced that they would turn society into Mad Max because a few bad people did a few bad things with them. Now we know that was a psychotic over-reaction. We went all-in on LGBT-mania - to the point of intentionally castrating children - because we were convinced of the progressive lie that serious mental health problems are just "alternative lifestyles."

We do this ALL THE TIME, because we never stop to think: Hey, does this make any sense at all?

I love the idea of autonomous vehicles. Everything from mag highways to whatever craziness Space Man Bad is dreaming up. But I also know that the tech is in its infancy.

We need not consider it like it's matured, let alone act rashly because it hasn't.

I don't want to think about how autonomous vehicles are going to survive on the Cross Bronx Expressway.

For all you people bitching about autonomous vehicles and "human error", even the autonomous vehicle mishaps are caused by human error...