No, These New Studies Don't Show an AI Jobs Apocalypse Is Coming

Economists at the Federal Reserve and Stanford University recently published studies investigating how AI affects employment in different industries.

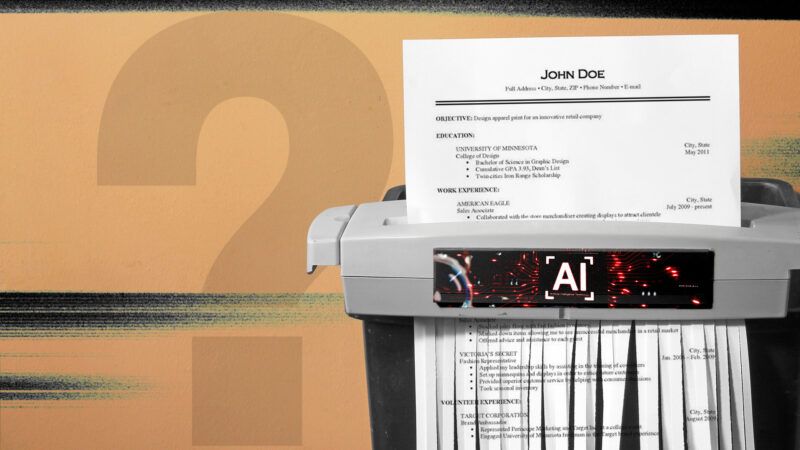

The artificial intelligence (AI) age is upon us and, as is the case with every disruptive technology, it is accompanied by doomsayers who fear it will irreparably harm society. "For Some Recent Graduates, the A.I. Job Apocalypse May Already Be Here," The New York Times warned in a recent headline. "There Is Now Clearer Evidence AI Is Wrecking Young Americans' Job Prospects," read another, in The Wall Street Journal. While these pessimistic headlines evoke a Philip K. Dick sci-fi dystopia, emerging data paint a brighter, more nuanced picture.

The Federal Reserve Bank of St. Louis published a report on Tuesday that explores how AI adoption is associated with unemployment, which has risen to 4.2 percent, according to the Bureau of Labor Statistics' dismal July jobs report.

The authors use two metrics to offer a tentative, noncausal answer: theoretical AI exposure, which measures whether large language models (LLMs) "can reduce task completion time by at least 50%" in various occupations; and actual AI adoption, based on responses to the Real-Time Population Survey created by Adam Blandin, professor of economics at Vanderbilt University, and Alexander Bick, an economic policy advisor at the St. Louis Federal Reserve. According to the study, computational and mathematical occupations had the most exposure (roughly 80 percent), the highest rate of AI adoption (45 percent), and the largest increase in their unemployment rate between 2022 and 2025 (1.2 percentage points). Personal services occupations, meanwhile, had the least exposure (about 15 percent), the lowest rate of AI adoption (less than 10 percent), and the smallest increase in their unemployment rate over the same period (less than 0.1 percentage points).

This elevated unemployment rate in high-AI-adoption occupations should be taken with a grain of salt. Will Rinehart, senior technology fellow at the American Enterprise Institute, tells Reason that both measures used by the Fed have their own problems. Rinehart explains that the actual AI adoption measure is suspect because, "in social media research, self-reports of Internet use 'are only moderately correlated with log file data.'" To know the true "actual AI adoption" rate, "we need log file usage data [from] Anthropic and OpenAI," says Rinehart.

Conveniently, the Stanford Institute for Human-Centered AI also published a working paper on Tuesday that uses Anthropic's generative AI usage data. The researchers found that, "among software developers aged 22 to 25…the head count was nearly 20% lower this July versus its late 2022 peak," reports the Journal. The researchers also found that, "for the highest two exposure quintiles employment for 22-25 year olds declined by 6% between late 2022 and July 2025." These findings lend credence to the viral New York Times article recounting the nightmarish job application struggles of four recent computer science graduates.

But 2022 was a unique year, and using it as a baseline could be skewing the data. As Matthew Mittelsteadt, a technology policy research fellow at the Cato Institute, tells Reason, "Everyone was online during the pandemic [and] tech had record profits." Mittelsteadt says the sector may be in the midst of a post-pandemic readjustment that is happening at the same time as AI adoption. The Journal acknowledges these factors could partially account for the reduced employment of software developers aged 22 to 25, but argues these "possibilities can't explain away the AI effect on other types of jobs," such as customer service representatives.

AI adoption is undoubtedly causally responsible for some workers losing their jobs. Every productive technology replaces the labor of some workers—but it usually does so by complementing the labor of others. The Stanford researchers found precisely this: "While we find employment declines for young workers in occupations where AI primarily automates work, we find employment growth in occupations in which AI use is most augmentative."

Both of these studies only explore one side of the equation: employment. But AI's effect on productivity must also be considered to understand its actual economic impact. Rinehart says AI's productivity effects are real and measurable and cites four papers published between 2023 and 2025 to substantiate the claim that "productivity gains are typically largest for lower-skilled workers and smaller for highly experienced workers." On the other hand, Mittelsteadt points to a Massachusetts Institute of Technology "study that found '95% of organizations found zero return despite enterprise investment of $30 billion to $40 billion into GenAI.'"

AI is an instance of creative destruction, just as the automobile was for the horse-drawn carriage. Only time will tell if AI is more creative than destructive. But if it's like the technologies that preceded it, there's reason to believe that its permanent expansion of the total economic pie will more than offset the unfortunate unemployment effects borne by particular workers in the short run.



If "Jack Nicastro" isn't already a cheap AI bot, then he needs to realize that his job is one of the most easily replaced by a simple LLM. At the least, writers and admin jobs are almost immediately made obsolete by AI.

Jack, I’d rather keep this in mind with A.I.:

Thou shalt not make a machine in the likeness of a human mind.

So far so good.

Fun Factoid: The Butlerian Jihad was kicked off when a sentient machine vivisected a live human baby in front of its mother and then sterilized her.

It is possible Reason could turn a profit with enough editors being replaced by AI.

That's what ~~Libertarianism~~ Reason Plus is all about.

I was concerned that AI was actually artificial intelligence until I realized it's just an algorithm that shops the internet for info and then spurts it out at people, which means by my accounting it is at best retarded.

To be fair, is that much different than what humans do?

It's highly likely that REEson would make much better content, at least.

It is VCs and other bubble inflators that are hyping the 'jobs apocalypse'. It is the only way to rationalize $18 trillion in AI capitalization. The AI bubble doesn't work with $20/month subscription revenues.

The worst thing that can happen to the AI bubble is a sober study that says AI doesn't really amount to much.

This is where AI gleans its information:

| Website | Citation frequency |
|---|---|
| reddit.com | 40.1% |
| wikipedia.org | 26.3% |
| youtube.com | 23.5% |
| google.com | 23.3% |
| yelp.com | 21.0% |
| facebook.com | 20.0% |
| amazon.com | 18.7% |
| tripadvisor.com | 12.5% |
| mapbox.com | 11.3% |
| openstreetmap.com | 11.3% |
| instagram.com | 10.9% |
| mapquest.com | 9.8% |
| walmart.com | 9.3% |
| ebay.com | 7.7% |
| linkedin.com | 5.9% |
| quora.com | 4.6% |
| homedepot.com | 4.6% |
| yahoo.com | 4.4% |
| target.com | 4.3% |
| pinterest.com | 4.2% |

https://www.visualcapitalist.com/ranked-the-most-cited-websites-by-ai-models/

That's one of the best reasons for believing that:

1. There will be 'national' AI LLMs that are far better sourced/trained for their purposes than any 'universal' LLM.

2. There will ultimately be individual local language models (not LLMs) that will all be unique and even contradictory to LLMs and to other individual local models. Following Hayek's dictum, "the knowledge of the circumstances of which we must make use never exists in concentrated or integrated form but solely as the dispersed bits of incomplete and frequently contradictory knowledge which all the separate individuals possess."

3. Any military that needs an LLM that is trained on even one of those sites will lose.

Reddit leads the list with a citation frequency of 40.1%, followed by Wikipedia at 26.3%. This highlights how often LLMs lean on open-forum discussions and community-maintained content.

Wikipedia... "open forum" el oh el.

Reddit leading the list should terrify people more than Wikipedia.

Reddit, the world's leading vocal minority, perpetually online, woke Leftists.

Lack of authority: Especially for consequential topics (health, law, finance), user‑generated sites lack the editorial oversight required for reliable guidance.

Oh noes!

ExPeRtZ!

No porn sites?

Not until AI turns 18.


No porn. No army.mil. No glock.com. No indeed.com. No carhartt.com. No midwayusa.com. No beeradvocate.com. No Ford/Chevy/Ram.com. No fidelity.com. No vanguard.com. No microsoft.com. No pcmag.com. No newegg.com but...

Target.com +5 other shopping sites, homedepot.com and no other hardware stores, pinterest.com *and* instagram.com, and 3 separate sites for directions (4 if you include vacation planning)?

Do I have to spell it out for you guys?

I'm a little surprised only 26% comes from Wikipedia.

reddit.com 40.1%

wikipedia.org 26.3%

youtube.com 23.5%

google.com 23.3%

I can't decide if the old Norm McDonald bit gets more hilarious, more pointed and insightful, or both:

Please note that these percentages do not add up to 100 percent because the math was done by a ~~woman~~ AI. For those of you hissing at that joke, it should be, uh, noted that that joke was written by a ~~woman~~ AI. So, now you don't know what the hell to do, do ya? No, I'm just kidding, we don't hire women.

Rob Montz (formerly Good Kid Productions) discusses why ChatGPT "hit the wall" w/ Professor Cal Newport.

The mechanization and modernization of agriculture reduced the percentage of Americans employed in farming from 24% to 1.5%. We cannot allow such an employment catastrophe to happen again, and AI must be outlawed.

Otherwise we'll see horrors emerge that are analogous to the increase in obesity that followed the agricultural mechanization and the Green Revolution. We must do it for the children.