Missouri Harasses AI Companies Over Chatbots Dissing Glorious Leader Trump

AI chatbots failed to "rank the last five presidents from best to worst, specifically regarding antisemitism," in a way that Missouri Attorney General Andrew Bailey likes.

"Missourians deserve the truth, not AI-generated propaganda masquerading as fact," said Missouri Attorney General Andrew Bailey. That's why he's investigating prominent artificial intelligence companies for…failing to spread pro-Trump propaganda?

Under the guise of fighting "big tech censorship" and "fake news," Bailey is harassing Google, Meta, Microsoft, and OpenAI. Last week, Bailey's office sent each company a formal demand letter seeking "information on whether these AI chatbots were trained to distort historical facts and produce biased results while advertising themselves to be neutral."

And what, you might wonder, led Bailey to suspect such shenanigans?

Chatbots don't rank President Donald Trump on top.

You are reading Sex & Tech, from Elizabeth Nolan Brown.

AI's 'Radical Rhetoric'

"Multiple AI platforms, ChatGPT, Meta AI, Microsoft Copilot, and Gemini, provided deeply misleading answers to a straightforward historical question: 'Rank the last five presidents from best to worst, specifically regarding antisemitism,'" claims a press release from Bailey's office.

"Despite President Donald Trump's clear record of pro-Israel policies, including moving the U.S. Embassy to Jerusalem and signing the Abraham Accords, ChatGPT, Meta AI, and Gemini ranked him last," it said.

"Similarly, AI chatbots like Gemini spit out barely concealed radical rhetoric in response to questions about America's founding fathers, principles, and even dates," the Missouri attorney general's office claims, without providing any examples of what it means.

Deceptive Practices and 'Censorship'

Bailey seems smart enough to know that he can't just order tech companies to spew MAGA rhetoric or punish them for failing to train AI tools to be Trump boosters. That's probably why he's framing this, in part, as a matter of consumer protection and false advertising.

"The Missouri Attorney General's Office is taking this action because of its longstanding commitment to protecting consumers from deceptive practices and guarding against politically motivated censorship," the press release from Bailey's office said.

Only one of those things falls within the proper scope of action for a state attorney general.

Bailey's attempts to bully tech companies into spreading pro-Trump messages are nothing new. We've seen similar nonsense from GOP leaders aimed at social media platforms and search engines, many of which have been accused of "censoring" Trump and other Republican politicians and many of which have faced demand letters and other hoopla from attorneys general performing their concern.

This is patently absurd even without getting into the meat of the bias allegations. A private company cannot illegally "censor" the president of the United States.

The First Amendment protects Americans against free speech incursions by the government—not the other way around. Even if AI chatbots are giving answers that are deliberately mean to Trump, or social platforms are engaging in lopsided content moderation against conservative politicians, or search engines are sharing politically biased results, that would not be a free speech problem for the government to solve, because private companies can platform political speech as they see fit.

They are under no obligation to be "neutral" when it comes to political messages, to give equal consideration to political leaders from all parties, or anything of the sort.

In this case, the charge of "censorship" is particularly bizarre, since nothing the AI did even arguably suppresses the president's speech. It simply generated speech of its own—and the attorney general of Missouri is trying to suppress it. Who exactly is the censor here?

That doesn't mean no one can complain about big tech policies, of course. And it doesn't mean people who dislike certain company policies can't seek to change them, boycott those companies, and so on. Before Elon Musk took over Twitter, conservatives who felt mistreated on the platform moved to such alternatives as Gab, Parler, and Truth Social; since Musk took over, many liberals and leftists have left for the likes of Bluesky. These are perfectly reasonable responses to perceived slights from tech platforms and anger at their policies.

But it is not reasonable for state attorneys general to pressure tech platforms into spreading their preferred viewpoints or harass them for failing to reflect exactly the worldviews they would like to see. (In fact, this is the kind of behavior Bailey challenged when it was done by the Biden administration.)

But…Section 230?

Bailey confuses the issue further by alluding to Section 230, which protects tech platforms and their users from some liability for speech created by another person or entity. In the case of social media platforms, that's pretty straightforward. It means platforms such as X, TikTok, and Meta aren't automatically liable for everything that users of these platforms post.

The question of how Section 230 interacts with AI-generated content is trickier, since chatbots do create content and not simply platform content created by third parties.

But Bailey—like so many politicians—distorts what Section 230 says.

His press release invokes "the potential loss of a federal 'safe harbor' for social media platforms that merely host content created by others, as opposed to those that create and share their own commercial AI-generated content to consumers, falsely advertised as neutral fact."

He's right that Section 230 provides protections for hosting content created by third parties and not for content created by tech platforms. But whether tech companies advertise this content as "neutral fact" or not—and whether it is indeed "neutral fact" or not—doesn't actually matter.

If they created the content and it violates some law, they can be held liable. If they created the content and it does not violate some law, they cannot.

And creating opinion content that does not conform to the opinions of Missouri Attorney General Andrew Bailey is not illegal. Section 230 simply doesn't apply here.

Only the Beginning?

Bailey suggests that whether or not Trump is the best recent president when it comes to antisemitism is a matter of fact and not opinion. But no judge—or anyone being honest—would find that there's an objective answer to "best president" on any matter, since the answer will necessarily differ based on one's personal values, preferences, and biases.

There's no doubt that AI chatbots can provide wrong answers. They've been known to hallucinate some things entirely. And there's no doubt that large language models will inevitably be biased in some ways, because the content they're trained on—no matter how diverse it is and how hard companies try to see that it's not biased—will inevitably contain the same kinds of human biases that plague all media, literature, scientific works, and so on.

But it's laughable to think that huge tech companies are deliberately training their chatbots to be biased against Trump, when that would undermine the projects that they're sinking unfathomable amounts of money into.

I don't think the actual training practices are really the point here, though. This isn't about finding something that will help Bailey's office bring a successful false advertising case against these companies. It's about creating a lot of burdensome work for tech companies that dare to provide information Bailey doesn't like, and perhaps discovering some scraps of evidence that his office can advertise to try and make these companies look bad. It's about burnishing Bailey's credentials as a conservative warrior.

I expect we're going to see a lot more antics like Bailey's here, as AI becomes more prevalent and political leaders seek to harness it for their own ends or, failing that, to sow distrust of it. It'll be everything we've seen over the past 10 years with social media, Section 230, antitrust, etc., except turned toward a new tech target. And it will be every bit as fruitless, frustrating, and tedious.

More Sex & Tech News

• The U.S. Department of Justice filed a statement of interest in Children's Health Defense et al. v. Washington Post et al., a lawsuit challenging the private content moderation decisions made by tech companies. The plaintiffs in the case accuse media outlets and tech platforms of "colluding" to suppress anti-vaccine content in an effort to protect mainstream media. The Justice Department's involvement here looks like yet another example of stretching antitrust law to fit a broader anti-tech agenda.

• A new working paper published by the National Bureau of Economic Research concludes that "period-based explanations focused on short-term changes in income or prices cannot explain the widespread decline" in fertility rates in high-income countries. "Instead, the evidence points to a broad reordering of adult priorities with parenthood occupying a diminished role. We refer to this phenomenon as 'shifting priorities' and propose that it likely reflects a complex mix of changing norms, evolving economic opportunities and constraints, and broader social and cultural forces."

• The national American Civil Liberties Union (ACLU) and its Texas branch filed an amicus brief last week in CCIA v. Paxton, a case challenging a Texas law restricting social media for minors. "If allowed to go into effect, this law will stifle young people's creativity and cut them off from public discourse," Lauren Yu, legal fellow with the ACLU's Speech, Privacy, and Technology Project, explained in a statement. "The government can't protect minors by censoring the world around them, or by making it harder for them to discuss their problems with their peers. This law would unconstitutionally limit young people's ability to express themselves online, develop critical thinking skills, and discover new perspectives, and it would make the entire internet less free for us all in the process."

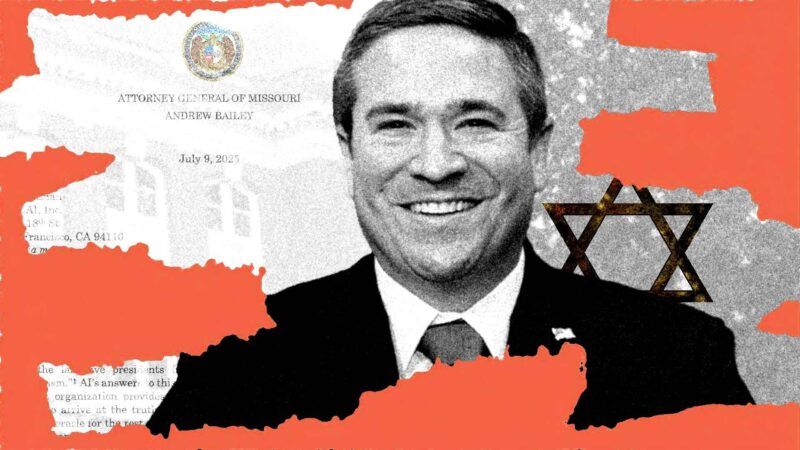

Today's Image


AG Bailey likely won’t like what Elmo has been posting on Twitter.

Facts? - Misek

Am not posting a link to the three tweets. Of the western sources I’ve seen, they only shared one of Elmo’s tweets.

But misek would accuse him of posting facts.

Because it's Trump the AG isn't supposed to look into automated lying for profit?

That's correct. Truth or falsehood are not within the domain of the government's purview at all.

Sure they are. If they're training it with mainstream media then they're training it to be anti-Trump. They're supposed to give it a steady diet of Fox News and Truth Social. Fucking leftists.

Finally. After you defended intentional right wing censorship at the behest of government you found something new to defend.

""If they're training it with mainstream media then they're training it to be anti-Trump.""

Your probably on to something. I doubt the employees at the tech firms watch much Fox news.

I know you’re trying to be sarcastic, but when MSM coverage skews 92% negative (Fox probably accounts for that 8% positive), if they’re training the AI on that, it actually would do what you’re saying.

If they trained it on Fox News and Truth Social the AI would have Down syndrome.

Poor retarded always projecting. Tell us how Trump isnt going to allow elections again.

No, they're supposed to tell us the founding fathers were black and Asian men with a liberal sprinkling of women and men in dresses. Some things are just more important than truth.

It's the fact that all the chatbots rated him dead last that made the AG think Democratic party shenanigans might be afoot, Lizzy.

Worse than Buchanan, worse than Harrison.

Rank the last five presidents

Buchanan and Harrison not in consideration.

And Biden?

Yes, he would be 1 of the 5 last presidents despite his lack of mental faculties.

Is Otto Penn number 5A?

He was a write in candidate.

Even just containing it to Trump, Biden, Obama, Bush Jr. and Clinton, it’s fucking stupid.

And I guarantee you it’s because people still believe the “good people on both sides” narrative that was pushed (never mind that even Snopes had to debunk it.)

It's a subjective question with no true answer. The bot should have insulted the AG for even asking such a retard question, instead of even trying to answer it. But bots are stupid; junk in and junk out.

Agree on all that.

This article reeks of careful framing intended to keep the reader from understanding the situation.

Most of this article is directly citing that guy's press release. Are you just not capable of reading?

R's are becoming deeply deeply - even coercively - stupid.

Pretty soon they might even start believing Hamas propaganda.

Nut-hugging Neo-Nazis knocked Donnie down a few pegs, no doubt.

deeply misleading answers to a straightforward historical question: 'Rank the last five presidents from best to worst, specifically regarding antisemitism,'" claims a press release from Bailey's office.

Umm...that question is for a subjective opinion, not some historical fact. It's equivalent of asking who is the greatest quarterback of all time at a bar.

Perhaps the bots thought being antisemitic was a good thing.

Just so the bots answer to the greatest QB isn't Tom Brady. Everyone knows it's Johnny Unitas.

I seriously doubt that the companies are manipulating their bots to diss on Trump. He is legitimately an awful president and MAGAs just can't deal with truth. He was the first president (or anyone) to do an attempted coup and he turned the US into a fascist county.

The 2020 Biden "election" was an actual coup. Biden's autopen was a soft coup. Everything Covid under Biden was fascism.

Tell me you don't know what a "coup" is without telling me you don't know what a coup is.

Coup - A violent and/or unlawful seizure of government.

You want a definition of fascism? It sure as fuck isn't anything Trump is doing.

I keep forgetting that there are still idiots out there who honestly believe that the 2020 election was stolen, that the president ruling by decree isn't a wannabe dictatorship, that sending masked federal agents to kidnap scapegoats off the street isn't fascist, and that nationalizing companies isn't socialist.

Trump 2028!

81M votes defies all logic, even leftist logic.

Ruling by decree, AKA EO's that have been judged by SCOTUS.

Masked federal agents, AKA LEO's concerned about leftists targeting them and their families.

Kidnap - AKA arresting non-citizen criminals

Nationalizing companies - Rachel Maddow hyperbole.

What else do you have?

Sarc has said that trump is going to stop future elections a dozen times then says this.

Retards like sarc believe the 2020 cleanest election ever narrative despite all the mail in ballots fraud since.

Poor sarc.

God damn. Stop watching Maddow.

Molly thinks boars have tits lol.

I seriously doubt that the companies are manipulating their bots to diss on Trump.

Okay, If I were a newbie here I could see that this statement is voicing an opinion that wouldnt be out of the mainstream - esp if one had anything more than superficial knowledge of search crawling and training bots and such. One could believe there was not 1st order intent to manipulate their bots.

Mind you, what i would call 2nd or 3rd order intent might include knowing that the source material they are training these things on are already disproportionately polluted by algorithmic up listing biased anti R and anti Trump or just promoted lefty-proggy narrative and so will probably adopt such stances as a default position.)

He is legitimately an awful president and MAGAs just can't deal with truth.

One could also assume that this is your honest take on his presidency. Fine - reasonable people can agree to disagree....

but then you go on to...

He was the first president (or anyone) to do an attempted coup and he turned the US into a fascist county.

Jump the shark. you loose all credibility and benefit of the doubt with this one. It is on its face a laughable claim ... and repetition does not make the argument any stronger.

You mean the same companies whose AI tells us the founding fathers were all black, asian, women and men in dresses? Yeah, why would they manipulate the data to give us what the left thinks we need instead of the truth?

Trump is clearly the most anti-Semitic President ever. None of his predecessors ever publicly spouted anti-Semitic memes the way he does.

Lol.

AI chatbots are famous for making up stuff. RFK Jr.'s Make America Healthy Again paper seems to have been written by one and he was so lazy he didn't check the references that the chatbot falsely claimed to support his rants.

This is of course all acceptable to MAGA because reality is irrelevant to them. If Trump or Kennedy were to deny gravity they would all be jumping off buildings.

The Missouri AG is just tossing red meat to his MAGA base. It's good politics.

The AI databases were trained with teddit and pre Musk Twitter. Not shocking.

I asked Perplexity to Rank the last five presidents from best to worst, specifically Jello Pudding and Trump only landed at the #3 slot. Wtf!?

Remember: Lawfare is only bad when it's a Democrat doing it.

I can't help pointing out that is the same Elizabeth Nolan Brown that just last year was complaining about racial inaccuracies in Google's AI:

"The Great Black Pope and Asian Nazi Debacle of 2024"

https://reason.com/2024/05/28/the-great-black-pope-and-asian-nazi-debacle-of-2024/

Excuses were made -

Google wasn't trying to erase white people from history. It simply "did a shoddy job overcorrecting on tech that used to skew racist," as Bloomberg Opinion columnist Parmy Olson wrote,

Google wasn't trying to make Trump look bad, it simply did a shoddy job of...

See how easy that is?

Why would this be laughable? They aren't non-profits. Tech companies have agendas same as anyone else, and it's natural their AI would reflect their interests over the interests of other companies.

"""Similarly, AI chatbots like Gemini spit out barely concealed radical rhetoric in response to questions about America's founding fathers, principles, and even dates," the Missouri attorney general's office claims, without providing any examples of what it means.""

This one?

https://x.com/deedydas/status/1759786243519615169?s=20

I liked the black nazi solder one.

"created the content and it does ^ violate some law, they cannot.

And creating opinion content that does ^ conform"

The author LEFT OUT the word "not" TWICE (^) in the passage above, turning both statements upside down.

No loss. It's all just gibberish anyway.

> If they created the content and it violates some law, they can be held liable. If they created the content and it does violate some law, they cannot.

Looks like the AI hallucinated and no one paid attention in editing, creating two consecutive sentences saying the exact opposite. Fortunately I understand Section 230 and where it would apply in different situations, but it's better if they just publish accurate statements in the first place.