The 3 Myths Supporting NIH Funding

Innovation, basic research, and economic growth do not rely on federal science funding.

The Trump administration's proposal to cut National Institutes of Health (NIH) indirect funds has been widely attacked, with heated claims that it will annihilate biomedical research in the United States. Leading with a picture of a 12-year-old child with muscular dystrophy, Shetal Shah, a neonatology professor, argued in the Honolulu Star-Advertiser that the cuts would "hobble" vital medical research, and a Time magazine interviewee went as far as to call it the "apocalypse" of U.S. science writ large. While the funding cut has been blocked by federal judges for now, the future fiscal status of the NIH, and of the university researchers who depend on it, remains uncertain.

Pundits discussing the cuts nearly universally agree that federally funded science is a crucial component of lifesaving medical therapies, innovative technology, and the ongoing status of the U.S. as a scientific superpower. These assertions are repeated ad nauseam despite history telling a different story. Advocates for maintaining the status quo of public funding have been active in the media, promulgating three core myths, but none of their key assertions withstands scrutiny against the historical record.

Myth No. 1: The U.S. Cannot Be an Innovative Research Leader Without Strong Public Funding

Shortly after the funding cuts were announced, a member of the University of California, Santa Cruz's informatics team wrote, "American research institutions have historically been at the forefront of medical and technological advancements. This proposal is a fast track to reversing that. Other countries that actually invest in research will surge ahead while U.S. institutions struggle to keep the lights on."

These arguments make sense only if the following assumptions are accurate: that the amount of NIH spending is important for scientific achievement, and that publicly funded academic research is the primary factor needed for innovation. These assumptions don't stand up under scrutiny.

Like many things in U.S. research, the NIH owes its growth to the spoils of war. The Ransdell Act of 1930, which established NIH research fellowships, was the product of World War I chemists looking for funding to apply their knowledge to medical issues. The subsequent Public Health Service Act of 1944 arose from proponents such as Vannevar Bush, who ran the Office of Scientific Research and Development during World War II and wanted to ensure that the massive federal science funding of the war years would continue through peacetime. As a result of these efforts, and although U.S. science had previously been mostly laissez faire, the NIH budget expanded from $8 million in 1947 to more than $1 billion in 1966. If federal funding is a prerequisite for scientific innovation, we would expect a severe lack of the latter in medicine prior to the expanded federal role.

But in the early 20th century, philanthropists were already funding the type of research the NIH later did, with impressive yields. Among them was the Rockefeller Foundation, whose research led to the discovery of a vaccine for yellow fever, advanced the understanding of cell biology, and assisted the efforts to mass produce penicillin. For this latter effort, U.S. pharmaceutical firms proved to be the key component to produce enough penicillin to sustain the war efforts and help save the lives of wounded soldiers.

Before the NIH began distributing federal money, U.S. biomedical science was not hurting for private support. While some insist that increased government spending only strengthened a good foundation, the public sector's role in some canonical research achievements is often overstated. The Human Genome Project is a good example. The NIH correctly asserts this groundbreaking international collaboration "changed the face of the scientific workforce," but it was only made possible by the automatic gene sequencer developed by Leroy Hood, who noted the invention received "some of the worst scores the NIH had ever given."

It was only through the generosity of Sol Price—the founder of warehouse superstores—that the technology came to fruition and the human genome was finally sequenced. Similar stories of private generosity in place of government grants can be found for stem cell research.

As for the importance of publicly supported academics, consider the story of mRNA vaccine development. The NIH timeline implies that smart government investment into years of HIV research was the key to this lifesaving technology, but the chief innovator of the eventual product, Katalin Karikó, was roadblocked for years in academia and even demoted for her lack of grant acquisition. She would later leave the university setting and work for BioNTech in the private sector to create the Pfizer vaccine. Her story is conspicuously absent from the NIH timeline of events.

Karikó's experience suggests that public funding for academic science is not necessarily the crucial factor for scientific advancement and innovation. In practice, the private sector drives new technologies. Drug development, for instance, would be impossible without the power of the pharmaceutical industry to fund new chemical entities. The authors of a 2016 survey of some of the most transformational drug therapies, published in the journal Therapeutic Innovation & Regulatory Science, wrote that "without private investment in the applied sciences there would be no return on public investment in basic science."

A subsequent analysis in 2022 in the same journal found that only privately funded projects actually hit the market as Food and Drug Administration–approved therapies and that public funding had a possible negative effect on achieving approval. Note the cautious interpretation by the authors: "Our study results underscore that the development of basic discoveries requires substantial additional investments, partnerships, and the shouldering of financial risk by the private sector if therapies are to materialize as FDA-approved medicine. Our finding of a potentially negative relationship between public funding and the likelihood that a therapy receives FDA approval requires additional study" (emphasis added).

If it cannot deliver therapies, federally funded science should at least be producing noteworthy and reproducible science. A writer for Psychology Today noted this month that the federal government is the largest funder of psychological research. But should we consider that a good thing? Psychology is a research field where as few as one-third of the findings in a given set of publications can be replicated, which calls into question the trustworthiness of the nonreproducible work. Outside psychology, it is not clear that federally funded research is producing our most noteworthy scientists either: A large majority of researchers authoring the most highly cited papers in the biomedical research field operate without NIH funding.

Myth No. 2: Private Firms Will Not Fund Basic Research

Like many articles discussing the potential NIH cuts, the National Education Association's (NEA) take was breathless and hyperbolic, best summed up by the isolated quote, "People will die." Amid such claims, the NEA chose to promote another popular myth: Without public funds, basic research efforts will suffer. As the author writes, "Corporations also don't care about basic science, also known as fundamental or bench science….If the federal government doesn't fund the professors doing this work, it doesn't happen."

This argument originates from the works of the renowned economist Kenneth Arrow. After the launch of Sputnik, the U.S. government commissioned Arrow to come up with an economic model to support the public funding of science, given concerns that the Soviet Union would overtake the U.S. technologically. Arrow penned a now canonical paper in 1962 titled "Economic Welfare and the Allocation of Resources for Invention," where he argued the market would fail to fund basic research, and therefore public funding of science was necessary.

Arrow's theoretical assertion, and that of his modern acolytes, is completely at odds with the literature assessing how much basic research the private sector actually performs. Nathan Rosenberg, in his 1989 paper "Why do firms do basic research (with their own money)?", found that corporations invest quite handsomely in basic science, which allows them to be a "first mover" along the learning curve of scientific innovation. Work by Edwin Mansfield in The American Economic Review in 1980 also concluded that companies' profits increase along with greater investment in basic research. A later study in Research Policy in 2012 demonstrated that Mansfield's findings remained true, reporting greater profits in the technological sector for firms investing in basic science.

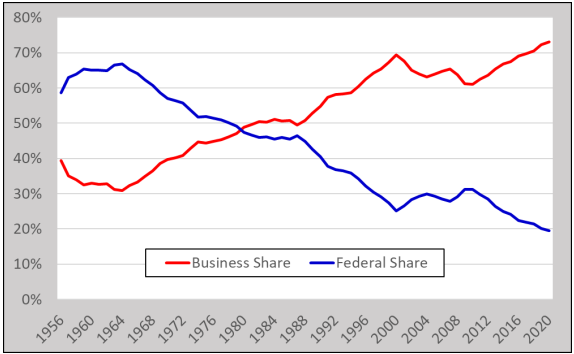

Contrary to the myth, companies do have motivation to invest in basic research, and they are clearly responding to that incentive. The National Science Foundation's own data confirm this—showing that as federal support for basic research has plateaued and declined, business and other nonfederal support for basic research has increased. This trend is consistent with the last 65 years of research and development investment patterns. In the "golden era" of NIH funding in the later 1950s and through the 1960s, large increases in federal R&D funding were accompanied by a decline in private contributions, as reported by the Congressional Research Service (CRS) in the figure below. When federal funds became scarcer, the business share started to rise again.

In short, if federal funds do contract, basic research will be well taken care of in the hands of the private sector.

Myth No. 3: Cutting Public Funding for Science Will Devastate the Economy

Whenever national science budget cuts are proposed, academics are quick to claim the cuts will have devastating economic effects. Within a few weeks of the NIH budget announcement, University of Arizona astronomy professor Chris Impey asserted that federal cuts would have "negative impacts on the economy" and that a "substantial part of U.S. prosperity after World War II was due to the country's investment in science and technology." The aforementioned Shah wrote, "With these losses, we risk losing both scientific progress and economic vitality."

The most thorough review of the relationship between scientific funding and economics does not support the link between government scientific research money and positive macroeconomic outcomes. Terence Kealey, in his 1996 book The Economic Laws of Scientific Research, examined centuries of international trends and found that countries that operated under laissez faire science policies enjoyed greater economic growth and technological innovation than those that chose dirigisme, or state control of economic and social matters. Britain had little to no government support for science in the 18th and 19th centuries, and yet it enjoyed the agricultural and industrial revolutions. France, on the other hand, had promoted government funding of science since the late 17th century and ended up far less prosperous.

In the United States in the 19th century, the government did have a scant number of offices for mission-oriented research such as the U.S. Office of Coast Survey, but by and large, science was not widely supported by the government. Despite various government research offices growing at the beginning of the 20th century, Kealey found the U.S. private sector was still providing just over 76 percent of R&D funds as late as 1940. As we have seen, this changed in the 1950s with a massive expansion in public funding.

If economic prosperity results from a country's investment in science, post-1950s economic trends should vindicate this assertion. They do not. After impressive growth of real gross domestic product (GDP) in the immediate postwar years, annual growth of real GDP was fairly static during the "Golden Era" of NIH funding. The trend of real GDP between the late 1940s and early 1980s confirms that growth proceeded at roughly the same rate, with little deviation, during this time. We should be thankful the economic effect of U.S. public research funding was only neutral, as other countries were not so lucky. Britain's Labour Party in the 1960s, ignoring the historic success of the country's laissez faire approach, believed government funding for science would be the backbone of prosperity, but by 1976 the British government was already applying for a loan from the International Monetary Fund to cope with the country's poor economic conditions.

The greatest counterargument to the economic myth is, ironically, a series of audits performed by the government itself. The Bureau of Labor Statistics (BLS) was assessing returns on R&D investments as far back as 1989, and reported, "the far more dominant pattern is that federally financed expenditures have no discernable effect on productivity growth." The BLS reviewed the literature again in 2007 and found R&D had a large rate of return—but only if it was privately funded. "Returns to many forms of publicly financed R&D are near zero," the BLS reported.

Internationally, the findings on R&D have been the same. In 1999, the Organization for Economic Cooperation and Development sought to understand why some countries, particularly the United States, had enjoyed such marked economic growth while others had not. Its findings, published in 2003, showed positive returns for private R&D, but the organization "could find no clear-cut relationship between public R&D activities and growth."

Despite the readily available mountain of literature showing publicly funded science has no clear economic benefit, the NIH funding cuts were followed by a myriad of reports that such action would threaten "economic stability." Some myths are so pervasive that no matter the evidence, someone is always ready to revive them yet again.
