We Absolutely Do Not Need an FDA for AI

If our best and brightest technologists and theorists are struggling to see the way forward for AI, what makes anyone think politicians are going to get there first?

I don't know whether artificial intelligence (AI) will give us a 4-hour workweek, write all of our code and emails, and drive our cars—or whether it will destroy our economy and our grasp on reality, fire our nukes, and then turn us all into gray goo. Possibly all of the above. But I'm supremely confident about one thing: No one else knows either.

November saw the public airing of some very dirty laundry at OpenAI, the artificial intelligence research organization that brought us ChatGPT, when the board abruptly announced the dismissal of CEO Sam Altman. What followed was a nerd game of thrones (assuming robots are nerdier than dragons, a debatable proposition) that consisted of a quick parade of three CEOs and ended with Altman back in charge. The shenanigans highlighted the many axes on which even the best-informed, most plugged-in AI experts disagree. Is AI a big deal, or the biggest deal? Do we owe it to future generations to pump the brakes or to smash the accelerator? Can the general public be trusted with this tech? And—the question that seems to have powered more of the recent upheaval than anything else—who the hell is in charge here?

OpenAI had a somewhat novel corporate structure, in which a nonprofit board tasked with keeping the best interests of humanity in mind sat on top of a for-profit entity with Microsoft as a significant investor. This is what happens when effective altruism and ESG do shrooms together while rolling around in a few billion dollars.

The events of November suggest that this particular setup wasn't the right approach. Altman and his new board say they're working on the next iteration of governance alongside the next iteration of their AI chatbot. Meanwhile, OpenAI has numerous competitors, including Google's Bard, Meta's Llama, Anthropic's Claude, and something Elon Musk built in his basement called Grok. Several of them differentiate themselves by emphasizing different combinations of safety, profitability, and speed.

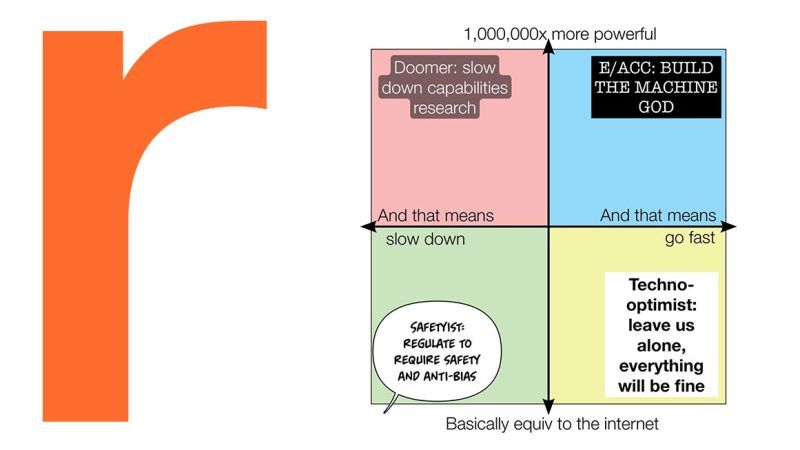

Labels for the factions proliferate. The e/acc crowd wants to "build the machine god." Techno-optimist Marc Andreessen declared in a manifesto that "we believe intelligence is in an upward spiral—first, as more smart people around the world are recruited into the techno-capital machine; second, as people form symbiotic relationships with machines into new cybernetic systems such as companies and networks; third, as Artificial Intelligence ramps up the capabilities of our machines and ourselves." Meanwhile, Snoop Dogg channeled AI pioneer-turned-doomer Geoffrey Hinton when he said on a recent podcast: "Then I heard the old dude that created AI saying, 'This is not safe 'cause the AIs got their own mind and these motherfuckers gonna start doing their own shit.' And I'm like, 'Is we in a fucking movie right now or what?'" (Hinton told Wired, "Snoop gets it.") And the safetyists just keep shouting the word guardrails. (Emmett Shear, who was briefly tapped for the OpenAI CEO spot, helpfully tweeted this faction compass for the uninitiated.)

wake up babe, AI faction compass just became more relevant pic.twitter.com/MwYOLedYxV

— Emmett Shear (@eshear) November 18, 2023

If even our best and brightest technologists and theorists are struggling to see the way forward for AI, what makes anyone think that the power elite in Washington, D.C., and state capitals are going to get there first?

When the release of ChatGPT, built on OpenAI's GPT-3.5 model, triggered an arms race about a year ago, politicians and regulators collectively swiveled their heads toward AI like a pack of prairie dogs.

State legislators introduced 191 AI-related bills this year, according to a September report from the software industry group BSA. That's a 440 percent increase from the number of AI-related bills introduced in 2022.

In a May hearing of the Senate Judiciary Subcommittee on Privacy, Technology, and the Law, at which Altman testified, senators and witnesses cited the Food and Drug Administration and the Nuclear Regulatory Commission as models for a new AI agency, with Altman declaring the latter "a great analogy" for what is needed.

Sens. Richard Blumenthal (D–Conn.) and Josh Hawley (R–Mo.) released a regulatory framework that includes a new AI regulatory agency, licensing requirements, increased liability for developers, and many more mandates. A bill from Sens. John Thune (R–S.D.) and Amy Klobuchar (D–Minn.) is softer and more bipartisan, but would still represent a huge new regulatory effort. And President Joe Biden announced a sweeping executive order on AI in October.

But "America did not have a Federal Internet Agency or National Software Bureau for the digital revolution," as Adam Thierer has written for the R Street Institute, "and it does not need a Department of AI now."

Aside from the usual risk of throttling innovation, there is the concern about regulatory capture. The industry has a handful of major players with billions invested and a huge head start, and they would benefit from regulations written with their input. Though he has rightly voiced worries about "what happens to countries that try to overregulate tech," Altman has also called concerns about regulatory capture a "transparently, intellectually dishonest response." More importantly, he has said: "No one person should be trusted here….If this really works, it's quite a powerful technology, and you should not trust one company and certainly not one person." Nor should we trust our politicians.

One silver lining: While legislators try to figure out their priorities on AI, other tech regulation has fallen by the wayside. Proposals to regulate privacy, self-driving cars, and social media have been buried by the wave of new AI bills and the interest in the sexy new tech menace.

One thing is clear: We are not in a Jurassic Park situation. If anything, we are experiencing the opposite of Jeff Goldblum's famous line about scientists who "were so preoccupied with whether or not they could, they didn't stop to think if they should." The most prominent people in AI seem to spend most of their time asking if they should. It's a good question. There's just no reason to think politicians or bureaucrats will do a good job answering it.
