Facebook Said My Article Was 'False Information.' Now the Fact-Checkers Admit They Were Wrong.

While this is a problem, it's not one that scrapping Section 230 would solve.

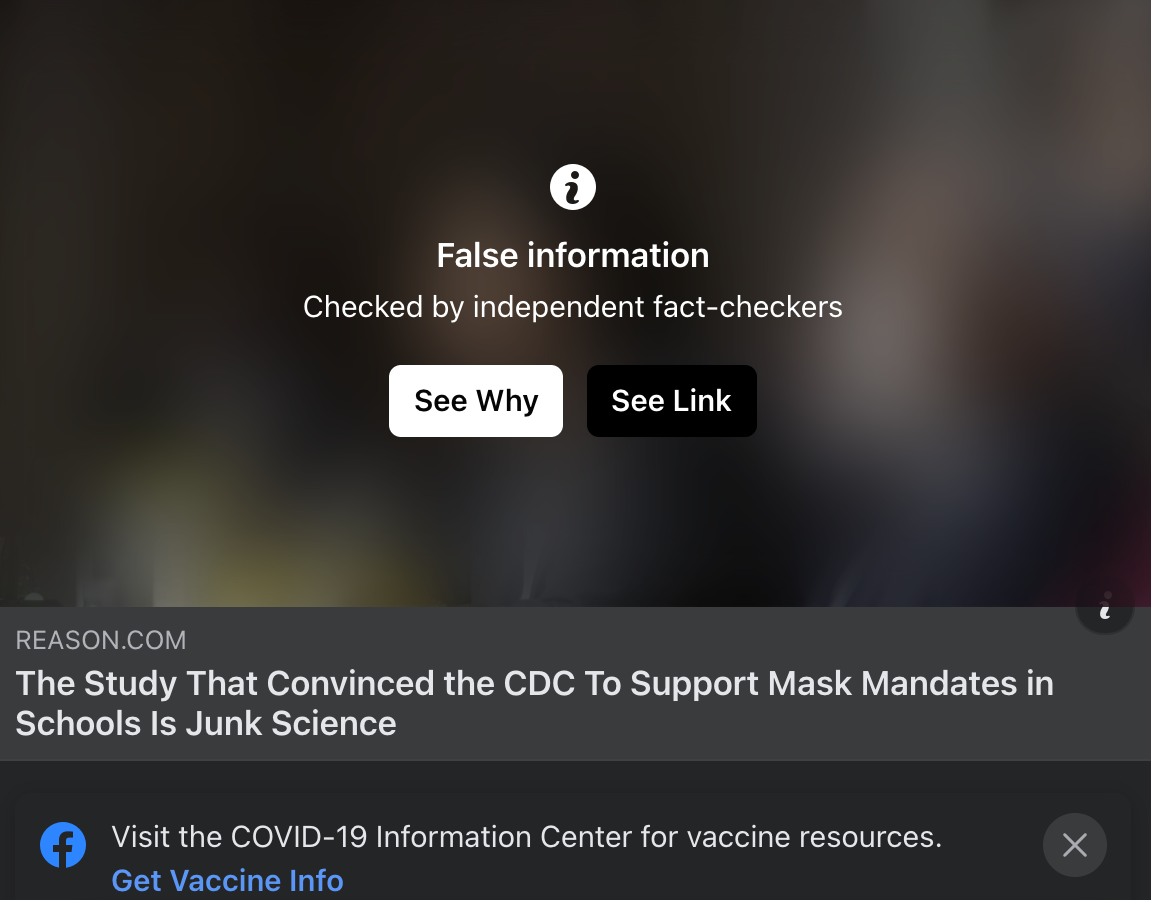

On Monday, I received a rather curious notification on Facebook. A friend alerted me that when she tried to share a recent article of mine, the social media site automatically blurred the accompanying image, replacing it with the ominous declaration that the link contained "false information checked by independent fact-checkers."

The article in question was this one: "The Study That Convinced the CDC To Support Mask Mandates in Schools Is Junk Science." As the Reason Roundup daily newsletter (subscribe today!), it contained information on several other subjects as well, but Facebook made it fairly clear that the fact-checkers were taking issue with the part about masks in schools. Attempting to share the article on Facebook prompted a warning message to appear. This message redirected to an article by Science Feedback, an official Facebook fact-checking organization, which asserted that "masking can help limit transmission of SARS-CoV-2 in schools" and that it was false to say that "there's no science behind masks on kids."

Since I had never made this claim, it was odd to see it fact-checked. Indeed, the purveyor of false information here was Science Feedback, which had given people the erroneous impression that my article said something other than what I had actually written.

The source for the article was a recent piece from The Atlantic's David Zweig. My claims were not really unique at all; rather, I had summarized impressive, original research performed by Zweig that demonstrated that the Centers for Disease Control and Prevention (CDC) had relied on a flawed study to conclude that school mask mandates were beneficial.

"Masks may well help prevent the spread of COVID, [some experts] told me, and there may well be contexts in which they should be required in schools," wrote Zweig. "But the data being touted by the CDC—which showed a dramatic more-than-tripling of risk for unmasked students—ought to be excluded from this debate."

According to Zweig, the study in question—which was conducted in Arizona—had all sorts of problems. Researchers did not verify that the schools comprising the data set were even open during the time period in question; they ignored important factors like varying vaccination rates; and they counted outbreaks instead of cases. The study's subsequent finding—that schools without mask mandates had far worse COVID-19 outcomes than schools with mask mandates—should not have been so readily believed by the nation's top pandemic policy makers.

That's it. Neither Zweig's article nor mine makes the claim that masks don't work on kids, or that masks fail to limit transmission in schools. Both addressed a single study that concerned mask mandates.

Intriguingly, Zweig's article did not receive the same "false information" label. When I attempted to share it on Facebook, I received no warning. My Reason article, on the other hand, generated the following disclaimer from the social media site: "Pages and websites that repeatedly publish or share false news will see their overall distribution reduced and be restricted in other ways."

Facebook relies on more than 80 third-party organizations to perform fact-checking functions for the site. These were chosen by the company to serve in those roles; they do not have the power to remove content, but once they have reviewed a post and rated it as false, the social media site will automatically deprioritize it so that fewer users encounter it in their feeds. This gives the fact-checkers considerable power. They also handle the appeals internally.

Their decisions can be controversial. John Stossel, host of Stossel TV and a contributor for Reason, has accused Facebook fact-checkers of "stifling open debate." Stossel has also landed himself on the wrong side of "false information" labels: Climate Feedback, a subgroup within Science Feedback, labeled two of his climate change–related videos as "misleading" and "partly false." Stossel's situation is similar to mine in that the fact-checker attributed to him a claim—"forest fires are caused by poor management, not by climate change," in this case—that his video never actually made.

"In my video arguing that government mismanagement fueled California's wildfires, I acknowledged that climate change played a role," Stossel explained in a subsequent video summarizing his side of the dispute.

Stossel eventually succeeded in getting two Climate Feedback editors to admit that they had not watched his video—and after they had watched the video, they agreed with him that it was not misleading, having noted that both government mismanagement and climate change have contributed to forest fires. But Climate Feedback still did not "correct their smear," according to Stossel.

I've had better luck. I contacted both Facebook and Science Feedback, seeking clarification and correction. On Tuesday, Science Feedback admitted that they had flagged my article erroneously and that they would remove the "false information" label.

"We have taken another look at the Reason article and confirm that the rating was applied in error to this article," they wrote. "The flag has been removed. We apologize for the mistake."

I asked for additional details, and received this note from Ayobami Olugbemiga, a policy communications manager at Facebook.

"Thanks for reaching out and appealing directly to Science Feedback," he wrote. "As you know, our fact-checking partners independently review and rate content on our apps and are responsible for processing your appeal."

Stossel, it should be noted, is currently suing Facebook, Science Feedback, and Climate Feedback. He acknowledges that a private company has the right to ban, take down, or deprioritize content as it sees fit. Moreover, different individuals and organizations can disagree about basic factual questions like the science of climate change. But he says that in attributing to him a direct quotation that he never uttered, the fact-checkers committed defamation.

"This case presents a simple question: do Facebook and its vendors defame a user who posts factually accurate content, when they publicly announce that the content failed a 'fact-check' and is 'partly false,' and by attributing to the user a false claim that he never made?" wrote Stossel's attorneys in the lawsuit. "The answer, of course, is yes."

This is a complicated issue because social media companies and other websites are typically immune from defamation lawsuits aimed at the speech of other actors on the platforms under a federal statute known as Section 230. This statute does not treat all speech that occurs on Facebook as Facebook's speech: One user can sue another user for libel, but they generally can't sue Facebook. There is an exception, of course, for the company's own speech—it would be possible to sue Facebook over a press release, or online statement made by an employee. Facebook has claimed that its third-party fact-checkers are independent and distinct, though the company has acknowledged that it does pay them.

There are many Republicans and Democrats who want to scrap Section 230 entirely: President Joe Biden, former President Donald Trump, Sen. Josh Hawley (R–Mo.), and Sen. Elizabeth Warren (D–Mass.) have all denounced the statute's protections for Big Tech companies. Getting rid of Section 230 wouldn't solve the problem of overly aggressive fact-checking and content moderation, though. On the contrary, it could very well exacerbate it. The more liability Facebook is subjected to, the less permissive it is likely to be.

But that doesn't mean the status quo is particularly satisfying. It's good that the fact-checker reversed course in my case, but needless to say, Facebook should revisit its formal, contractual relationship with an organization that routinely misquotes the people it scrutinizes.
