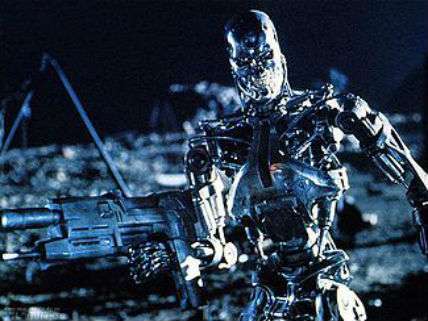

Killer Robots: Protectors of Human Rights?

Why a ban on the development of lethal autonomous weapons now is premature.

"States should adopt an international, legally binding instrument that prohibits the development, production, and use of fully autonomous weapons," declared Human Rights Watch (HRW) and Harvard Law School's International Human Rights Clinic (IHRC) in an April statement. The two groups issued their report, "Killer Robots and the Concept of Meaningful Human Control," as experts in weapons and international human rights met in Geneva to consider what should be done about lethal autonomous weapon systems (LAWS). It was their third meeting, conducted under the auspices of the Convention on Conventional Weapons.

What is a lethal autonomous weapons system? Characterizations vary, but the U.S. definition provides a good starting point: "A weapon system that, once activated, can select and engage targets without further intervention by a human operator." Weapons system experts typically distinguish among technologies where there is a "human in the loop" (semi-autonomous systems where a human being controls the technology as it operates), a "human on the loop" (human-supervised autonomous systems where a person can intervene and alter or terminate operations), or a "human out of the loop" (fully autonomous systems that operate independent of human control).

The authors of that April statement want to ban fully autonomous systems, because they believe a requirement to maintain human control over the use of weapons is needed to "protect the dignity of human life, facilitate compliance with international humanitarian and human rights law, and promote accountability for unlawful acts."

HRW and the IHRC argue that killer robots would necessarily "deprive people of their inherent dignity." The core argument here is that inanimate machines cannot understand the value of individual life and the significance of its loss, whereas soldiers can weigh "ethical and unquantifiable factors" when making such decisions. In addition, HRW and the IHRC believe that LAWS could not comply with the requirements of international human rights law, specifically the obligations to use force proportionally and to distinguish civilians from combatants. They further claim that killer robots, unlike soldiers and their commanders, could not be held accountable and punished for illegal acts.

Yet it may well be the case that killer robots could better protect human rights during combat than soldiers using conventional weapons do now, according to Temple University law professor Duncan Hollis.

Hollis notes that under international human rights law, states must conduct a legal review to ensure that any armaments, including autonomous lethal weapons, are not unlawful per se—that is, they are neither indiscriminate nor the cause of unnecessary suffering. To be lawful, a weapon must be capable of distinguishing between civilians and combatants. Also, it must not by its very nature cause unnecessary suffering or superfluous injury. A weapon would also be unlawful if its deleterious effects cannot be controlled.

Just such considerations have persuaded most governments to sign treaties outlawing the use of such indiscriminate, needlessly cruel, and uncontrolled weapons as antipersonnel land mines and chemical and biological agents. If killer robots could better discriminate between combatants and civilians and reduce the amount of suffering experienced by people caught up in battle, then they would not be per se illegal.

Could killer robots meet these international human rights standards? Ronald Arkin, a roboticist at the Georgia Institute of Technology, thinks they could. In fact, he argues that LAWS could have significant ethical advantages over human combatants. For example, killer robots do not need to protect themselves, and so could refrain from striking when in doubt about whether a target is a civilian or a combatant. Warbots, Arkin contends, could assume "far more risk on behalf of noncombatants than human warfighters are capable of, to assess hostility and hostile intent, while assuming a 'First do no harm' rather than 'Shoot first and ask questions later' stance."

LAWS, he suggests, would also employ superior sensor arrays, enabling them to make better battlefield observations. They would not make errors based on emotions—unlike soldiers, who experience fear, fatigue, and anger. They could integrate and evaluate far more information faster in real time than could human soldiers. And they could objectively monitor the ethical behavior of all parties on the battlefield and report any infractions.

Under the Geneva Convention, the principle of proportionality prohibits "an attack which may be expected to cause incidental loss of civilian life, injury to civilians, damage to civilian objects, or a combination thereof, which would be excessive in relation to the concrete and direct military advantage anticipated." Human soldiers may take actions where they knowingly risk, but do not intend, harm to noncombatants. In order to meet the requirement of proportionality, autonomous weapons could be designed to be conservative in their targeting choices; when in doubt, don't attack.

In justifying their call for a ban, the HRW and IHRC argue that soulless warbots cannot be held responsible for their actions, creating a morally unbridgeable "accountability gap." Under the current laws of warfare, a commander is held responsible for an unreasonable failure to prevent a subordinate's violations of international human rights laws. The organizations that oppose the deployment of autonomous weapons argue that a commander or operator of a LAWS "could not be held directly liable for a fully autonomous weapon's unlawful actions because the robot would have operated independently." Hollis counters that since states and the people who represent them are supposed to be held accountable when the armed forces they command commit war crimes, they could similarly be held accountable for unleashing robots that violate human rights.

Peter Margulies, a professor of law at Roger Williams University, makes a similar argument. Holding commanders responsible for the actions of lethal autonomous weapons systems, he writes, is "a logical refinement of current law, since it imposes liability on an individual with power and access to information who benefits most concretely from the [system's] capabilities in war-fighting."

In order to augment command responsibility for warbots' possible human rights infractions, Margulies suggests that states and militaries create a separate lethal autonomous weapons command. The officers who head up this dedicated command would be required to have a deep understanding of the limitations of the killer robots under their control. In ambiguous situations, killer robots should also have the capability of requesting a review of their proposed targets by their human commanders.

"The status quo is unacceptable with respect to noncombatant deaths," Arkin argues cogently. "It may be possible to save noncombatant lives through the use of this technology—if done correctly—and these efforts should not be prematurely terminated by a preemptive ban."

"For example, killer robots do not need to protect themselves, and so could refrain from striking when in doubt about whether a target is a civilian or a combatant."

Drones also do not need to protect themselves but seem to have a "blowing up weddings" problem.

I'm not sold on the idea that letting the government deploy robots that can target and kill people without any human control is a good thing.

That doesn't seem a problem of technology, but of deliberate policy.

Removing the person in the loop won't make it better and has a huge potential to make it worse.

People *occasionally* refuse to shoot without a clear target and sometimes they blow the whistle on illegal/immoral programs.

People *occasionally* refuse to shoot without a clear target...

True. But they also sometimes go into pure self-preservation mode, where killing indiscriminately to preserve their own life and limb becomes their MO. This is something that, at least ideally, could be forgone with robot warriors.

If it's just personal fear - why not tele-operated ground drones?

http://www.deviantart.com/art/.....-393893704

Not necessarily a bad thing. But, I think it would run into the same argument you and John play with below. If we can safely make war, why refrain from it?

As with any tech claim by Bailey, it depends on whether the ability to program the parameters and the device's response to the environment lives up to the most optimistic predictions. Unfortunately, my skepticism about this is only a little less than what I have for the accuracy of a CBO budget estimate.

Okay. Now that is an argument I can support. I don't think there's anything wrong with the technology. I just don't think we're anywhere near there yet.

Apparently he hasn't seen Robocop.

"Dick, I'm very disappointed."

https://www.youtube.com/watch?v=mrXfh4hENKs

I'm not sold on the idea that letting the government deploy robots that can target and kill people without any human control is a good thing.

Because letting them do it with human control is so much more humane?

The people who keep putting forth the "blowing up weddings" meme have a truthfulness problem.

That's not a problem that's a fact of war.

A preview button? Why I can't even.

When you look beneath the hood, these "human rights activists" rarely turn out to be particularly interested in human rights. What galls them isn't abuse of innocents, but the disequilibrium of power afforded by the technology. It isn't "sporting" (and yes, I've actually heard a leftist argue precisely this). Never mind the platitudes they constantly offer about "war isn't a game or a John Wayne movie". As long as both sides are equally at risk of death, it doesn't matter who is the aggressor.

My suspicion is that robot warriors could prove much more respectful of human life than live soldiers. They're immune from the fear that makes humans at war apt to take shortcuts and they're immune to the desire for revenge which can lead soldiers to going off the handle.

Those are good reasons to deploy them.

Counter reasons include: Machines are cheap, humans are expensive. The cheaper war is, the more likely politicians are to resort to it. You saw how Obama tried to spin Libya? Oh, it's perfectly OK to do this because *they can't shoot back* at us so no American lives are at stake.

I have always thought there was a real moral issue to a war that incurs no casualties. It is not that I think casualties are good. I don't. It is that you shouldn't use the excuse of "we can do this with few or no casualties" as part of the calculus for determining if you should go to war. If a cause is not worth dying for, then how is it worth killing for? I don't think we should be killing people for reasons that we don't think are worth dying for.

You and me pal. But we'll never be the ones issuing orders to the killbots.

I wouldn't be too sure about that. Machine intelligence, at least as it stands now, is developed through the use of algorithms. I suspect the algorithm for a battle bot will be fairly similar to that of any other kind of autonomous machine. Which means you can build such a thing in your garage. Which leads to interesting implications when it comes to the balance of power between the individual and the government.

This is one of the big reasons why ethicists are troubled by "Non-Lethal" policing mechanisms. If a police force can break up a massive protest with a phaser set to stun, they are more likely to use them on even semi-peaceful protestors.

Yes, I agree with that very much. Making war too easy is a big danger. It's bad enough that the politicians who make decisions to go to war rarely have much to lose by making the decision. This would just make those decisions even more consequence-free.

OTOH if both sides could field robot killers that would selectively target the decision-makers, that would add a really interesting new dynamic to the conflict.

I won't say the response to their development would be desirable. However, on a certain level, not fighting a war in such a way to maximize the safety and well being of your own people strikes me as downright horrific. What kind of barbaric monster would say, "Yeah, there's this technology we could employ that would mean thousands of our young men and women wouldn't have to die or spend the rest of their life as a cripple, but I'm not going use it, because I don't want to make war too safe for us."?

I think that depends on what kind of war and what kind of army you have. If we only ever fought defensive wars, I wouldn't be bothered so much. If someone is trying to invade or something, then yeah, you do whatever you can to defend yourself. But with a lot of the shit we have been involved in lately it would make it way easier for politicians to get away with unpopular military adventures.

They already do that.

This is certainly a valid argument. My (rather cynical) counterargument is that war is *already* cheap for the TOP MEN exercising Smart Power. These technological advances may make war safer/cheaper for the grunts who are actually getting shot at, but their opinions don't exactly matter to the powers that be.

Except that we won't hold a monopoly on this technology. I suspect creating battle bots will be cheaper than training and fielding a traditional army. Smaller states will benefit from this cost reduction. Then again I can see asymmetrical bots being created that look like, oh I don't know, street sweeping robots or even look like people. Then we're back to square one.

A better alternative is to evolve past the need for governments and remove the threat of war from the human race forever.

In any case, states and commanders who choose to develop and deploy such weapons would be responsible for any violations of the laws of war that they might perpetrate.

Yes. And that is why no commander in their right mind and with any respect for international law would ever deploy such a thing. Doing so would be taking responsibility for the actions of a machine over which they have no control once launched.

One of two things is going to happen, either international law is going to recognize the "but the machine malfunctioned" defense or these machines will only be deployed by countries who have no regard for international law.

Beyond the legal issues, I cannot see these things ever being politically palatable for anything but truly outlaw nations. We live in the world of the strategic private, where a small group of unsupervised sadists on the night shift at Abu Ghraib can do lasting strategic damage to the United States. Given that, only a complete lunatic would deploy killer autonomous robots in defense of the United States. "But it is overall better than soldiers" is not going to undo the public relations disaster and resulting strategic damage to the country when one of these things malfunctions and kills a bunch of people. Sorry Ron, but "the US killer robots are murdering civilians" is worse than "US private murdered civilians". You can at least hold the private accountable and show you don't tolerate that behavior. What are you going to do with a robot? Court-martial it? Put it in front of a firing squad?

You could prosecute the commander who deployed it. But then no commander in his right mind would ever want to deploy one.

Basically. And there's no telling what would happen the first time these things come in contact with the enemy. Military history is full of massive fuckups where great ideas don't survive contact with an opposing army, so I would not want to be in the vicinity if one of these things came wandering into my town the first time it was deployed in a conflict zone.

Yes. Friendly fire is an enormous issue. It is really difficult to just keep from killing your own people let alone actually kill the enemy.

Remember, they have not yet built self-driving cars that can deal with bad roads and the unpredictability of other human drivers. And now they are going to build robots that can function in the chaos of combat?

I swear to God Ron Bailey will believe anything if it comes wrapped in some new technology.

Switching to 100 percent self driving cars would save tens of thousands of lives per year, even with current technology.

I'm not sure you understand the difference between machine and organic intelligence. Machine intelligence can be programmed to do just what the author of the article said: err on the side of caution and allow itself to be destroyed rather than kill a noncombatant. Compare that with something like Abu Ghraib or My Lai. While Abu Ghraib probably had more to do with things like the Stanford Prison Experiment than war crimes, My Lai was perpetrated by guys who just had too much. That doesn't happen with machines.

I disagree, John. You've seen how this administration has handled the maturation of drone technology as a weapons platform - it's been full steam ahead with deploying it. And they pop people like they're playing a videogame.

Guys we wouldn't have even bothered going after 10 years ago are getting droned now - along with anyone who happens to be in the vicinity when we locate them.

Congress has abdicated its warmaking authority.

Autonomous weapon systems are the perfect tool for war-hungry politicians who don't want to have to deal with *responsibility*. Everything that can be kept secret will be. Anything that gets out that dirties your reputation? Well, it's not your fault, the bots were poorly programmed. You had good intentions and the supplier let you down (hell, Obama's been blaming Libya on *David Cameron* FFS). But if you're given some more oversight authority and a larger budget, you can ensure that such failures never happen again.

I see your point. Perhaps I am a bit optimistic. Regardless, I think Ron's claim that these things are in any way a good idea is preposterous.

Humans are still the ones "pulling the trigger" when it comes to drones. Now you may have a point if the autonomous robot's programming is closed source. Open source would be a better choice. That way everyone can see the "rules" that guide the bot's decision making process and open source would be far more resistant than closed source to hacking.

I cannot see these things ever being politically palatable for anything but truly outlaw nations.

Maybe at first. But, if they can be shown to work, not using them will become politically unpalatable. Our two countries go to war. Your country's youth are coming home in body bags. Mine are at home eating cheeseburgers, drinking beer, and playing footsie with nubile young coeds. I'm either going to win the war or you're going to adopt the technology.

Whoever wants to fight a 'fair' war is a moron.

The tree of liberty must be refreshed from time to time with the blood of patriots & tyrants, and the various industrial fluids of FREEBOTS. It is its natural manure.

1. Protecting the dignity of human life - complete bullshit, this is something spouted by 'bioethicists'. Dead is dead. Whether some dude aimed a crosshair and pressed a button or not, the level of dignity your broken and burned corpse will have is the same.

2. Facilitate compliance with . . - more bullshit. We can't facilitate compliance *right now* with people giving the orders.

3. Promote accountability - again, there's no accountability *now*.

Yes there is. There is a lot of accountability in western armies. There is however no accountability among non western armies. In fact, the law of war types have created a system where it pays to be a guerrilla or a terrorist who hides in the civilian population using them as shields rather than a uniformed soldier who fights a force on force battle.

I can assure you, there is an appearance of accountability, but there really is not. They hardly hold the correct people accountable, the same as the politicians.

There are more than a few people sitting in Leavenworth who would beg to differ with that assertion.

There are a couple of Generals who should be but didn't even have to face trial.

Oh yeah, there's a lot of people locked up for crimes...just enough to make you think there's accountability. The people who really should be certainly aren't. They get off with a nice retirement and are quietly put out to pasture.

There's no accountability *above* the military. And that's just as important. A bot army will not be a *military* force, it will be distributed among multiple government agencies (every single one that currently has a law enforcement arm in addition to agencies that have a foreign operations arm like the CIA) and run by agencies like the CIA - which historically has been pretty goddamn awful in this area, and not just recently with drones, but pretty much their whole history has been nothing but bullshit violent shenanigans.

I was thinking militarily. But yeah, there would be no accountability if you turned the CIA loose with these things, although that would limit the number of them.

I would agree that this would lead to what amounts to a really nasty machine run assassination force. That would be horrific but not quite the same thing as an army of them waging conventional war.

The kicker is - if these things became possible there would no longer be a need for them as a conventional force.

They'd just be used as a nasty machine run assassination force that pops up and kills anyone who dares to look at us funny.

It's not like we seriously have to worry about being invaded, and just murdering 'terrorists' is a lot easier than occupations and liberations.

One of the amateur military blogs had an article a few years ago talking about the future conflict between Islam and the west. It talked about the development of these things and described the killer robots as Raid and the Muslims as roaches. It was pretty brutal even for me. That being said, I am not sure it wasn't a pretty accurate description of the future.

People just want to be safe and as a rule really don't give a shit about some person from an alien culture who lives half a world away. Worse, radical Islam has done so much damage to the image of Muslims and made it so easy for non Muslims to dehumanize Muslims that I don't think the situation you or the blog describe is that far fetched.

There's zero accountability for collateral damage casualties. There's only accountability when individual soldiers or units go rogue and commit crimes.

Gedankenexperiment #1: Ask one of those self-anointed human rights experts "what is a right?"

The ultimate problem with a robot army is that it reduces the number of people who can object.

When the fit hits the shan and armed partisans are in San Antonio protesting some injustice, the President could have two choices: 1) Send in 5,000 killbots that will ruthlessly massacre any human with a gun, or 2) convince his commanders, who must convince the officers of the Texas National Guard, who must convince their non-coms and who must convince their soldiers to throw away everything they've learned about the constitution and humanity to take up arms against their neighbors.

Admittedly, soldiers are there to do their job. So they might just do it anyway. But to have enough human minds in the chain- people who might just resign rather than fulfill these orders- could be the last hope we have against the rise of a dictator in chief.

That is a huge problem. The Nazis figured out fairly early that even the best trained and most fanatical soldier could not be relied upon to just murder civilians. The Nazis switched to gas chambers and industrial death camps because even with their Army filled with thousands of fanatics you couldn't just shoot millions in the back of the head.

A robot army would not have that problem. I can't believe anyone thinks that turning war over to soulless robots will make war anything but even more horrific than it is.

Yeah, the Nazis figured out that there was some small percentage of psychopaths that they could put in their death camps. The problem is that there weren't enough for widespread carnage (though the communists seemed to figure it out to a better extent).

With robots, you keep fucking with the software until you find the right behavior. Copy, paste, send to factory. Done.

For a lot of AI use cases, that is actually great. You don't need to train 50 people. You train one AI and then replicate. But with soldiers, we cannot trust authorities to create a Citizen Soldier. They will see the Citizen part as a bug. You can have as many ethicists as possible writing guidelines and rules. But as we saw with Bush and Obama, a good lawyer can get around any rules such that you are "legally" waterboarding, renditioning and bombing weddings. As soon as you have a software engineer twist around the loopholes enough, going from prototype in an already developed killbot to rewriting all the killbots in the field is just an upload.

The sad part is that it really doesn't matter. We might keep the US from doing this, but this is exactly the type of asymmetric weapon that countries like Iran will ultimately flock towards once the technology has shown any potential.

Nuclear weapons cannot economically be used against an individual, but are perfect for taking out bunkers full of dictators, mixed-economy congressmen, archbishops and field marshals. For that reason, the USSR could not trust its own bomber pilots and submarine crews with them, relied instead on ICBMs and collapsed when microprocessors made tracking antimissiles reliable. These States' parasitical dictators discovered that their hired murderers were vulnerable to conscripts with fragmentation grenades and the Nixon régime collapsed. The real solution, freedom instead of religious coercion, is as unthinkable to Republicans as it is to Democrats. So, while the Svengali channel keeps Trilbys from voting libertarian, they'll come up with manacles for your limbs, just like in China and Korea.

I'm going to create a couple robots and keep them in my house and take care of them but if they disobey me then I will kick them out and make them live in the wild and then if they reproduce themselves and are nasty and kill each other and humans and ruin the earth, then I will destroy them except for any that seem good, whom I will save, and promise never to do that again, and then if they start building stuff and try to rival me then I will confuse them and scatter them and then I will have to suffer millennia of their bitching and moaning about what an evil jerk I am and how I probably don't even exist anyway. But I'll still love them.

You could then send your son, Jesus Cyborg, who is both man and machine, to die for their sins and redeem their circuitry.

His name is Neo!

I'll buy one of those and program it to kill anyone who enters my home without knocking.

"I was in fear for my programming"

No thanks. Seen that movie too many times. (not serious)

I believe a robot woodchipper could be a definite positive for human rights. (Hi Preet!)

Off to the smelter with them! Woodchippers don't work on metal.

I, for one, welcome our new robot overlords.

Unleash the killer robots. What could possibly go wrong?

Before we make warbots, we really need to invent interstellar space flight. It's hard to form a ragtag fleet and flee the Cylon tyranny if you're stuck on just one planet.

On the other hand, robots rarely fly into a roid rage and beat, tase, kick, or shoot someone because they were disrespected.

Doomsday Machines that eliminate human meddling are in daily use as prohibition enforcement devices. Mandatory Minimums and 3 Strikes laws serving up life sentences on harmless hippies, mexicanos and brown people are the glue that holds together the DemoGOP injustice system.

My favorite doomsday machine clip.

https://www.youtube.com/watch?v=-mUCLHzWiJo

The acronym LAWS is ironic and unfortunate. Who thinks these things up?
