DHS to Start Collecting Iris and Facial Recognition Images at Border

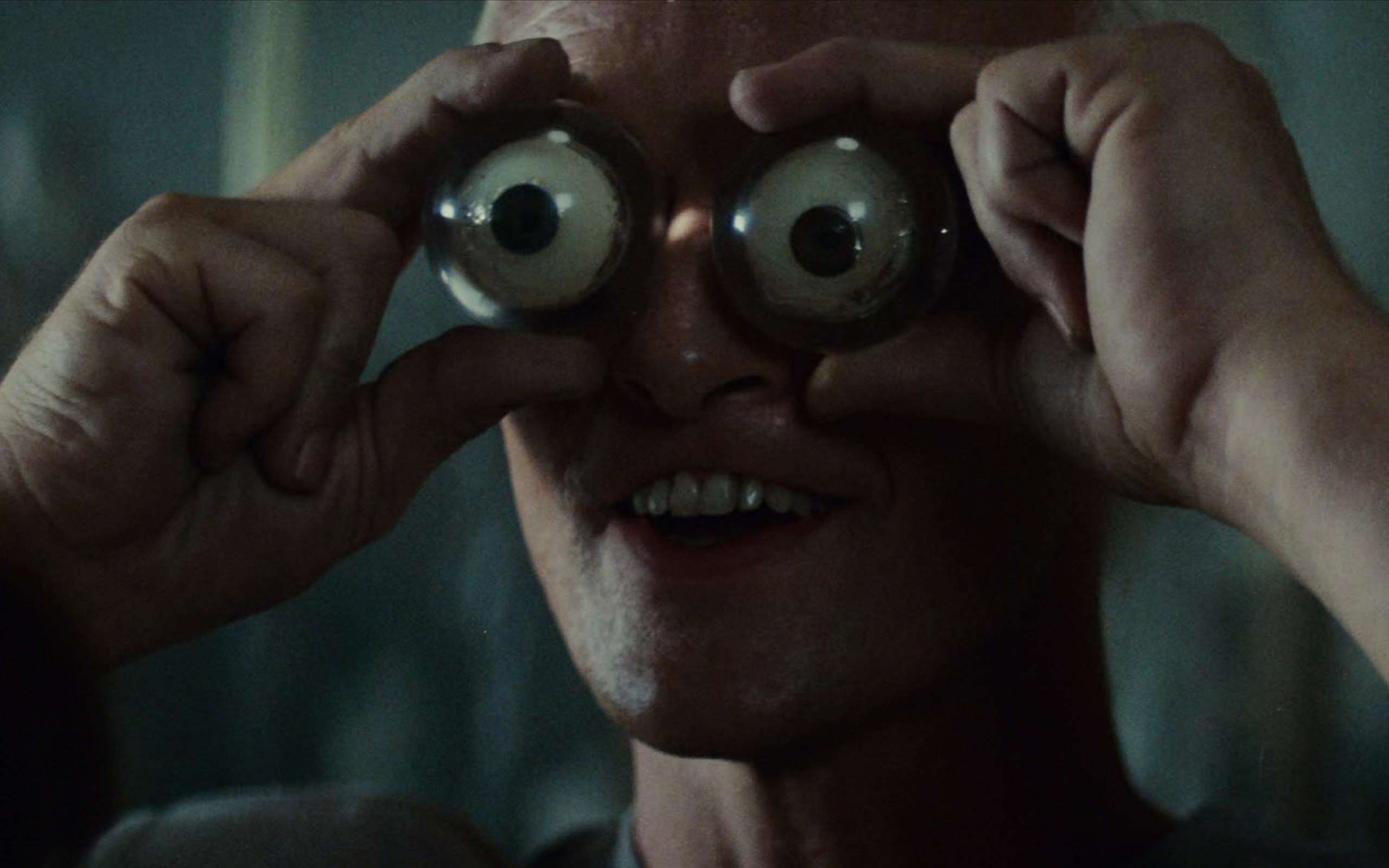

It's Philip K. Dick's world. We just live here.

Here we go, inching ever closer to the world of science-fiction writer Philip K. Dick. From National Journal's Nextgov site comes word that

The Department of Homeland Security this summer plans to roll out iris and facial recognition services to the U.S. Border Patrol, according to DHS officials.

The service will be able to share images with the FBI's massive multibiometric system, officials said.

The test is part of a coming overhaul of the department's "IDENT" biometric system, which currently contains more than 170 million foreigner fingerprints and facial images, as well as 600,000 iris templates. DHS last November released two sets of system specifications as part of market research for the new project.

"It's the Border Patrol stations that are doing the actual collections" of iris and face images from individuals processed through Customs and Border Patrol stations, Ken Fritzsche, director of the identity technology division at the DHS Office of Biometric Identity Management, told Nextgov. He spoke Tuesday at the 2015 Biometrics for Government and Law Enforcement conference.

"They've been collecting irises and storing the data with us," Fritzsche said. "Now, we're going to provide matching capability in a limited production pilot to CBP."

More food for thought: In 2011, the FBI

embarked on a multiyear, $1 billion dollar overhaul of the FBI's existing fingerprint database to more quickly and accurately identify suspects, partly through applying other biometric markers, such as iris scans and voice recordings.

And facial recognition too, of course. But don't worry, because they are very serious about regulating the use of such information in strict compliance with civil liberties and yadda yadda yadda.

In the aftermath of the 9/11 attacks, various government agencies started pushing facial recognition systems that were clearly not ready for prime time (by that, I mean they didn't even do what their supporters claimed they did). It's not fully clear whether those bugs have been worked out, meaning that even a 1 percent error rate could have massive repercussions on day-to-day life for millions of people. That same sort of error rate has already been deemed OK for things such as worker-verification systems that anti-immigrationists are pushing to use.
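To put rough numbers on that 1 percent figure, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that every one of the 170 million records in the IDENT system quoted above were checked once at a flat 1 percent error rate. The function name and the one-check-per-record setup are hypothetical and not drawn from any DHS specification.

```python
def expected_errors(people_checked: int, error_rate: float) -> int:
    """Expected number of wrong results if each person is checked exactly once."""
    return round(people_checked * error_rate)


# 170 million is the IDENT record count quoted earlier in this post.
# Treating it as "people checked once" is an illustrative assumption.
ident_records = 170_000_000
one_percent = 0.01

print(expected_errors(ident_records, one_percent))
```

Under those assumptions the count lands in the millions, which is the sense in which a seemingly small error rate stops being small.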

One question I've yet to see fully addressed: Does this sort of super-science stuff actually lead to more meaningful interactions between law enforcement and people? Or does it simply generate more data that can be stored and warehoused somewhere, to be pulled out whenever an agency thinks it needs to track somebody? One of the most pressing debates over massive, dragnet-style collection of both metadata and specific communications (including reports on bank transactions over $10,000) is whether this stuff leads to more prevention of crime or not. Indeed, when the NSA's total surveillance programs came to light, supporters such as Sen. Dianne Feinstein (D-Calif.) and Rep. Mike Rogers (R-Mich.) were quick to say that such techniques were responsible for stopping terror attacks. Yet the two specific cases they cited, the 2009 arrests of Najibullah Zazi and David Headley, actually proved the exact opposite. Each was the result of using "'old tools' such as searching computers of suspects in custody and sharing information among agencies," not some sort of big data investigation.

Whether or not the technology even does what it claims to, we know that all sorts of slippage will take place. In 2013, for instance, it came to light that 30,000 Ohio cops could access that state's facial recognition database without oversight.

But here's the deal: As with most technology that satisfies human desires (whether good or bad, private or public), the question is never if a certain technology will be deployed but how and when. The feds and various state and local agencies will try to slip this sort of tech into our lives in a casual way. The only way to push back is to question whether this will actually address real problems, follow its deployment every step of the way, design workable restraints on its misuse, and come up with the next iteration of technology that will render this sort of prone-to-abuse stuff obsolete.
