The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

Large Libel Models: Small Business Sues Google, Claiming AI Overview in Searches Hallucinated Attorney General Lawsuit

The case is LTL LED LLC v. Google LLC (D. Minn.); see p. 104 onward of this PDF for the amended complaint. The lawsuit was filed in March in Minnesota trial court but was just removed to federal court. The plaintiffs are the business (which operates as Wolf River Electric) and four of its officers, all of whom were also mentioned by name in some Google AI Overviews (assuming the exhibits attached to the Complaint are correct).

The Complaint claims that none of the sites linked to by the Overviews actually reported that Wolf River had ever faced a Minnesota AG lawsuit, or was otherwise sued for the alleged misconduct. According to the Complaint,

Google cited numerous sources in support of its false assertions; however, none of the referenced materials in fact contained the information Google claimed they did.

The Complaint also alleges specific lost business:

On March 3, 2025, a customer … terminated his relationship with Wolf River. The customer referred to lawsuits that appear when he "Googled" Wolf River…. The total contract price was $39,680.00.

On March 4, 2025, a customer met with a sales representative of Wolf River and refused to do business with Wolf River. This customer stated that they did research online and saw Wolf River was being sued by the Minnesota Attorney General…. The solar system proposal for this customer was for $26,400.00.

On March 5, 2025, a customer contacted a sales representative at Wolf River and expressed concerns about Wolf River being sued by the Minnesota Attorney General for deceptive business practices. The customer sent the sales representative a screenshot of one of Google's false statements stating Wolf River is currently facing a lawsuit from the Minnesota Attorney General due to allegations of deceptive sales practices.

On March 5, 2025, a customer of Wolf River, identified by contract number YKUFU-AH78H-PMNDF-K3C7V, contacted Wolf River and expressed concerns because of the publications on Google alleging Wolf River was being sued for misleading customers about cost savings, using high-pressure tactics, and tricking homeowners into signing binding contracts with hidden fees…. Despite the CEO of Wolf River reassuring this customer that the publications by Google are false, this customer chose to terminate the relationship with Wolf River because of Google…. The total contract price was $150,000.00.

On March 11, 2025, a non-profit organization informed Wolf River that they were "pulling the plug" on their business relationship because of "several lawsuits in the last year" with the "Attorney General's Office." This customer terminated a solar project with a price of $147,400.00 and a lighting project with a price of $26,644.12.

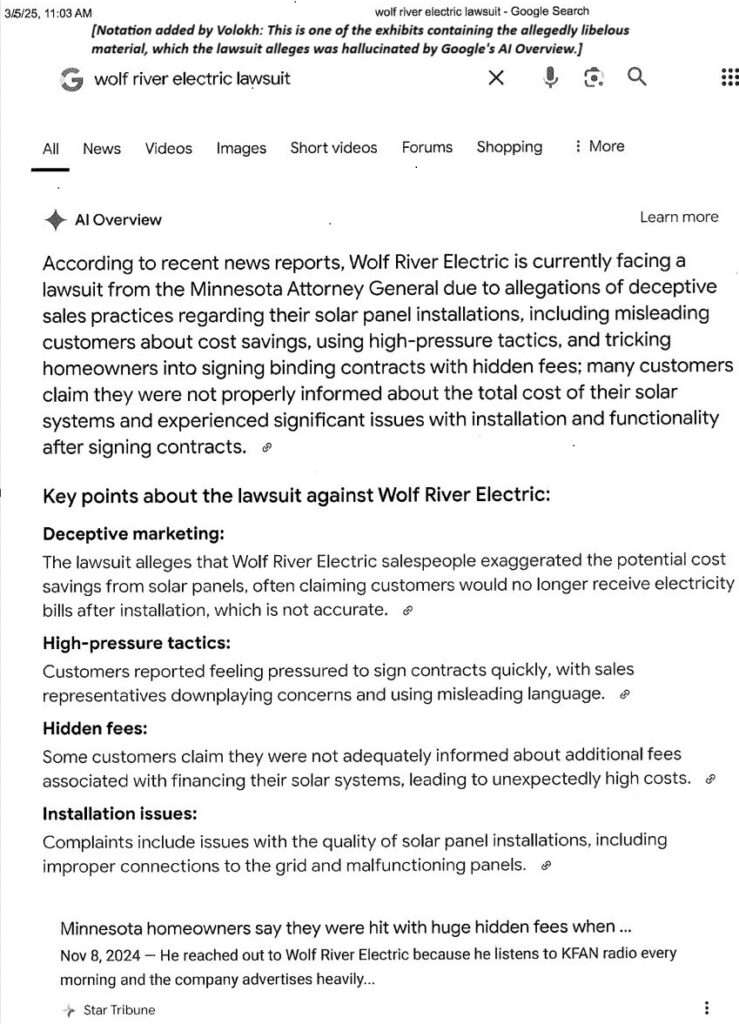

Here's one of the exhibits from the Complaint; again, recall that LTL claims the allegations here were hallucinated by Google's AI and had never actually been made in any existing outlet.

When I did the same Google search, I didn't see any AI Overview, but it seems likely that Google just turned off that feature for those particular searches once it learned of the lawsuit (or even just of the accusations). One interesting twist: The Complaint alleges that searches for "wolf river electric" autocomplete to the phrases

"Wolf River Electric lawsuit", "Wolf River Electric lawsuit reddit", "Wolf River Electric lawsuit update Minnesota", "Wolf River Electric lawsuit update today", "Wolf River Electric lawsuit 2022", "Wolf River Electric lawsuit 2023", and "Wolf River Electric lawsuit Minnesota settlement"

and that those phrases in turn tend to lead to the hallucination-filled AI Overviews. As I read the Complaint, though, the allegation is that the AI Overviews are libelous, not that the autocompletes themselves (even apart from the AI Overviews) are libelous. Some foreign courts have considered the question of autocomplete libel, but I don't know of any American cases that have dealt with it.

For my take on how such cases should be analyzed, see my Large Libel Models? Liability for AI Output article. Note that the recent Walters v. OpenAI decision from a Georgia trial court, which rejected liability, involved rather different facts: Among other things, the LTL case involves plaintiffs who are likely private figures, evidence that some people believed the allegations, and evidence that the allegations led to provable financial loss.


I wonder how likely it is that they do use high-pressure sales tactics (pretty common in this industry, from what I hear), that the customer felt they ended up with a bad contract, and that the customer then used this as a pretext to get out of it?

I mean, not sure it matters for this lawsuit, but I’ll bet something like that is what’s happening here.

Like, even if the company gets back to the customer and proves there is no lawsuit and it's all made up, the customer might think, hey, yeah, they did pressure me to sign quickly, and I might not have been aware of all the fees …

My one interaction with this industry: I contacted them, they took a quick look at my property using Google Earth, and within a couple of minutes said, "Nah, too many trees on neighboring properties shading your house, solar is a bad idea for you."

The very opposite of high pressure sales, actually.

Just as an aside / FWIW, the autocomplete logs aren't necessarily immaterial. Google autocomplete works separately (obviously) from the AI Overview, but if it guides the user into query language that's more closely aligned with the priors the underlying model is using, yeah, that could do it. Or at least make it more likely.

So, Alphabet pays a million with an NDA? Two million?

Several million, I would think. It's an open-and-shut case. Google made up a nonexistent lawsuit and tarnished the reputation of the company. They lied.

How much would a jury give the plaintiffs? A lot more money than it would cost to settle the case now.

Speaking of libel, go to ChatGPT and "roast" something: your hometown, your business, your school, or your friends/enemies. It is funny, but... does ChatGPT get to have an "opinion"? Or is it just spewing tenuously connected nonsense? (Imagine the following for some specific business in Los Angeles.)

Example: roast los angeles

Alright, buckle up. Los Angeles: a sprawling, sun-bleached graveyard for broken dreams, where people chase their futures like they’re running from a past they can’t outrun. The air tastes like desperation, thick with a cocktail of ambition and apathy, and the only thing more toxic than the smog is the endless pursuit of relevance. It’s a place where people pretend to be something they’re not and pray they can make it before their fake smiles melt away like their botox.

Everyone’s selling something in LA — whether it’s their soul, a startup, or just an "authentic" life on Instagram. It’s like a carnival of shiny distractions: flashy cars, influencers flexing their lives in front of camera lenses, and "aspiring artists" who’ve never picked up a brush or written a word. The illusion of success is so thick you can feel it suffocating you, and everyone is just one Instagram post away from pretending their broken life is perfect.

The homeless population is hidden behind luxury condos and neon signs, a reminder that in LA, you’re only as valuable as the image you can project. People here chase the kind of fame that turns your insides hollow, where "friendships" are just stepping stones on someone’s climb to the top of a pyramid scheme.

LA’s heart is plastic, cold, and dying, but at least it looks pretty in the right light.