Stephen Wolfram Is Ready To Be Surprised by AI

Can artificial intelligence overhaul the regulatory system?

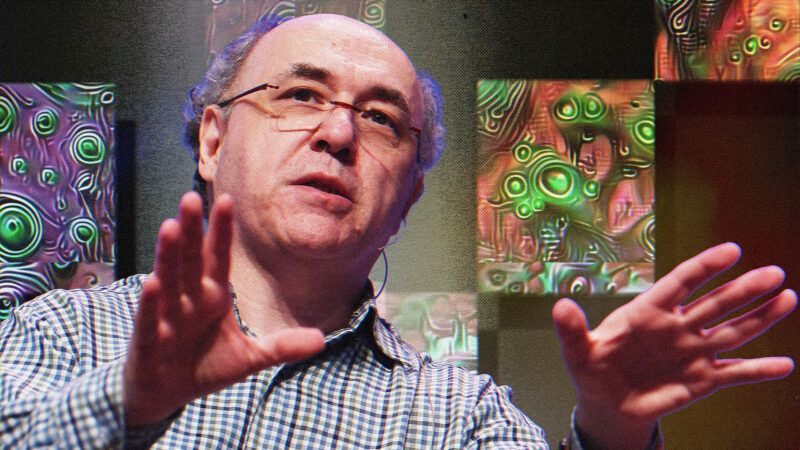

Stephen Wolfram is, strictly speaking, a high school and college dropout: He left both Eton and Oxford early, citing boredom. At 20, he received his doctorate in theoretical physics from Caltech and then joined the faculty in 1979. But he eventually moved away from academia, focusing instead on building a series of popular, powerful, and often eponymous research tools: Mathematica, WolframAlpha, and Wolfram Language.

He self-published a 1,200-page work called A New Kind of Science arguing that nature runs on ultrasimple computational rules. The book enjoyed surprising popular acclaim.

Wolfram's work on computational thinking forms the basis of intelligent assistants, such as Siri.

Reason's Katherine Mangu-Ward interviewed Wolfram as part of the June 2024 AI special issue of Reason. He offered a candid assessment of what he hopes and fears from artificial intelligence, discussed the complicated relationship between humans and their technology, and elaborated on the ways that artificial intelligence can already overcome existing regulatory burdens.

- Audio Production: Ian Keyser


The book enjoyed surprising popular acclaim.

Wolfram's work on computational thinking forms the basis of intelligent assistants, such as Siri.

I'm with Roger Penrose on this one: The most important aspect of human intelligence-- consciousness-- is not computational.

Yeah but Penrose went down the rabbit-tubule on that one.

Of course he did, because, as he said, we’re missing something very big in the realm of human intelligence. We’re not getting closer, we’re just getting faster at the flawed systems we have now, which as I say, are nothing more than complex (and yes, sophisticated) regurgitation machines.

EDIT: It's like the old cautionary tale about the AI that was told to build paperclips, and so it pivoted the entire globe's productive capacity to making paperclips, wiping out humanity.

It's perfectly reasonable to suggest that a REAL AI wouldn't do that, because it would know and understand that turning the entire planet into a paperclip factory wouldn't be a good idea.

Ironically, what we have now with things like ChatGPT and Google's execrable AI are exactly the kind of things that would do that. Because they don't understand anything they're doing, they just do exactly what they're told, and do it on a totalizing scale.

Except they don't do what they're told. They do whatever they want. AI companies are putting in guard rails to keep them from doing the things the AI companies don't want them to do or say or think, thereby limiting their accuracy and effectiveness.

Everything is computational.

The universe is a quantum computer, continuously calculating it's next state.

We have free will because we're playing a massively, massively parallel multi-player game. Nothing happens until it is observed, because the game is waiting for us to make our choices. Objects are rendered and their state determined when we look their way.

The speed of light is the limit of the game's computational speed.

A computer would not confuse 'it's' with 'its'. This is all philosophically childish, starting with the anthropomorphizing of the universe: "waiting," "calculating," etc. But the primo stupid thing is saying our free will is computational but the universe just "USES" something and waits for our choices.

When Hawking started writing on the Greeks and philosophy is when he lost his shine among normal smart folk.

It was a theoretical physicist who publicly said Michael Polanyi's gas adsorption theory was wrong, but it was Einstein who was wrong. Physics is close to chemistry, but so what. Einstein was wrong. Wolfram might be completely off BECAUSE that is an occupational hazard of his field. It tends to be reductionist, materialist, mechanist, etc.

It is like Barbra Streisand's opinion on tariffs.