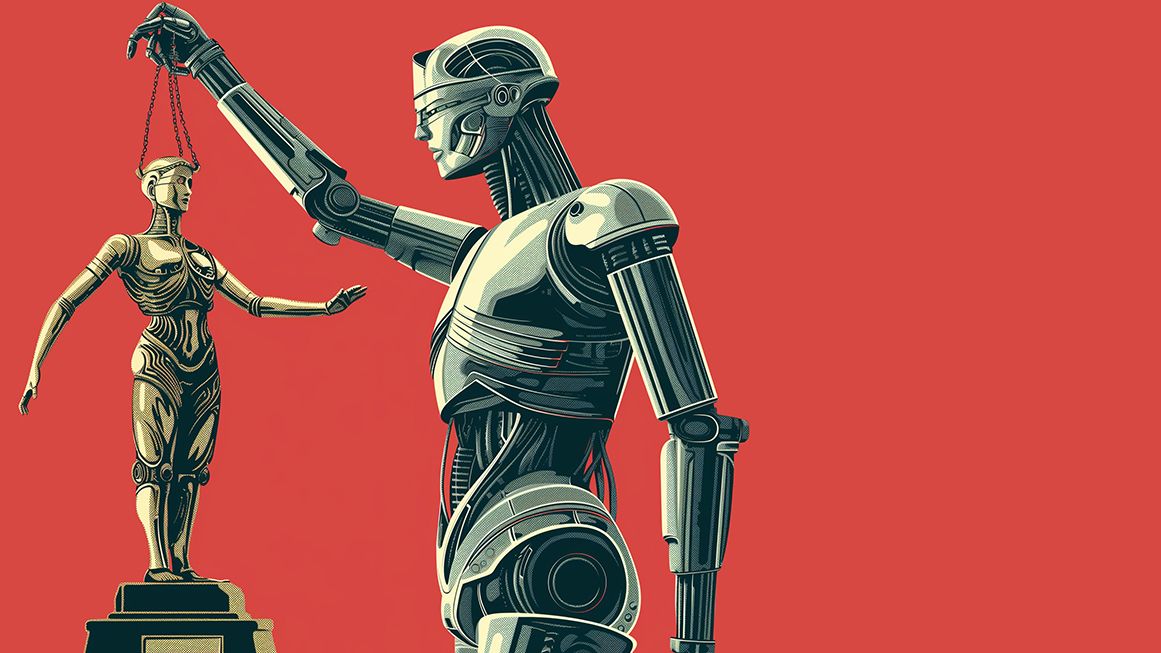

Will Antitrust Policy Smother the Power of AI?

Left alone, artificial intelligence could actually help small firms compete with tech giants.

Populism continues to blur political lines. Nowhere is that more apparent than in antitrust policy. For decades, conservatives largely held the line against left-wing antitrust hawks who see "monopolies" everywhere. But their mistrust of Big Tech's political biases has made the populist right more comfortable with unleashing antitrust. Odd alliances have formed, with Republican senators openly praising some of the most progressive Biden administration appointees holding high office at the Federal Trade Commission (FTC) and Department of Justice. Adding a new wrinkle to this phenomenon is the dawn of the AI Age. We are seeing an ironic merger of panics.

Many see AI as a dystopian technological frontier, but it is really just history repeating itself. New technology often disrupts dominant industries and firms in ways even the harshest antitrust remedies can't match. AI should be no different, but bipartisan antitrust zeal may score a Pyrrhic victory and halt this historical cycle.

The right has long been concerned with large and biased institutions; conservative complaints about the "liberal media" and progressivism in academia have a rich history. The difference is that in the past conservatives responded by building their own institutions, and new technology made that easier to do. Social media allowed heterodox voices to reach huge audiences. Search engines and online marketplaces made it easier to find customers. Online conservative institutions such as PragerU now have viewership numbers that rival or surpass those of traditional media. Entrepreneurs can bypass retailers like Walmart and list directly on Etsy and Amazon. Despite concern about the size of today's tech companies, there is abundant evidence that competition downstream of platforms is thriving, to the benefit of consumers.

What's changed is that the barriers to building competing platforms are now perceived to be insurmountable. The "go build your own" argument is routinely mocked now. Indeed, there are daunting technological and knowledge barriers to coding, launching, and maintaining a new online platform. Just as new technology turbocharged competition in industries such as media and retail, AI also has the power to erase the barriers to building competing digital services.

AI is making waves generating interesting images and "helping" students with homework, but consumer-facing services such as ChatGPT, which burst onto the scene a few years ago, can also turn plain-text instructions into functional web code. As AI continues to improve, one may not need to "learn to code" to compete. "Go build your own" search service or shopping platform will no longer be a punchline. AI has unprecedented potential to level the playing field, if the revolution isn't stymied by the impatience of antitrusters.

AI now faces two broad policy threats. The first is that AI will be prematurely regulated under a heavy-handed ex ante approach, rather than under the status quo of permissionless innovation and ex post enforcement of existing laws as applied to AI. If regulators become entwined in AI development, only the largest firms with the resources and knowledge necessary to navigate government demands will be able to compete. Lawmakers rarely realize that their desire to rein in dominant firms actually guarantees those firms' longevity as market leaders. But the companies understand this and are usually more than happy to swallow regulations that will act as barriers to potential competitors.

The other threat is that antitrust scrutiny could sap the resources necessary for the continued development of competing AI systems. Antitrust litigation is ongoing or imminent for essentially all the major tech companies. In January, the FTC launched a probe into Microsoft's investments in OpenAI. Such cases impose enormous resource costs. Even a raised eyebrow from a regulator will deter companies from making bold new investments.

In the last Congress, lawmakers from both parties introduced antitrust bills that would have explicitly barred the largest tech companies from ever making another acquisition. Such a policy, whether imposed through litigation or legislation, would close off a key avenue for startup investors to recoup their money. If investors don't see a realistic path to a return, they're unlikely to help new competitors get off the ground in the first place. Revolutionary AI companies may thus never leave their founders' garages.

Innovation has always served as a crucial check on accumulated market power. The current antitrust enthusiasts have mistakenly latched onto AI as a symptom of their concerns with Big Tech, totally missing what it really represents: a natural solution.

This article originally appeared in print under the headline "Antitrust May Smother the Power of AI."


Those that oppose Artificial Insemination (AI) don’t baste, but may be based.

Those turkeys are just masterbasters.

Those with a lot of stroke stick to what they know.

Really starting to miss the Floridamanbad articles.

Am I the only one here who thinks coverage of public policy w.r.t. private AI is overblown? I can't see such policy as ever affecting the issue, since AI is so nebulous and can be called a million different things.

When AI is outlawed, only outlaws will use AI.

Seriously. It sounds like a cheap quip, but all this handwringing over AI regulation is missing the point. Murder has been outlawed for several thousand years, yet we still get murder. The idea that Congress can effectively ban or regulate AI is nonsense.

The problem is that the technology has left the barn. You have bad actors like China or Putin or dead Iranian heads of state who have the resources to set up their own AI systems. And it's certainly not out of range for a variety of criminal types around the world.

The danger is NOT AI taking over the world. That's stupid science fiction. The real danger is Generative AI faking everything. That is going to be a MAJOR ISSUE in the election after this one. Within five years AI will be able to FAKE texts, articles, photographs, and videos to an extremely realistic degree. All that freakout over fake news was just a warmup. Imagine an election where you can't trust ANYTHING, and by that I mean not even from your own side.

Any ounce of credulity in your makeup will be exploited to the fullest extent. Some dude working for an authoritarian government can generate fake news during an election cycle that WILL FOOL MOST OF THE PEOPLE. Note that this is not just in the electoral sphere, but also in financial scams and other crimes.

This is because we have trained ourselves to believe anything that fits our narrative. No one is exempt from this.

How do you combat that? Well, banning AI won't do a damned thing! Neither will regulating it. The danger is not Google or Microsoft, the danger is the lone bad actor with access to AI. Because outlawing AI means only outlaws will use AI. The horse is out of the barn!

But we can use AI to combat this. But that can't happen if it's been outlawed or regulated into an ineffectual state. This is exactly like firearms. Banning it guarantees that only the State and criminals will have it.

I don't know what the ultimate answer to this looming problem is, but I do know that Biden/Trump is not the answer. That's just your credulity talking, which means you are a prime victim of AI abuse.

How could AI combat fake videos?

I imagine it could be used effectively to identify fake videos.

Yeah, it's ridiculous to think that "AI" (if we must call it that) can be effectively regulated or controlled. It's a computer program, which is an idea. And people will come up with all sorts of less centralized ways to use it. The automatic writing and image generation is pretty neat, but I think the most interesting things aren't going to come from the big, publicly available models.