Elon Musk Enforces Twitter's Ban on 'Hateful Conduct' As Critics Predict a Flood of Bigotry

The "free speech absolutist" is maintaining some content restrictions while loosening others.

Twitter has seen an "unprecedented" increase in "hate speech" since Elon Musk took over the platform in late October, The New York Times reports. As the paper's fact checkers might say about someone else's scary claims, the Times story is misleading and lacks context. But it highlights the challenges that Musk faces as he tries to implement lighter moderation practices without abandoning all content restrictions.

Before Musk bought Twitter, the Times says, "slurs against Black Americans showed up on the social media service an average of 1,282 times a day. After the billionaire became Twitter's owner, they jumped to 3,876 times a day. Slurs against gay men appeared on Twitter 2,506 times a day on average before Mr. Musk took over. Afterward, their use rose to 3,964 times a day. And antisemitic posts referring to Jews or Judaism soared more than 61 percent in the two weeks after Mr. Musk acquired the site."

Those numbers, which the Times attributes to "the Center for Countering Digital Hate, the Anti-Defamation League and other groups that study online platforms," might seem alarming. But in the context of a platform whose users generate half a billion messages every day, they suggest that explicitly anti-black, anti-gay, and anti-Jewish tweets are pretty rare.

Assuming that "antisemitic posts" are about as common as the two other categories, we are talking about something like 0.0024 percent of daily tweets. The Times concedes that "the numbers are relatively small," which is like saying the risk of dying from a hornet, wasp, or bee sting is relatively small.
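For readers who want to check that figure, here is a rough sketch of the arithmetic. It uses the post-takeover daily averages quoted by the Times and the half-billion daily tweet count mentioned above. The antisemitic-post count is not a reported number: it is the stand-in assumption described in the preceding paragraph, taken here as the average of the other two categories.

```python
# Post-takeover daily averages as quoted from the Times.
anti_black_per_day = 3_876
anti_gay_per_day = 3_964

# Assumption (not a reported figure): antisemitic posts are about as common
# as the other two categories, so their average is used as a stand-in.
antisemitic_per_day = (anti_black_per_day + anti_gay_per_day) / 2

# "Half a billion messages every day."
tweets_per_day = 500_000_000

flagged_per_day = anti_black_per_day + anti_gay_per_day + antisemitic_per_day
share_percent = flagged_per_day / tweets_per_day * 100

print(f"{flagged_per_day:,.0f} flagged tweets per day")
print(f"{share_percent:.4f} percent of daily tweets")
```

Under those assumptions, the three categories together come to roughly 12,000 tweets a day out of 500 million.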

For readers who bother to do the math, the Times warns that the increases it cites are only the beginning: "Researchers said the increase in hate speech, antisemitic posts and other troubling content had begun before Mr. Musk loosened the service's content rules. That suggested that a further surge could be coming."

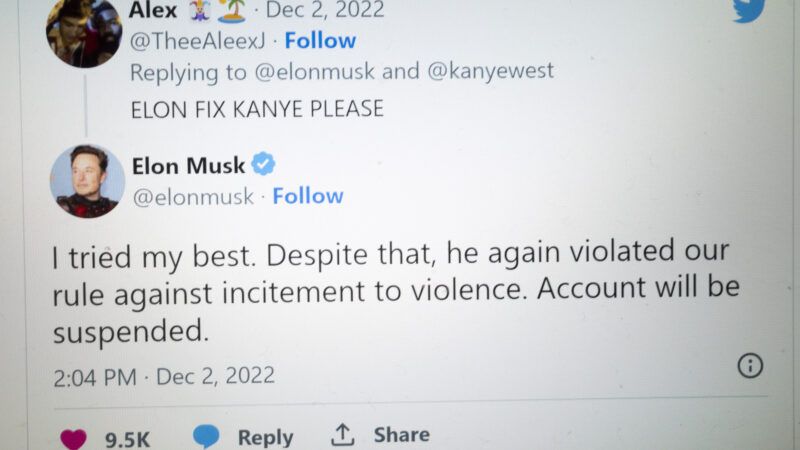

So far, however, Musk has retained Twitter's ban on "hateful conduct." Musk cited that rule yesterday, when he suspended music and fashion tycoon Kanye West's account. West, who has recently dismayed fans and business partners alike with a string of antisemitic remarks, had already run afoul of Twitter's rule by tweeting that he was about to go "death con 3 On JEWISH PEOPLE." His latest offense was tweeting a Star of David with a swastika at its center.

West's earlier comment was either a deliberate play on "DEFCON 3," a military term that denotes an "increase in force readiness above that required for normal readiness," or an accidental mangling of that phrase. Either way, the anti-Jewish hostility was hard to miss.

The image that prompted West's suspension likewise posed a bit of a puzzle. "I like Hitler," West told conspiracist Alex Jones on the latter's podcast yesterday. "Hitler has a lot of redeeming qualities." He added that "we got to stop dissing Nazis all the time." It nevertheless seems fair to assume that adding a swastika to a Jewish star was not meant as a compliment.

While Musk said West had "violated our rule against incitement to violence," the policy is broader than that description implies. "You may not promote violence against or directly attack or threaten other people on the basis of race, ethnicity, national origin, caste, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease," it says. "We also do not allow accounts whose primary purpose is inciting harm towards others on the basis of these categories." Also forbidden: "hateful imagery and display names."

The official rationale for that policy emphasizes that open expressions of bigotry have a chilling effect on Twitter participation:

"Twitter's mission is to give everyone the power to create and share ideas and information, and to express their opinions and beliefs without barriers. Free expression is a human right—we believe that everyone has a voice, and the right to use it. Our role is to serve the public conversation, which requires representation of a diverse range of perspectives.

"We recognize that if people experience abuse on Twitter, it can jeopardize their ability to express themselves….

"We are committed to combating abuse motivated by hatred, prejudice or intolerance, particularly abuse that seeks to silence the voices of those who have been historically marginalized. For this reason, we prohibit behavior that targets individuals or groups with abuse based on their perceived membership in a protected category."

There is obviously a tension between Twitter's commitment to "free expression" and its prohibition of hate speech. But while even the vilest expressions of bigotry are protected by the First Amendment, that does not mean a private company is obligated to allow them in a forum it owns. Twitter has made a business judgment that the cost of letting people talk about how awful Jews are, in terms of alienating users and advertisers, outweighs any benefit from allowing users to "express their opinions and beliefs" without restriction.

Other social media platforms strike a different balance and advertise lighter moderation as a virtue. But all of them have some sort of ground rules, because a completely unfiltered experience is appealing only in theory.

Parler, for example, describes itself as "the premier global free speech app," a refuge for people frustrated by heavy-handed moderation on other platforms. It nevertheless promises to remove "threatening or inciting content." Parler also offers the option of a "'trolling' filter" (mandatory in the Apple version of the app) that is designed to block "personal attacks based on immutable or otherwise irrelevant characteristics such as race, sex, sexual orientation, or religion." Such content, it explains, "often doesn't contribute to a productive conversation, and so we wanted to provide our users with a way to minimize it in their feeds, should they choose to do so."

Musk describes himself as a "free speech absolutist." That approach is mandatory for the government, except when it comes to judicially recognized exceptions such as fraud, defamation, and true threats. But it is not viable for a social media platform that wants to maintain some semblance of a welcoming environment, and it does not describe Musk's actual practices since he took over Twitter.

Musk has invited back inflammatory political figures such as Donald Trump and Marjorie Taylor Greene. He has rescinded Twitter's ban on "COVID-19 misinformation," a fuzzy category that ranged from demonstrably false assertions of fact to arguably or verifiably true statements that were deemed "misleading" or contrary to government advice. At the same time, however, Musk has interpreted Twitter's rule against impersonation as requiring that parody accounts be clearly labeled as such, and he evidently thinks enforcing the ban on "hateful conduct" is important enough to justify banishing a celebrity with a huge following.

As far as the critics quoted by the Times are concerned, that stance does not really matter, because loosening any rules is tantamount to abandoning all of them. "Elon Musk sent up the Bat Signal to every kind of racist, misogynist and homophobe that Twitter was open for business," said Imran Ahmed, chief executive of the Center for Countering Digital Hate. "They have reacted accordingly."

If Twitter is indeed flooded by bigots eager to test the platform's boundaries under Musk, they will compound the already daunting challenge of trying to police an enormous amount of content for rule violations. Even before Musk bought Twitter, critics complained that the platform was failing at that task.

Last July, the Anti-Defamation League (ADL) reported that it had "retrieved 1% of all content on Twitter for a 24-hour window twice a week over nine weeks from February 18 to April 21, 2022." After running that sample through its Online Hate Index algorithm, the ADL found 225 "blatantly antisemitic tweets accusing Jewish people of pedophilia, invoking Holocaust denial, and sharing oft-repeated conspiracy theories."

Given the huge size of the sample, that tally suggests either that a minuscule share of Twitter users is openly antisemitic or that the platform was doing a pretty impressive job of blocking or removing attacks on Jews. The ADL had a different take: "Of the reported tweets, Twitter only removed 11, or 5% of the content. Some additional posts have been taken down, presumably by the user, yet 166 tweets of the 225 we initially reported to Twitter remain active on the platform."

Extrapolating from that 1 percent sample of "all content on Twitter," the total number of antisemitic tweets might have been in the neighborhood of 22,000. Is that a lot? The ADL obviously thinks so. But when a platform is dealing with 238 million users generating nearly 200 billion tweets a year, even a highly effective system for detecting Jew hatred is apt to miss many examples. And that's just one application of one content rule.
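The extrapolation and the scale comparison can be sketched the same way. All inputs come from the ADL report and the figures cited earlier; the variable names are illustrative, and the scaling simply assumes the 1 percent sample was representative of all content.

```python
# ADL figures quoted above.
adl_flagged = 225      # "blatantly antisemitic tweets" found in the sample
sample_percent = 1     # the ADL retrieved 1% of all content
removed = 11           # tweets Twitter removed after the ADL's report

# Scale the sample count up to the full platform
# (assumes the sample was representative).
estimated_total = adl_flagged * 100 // sample_percent

# Share of reported tweets that Twitter removed.
removed_share_percent = removed / adl_flagged * 100

# Annual volume implied by half a billion tweets a day.
tweets_per_day = 500_000_000
tweets_per_year = tweets_per_day * 365

print(f"Estimated antisemitic tweets: {estimated_total:,}")
print(f"Removed share: {removed_share_percent:.1f} percent")
print(f"Tweets per year: {tweets_per_year:,}")
```

The result is about 22,500 tweets, which the text rounds to 22,000, against an annual volume of roughly 182.5 billion.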

"Content moderation at scale is impossible to do well," TechDirt's Mike Masnick argues. Given the amount of content, the subjectivity of moderation decisions, and the fact that they are bound to irritate the authors of targeted messages, he says, such systems "will always end up frustrating very large segments of the population."

That observation, Masnick emphasizes, "is not an argument that we should throw up our hands and do nothing" or "an argument that companies can't do better jobs within their own content moderation efforts." Still, he says, it's "a huge problem" that "many people—including many politicians and journalists—seem to expect that these companies not only can, but should, strive for a level of content moderation that is simply impossible to reach."
