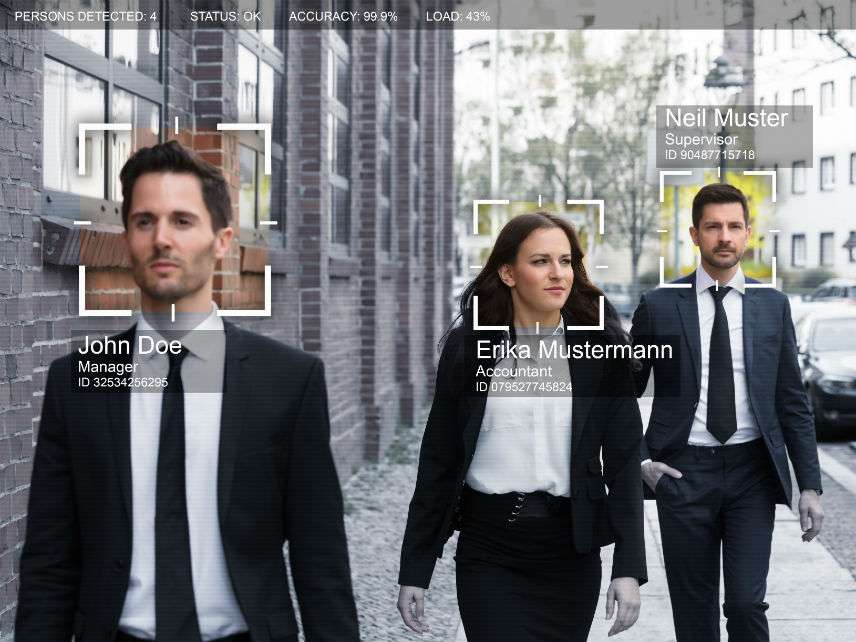

San Francisco Facial Recognition Ban Proposed

It's a good idea that libertarians should applaud.

Aaron Peskin, a member of San Francisco's Board of Supervisors, has proposed a new ordinance that could severely limit city agencies' use of enhanced surveillance of the city, including a ban on facial recognition technology. "I have yet to be persuaded that there is any beneficial use of this technology that outweighs the potential for government actors to use it for coercive and oppressive ends," Peskin said.

Peskin is not alone in worrying about how government agents can abuse the technology. Woodrow Hartzog, a professor of law and computer science at Northeastern University, and his colleague, Rochester Institute of Technology philosopher Evan Selinger, make a strongly persuasive case that "facial recognition is the perfect tool for oppression." As a consequence of the technology being "such a grave threat to privacy and civil liberties," they argue that "measured regulation should be abandoned in favor of an outright ban."

Their list of the harms to privacy and civil liberties entailed by the widespread use of this technology by government agencies is chilling. They point out that people who think that they are being watched behave differently. In addition, they cite a host of other abuses and corrosive activities entailed by the technology:

- Disproportionate impact on people of color and other minority and vulnerable populations.
- Due process harms, which might include shifting the ideal from "presumed innocent" to "people who have not been found guilty of a crime, yet."
- Facilitation of harassment and violence.
- Denial of fundamental rights and opportunities, such as protection against "arbitrary government tracking of one's movements, habits, relationships, interests, and thoughts."
- The suffocating restraint of the relentless, perfect enforcement of law.
- The normalized elimination of practical obscurity.
- The amplification of surveillance capitalism.

Why ban rather than regulate this surveillance technology and not others such as geolocation data and social media searches? Hartzog and Selinger argue that systems using face prints differ in important ways that justify banning them. Given the spread of CCTVs and police body cameras, faces are hard to hide and can be inexpensively and secretly captured and stored. All-seeing cameras and never forgetful digital databases will put an end to obscurity zones that secure the possibility of privacy for us all.

In addition, existing databases, such as those for driver's licenses, make facial recognition tech easy to plug in and play. In fact, it's already been done by the FBI. In their 2016 report, The Perpetual Line-Up, Georgetown University researchers found that 16 states had let the FBI use facial recognition technology to compare the faces of suspected criminals to the states' driver's license and ID photos, creating a virtual line-up of each state's residents. "If you build it, they will surveil," assert Hartzog and Selinger.

China's social credit system is already taking advantage of facial recognition technology. According to Fortune, the Chinese government is deploying facial recognition tied to millions of public cameras to not only "identify any of its 1.4 billion citizens within a matter of seconds but also having the ability to record an individual's behavior to predict who might become a threat—a real-world version of the 'precrime' in Philip K. Dick's Minority Report."

"The future of human flourishing depends upon facial recognition technology being banned before the systems become too entrenched in our lives," conclude Hartzog and Selinger. "Otherwise, people won't know what it's like to be in public without being automatically identified, profiled, and potentially exploited. In such a world, critics of facial recognition technology will be disempowered, silenced, or cease to exist."

Perhaps that's too ominous. Widespread use of facial recognition technology can certainly make our lives easier to navigate. But it doesn't take a cynic to worry that governments will demand the right to plunder private face print databases. It's not too soon to consider if the tradeoffs between convenience and not-implausible government abuses of the technology are worth it.

The proposed ordinance in San Francisco requires agencies to gain the Board of Supervisors' approval before buying new surveillance technology and puts the burden on city agencies to publicly explain why they want the tools as well as to evaluate their potential harms. That's at least a step in the right direction.

For more on how we are already constructing a pervasive surveillance state, see my January feature article, "Can Algorithms Run Things Better Than Humans?" The answer depends on what you mean by "better."


"I have yet to be persuaded that there is any beneficial use of this technology that outweighs the potential for government actors to use it for coercive and oppressive ends,"

Who invited Debbie Downer?

But what if this technology can be used to identify people that haven't been vaccinated, so Ron can be protected from them?

That is like everyone in San Francisco so not sure it would help. (Vaccinate your kids already).

Disproportionate impact on people of color and other minority and vulnerable populations.

Why would it have a disproportionate impact on minorities? Is that just said so that some people will take notice and agree? Checking that box?

Yes, no explanation given, but looks good on the resume when applying for a job at vox.

Strengths:

*Disproportionate impact on minorities and women

In fact, from everything I've seen these systems have a really hard time telling minorities apart. Ironically, facial recognition technology generally works best on white people and badly, if at all, on black people especially.

Black people all look the same to facial recognition software.

There's no such thing as black people to facial recognition software.

Really? To the computer we are all just a string of ones and zeros.

The training sets are not very effective because the white do a poor job of selecting black people for them.

Citation needed.

Not enough contrast and shadow?

They don't care about anyone else. They consider non-minorities disposable. So they need to say it will be bad for minorities because otherwise the SF audience might say "who cares if it's bad, people deserve it".

Minority areas tend to have more crime, and more crime means more policing. So minority neighborhoods may be subjected to it first, I suppose. Or not.

Doesn't the Real ID act require the driver's license to have a photo that works with facial recognition technology?

If facial recognition software prevents even one police department from kicking in the door and wasting two innocent people in a botched drug raid, isn't it worth the risk?

No, the cops generally have their faces covered when they do that sort of thing.

Nice

Do you want niqabs? Because this is how you get niqabs.

If San Francisco wants to ban stuff, it would do well to forbid shitting in the streets.

Wouldn't all the surveillance cameras change the offense from shitting in the street to public indecency?

Just set up live streams on the Web for each and every camera? Public property should be publicly available, right?

How about some fecal recognition software, so we can identify the perpetrators? Of course, to make this work, all new toilets will be required to have a camera.

...has proposed a new ordinance that could severely limit city agencies' use of enhanced surveillance of the city...

Yeah, I mean why duplicate the effort of the NSA that they use for parallel construction right now today? Plus, this way the city gets to claim they're totally blameless for all that surveillance that they're constitutionally prohibited from having or using that they have and are using?

/sarc

I was thinking the sheriff's department, but, yeah.

San Francisco proposes a ban that actually makes sense and enhances liberty. I guess it is true that even a blind squirrel occasionally finds a nut.

IF YOU WANT A SOCIAL CREDIT SCORING SYSTEM, AMERICA ALREADY HAS TWITTER.

Twintter is coming.

So Jimmy might need more than Jimmy to prove that he is Jimmy once more?

He is not Jimmy anymore. He's Saul Goodman now.

You can't stop facial recognition software any more than you can stop license plate scanners. If governments are banned from running such systems, the market will provide them, heck even free source versions will pop up; and then government agents will use their Facebook accounts to get the same data.

It's true. We're fucked.

Sure you can. In Sweden, you need a license to put up a camera in a public place.

"No Warrants shall issue, but upon probable cause, supported by Oath or affirmation, and particularly describing the place to be searched, and the persons or things to be seized."

I have video and stills of the perpetrators that stole my motorcycle yesterday (in the act), and even though the police may already have enough evidence to indict them, I still hope they run my video and photos against whatever databases they can in a facial recognition search--be it the DMV or the Sheriff departments' photo records of prior convicts.

I think a judge should order the database search, supported by my oath and the oath of the cop (that witnessed them tearing apart the electrical system on my bike), and particularly describing the database that is to be searched as well as the face and the address that match those of the perpetrators'.

It's different when a private citizen is using surveillance video in case a crime occurs rather than the government arbitrarily scanning everyone's faces regardless of whether a crime has occurred and without knowing exactly whom and what they're trying to find. As a way of gathering evidence to support the prosecution of a defendant beyond a reasonable doubt (or find exculpatory evidence!), there isn't any reason why the government's facial databases should be off limits to a thoroughly constitutional subpoena.

The problem is the technology being used in a way that violates the Constitution, not the technology itself.

I question whether this is something that is actually bannable to begin with. It seems like human cloning--it's gonna happen whether the government wants it to or not.

Sadly, I think you are correct. Short of a complete ban on computers, individual human liberty is going to become a thing of the past. Soon. To the applause of the fools who think they will be safer, richer, and freer, and that only someone else will get trampled.

Maybe Frank Herbert really was right.

The spice must flow?

"Get thee to a chipper!"

No, "The roads must roll"

"Thou shalt not build a machine in the likeness of a human mind"

"It's gonna happen whether the government wants it to or not."

The technology will happen. Whether the government will be able to use it to routinely violate our Fourth Amendment rights depends on whether we're willing to accept it.

the perpetrators that stole my motorcycle yesterday

Well, that sucks.

Most surveillance cameras lack the resolution necessary due to placement of the camera and the quality of the CCD. This is especially true of consumer cameras.

Only for now. Cameras follow the same generalized version of Moore's Law as all technology.

*beep*boop*

FACIAL RECOGNITION RESULTS: Hipster, Lesbian, Hipster.

Disproportionate impact on people of color and other minority and vulnerable populations.

Due process harms, which might include shifting the ideal from "presumed innocent" to "people who have not been found guilty of a crime, yet."

Facilitation of harassment and violence.

Denial of fundamental rights and opportunities, such as protection against "arbitrary government tracking of one's movements, habits, relationships, interests, and thoughts."

The suffocating restraint of the relentless, perfect enforcement of law. The normalized elimination of practical obscurity.

The amplification of surveillance capitalism.

A good reminder that you're dealing with San Francisco: The priority is fucked up and some of the reasons for opposing it are comical.

Matrix showed computers think we're green.

* surveillance scans face *

"Lizard? What tha...?"

* scans chest *

"Oh -- it's AOC!"

Will it run on the Gaydar principle?

"The future of human flourishing depends upon facial recognition technology being banned before the systems become too entrenched in our lives," conclude Hartzog and Selinger.

"... and ..., it's *gone*!"

"Disproportionate impact on people of color and other minority and vulnerable populations."

If EVERYONE is equally fucked then this would be totally fine?

"The amplification of surveillance capitalism."

Cops and city propose Orwellian measures against the citizens.

Surveillance capitalism

...

WTF


This technology should be banned, I think. It interferes with privacy, and it's illegal. I understand when AR/VR software and facial recognition technology are developed for good reasons, to help secure or manage routine things, but not when they intrude on personal life.