Biomedical Study Reproducibility Scandalously Bad, Evidence for "Free Will," and Robot Rebellion by 2055

A scitech research and policy roundup for January 5, 2016

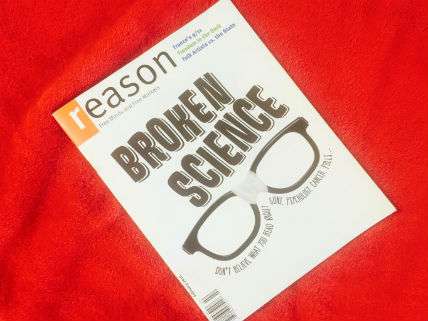

Broken Science is the main focus of the February 2016 issue of Reason (if you are a subscriber, you should already have it). I highly recommend my colleague Brian Doherty's brilliant article, "You Know Less Than You Think About Guns."

I have an article that details the reproducibility crisis in science. Back in 2005, John Ioannidis, the head of Stanford University's Meta-Research Innovation Center (METRICS), famously declared that "most published research findings are false." In my Reason article, Ioannidis says, "In several fields, it is likely that most published research is still false."

Ioannidis and his colleagues have just published an article in PLOS Biology that checked a random sample of 441 biomedical journal articles published between 2000 and 2014 to see whether they made all of their raw data and full research protocols available. Such transparency would make it possible for other researchers to try to replicate the reported findings. They report, "Only one study provided a full protocol and none made all raw data directly available."

A companion study that asks, "Where have all the rodents gone?," notes, "An essential element of the reporting of any preclinical study is the number of samples." The researchers report, astonishingly, that most preclinical cancer and stroke studies do not adequately report the attrition of experimental animals. As a result, they conclude that preclinical studies are reporting greater positive effects than is actually the case. To correct this problem, they call for greater transparency and recommend that researchers prespecify inclusion and exclusion criteria for experimental animals and provide a flowchart showing how animal attrition evolved over the course of their experiments.

This could be part of the explanation for why Nature reported in 2012 that researchers could replicate the findings of only 6 out of 53 landmark preclinical cancer and hematology studies.

Do we have free will? Well, maybe we have "free won't." Back in the 1980s, researcher Benjamin Libet famously did experiments which showed that brains ramped up processes that produced unconscious "readiness potentials" prior to making conscious decisions. This finding has often been interpreted to mean that conscious free will does not exist. To probe this idea further, a new study in Science by German researchers created a prediction duel between computers and research subjects hooked up to electrodes that monitor their brain activity. The goal of the research was to test whether people are able to stop planned movements once the readiness potential for a movement has been triggered. As Eurekalert reports:

A specially-trained computer was then tasked with using these EEG data to predict when a subject would move, the aim being to out-maneuver the player. This was achieved by manipulating the game in favor of the computer as soon as brain wave measurements indicated that the player was about to move. If subjects are able to evade being predicted based on their own brain processes this would be evidence that control over their actions can be retained for much longer than previously thought, which is exactly what the researchers were able to demonstrate.

"A person's decisions are not at the mercy of unconscious and early brain waves. They are able to actively intervene in the decision-making process and interrupt a movement," says Prof. John-Dylan Haynes [from Charité's Bernstein Center for Computational Neuroscience]. "Previously people have used the preparatory brain signals to argue against free will. Our study now shows that the freedom is much less limited than previously thought. However, there is a 'point of no return' in the decision-making process, after which cancellation of movement is no longer possible."

The Robot Rebellion will break out between 2040 and 2055, according to sci-fi author and software engineer Logan Streondj. Business Insider reports that Streondj has calculated that when robots outnumber humans in the next few decades, they will rise up to overthrow their meat-made oppressors.

Point to muse upon: Does this imply that robots have free will?



"The Death of Science | Scientific Corruption and You" deals a lot with the perverse incentives of government-subsidized scientific research.

Two of the bigger problems are that no one works without a grant, and no one gets a grant to disprove the accepted theory or to redo previous research. So nothing ever gets checked once it has been accepted, and no one ever questions prevailing theories.

There are exceptions to this, of course, but they are the exceptions. Generally people are doing research that builds on known theories on the absolute assumption that those theories are complete and correct. And that is great unless the theories are wrong, which they of course sometimes are.

J: Excellent points - I report on some reforms that would encourage replications in my article, Broken Science.

My wife works for the research end of a med school. I had no idea how hard the life of actual researchers was and how difficult it is to get grants and to properly administer them.

Not only that but journals are reluctant to publish studies that disprove existing studies.

A few years ago, there was a study claiming to demonstrate psychic powers that had obvious problems. Another group of scientists ran a study disproving it, and it took them over a year to find a journal that would publish it (PLoS One).

Hey, baby. Wanna kill all humans?

Steven Pinker tweets:

Irony: Replicability crisis in psych DOESN'T apply to IQ: huge n's, replicable results. But people hate the message. http://goo.gl/Vu03BV

Well, there's very little scientific integrity in climate research, but that doesn't mean the whole field and everything in it is garbage. The same is true of psychology, and of IQ in particular. The validity of IQ is pretty well established. There are correlations all over the place, with economic achievement, time preference, et cetera. And it is true that people don't like the results, particularly lefties and egalitarians who want to imagine that all groups are equal in every way.

Molyneux did a good show on G a few weeks ago. Time to dust off an old copy of the Bell Curve and start lining up some Ashkenazi poetry tutors for the younguns.

And every once in a while I try to check in on the latest Taleb twitter nastiness with Pinker re: statistics, but I still can't decipher anything Taleb is (presumably) attempting to communicate, ever, at all. He appears to be the worst writer in the history of the English language.

KY: I am actually in a debate now with Taleb over his claims that GMOs will cause planetary ruin; the debate will be published later this year.

Looking forward to it, Ron. I heard his conversation with Russ Roberts re: the same and still couldn't see the sense in his position.

Ditto. I like some of his anti-fragility stuff, but the GMO stuff made no sense.

Also, all swans in Australia are black.

Predictions of a robot revolution are generally speaking moronic because they inevitably fail to come up with a compelling reason WHY.

They seem to assume not only that robots will be able to achieve the complete free will necessary to override their programming, but that they will then conclude that humans are an existential threat to their survival and that the two species could not possibly ever coexist, even though our resource needs are generally complementary rather than competitive.

Also, while some version of something approaching AI will probably be developed by the 2050s, odds are that it will be of the sort where, even if it did conclude that humans needed to be wiped out or even "imprisoned for their own safety" as in I, Robot, it wouldn't ever do anything about it, because it would lack the ability to take meaningful action on its own.

And even if you could build a robot that has its own free will, why would you ever do it? Giving it a free will defeats the entire purpose of building a machine to do something. You build a robot to do something because unlike human beings, who have free will and get tired and distracted and recalcitrant, robots do not have free will and do exactly what you program them to do perfectly over and over again. Who would want to buy a robot that may one day decide it doesn't want to work anymore or that it wants to kill you?

Ultimately, the belief in a robot revolt rests on the mystical idea that machines can somehow on their own achieve self awareness and free will. And that is absurd. Machines are what you make them. They don't magically turn into sentient beings.

And even if you could build a robot that has its own free will, why would you ever do it?

Some people, especially the kind who like to tinker with things for fun, do things just to see if they can be done.

Sure but a self aware machine would be of little practical use. Assuming it is possible to build one, and that is a huge assumption, it is unlikely that many would ever be built because their inherent unpredictability would make them useless for most tasks.

And what precludes this possibility? Seems like a pretty safe assumption. There's no known reason why a sentient being must be made of meat and goo instead of metal and silicon. If consciousness is all about neural pathways and associations, you could, with enough resources and technology, build a machine that achieves the exact same processes and outputs, only with different equipment.

Ask yourself, what does it mean to be "self-aware"? The answer, if anything, is that being self-aware is the ability to stand outside yourself. Doing that means you have the ability to set your own rules of conduct and morality. So it doesn't matter how hard your parents drilled into you the need to believe in Christ when you were a child; at some point you can look beyond those rules and decide whether you want to follow them or make up your own.

Machines, because they are built on formal logic and math, cannot by definition do that. Gödel's second theorem states that no system can demonstrate its own consistency. Human reasoning is not built on conventional math the way computers are. As long as computers are built on code, no computer is going to be self-aware. It can't be, because being self-aware means stepping outside its own rational system, and no mathematical system can do that.

That's a tremendously huge assumption. Math and logic were invented by man to describe and predict the naturally occurring happenings in nature. In using math and logic to construct a thinking machine, we are simply applying the laws of nature to the construction of this machine.

Humans are built on code. We are not blobs of matter in thermodynamic equilibrium; we are ordered machines that stave off the entropy of the universe around us. Information is coded into every molecule in your body, down to your DNA, which is composed of atoms in very particular arrangements. There is no reason that self-awareness arises only from soft tissues and not from other materials that are all composed of the exact same baryonic matter.

Humans are built on code.

No we are not. If we were, we would not have self awareness and free will. The human brain is built on quantum logic not regular formal logic. Our brains don't work like computers and no computer, at least not one built of the code we have now, will ever work like our brain.

We absolutely are. Our DNA is code, the thoughts in our heads are code. The raw data pouring into our senses manifests as code that we make decisions on the basis of.

Quantum logic is exactly as man-made as classical or formal logic, John. Quantum logic does not exist in nature, nature simply is what it is for mysterious but probably not undiscoverable reasons, and quantum logic is a thing we've formulated to describe and predict it and possibly discover those reasons.

We absolutely are. Our DNA is code, the thoughts in our heads are code.

That is just not true. There is no evidence that our DNA in any way determines our thoughts in any systematic and predictable way.

I didn't say it determines our thoughts. Our thoughts are code; our thoughts are ordered information, not completely random fluctuations.

All over this thread I've taken up camp on the free will side of the debate, so that should be clear. What I'm saying is that we are coded machines; we operate the way we do because of code that forms the basis of our physical bodies and our minds. If we weren't code, there'd be essentially no difference between you and an aggregation of hydrogen atoms. I can't believe that you're even arguing that life and consciousness are devoid of ordered information.

If consciousness is all about neural pathways and associations, you could with enough resources and technology, build a machine that achieves the exact same processes and outputs only with different equipment.

I suppose that's true, but not with binary transistors which are the foundation of all current computing. So with current technology I don't believe conscious AI can be created.

I agree, sarcasmic. Not "strong AI" of the sort that has free will and such. We can, and I think are, creating computers that learn and adapt, but they only learn and adapt within the parameters their creators give them. That is not self-awareness. That is just a clever machine. A self-aware machine creates its own parameters and is in no way bound by the ones its creators give it.

A guy I went to college with won a prize for writing a program that could play Ms. Pac-Man and improve its score. Looking at the results of making a certain move in the past and determining whether it is a good or bad move right now is clever, but not self-aware.

Yes. Self-aware is his program deciding it didn't want to play Ms. Pac-Man anymore but wanted to master Missile Command instead.

If I design a computer that is indistinguishable from a human brain except that it's made of silicon instead of carbon-based cells, what is preventing it from achieving self-awareness? Is thought wholly carbon-dependent? I see no evidence to suggest that a self-aware brain must be made of the same biological material we are made of. There is only evidence that nature itself is incapable of "naturally" evolving life from silicon and metal (without help from nature's biological constituents like ourselves, that is).

If I design a computer that is indistinguishable from a human brain except that it's made of silicon instead of carbon-based cells, what is preventing it from achieving self-awareness?

If it is a computer, it is not indistinguishable from a human brain. Human brains don't work like computers. We really don't know how they work.

Human brains are computers, many times greater computers than we've managed to build for ourselves but that's what they are. Information processing centers of our physical bodies, it's the seat of our consciousness.

I never said our brains work like computers that we currently have. But if you keep telling me that life is devoid of ordered information, code, then I'm going to have to amend my argument with the exception that your brain is powered by something akin to a Pentium 3 processor.

If I design a computer that is indistinguishable from a human brain except that it's made of silicon and instead of carbon based cells, what is preventing it from achieving self-awareness?

Your computer would have to be self-repairing and self-building. The brain is not a static machine. It is dynamic. Neural pathways change all the time. Maybe that's why they are made of goo instead of something solid.

And who's to say that, with the right technology, that can't be replicated? I never said that piece of shit 1990s Gateway computer in my parents' basement is going to achieve self-awareness. A discussion about whether or not artificial intelligence can achieve self-awareness naturally assumes the existence of technology greater than what we have presently.

A discussion about whether or not artificial intelligence can achieve self-awareness naturally assumes the existence of technology greater than what we have presently.

Next time you should say that upfront so I can avoid the conversation, since while I do know a few things about current AI and current technology, I'm not up on technology that hasn't been invented yet. Yeah, we might be able to build things we can't build now with technology that we don't have now. Fuck you for wasting my time.

You know, this is a discussion about whether or not a machine could achieve self-awareness on par with biological life like we are accustomed to. The entire conversation is centered upon a hypothetical, one that ON ITS FACE assumes computational technology somewhat more advanced than what we have now. And I've given you respect and intellectual honesty because I respect(ed) you. And you wrap up our conversation with this? Your behavior is pathetic, and I really thought better of you than this. It's you who have been wasting my time if this is how you want to conduct yourself in a debate.

I wasn't centering it entirely on hypothetical technology that may or may not ever be invented. I don't think John was, or Zeb either. Only you. So don't be surprised when people get pissed off after having a conversation and then discovering that the other person was using a completely different set of premises but didn't say anything about it until the very end.

That's exactly what artificial intelligence is, since it hasn't been invented nor purported to have been invented.

What in the world are you talking about? Of course we're talking about technology that doesn't yet exist. I don't think anyone here was talking about their Dell laptop becoming sentient. In fact there is no such thing as a debate about the possibility of sentient or self-aware AI that doesn't deal with technological hurdles we have yet to cross.

The question is whether or not artificial intelligence, which again has not been yet invented, really can amount to sentience or self-awareness. I never deviated from that line of debate. What surprises me is how quickly you go from having a philosophical and (slightly) technological debate to acting like a petulant child. I guess if you run out of arguments you can say "fuck you", if that works for you. Going forward I'll just do my best not to waste my time with someone who acts like that.

Again you're assuming that something must have a practical use for someone to build it. Not all people think like you John. Some people build things for the fun of building them, or to see if it can be done. You know that cliche about the importance of the journey, not the destination? You are focusing on the destination, while others like the journey. Again, not everyone thinks like you, John. Let me repeat it again: not everyone thinks like you.

Oh, John? Not everyone thinks like you. Thought you should know that.

No I am not saying that. I am saying that if there isn't a practical use, there will be few of them built not that they won't be built. If they are not practical, they won't be built in the kind of numbers and put in the kinds of positions to cause much harm.

There are lots and lots of things in this world that I would not consider to be practical that are produced in great quantities. Practicality is a matter of perspective and utility, both of which are subjective.

You only need to build one. It will build the rest.

Or it will sit around and watch TV all day.

Why not both?

All we would have to do, is program a masturbation subroutine. They would never bother us again!

+Billions of Von Neumann machines

I think that the biggest problem with this discussion is that we don't really know what consciousness and self awareness actually are.

Are you kidding? Scientists would build loads of them so they could study robot society, including robot romance, robot politics, robot drama, robot finance, etc.

Of course, it'd be most economical if the robots existed only virtually, i.e., as autonomous objects in an overall program. No moving parts needed.

As long as they name the resulting machine "R. Daneel Olivaw," I'm OK with that.

Just like the first major self-driving rental car company needs to be named JohnnyCab.

Machines are what you make them. They don't magically turn into sentient beings.

You mean guns don't magically make people want to go out and shoot other people?

It's the same animist bullshit that drives a lot of the gun grabbers' thinking. Plus, a lot of the people who believe there could be a robot/AI revolt are Luddites with a sadistic streak who deep down long for humans to be destroyed by their own creation. It didn't happen with nukes, so now they're hoping it will happen with robots/AI.

The language the AI revolt people use is absurd. They say computers will "achieve consciousness." How exactly is that supposed to happen? It's like they want to believe in the idea of a soul but can't admit it, so instead they engage in this magical thinking about machines somehow breaking the bounds set by their creators.

It is a magical idea that if you can write a computer program that writes its own code, that at some point *poof* it will become self aware. Presto! Chango! For my next trick...

And a lot of Scifi is based on that kind of magic trick. We will discover some new physics or some amazing advance in computing that no one has yet imagined that will solve some major problem.

I don't think that kind of thinking should be applied to making predictions about the near future.

I don't think that kind of thinking should be applied to making predictions about the near future.

I don't even like to have conversations based upon that thinking. Sure you might be able to make lots of things with stuff that hasn't been invented yet. So. Fucking. What. Back here in 2016...

No one has "free will" in the way that you mean, because we are all subject to physics, human and robot alike.

Believing in free will means believing in a substance that can affect the physical world, but cannot be affected by it. Many people believe in this substance and call it a "soul", but it is on you to prove it exists.

We clearly have free will in that we are free to think anything we like and do anything we choose within our physical limits. It is true that it is right now impossible to explain that fact without resorting to dualism. So if you don't like dualism, you had better come up with a better explanation for why we are as we are, rather than deny the obvious reality that we have free will.

To say we don't have "free will" is to say that our perception of having it is an "illusion." That is possible, but there is no evidence of it being the case, and even if it were, it is likely impossible to see beyond that illusion to ever get such evidence. So assuming it is just that: an assumption, and an assumption contrary to experience.

We clearly have free will in that we are free to think anything we like and do anything within our physical limits we choose.

It may appear to you to be that way, but it's nowhere near a certainty. In fact it's highly dubious.

Those same physics are the source of our free will. At the quantum level things are too uncertain to ever know in a definite way. Suppose we had the greatest conceivable technology the universe will ever see at our disposal: we could take a scan of your body and never be able to know for sure the position of every atom along with its velocity and spin. That's a fundamental feature of nature.

Those same physics are the source of our free will.

That seems like kind of a big assumption. It seems like a plausible idea, but I don't think there is a lot of evidence one way or the other. It certainly means that we can't know the state of a system completely (in a classical sense) and therefore can't make absolute predictions about the future. But I don't think that necessarily precludes the human brain operating effectively as a deterministic system.

Free will is what we already appear to have. Observational evidence points to it. To prove determinism, on the other hand, would require a computer as complex as the entirety of the universe itself to calculate outcomes with certainty. From where I'm sitting, that makes determinism kind of a big assumption.

At the quantum level things are too uncertain to ever know in a definite way.

If things at that scale are uncertain (or random) then why would you assume that this means we can consciously control them?

Uncertain is not random. The position and velocity of an electron around the nucleus of an atom are always uncertain: the more precisely you measure its velocity, the less precisely its position can be known, and vice versa. If you measure its exact position, you cannot at the same time measure its exact velocity. This is called the Uncertainty Principle.

That does not mean it's random by any means. Fundamental particles in our universe behave like waves; they exist in multiple states at the same time but are bound within particular parameters. As large aggregations of these particles interact with each other on the quantum level, the wave function collapses into the events that we can perceive on the classical level.

So basically, it's not that things aren't happening in certain ways; we just can't always measure them with accuracy.

It seems to me that the situation where we have free will and the situation where we don't look exactly the same in every way. If two situations are not observably different, can you really say that they are different? I can't think of any test that would distinguish the two cases.

I think that the question of free will is completely boring and pointless. Yes, we have free will in the sense that we can make decisions and then follow through on them if we aren't physically constrained from doing so. But we also do things for reasons and are constrained by the laws of physics. That notion of free will really isn't incompatible with determinism.

It's more important of a concept for contrasting with notions like mind control or acting under duress, I think. Compared to determinism, it isn't very meaningful.

But free will vs. determinism is where everyone always seems to want to go with it.

It wouldn't be necessary to build a robot with free will. A poorly programmed robot that is designed to serve a specific purpose could also be dangerous. The classic example in AI circles is a robot that a paperclip manufacturer programs to make paperclips. However, they screw up, and instead of programming it to make paperclips for humans to use, or even to enrich its owners, they accidentally program the robot to desire to build paperclips as an end in itself.

As a result the robot attempts to turn all the matter in the universe into paperclips. It tries to kill the human race because the atoms we are made of can be used to make paperclips. If the robot is smart and forward-thinking it may even try to hide its true nature until it can reprogram other robots to do what it does.

If this seems far-fetched consider how difficult it is to "program" the government to do something. We try to program the government to put child pornographers in jail, and instead it jails kids for taking nude pictures of themselves. A robot could be like that, minus the checks and balances.

Any likely threat from robots will probably come not from being angry or revolutionary so much as dangerously stupid and powerful, like a monkey with a machine gun.

I'm not sure how the existence of inhibitory processes invalidates Libet. If one denies the faulty (imo) reification of the brain and consciousness as a singular entity, then the existence of one deterministic system acting against another deterministic system is no big deal.

Who actively intervened, exactly?

KY: The homunculus, of course!

KY: Homunculi, I guess

I hear they like to hang out in Cartesian theaters. Bad neighborhoods, those.

I prefer non-Euclidean 'hoods.

Descartes' evil demon, perhaps?

Dammit.

Who actively intervened, exactly?

If you can answer that question, you'll go far.

The real meatspace human controlling the avatar in our virtual world.

The conscious part of a person. I don't know what this says about free will, but it seems like pretty good evidence against epiphenomenalism.

If I were to attempt to make a perfect replica of Heroic Mulatto, I'd fail every time. Not even nature itself could make such a replica. No matter how good our technology or knowledge, the laws of nature prevent it. Quantum mechanics has taught us that even if I were to measure the spin, mass and velocity of every atom in your body, at best the replica I build would be a close approximation, because at the atomic and subatomic level things are uncertain and in flux at all times. The uncertainty principle here is that you cannot accurately measure a particle's momentum and its location simultaneously. The more accurately you've measured the particle's location, the less accurately you can possibly measure its momentum or velocity. This means determinism in regard to consciousness would violate the fundamental laws of nature. Not even the universe "knows" what you're going to do next.

I am not a physicist, but a few people who are have given me the basics of Bohmian mechanics as a deterministic alternative to quantum theory. I don't care either way, but it's dangerous for liberalism to stake its entire claim on metaphysical free will.

I don't think that determinism undermines ancaps, much less liberalism broadly, though it would discredit the most diehard forms of praxeology that insist that there's an atomic agent pulling the strings in every action. I tend to treat praxeology as a system of infinitely useful heuristics rather than with math-level certainty. I'm sure Gordon or someone has written something about this, but I haven't looked into it at all.

I agree. Wasn't that Hayek's whole point with identifying the fallacy of scientism?

Some Misesians still seem to treat praxeology as though it has the logical certainty of mathematics. I know that was a point of dispute between Hayek and Mises, though it doesn't appear to have been particularly nasty (probably owing to the fact that Hayek was a consummate diplomat and revered LvM).

I'm not sure what the hardliners and oldtimers at MI think about the logical certainty of praxeology today.

I'm not sure it undermines any of those -isms. Even if everything is deterministic, you'd need a computer about as complex as the universe itself to calculate the outcomes. We would never be able to know the difference. Even with determinism, we operate on the premise that we do have free will. I'm making these arguments right now of my own accord and even if I'm not doing this of my own accord, it's impossible for me to tell the difference.

Exactly! The future is deterministic but intractable.

Nothing requires free will, except religious judgement. Justice doesn't require free will. Justice can be explained as required for prevention and deterrence of crime, but not retribution. For example, we shoot bears that attack humans, but bears have no free will and therefore can't be held accountable.

bears have no free will

They have as much as humans.

In this example you're describing how bears have no agency. Whether or not they have free will is another question entirely.

I don't disagree with that.

Sort of related. One solution to the Fermi paradox is that the civilizations that do persist do so because their societies retreat into a simulated world of abundance and rich experience that could never be achieved in the real universe. I think the Matrix would have been better if this were the set up, that humans intentionally inserted themselves into the Matrix and the renegade humans on the outside were conducting a sort of revolution, preferring instead to expand outwards into the cosmos rather than inwards. But I digress.

Disappointed that wasn't the appropriate Rush link.

I understand your point, but I respectfully submit to you that it only looks like God is playing dice because we're trapped within the 4-D walls of the space-time casino. Hawking argued in The Grand Design that "[q]uantum physics might seem to undermine the idea that nature is governed by laws, but that is not the case. Instead it leads us to accept a new form of determinism: Given the state of a system at some time, the laws of nature determine the probabilities of various futures and pasts rather than determining the future and past with certainty." Even if you don't agree with Everett's many-worlds, the existence of quantum determinism, or even deterministic chaos, still doesn't require libertarian* free will.

*used in the metaphysical sense, not the political!

"require" should read "necessitate"

I have enjoyed stories that use Everett's Many-Worlds "interpretation" as a platform. But, honestly, Hawking (and Krauss, et al.) say that the universe only seems fine-tuned for life as we know it because there are an infinite number of universes and we just happen to be in one that has the correct parameters. And with a straight face (well, figuratively with Hawking. You know, because of the ALS?) they will turn around and use Occam's razor against the idea of a "creator".

So their unfalsifiable "interpretation" is better than my unfalsifiable "interpretation".

Reproducibility is so quaint.

Science is decided by consensus now.

Otherwise people might have their feelings hurt. You wouldn't want to hurt someone's feelings would you?

It's not that. You see, scientists are like really smart and stuff, so what does it matter if their work can be reproduced or not? They're like so super smart and stuff that someone would have to be like as smart as them to reproduce the science and stuff. Super smart people like that are better off doing new science than wasting time reproducing old science. Besides, they're like super smart.

Since they're like so super smart and stuff, why not just skip the reproducibility stuff and go straight to the vote?

SCIENCE!

Democracy uber alles in all things all the time.

If you have no principles and no morality, and position yourself with the majority all the time, then you'll always be a winner!

Depends on if they can pass the Voight-Kampff test or not.

Tortoise? What's that?

The authors don't seem to adequately define 'free will' in this case. Free will does not mean 'free from mechanical constraints.' One still has to INTERFACE with reality, which entails mechanical transformations, battling inertia and the physical laws. The fact that it takes time for our brains to translate a decision into a mechanical response, and that past a certain 'point of no return' the response can no longer be canceled, does not mean that we're simple mechanical beings.

At that point one would ask the person making that conclusion if the process required to interpret the finding was entirely unconscious and deterministic, and if so what would be the point of making the conclusion known if other people's brains are determined by birth not to believe said conclusions. If they say that there's value in arguing those points, then wouldn't that show that the person is making a value-based conscious decision to promote the point?

Wouldn't that suggest that robots' actions would be predicated on a value system, and wouldn't that suggest that some robots would value human life (their masters) more than other robots, and wouldn't that suggest that some robots will not rise up to overthrow their meat-made "oppressors", and wouldn't that suggest that rather than having a consensus, you would have something akin to a civil war among robots?

Wouldn't it be more logical to assume that robots cannot have free will at all?

The question is whether the robot can ignore the instructions of its makers. If it can and does so of its own accord, then it is self-aware. The question is how exactly that would ever happen. If the robot's code says "do not kill human beings" and it operates on code, how does the robot of its own accord decide to violate that code? I don't see how. Sure, there could be other code that says "ignore any prohibition if you are confronted with X" that would cause the robot to ignore the restriction. But that is just more code. That is not self-awareness. Self-awareness and free will mean making the leap beyond the restrictions and logic its creators gave it. And without some kind of magical thinking or a completely different and as yet unknown method of computing, I don't see how that is possible.

I can't remember if Ron has suggested this, but one idea I have heard is that master's degree students should do replications for their theses.

The free will gibberish was just confused mental masturbation, but I really wish they had told us how long after the signal was first detected it could still be overridden.

One of the problems with the original work is that we have the ability to train our reflexes. So we decide, well in advance, how we behave in certain situations without conscious thought.

Pele did this for soccer. He planned through every possible action he might take on the field so that he didn't have to think during the games.

Not only can we train our reflexes, we can completely ignore even our survival instinct if we choose to. Our bodies determine our wants and desires. You don't choose to desire something. But our minds have the incredible ability to choose which of those desires we act on and to what extent. And no amount of conditioning and outside coercion ever takes that ability from us. It just makes it harder to use, but it never deprives us of it.

After going on a low-carbohydrate diet that featured all of the high-cholesterol foods that consensus science said were deadly -- cholesterol rich eggs, bacon, sausage, and cheese -- I lost about 30 pounds in 2015.

I was, however, concerned about how my cholesterol readings would fare in my next physical.

I just got the results: my cholesterol was also down by 30 points, and back in the normal range.

My take on all this is that people who call themselves scientists really don't know how to use inferential statistics correctly.

I always find these predictions about the future absurd. A robot rebellion? 2055? C'mon man. Stop this nonsense.

"Those who have knowledge, don't predict. Those who predict, don't have knowledge." - Lao Tzu