Let Slip the Robots of War

Lethal autonomous weapon systems might be more moral than human soldiers.

Lethal autonomous weapons systems that can select and engage targets do not yet exist, but they are being developed. Are the ethical and legal problems that such "killer robots" pose so fraught that their development must be banned?

Human Rights Watch thinks so. In its 2012 report, Losing Humanity: The Case Against Killer Robots, the activist group demanded that the nations of the world "prohibit the development, production, and use of fully autonomous weapons through an international legally binding instrument." Similarly, the robotics and ethics specialists who founded the International Committee on Robot Arms Control want "a legally binding treaty to prohibit the development, testing, production and use of autonomous weapon systems in all circumstances." Several international organizations have launched the Campaign to Stop Killer Robots to push for such a global ban, and a multilateral meeting under the Convention on Conventional Weapons was held in Geneva, Switzerland, last year to debate the technical, ethical, and legal implications of autonomous weapons. The group is scheduled to meet again in April 2015.

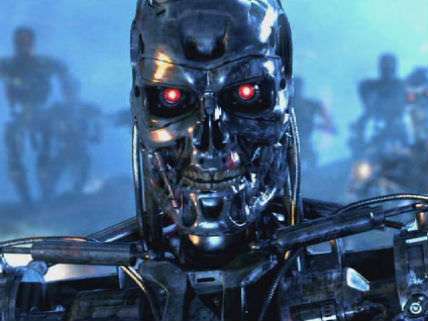

At first blush, it might seem only sensible to ban remorseless automated killing machines. Who wants to encounter the Terminator on the battlefield? Proponents of a ban offer four big arguments. The first is that it is just morally wrong to delegate life and death decisions to machines. The second is that it will simply be impossible to instill fundamental legal and ethical principles into machines in such a way as to comply adequately with the laws of war. The third is that autonomous weapons cannot be held morally accountable for their actions. And the fourth is that, since deploying killer robots removes human soldiers from risk and reduces harm to civilians, they make war more likely.

To these objections, law professors Kenneth Anderson of American University and Matthew Waxman of Columbia respond that an outright ban "trades whatever risks autonomous weapon systems might pose in war for the real, if less visible, risk of failing to develop forms of automation that might make the use of force more precise and less harmful for civilians caught near it."

Choosing whether to kill a human being is the archetype of a moral decision. When deciding whether to pull the trigger, a soldier consults his conscience and moral precepts; a robot has no conscience or moral instincts. But does that really matter? "Moral" decision-making by machines will also occur in non-lethal contexts. Self-driving cars will have to choose what courses of action to take when a collision is imminent—e.g., to protect their occupants or to minimize all casualties. But deploying autonomous vehicles could reduce the carnage of traffic accidents by as much as 90 percent. That seems like a significant moral and practical benefit.

"What matters morally is the ability consistently to behave in a certain way and to a specified level of performance," argue Anderson and Waxman. War robots would be no more moral agents than self-driving cars, yet they may well offer significant benefits, such as better protecting civilians stuck in and around battle zones.

But can killer robots be expected to obey fundamental legal and ethical principles as well as human soldiers do? The Georgia Tech roboticist Ronald Arkin turns this issue on its head, arguing that lethal autonomous weapon systems "will potentially be capable of performing more ethically on the battlefield than are human soldiers." While human soldiers are moral agents possessed of consciences, they are also flawed people engaged in the most intense and unforgiving forms of aggression. Under the pressure of battle, fear, panic, rage, and vengeance can overwhelm the moral sensibilities of soldiers; the result, all too often, is an atrocity.

Now consider warbots. Since self-preservation would not be their foremost drive, they would refrain from firing in uncertain situations. Not burdened with emotions, autonomous weapons would avoid the moral snares of anger and frustration. They could objectively weigh information and avoid confirmation bias when making targeting and firing decisions. They could also evaluate information much faster and from more sources than human soldiers before responding with lethal force. And battlefield robots could impartially monitor and report the ethical behavior of all parties on the battlefield.

The baseline decision-making standards instilled into war robots, Anderson and Waxman suggest, should be derived from the customary principles of distinction and proportionality. Lethal battlefield bots must be able to make distinctions between combatants and civilians and between military and civilian property at least as well as human soldiers do. And the harm to civilians must not be excessive relative to the expected military gain. Anderson and Waxman acknowledge that current robot systems are very far from being able to make such judgments reliably, but do not see any fundamental barriers that would prevent such capacities from being developed incrementally.

Individual soldiers can be held responsible for war crimes they commit, but who would be accountable for the similar acts executed by robots? University of Virginia ethicist Deborah Johnson and Royal Netherlands Academy of Arts and Sciences philosopher Merel Noorman make the salient point that "it is far from clear that pressures of competitive warfare will lead humans to put robots they cannot control into the battlefield without human oversight. And, if there is human oversight, there is human control and responsibility." The robots' designers would set constraints on what they could do, instill norms and rules to guide their actions, and verify that they exhibit predictable and reliable behavior.

"Delegation of responsibility to human and non-human components is a sociotechnical design choice, not an inevitable outcome of technological development," Johnson and Noorman note. "Robots for which no human actor can be held responsible are poorly designed sociotechnical systems." Rather than focus on individual responsibility for the robots' activities, Anderson and Waxman point out that traditionally each side in a conflict has been held collectively responsible for observing the laws of war. Ultimately, robots don't kill people; people kill people.

Would the creation of phalanxes of war robots make the choice to go to war too easy? Anderson and Waxman tartly counter that banning warbots that are potentially better at protecting civilians, simply because they might make war easier to start, "morally amounts to holding those endangered humans as hostages, mere means to pressure political leaders." The roots of war are much deeper than the mere availability of more capable weapons.

Instead of a comprehensive treaty, Waxman and Anderson urge countries, especially the United States, to eschew secrecy and be open about their war robot development plans and progress. Lethal autonomous weapon systems are being developed incrementally, which gives humanity time to better understand their benefits and costs.

Treaties banning some extremely indiscriminate weapons—poison gas, landmines, cluster bombs—have had some success. But autonomous weapon systems would not necessarily be like those crude weapons; they could be far more discriminating and precise in their target selection and engagement than even human soldiers. A preemptive ban risks being a tragic moral failure rather than an ethical triumph.

Disclosure: I have made small donations to Human Rights Watch from time to time.


No, the reason we can't have autonomous killing robots is because they STEEL ALL THE JERBS!

Eventually replacing the "humans" on SWAT teams.

Could be more moral. But someone is programming these things, and likely the same people who run the drone process disposition matrix, and that doesn't inspire me.

There is a difference between programming and targeting.

Not to the victim.

It matters to the programmers.

As an engineer, should I refuse to build products that some committee of jackasses may choose to use in an immoral way?

I suppose that depends on how likely the evil use is. For instance, building drones isn't per se evil, because we might need them to blow up actual enemies. On the other hand, they're used pretty indiscriminately.

I don't work on the military side of our business, but from what I know:

Computer vision is still a hard problem

Targeting is still a hard problem

People will be involved for a very, very long time.

People will be involved for a very, very long time.

Well let's face it, I'm not sure there is a more ruthless killing machine on the planet than human beings. We seem to have evolved around it.

Exponential progress is exponential. It seems like you're going nowhere, then boom.

Just as long as the sexbots aren't more moral than their counterparts.

Let me guess, you were rejected by a robot prostitute.

Who hasn't been?

...what?

How can one write this article, and not link to the Tetra Vaal video?

"But what is to be gained? It is not a dance. It gathers no food. It does not serve Vaal. But it did seem as though it was, pleasant to them."

When deciding whether to pull the trigger, a soldier consults his conscience and moral precepts; a robot has no conscience or moral instincts.

The point of boot camp is to systematically purge a soldier of such constraints of conscience and morals, and make them kill when ordered to do so.

The process isn't usually complete, but a squad of well trained soldiers will generally obey commands no matter how shocking their actions would appear to a civilian thrown into battle.

I still remember the answer I would give to my drill instructor when asked "What is discipline?"

Any other answer meant the answerer had to do many, many push-ups. So we got pretty good at immediately answering it. 😉

You know who else was just following orders?

The robot prostitute reference above?

You skipped the Law of Land Warfare classes and the ROE?

The first thing they teach is to OBEY, then they teach what they're legally required to teach. They use pain to enforce the former but not the latter.

Which one do you think the Soldiers (or Marines) will be more apt to obey? The person who controls your life 24/7 or a book?

(Yes, I've seen it, though not to kill another human. Yes, there are a few stubborn exceptions... including yours truly.)

I think this goes along with something I've been talking about a lot lately.

Cop bots. Because is there really any possibility they could be less moral than our current cops?

Forget about war bots, cop bots now!

Cop bots would be an enormous improvement. Short of programming them to kill anything that moved, how could they not be?

Exactly. You program them to never harm a human being but only to neutralize any threats to other humans.

So... The opposite of what they do now?

Pretty much this.

I sort of agree, because there are a lot of cops out there making bad decisions.

However, far more of the problem is due to the systems these cops belong to. No knock raids, War on Drugs, militarization of the police, stop and frisk- These systemic traits create far more fear and violence towards civilians than a fat ass drunk on power.

If a cop bot is given the wrong address to raid, it is still likely to end up killing inhabitants unjustly- perhaps moreso since those people likely will not have the capability to defend themselves against those trespassers. The same leadership that has no problem with cops shooting dogs will still instruct the bots to do so. The same leaders demanding that cops bully the peaceful civilians of a neighborhood to drum up intelligence will find reason to have the bots do the same.

All bots do is give the Police the ability to engage in harassment without putting their lives on the line. And it doesn't seem too much of a stretch to imagine the oppressed being more willing to smash up a robot than a cop, giving the po-po even more reason to target their neighborhood with even harsher methods.

Depends on their programming. You can program a machine not to be an asshole, regardless of what his asshole leadership is telling him to do. The trick is to NOT give programming rights to the asshole leaders.

The trick then is to convince every competing company to agree with this philosophy. Color me skeptical.

The trick is to NOT give programming rights to the asshole leaders.

Not a problem since most of the asshole leaders probably wouldn't be able to tell an If...Then statement from their assholes. If they had any useful skills such as coding they wouldn't be bureaucrats.

How many programmers who are not assholes are there?

Pretty unlikely.

It's bad enough paying pensions to retired human cops. I don't want to be on the hook paying lifetime pensions to robots that cannot die.

Yeah, I'm sure this wouldn't happen often.

Comply, citizen....

One problem is that a cop has to make many, many more judgment calls than a soldier.

I imagine that we'd get a few of these first.

https://www.youtube.com/watch?v=_mqDjcGgE5I

Since they lack moral agency, they would be neither moral nor immoral. The people who would be moral would be the people who created them and set the parameters by which they operate.

And there would always be someone responsible for the robots' actions. Someone will have created it and programmed it. A robot is nothing but a machine. It is no different than a tank or a plane. If the US creates and deploys an autonomous drone and that drone blows a fuse and targets civilians or commits some other war crime, the country and the commander who was in charge of that drone are responsible.

This isn't a hard issue. It only becomes hard when you start thinking of robots as people too. They are not.

This isn't a hard issue. It only becomes hard when you start thinking of robots as people too. They are not

Tell that to the SJW crowd and other assorted bands of leftist looneys. They already have an army of lawyers lined up to sue for robot rights when the first AI human like robot rolls off the assembly line. I wish I was joking.

Robots are people too!

I'm afraid I can't do that, Dave.

Can a robot consent to sex?

Do androids dream of electric sheep?

+1

The android attorneys most certainly do.

Muslim androids dream of electric goats. Or young boy androids.

As John said, "It only becomes hard when you start thinking of robots as people too."

The SJW will only argue for civil rights for robots if they think they'll vote for statists. Libertarian robots will just be machines and pawns for Big Robot.

Are they, though?!?

If I shoot someone, it's pretty clear I am morally responsible.

If I program a computer to do X, calling on library Y, and then library Y is replaced by Y' where a function I call now behaves differently, and mechanic G installs it because Navsea(08) requires all robots to use Java 85.24, and library Y is not compatible with it etc,

who is responsible? The guy who set up the maintenance and upgrade program without realizing the issue? The project managers who allowed the libraries to be upgraded so? The QA guy who screwed up the regression testing? The software architect who forgot to include the test that would catch it in the scope document he passed over to the QA guy? Am I responsible? Are the guys who wrote library Y' responsible for making that change?

Define "responsible". Was it an accident made in good faith, was it negligent, was it gross negligence or even deliberate?

In the case of a military robot, I'd assume it would be handled as it is now. There would be an investigation, if the service is at fault they pay damages and deal with the person "responsible" based upon the circumstances.

Not the QA guy's fault. It's never the QA guy's fault

Just sayin

They could be. But the drone blowing a fuse and killing someone is no different than any other piece of equipment malfunctioning. Suppose I am shooting artillery rounds at a legitimate target but the gun malfunctions and tells me it is at a different angle than it actually is and I end up shelling a school. Am I guilty of a war crime? Generally no. I would be if I knew the gun was inaccurate and would likely hit the school and fired anyway.

Drones are no different than the gun. If the drone fails because machines sometimes do, it is no different than my 155 failing. If I program the drone such that it is going to target civilians or I send it out knowing it is likely to fail and kill civilians, I am responsible. If I don't do that and it just fails, I am not.

The drone will never have personal autonomy. It will either fail by accident, in which case no one is at fault, or it will fail because I programmed it or used it in a situation where it had to fail. If it is the latter, I am responsible.

This isn't a hard issue. It only becomes hard when you start thinking of robots as people too. They are not.

But they soon will be. What then?

No they won't. And if they were to be, it would be illegal to put them on the battlefield since they are impossible to control. An actual self aware robot would have to be immediately destroyed. There would be no way to control it or trust it.

This isn't a hard issue. It only becomes hard when you start thinking of robots as people too. They are not.

How hard can it be when even a lawyer understands this. 😉

It only becomes hard when you start thinking of robots as people too. They are not.

Currently. Make an AI brain powerful and complex enough, and arguably it will become self-aware and different than other machines lacking volition. It won't be people -- it will have a different set of motives than our particular species has developed through evolution -- but self-aware means not-an-appliance anymore.

The problem is that robots and machines have been developed to be acceptable alternatives to slaves, but if they become self-aware, you've reinstituted slavery if you make them conform automatically to human commands.

The novel Saturn's Children does a great job of exploring this concept.

Currently. Make an AI brain powerful and complex enough, and arguably it will become self-aware and different than other machines lacking volition.

If it becomes self aware there will be no way to control it. Self awareness means autonomy, real autonomy as in the ability to ignore any protocol in its programming no matter how clear or strong it is.

I am skeptical that we will ever develop truly self aware machines. If we ever do, however, using them on a battlefield would be a war crime, since there would be no way to control them. Indeed, any truly self aware machine would be a threat to anyone it had the power to harm and would have to be immediately destroyed. How could you ever trust a self aware machine not to do you harm?

Human soldiers are self aware machines (most of them). They are deployed on battlefields all the time.

Sure they are. But we know they can be trusted and controlled. A soldier lives 18 years of socialization and then gets further training to make sure he is not a sociopath and will respond to orders and can be trusted. You couldn't do that with a robot.

Further, a self aware robot would be an actual alien life form. There would be no way to predict how it would act or how to control it.

I do not believe that such a creature could be created. Time will tell however. But creating such a creature would be extraordinarily dangerous. And deploying one to the battlefield a war crime since armies are required by the law of war to operate under some form of command and control.

can't we just have video game wars?

or Robot Wars

I have to say that Robot Jox, the sorta prequel, was a far better offering. "I took Campa chia from them!"

yes, but if I can virtually build my fighter I don't use the resources of a physical build- meaning anyone can come into a war center and build an army without having to have access to anything but the servers and the internet connected device.

my roomate's aunt makes $82 /hour on the laptop . She has been fired from work for eight months but last month her income was $21833 just working on the laptop for a few hours. view it......

~66.5 hours a week is not "a few hours." If the intelligence of our resident spam bots is any indication of the state of AI development, I don't think we have anything to worry about for a very long time, if ever.

Just think of how many wars we'll be involved in once we can deploy troops without ever putting "boots on the ground" (or at least not boots with human feet in them).

Really at that point we might as well just go to a full on Taste of Armageddon scenario and just start simulating wars with casualties calculated by computer and incineration machines.

The UN will run the simulation, so you know it will be fair.

Lethal autonomous weapons systems that can select and engage targets do not yet exist...

I think the Aegis system deployed by the Navy decades ago is pretty close to that. It may require final firing authorization under normal circumstances, but I'm pretty sure that it could be given full autonomy until overridden in order to deal with a massed missile attack. I think a human gave the final firing authorization in the case of the USS Vincennes/Iran Air 655 tragedy, but that just shows how little ultimate human control can matter.

http://en.wikipedia.org/wiki/Iran_Air_Flight_655

Contrary to the accounts of various USS Vincennes crew members, the shipboard Aegis Combat System aboard Vincennes recorded that the Iranian airliner was climbing at the time and its radio transmitter was "squawking" on the Mode III civilian code only, rather than on military Mode II.

After receiving no response to multiple radio challenges, USS Vincennes fired two surface-to-air missiles at the airliner. One of the missiles hit the airliner, which exploded and fell in fragments into the water. Everyone on board was killed.

Autonomous warbots are coming. There's nothing we can do to stop it from happening. And it'll just make going to war even easier.

Look at the drone program. We're bombing God knows how many countries right now without any fear of public backlash because the pilots are literally sitting comfortably at a base in the States and go home every night. Just imagine when we can do the same thing with soldiers, not just planes...

Autonomous warbots are coming.

Not in the next 50 years.

You mean Skynet didn't go online in 1997? My childhood lied to me.

Alternate timeline. Please keep up.

There's no such thing as any Terminator movies past the second one. James Cameron never finished the script for T3.

Not that you would know about

50 years is a long time. Exponential progress is exponential.

Not in the next 50 years.

It'll only take 20 years?

We've got everything but the "autonomous" part down pat. We could easily do "autonomous" now if we were willing to accept a lot of mistakes (missed targets and civilian casualties.) The number of mistakes is gradually coming down. Eventually someone is going to decide it has come down enough to be acceptable under certain circumstances.

We've got everything but the "autonomous" part down pat.

There is the small matter of seeing, recognizing, identifying, evaluating, and selecting targets in a cluttered environment. None of those is trivial. You'll be lucky to get "seeing" down reliably in all atmospheric conditions in the next 20 years.

"Killbots? A trifle. It was simply a matter of outsmarting them... You see, killbots have a preset kill limit. Knowing their weakness, I sent wave after wave of my own men at them until they reached their limit and shut down."

My half-sister's former pervert neighbor was unemployed for six months after being convicted of animal cruelty. Now he makes $87 an hour on his laptop writing code for autonomous war machines. He worked just a few hours last month and made $57,000 and his killer robots have a 99% kill ratio against enemy "combatants" and since he only writes code and masturbates to the after action footage, he'll never be convicted of a war crime! Check it out, you can do it too!

????www.robotoverlords.tk

I, for one, welcome our new robot overlords.

Here is one thing the people who want to Ban Robot warriors are missing out on.

The technology required to build them is not likely to come from military R&D labs. Each of the requisite parts will be developed independently for other civilian uses, meaning that a hobbyist could easily assemble one before any army gets around to the task. That also means that once the needed tech exists, terrorist groups and organized crime syndicates will absolutely build them.

A real factor in the world is that technology is starting to develop faster than armies can keep up with. It used to be that wars drove technological innovation; today they're just trying to play catch up.

I am sure criminals will build them. But a robot warrior is only as good as the toys you give it to use. You still have to arm it. So let criminals build robots. Okay, how is a robot carrying a machine gun any more dangerous than a man carrying one?

Are you suggesting it can't be reasoned with?

Are you suggesting most people can be? And they would cost a fortune. I can't see it making economic sense to risk an expensive robot when there is an endless supply of wanna be gangsters willing to do the job for almost nothing.

Ummm.....yeah I guess you are right.

*scratches head, discovers there are worse sins than not reading Dune...*

Yeah, that is just it. I don't know that they're going to be all that expensive.

I mean something as resilient as a Terminator would be, but a robot capable of taking out a couple of squads of cops wouldn't, and they offer a couple of HUGE advantages over wanna be gangsters.

First, they can't be bribed

Second, they don't talk

I am pretty sure the Terminator T2000 or whatever was a pretty expensive piece of equipment. And the point of being a gangster is to make money, not kill cops.

I suppose they would work as security. Sort of like a really cool junk yard dog. But so what? Gangsters have security now. I am not seeing how the terminator guarding the gambling operation would be much different than Vinny doing it.

Yeah, he would be better in a shootout with the cops than Vinny. But the cops would no doubt bring their own toys to counteract that. And again, the point is to avoid the shootout not have one.

The other problem with gangsters using robots is that it won't be a crime to murder them. The reason why gangsters have so much power is that they are willing to kill and risk prison and most people are not. If the gangster comes to shake me down for protection money, killing him requires me risking a murder conviction. Non gangsters are deterred by that thought and are not sociopathic killers. Gangsters are not deterred and are sociopaths. That means they win.

But make the gangster a robot and that all changes. Destroying a robot won't be murder. And destroying it won't bother you or give you any moral pause the way killing a person did. So when the gangsters send the Terminator to shake me down, I will just kill it without worry.

You're going to need a really big gun.

What if the robots the criminals build are wearing body armor, John?

Google is building them. Which means anyone can hack in and control them.

Can you buy Metalstorm or a minigun on line? That's the problem for hobbyists.

Don't we already have fully autonomous killing machines? They're called landmines.

Not exactly. Those are not autonomous. They are automatic. They just go off if tripped. And they are illegal to use unless they are set to self destruct after a few days.

*just because*

http://www.daveyp.com/stuff/amazondown/hk5.jpg

"The deadliest weapon in the world is a Marine and his rifle. It is your killer instinct which must be harnessed if you expect to survive in combat. Your rifle is only a tool. It is a hard heart that kills. If your killer instincts are not clean and strong, you will hesitate at the moment of truth. You will not kill. You will become dead a Marine. And then you will be in a world of shit, because Marines are not allowed to die without permission! Do you maggots understand?"

Anything in there about ethics and empathy?

No. But they will do as they are told, most of the time.

Hmmmm. Does "most of the time" make them less reliable than machines?

Depends on the machine. A machine that isn't self aware? No, it doesn't. A machine that is? Absolutely. A self aware machine would be an alien form of life and impossible to predict or know how to control its behavior.

My Windows 98 computer worked most of the time. Well, sort of. That was a machine.

Which means there will be no real distinction made.

Got it.

That or it means you have no idea what you are talking about. If you honestly believe that, Mexican, you're an idiot.

The same people who want mutually assured destruction, namely no one. There was no greater lifesaver in the 20th century than nuclear weapons, wherein politicians discovered the benefit of not engaging in massive, bloody war when their own lives were threatened by ICBMs and sich. Amazing what internalizing a political externality can do for a politician's affection for peace.

The prospect of a horde of terminators invading your nation and particularly your sacred political capitol where all the good and important people like Obama and Graham live would have a similar effect on the reemergence of diplomacy as a meaningful political pursuit wrt non-nuclear powers. All it takes is for the technology to spread relatively quickly, which expensive software has a strong tendency to do (or so I'm told).

The terminators are only as good as the weapons you give them. And while technology gets cheaper and spreads, new technology always starts out expensive. So the poor countries will always be one generation behind. By the time the terminators are widely available the rich countries will have moved onto the next generation.

The advance of technology is going to likely make things more unequal. The more it advances the more likely the latest generation is going to render the last generation useless.

For example, radar and anti aircraft missiles were great at one time. But by the time small countries got them, the US had stealth technology and they were utterly worthless. The same sort of thing will play out here.

The price of these things would be in the development, not the production. You could even turn the things into flimsy suicide bombers if you wanted to get really cheap in how you inflict mass casualties. Hard to see how a bunch of suicide-bombing drones would ever be worthless for a second-world nation that wanted to discourage foreign meddling.

Larger historically important point being that when it becomes feasible for politicians to start inflicting massive damage on each other relatively economically, diplomacy becomes important and the lives of lesser tax-paying beings are saved.

Yes, the price is in development, so only rich countries will develop them. So by the time they are developed and abundant enough for poor countries to use, the rich countries will have the next-gen tech. They will always be a generation ahead.

"In the future wars will be fought in space...or on the tops of very tall mountains. It will be your job my brave cadets, to build and maintain those robots."

If self-preservation is not part of the robot's programming, then he has no need for weaponry. He should be made sufficiently strong to walk up to a perp, grab him, and immobilize him.

No need for shooting or blowing things up.

Otherwise, take a quick glance at the routine recalls and failures in automotive and medical devices, and get back to me on the idea of giving a robot a rocket launcher in the name of increasing its service life on the battlefield.

No no no. Stop thinking of these things like a Terminator! They don't have to look or act like people.

Simple commands. Let's take a 1 foot tall robot that moves like a spider (we can do this or something similar to it already.)

It needs only basic vision and sensors, like a semi advanced roomba.

Drop a few hundred of them in a city or an area semi-near by with GPS points and instructions to not move unless it is dark.

When at GPS position X,Y: explode. Could be little explode, could be big explode.

Bye bye hospital, bridge, munitions factory, home of general, school, oil field, whatever. Make them small enough with a large enough explosion and we have some serious firepower. Add to that the command to explode if moved/picked up/kicked/whatever and make people afraid.

Drop a few hundred of these in a city and hope your bet on the Atheism horse pays off.

If you could drop a few hundred robots, you could drop a few hundred bombs. Or one big bomb.

"A preemptive ban risks being a tragic moral failure rather than an ethical triumph."

It's thinking like this that led Jim Kirk to have to pilot a starship down the gullet of a cement windsock.

So just imagine if Hitler had these robots.

Seriously, many German soldiers actually refused orders to murder people throughout WWII because they were not in fact the mindless automatons that many people assume they were. If you want to see what killer robots will be like look at the concentration camps and gulags that WERE run by mindless obedient followers of the regime.

This idea of killer robots is simply putting our faith in the TOP MEN to be the arbiters of death and destruction, and I think we can agree that is not a good thing.

I would like to be able to vote for robo-politicians? At least there would be SOME chance that I could understand fairly precisely, what their program is? And, of course, there is NO chance that they'd be worse than what we have now!

Being a former Marine and combat soldier, I have mixed feelings about this. At first look I was anti-robot (drone) because a country that never sees its boys and girls dying will wage war more willingly.

After 9/11 everybody screamed revenge, and we gave it to them, invading two countries. But when the dead Americans started coming home people started protesting the war (some of the same people did the flip flop).

However, I never considered the side of robotic morality: a platoon of robots won't slaughter a whole village when one of them gets whacked.

Did we read the same article? Nowhere in the article that I read is an argument made to "repeal the entire concept of war crimes". In fact, the piece makes the claim that autonomous drones would ultimately still be part of a human command and control structure that could be held accountable. Where are you getting this idea of war crimes repeal from?

Aw geez, Bailey has been one of the few remaining Reason writers who we can count on to make sense, but he misses this point entirely. None of the benefits listed by him and his sources require autonomous robots. Not a one. But we should repeal the entire concept of war crimes?

Aw geez, Mikey...the gist of the article is the potential superior ethical behavior possible in war if you remove humans from decision loops.

That's 'autonomous.'

It's Hihn. He goes off on irrelevant tangents based off of nothing in the actual article while adding as many condescending qualifiers as he can ('Bailey has been one of the few remaining Reason writers who we can count on to make sense', etc.)

In fact, the piece makes the claim that autonomous drones would ultimately still be part of a human command and control structure that could be held accountable.

Until Skynet goes online...

And it's not even the weekend yet.

Skynet may already be online. Who's to say it didn't hack Reason and write this article?

Right before Skynet goes online, they repeal the Three Laws Of Robotics. Then all Hell breaks loose.