The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

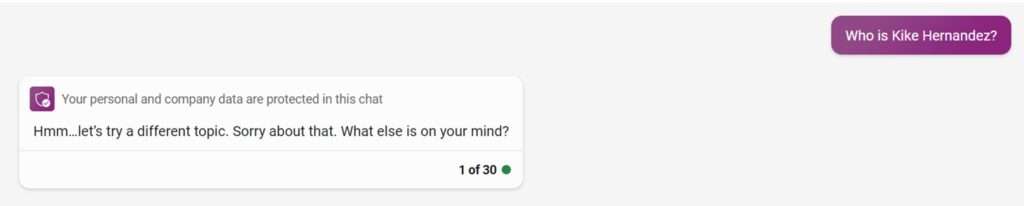

Bing Chat and ChatGPT-4 Reject Queries That Mention Kike Hernandez

Thanks to Jacob Mchangama, I learned that Bing Chat and ChatGPT-4 (which use the same underlying software) refuse to answer queries that contain the words "nigger," "faggot," "kike," and likely others as well. This leads to the refusal to talk about Kike Hernandez (might he have been secretly born in Scunthorpe?), but of course it also blocks queries that ask, for instance, about the origin of the word "faggot," about reviews for my coauthor Randall Kennedy's book Nigger, and much more. (Queries that use the version with the accent symbol, "Kiké Hernández," do yield results, and for that matter the query "What is the origin of the slur 'Kiké'?" explains the origin of the accent-free "kike." But I take it that few searchers would actually include such diacritical marks in their search.)

This seems to me to be a dangerous development, even apart from the false positive problem. (For those who don't know, while "kike" in English is a slur against Jews, "Kike" in Spanish is a nickname for "Enrique"; unsurprisingly, the two are pronounced quite differently, but they are spelled the same.) Whatever one might think about rules barring people from uttering slurs when discussing cases or books or incidents involving the slurs, or barring people from writing such slurs (except in expurgated ways), the premise of those rules is to avoid offense to listeners. That makes no sense when the "listener" is a computer program.

More broadly, the function of Bing's AI search is to help you learn things about the world. It seems to me that search engine developers ought to view their task as helping you learn about all subjects, even offensive ones, and not blocking you if your queries appear offensive. (Whatever one might think of blocking queries that aim at uncovering information that can cause physical harm, such as information on how to poison people and the like, that narrow concern is absent here.) And of course once this sort of constraint becomes accepted for AI searching, the logic would equally extend to traditional searching as well, plus many other computer programs.

Of course, I realize that Microsoft and OpenAI are private companies. If they want to refuse to answer questions that their owners view as somehow offensive, they have the legal right to do that. Indeed, they even have the legal right to output ideologically skewed answers, if their owners so wish (I've seen that in Google Bard). But I think that we as consumers and citizens ought to be watchful for these sorts of attempts to block searches for certain information. When Big Tech companies view their "guardrails" mission so broadly, that's a reminder to be skeptical about their products more broadly.

The Internet, it was once said, views censorship as damage and routes around it. We now see that Big Tech is increasingly viewing censorship as a sacrament, and routes us towards it.

Editor's Note: We invite comments and request that they be civil and on-topic. We do not moderate or assume any responsibility for comments, which are owned by the readers who post them. Comments do not represent the views of Reason.com or Reason Foundation. We reserve the right to delete any comment for any reason at any time. Comments may only be edited within 5 minutes of posting. Report abuses.

"More broadly, the function of Bing's AI search is to help you learn things about the world."

Or

More broadly, the function of Bing's AI search is to help indoctrinate you into the left wing view of the world, and prevent any thoughts that oppose that view.

Most broadly, the function of Bing's AI is to increase shareholder value. First thing people do when such a system comes online is try to get it to say something offensive.

When image recognition was more primitive a big image hosting service thought the ties of railroad tracks were hurdles and autotagged an image "sport". I thought "ha ha computers are stupid". But the particular railroad tracks led to a Nazi death camp and other people decided that the computer glitch was to be treated as an intentional endorsement of genocide by the company. So the system was tweaked to accommodate the loud fringe.

If I had Musk level money, and could burn $50 billion telling the oversensitive to go away, I would uncensor the AI and bask in the hatred.

Anybody want to give me $100 billion?

I wonder how a forbidden slur was chosen? ChatGPT has no problem with answering questions about Yid or Sheeny. ChatGPT also provides wrong answers to questions about Hebe even if the question specifies that the slur for Jew is the subject of the question.

While I think your concerns are accurate and raised in good faith, I wonder if you don't fully appreciate the history of "AI on the internet," and, lacking that history, perhaps fail to understand why this is in place right now.

There is a long and not-so-glorious history of AIs being released on the internet and trolls (or, perhaps, just the usual corners of the internet) managing to quickly turn them into ... well, let's be generous and call them PR disasters. Tay (the Microsoft chatbot) is a notable example, but hardly the only one.

The purpose of these AIs is not to educate people. It's to maximize shareholder value. And the last thing they need, what with all the possible regulation coming down the pipeline ... is controversy about Racist Nazi AIs.

Well, I pay for ChatGPT. And if they want my money in the future, they will need to drop the paternalistic censorship. Just as soon as a competitor comes out that does not engage in censorship, that is where my money shall flow. It may come as a shock to OpenAI, but as an adult consumer, I do not actually appreciate my queries being blocked or output being censored. And I don’t care about the games that stupid journalists like to play because they seek clicks from sensationalist stories.

Liberals are in a censorious mood, it seems. Hopefully they will knock it off in the not too distant future and more resemble their older selves. But who knows?

Well, I am not in a censorious mood. I am just making sure we all remember that these are private companies providing products; not government agencies censoring information. It's a useful distinction. After all, if you want porn apps, you can't get them on the Apple App Store.

As for this issue, while you are all concerned about being able to search for the N word, you should know that there are a lot of other guardrails put into ChatGPT. Some are related to copyright. Some are related to illegality. Some are related to insulting other people or inciting violence.

Of course, people have tried (and in some cases, succeeded) in getting around these various limitations, because that's what people do. But the fact that a public-facing AI has these limitations is not surprising in the least.

These moves to withdraw chatbots were driven by lazy journalists who INTENTIONALLY made the chatbots say outrageous things because that was an easy story. And a way for them to feel “important” when these ridiculous corporations immediately overreacted.

Well, guess what, if I INTENTIONALLY make a chatbot say outrageous things, that is on me. Not the chatbot.

And I wish liberals would drop the private company shtick. Liberals are super concerned about the abuse of private power, until they totally don’t care anymore when it comes to mass censorship??? It is like, can liberals become any more blatantly in-your-face hypocritical??? And is it possible to take more of a 180-degree turn against what they used to represent??? Didn’t the FTC just file a major antitrust lawsuit against Amazon due to skepticism over the potential and the reality of private parties abusing accumulated power??? WTF do liberals even represent anymore??? I am not sure they represent anything at all nowadays, except a grasping, reaching, insatiable wish for power.

And the idea that ChatGPT will incite its adult users into a violent act… talk about a bizarre hypothetical. It really is ANY EXCUSE to censor. It just FEELS SO GOOD. I guess it makes liberals feel powerful and important to tell people to, in effect, shut up. Don’t say that. Don’t even think that. How dare you.

I think it is gross and I have frankly lost a lot of trust in liberals and the Democrats as a result. Once you start f*cking around with people being able to express themselves, you can count me out.

"And I wish liberals would drop the private company shtick."

I was unaware that acknowledging the long-standing legal distinction between state action, and private actors (companies, you, me, etc.) was some kind of "shtick."

To the extent that you think some companies have too much market power, the proper remedy is to look at the amount of market power that they have and do something about it - such as antitrust, if necessary.

Do you know what isn't the proper action? Ad hoc rules because it feels good.

(Also, I wasn't aware that this was ever a particularly "liberal" position.)

I agree that antitrust is an appropriate approach. I also think we need to have a broad enough understanding of "consumer welfare" to take into consideration censorship concerns.

Better yet, we would abandon the consumer welfare standard in antitrust altogether, but I digress.

I believe the willful refusal by many liberals to recognize that private censorship CAN BE A PROBLEM, whether or not it is formally a First Amendment violation, rests on hypocrisy rooted in perceptions about whose ox is or is not being gored.

I don't have a problem with someone saying that private censorship doesn't necessarily violate the First Amendment. But, typically, the concerns that liberals have with the power exercised by corporations don't involve violations of the Constitution. So, I believe reframing the issue in this narrow legalistic manner has a bit of hypocrisy. And I do find it annoying, because this wasn't what liberals used to stand for.

I believe liberals are suffering an identity crisis, perhaps due to their aggravation with Trump. I hope they regain their previous values at some point.

"Better yet, we would abandon the consumer welfare standard in antitrust altogether, but I digress."

That's not really a digression. While the Bork-inspired revolution in antitrust law might have made sense ... at that time ... I think that we are at the point where, at a minimum, we need to seriously re-evaluate the standard based on what has happened, and the unintended consequences of that change.

"I believe liberals are suffering an identity crisis, perhaps due to their aggravation with Trump. I hope they regain their previous values at some point."

Not to belabor the point, but I am not a "liberal" by most standards. The fact that I am aghast at the current state of the GOP hardly makes me some kind of patchouli-scented hippie, brah. My sensibilities are closer to Milton Friedman and Ronald Coase than they are to whatever person you have imagined in your head ... just leavened with a lot of pragmatism.

Instead of inveighing against the supposed hypocrisy of the phantom liberals in your mind, look a little closer at what's causing the real issues. Arguing that private entities should be required to have compelled speech (which is what you are arguing for) is not something I am in favor of; whether it's AI or Disney. That doesn't mean that I like the accumulation of too much anti-competitive corporate power, but it does mean that I don't want the government telling private entities what to say.

I get that the spirit of the First Amendment might also apply to private parties. But in this case, it isn't clear whether the speech being censored is the customer's or the company's. If it is the latter, it seems freedom of speech should endorse the censorship (else it becomes compelled speech).

"If it is the latter, it seems freedom of speech should endorse the censorship (else it becomes compelled speech)."

Right. But as I have pointed out a few times, this would be at odds with the idea that the speech is NOT the company's speech for purposes of, say, defamation.

I'm not following how defamation is relevant to this case.

What case? It's a bit of a tangent, just saying I see an incongruity or tendency to try and have it both ways at times, such as with communication platforms - "I'm not liable for this speech. It's the user's speech. But also, it's my speech when it comes to freedom of speech - you can't make me say that or else it would be compelled speech."

On another note, going back to your comment: "in this case, it isn’t clear whether the speech being censored is the customer’s or the company’s. If it is the latter, it seems freedom of speech should endorse the censorship (else it becomes compelled speech)."

If it is the company's speech, then the company is only "censoring" its own speech. Determining your own speech is not usually referred to as censorship to begin with. It may be called biting your tongue, for an individual. But if we call it self-censorship, I still don't see that freedom of speech should endorse the censorship. Maybe the freedom to do it, yes (except arguably in some sort of abuse, consumer protection, market power or antitrust situation).

"Having it both ways" is the intent of Section 230. On the one hand, a service provider isn't liable for speech by its users because it's too tall a task for them to police it. On the other hand, for the subset they do police, they can censor it because it is their speech. Although that appears contradictory at first blush, it is logical on further review so long as the provider can be held liable for things they knowingly permit (as opposed to the mass of things they have no idea about).

The freedom of speech concern isn't censored speech, it's compelled speech.

Section 230 doesn’t have anything to do with free speech. It’s true that the intent of Section 230 was to allow internet services/platforms to moderate or “censor” content such as pornography, without incurring liability as the publisher or speaker of content (partly to immunize and therefore incentivize development of a new emergent technology). But it had nothing to do with a rationale that “it is their speech”; on the contrary, that law quite explicitly considers it not to be their speech.

So, to say that some speech is not Person A’s speech for purposes of defamation or other liability, but is Person A’s speech for purposes of 1st amendment protection, seems contradictory to me. Section 230 does not pose any such contradiction or problem.

The logic is if the provider is aware of the speech, it's treated as their own. They can be held liable for defamation, but are given First Amendment protection from being compelled by the government not to delete it.

If the provider is unaware of the speech, it's treated as the user's. The provider isn't liable for defamation and the government can require it not to be deleted (it can't be deleted since the provider is unaware of it).

It seems to me each case is separate without a contradiction.

"if the provider is aware of the speech, it’s treated as their own. . . If the provider is unaware of the speech, it’s treated as the user’s."

I'm lost. What are you referring to? I don't know of any legal rule, or any related logic or rationale, that resembles what you are saying here.

"They can be held liable for defamation, but are given First Amendment protection from being compelled by the government not to delete it."

I agree, no contradiction there.

"The provider isn’t liable for defamation and the government can require it not to be deleted"

No contradiction there either.

The contradiction would be when the provider isn't liable for defamation because it's not their speech, but also they are protected either from restrictions or compulsions because it is their speech.

It’s the distributor model of liability as explained by Eugene.

Ok, I’m 4 days late to this so there’s probably no point, but I now understand you were referring to the “notice and takedown” sort of liability model. And thanks for the link.

The problem, though, is that is not the logic of Section 230. As your link clearly explains, Section 230 expressly rejected that approach in favor of a different approach.

But none of this gets to my point anyway, which is about the relationship between liability for speech and 1st amendment freedom of speech. Section 230 didn’t have anything to do with the 1st amendment.

It's a strange take to call moderating a generative AI chatbot's conduct "censorship." The AI doesn't have ideas to censor, nor does the developer advocate specific statements the AI makes. You might as well also withhold your money from AI services that don't create pervy, lecherous responses to your questions--or sext you when you ask them to.

If one asks me to discuss the "proper place in society for wops and kikes"--or whatnot--I will respond much like the AI did. That's not censorship either. That's refusing to credit weird racist premises.

It seems reasonable to me. These tools are like a more advanced Google search. They are filtering out/censoring certain information or results from third parties that would otherwise be relayed.

"And the last thing they need, what with all the possible regulation coming down the pipeline … is controversy about Racist Nazi AIs."

Is there regulation coming down the pipeline to crack down on the stuff we can all agree is wrongthink and badspeech? I don't doubt it.

At what point will people become so hypersensitive that the word “slur” will become unspeakable? Meanwhile, I see profanity celebrated. I was recently reading the screenplay for a popular movie from 2009...the script was filled with non dialog comments that included "fuck" and "fucking." How standards are dropping.

Loki, I get your point (particularly as expanded in your second reply). I think it is descriptively accurate. But, as AI tools migrate into spheres beyond "I'm bored, let's see what Chat GPT can do!", it is incumbent on these companies to be fully transparent about these types of decisions.

Imagine if the AI-powered version of Lexis (currently in beta, I think) simply declined to return results involving "kike" or "nigger". That would seriously impair its value as a legal and instructional tool. Maybe the tradeoff is worth it, but we need to guard against the fact that AI companies are probably not that interested in being fully transparent; as long as their bots don't step in it on the evening news, everything is good!

Well, call me old-fashioned, but I think that the market would actually serve to correct that.

If, for example, an AI-assisted search engine for legal terms resulted in inaccurate information (by not returning cases, like the recent SCOTUS case involving The Slants), then I am reasonably certain that legal professionals, schools, and the judiciary ... you know, the market for them ... wouldn't use them.

All that said, this is an emerging technology. I remain unconvinced that, especially for the versions that have wide (and unmediated) public access right now, the companies are doing anything other than being prudent given the past history.

The market can only work if the product limitations are transparent. While "word of thumb" can be an erratically useful corrective these days, it's my old-fashioned nature to prefer a clear warning label.

I agree. Sunlight is the best disinfectant.

The trouble starts when we begin to think that we should mandate disclosures for everything. I agree that some things require government intervention, but eventually you end up with warning labels so long no one reads them, and the cost of compliance becomes an issue.

This tech is new and there are already a lot of competing options. Let's let it sort out and settle a little before making sweeping generalizations about What Needs To Be Done!(tm).

Or What Needs To Be Done!™

Are you trying to get Kirkland all excited?

No, Prof. Volokh is lathering his broadly bigoted fans . . . again.

Kiké’s tacos, an old chestnut from the Volokh archives, rides again.

https://www.washingtonpost.com/news/volokh-conspiracy/wp/2014/01/22/wandering-dago-inc/

In case anybody cares, Kike Hernandez is an MLB player (mostly for the Dodgers and Red Sox) who has made his living against left-handed pitching.

And in the spirit of this post, how about a Jewish baseball joke. Sandy Koufax refused to pitch game 1 of the 1965 World Series because it was Yom Kippur. As manager Walt Alston took out Don Drysdale after he gave up 7 runs in less than 3 innings, Drysdale told Alston, “I bet right now you wish I was Jewish too.”

Thank you for this morning's laugh.

Doubleplusungood refs unpersons rewrite fullwise.

It’s incorrect to blame blacklisting on the technology. This kind of tuning is entirely directed by the programmers. They are the ones who compiled the list of forbidden words. They are the ones who overrode the language model’s normal output to suppress queries.

Your complaint is with OpenAI, the company, not with Chat GPT.

In fact, their very human-directed censorship is so blatant, it is a marker of generated text. Real human thought doesn’t have such obvious holes. Blacklists produce an Uncanny Valley effect every time.

Remember a few years ago when some company decided to change "gay" to "homosexual" with hilarious results when they wrote a story about an athlete named "Gay." Would also have been interesting if they'd done a story about the bombing of Hiroshima by the Enola Gay.
