The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

Can a Controversial User Really Get Kicked off the Internet?

In theory, yes; in practice, perhaps soon.

(This post is part of a five-part series on regulating online content moderation.)

When it comes to regulating content moderation, my overarching thesis is that the law, at a minimum, should step in to prevent "viewpoint foreclosure"—that is, to prevent private intermediaries from booting unpopular users, groups, or viewpoints from the internet entirely. But the skeptic—perhaps the purist who believes that the state should never intervene in private content moderation—might question whether the threat of viewpoint foreclosure even exists. "No one is at risk of getting kicked off the internet," he might say. "If Facebook bans you, you can join Twitter. If Twitter won't have you, you can join Parler. And even if every other provider refuses to host your speech, you can always stand up your own website."

In my article, The Five Internet Rights, I call this optionality the "social contract" of content moderation: no one can be forced to host you on the internet, but neither can anyone prevent you from hosting yourself. The internet is decentralized, after all. And this decentralization prevents any private party, or even any government, from acting as a central choke point for online expression. As John Gilmore famously said, "The Net interprets censorship as damage and routes around it."

But while this adage may have held true for much of the internet's history, there are signs it may be approaching its expiration date. And as content moderation moves deeper down the internet stack, the social contract may be unraveling. In this post, I'll describe the technical levers that make viewpoint foreclosure possible, and I'll provide examples of an increasing appetite to use those levers.

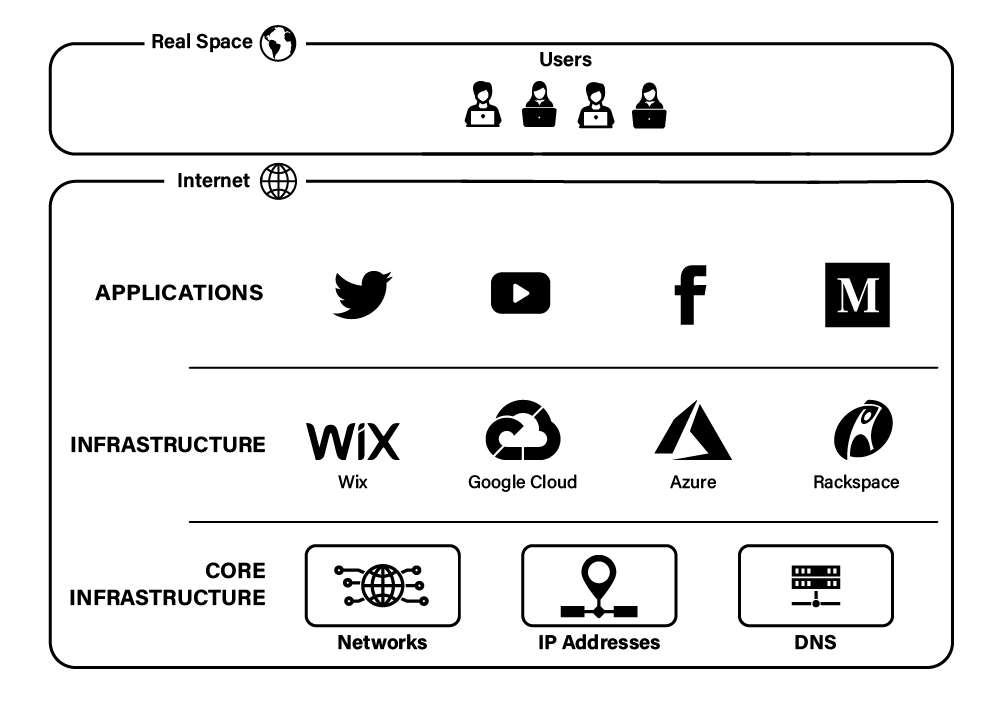

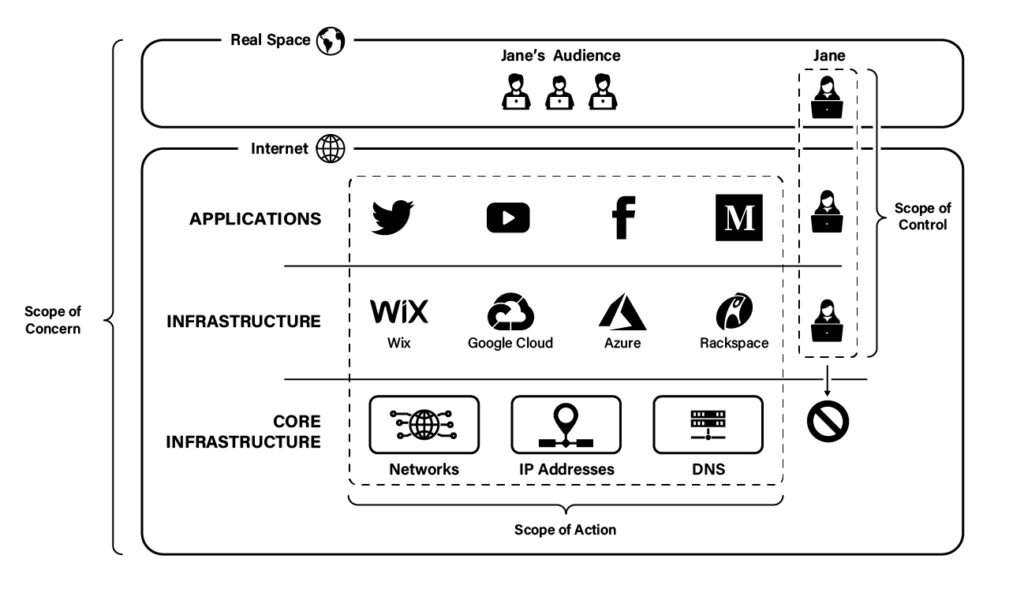

To illustrate these concepts, it's helpful to think of the internet not as a monolith but as a stack—a three-layer stack, if you will. At the top of the stack sits the application layer, which contains the universe of applications that make content directly available to consumers. Those applications include voice and video calling services, mobile apps, games, and, most importantly, websites like Twitter (X), YouTube, Facebook, and Medium.

But websites do not operate in a vacuum. They depend on infrastructure, such as computing, storage, databases, and other services necessary to run modern websites. Such web services are typically provided by hosting and cloud computing providers like WiX, Google Cloud, Microsoft Azure, or Rackspace. These resources form the infrastructure layer of the internet.

Yet even infrastructural providers do not control their own fate. To make internet communication possible, such providers depend on foundational resources, such as networks, IP addresses, and domain names (DNS). These core resources live in the bottommost layer of the internet—the core infrastructure layer.

The figure below depicts this three-layer model of the internet.

Classic Content Moderation

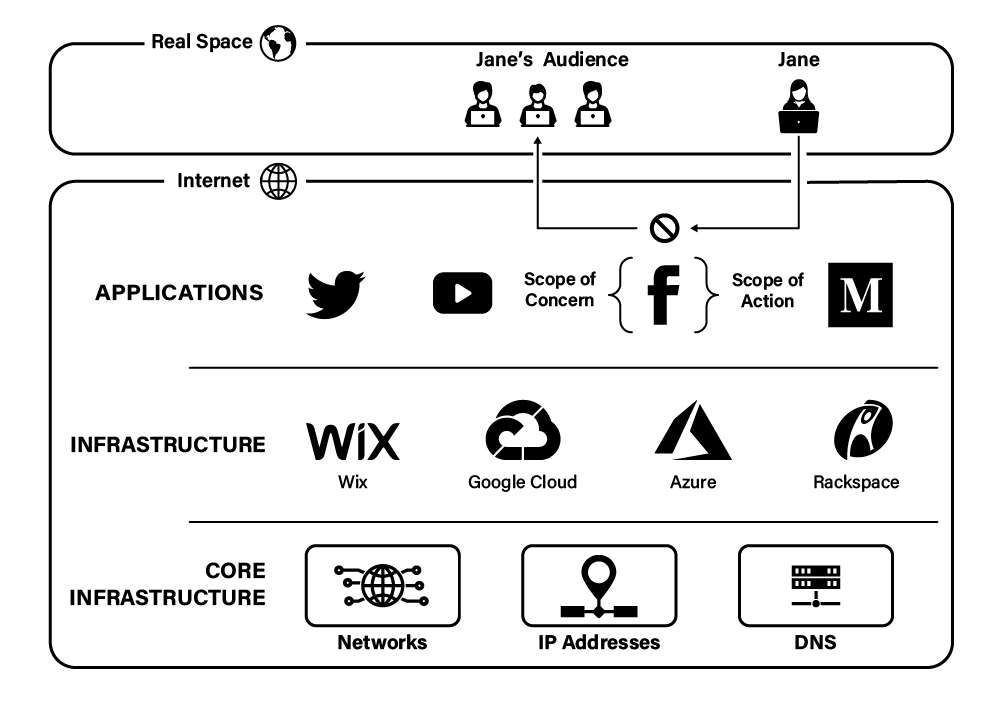

In the typical, classic scenario, when a website takes adverse action against a user or her content, it does so because the user has violated the provider's terms of service through her onsite content or conduct. For example, if a user—let's call her "Jane"—posts an offensive meme on her timeline that violates Facebook's Community Standards, Facebook might remove the post, suspend Jane's account, or ban her altogether.

Here we see classic content moderation in action, as defined by two variables: (1) the scope of concern and (2) the scope of action. At this point, Facebook has concerned itself only with Jane's conduct on its site and whether that conduct violates Facebook's policies (scope of concern). Next, having decided that Jane has violated those policies, Facebook solves the problem by simply deleting Jane's content from its servers, preventing other users from accessing it, or terminating Jane's account (scope of action). But importantly, these actions take place solely within Facebook's site, and, once booted, Jane remains free to join any other website. Thus, as depicted below, classic content moderation is characterized by a narrow scope of concern and a narrow scope of action, both of which are limited to a single site.

Deplatforming / No-Platforming

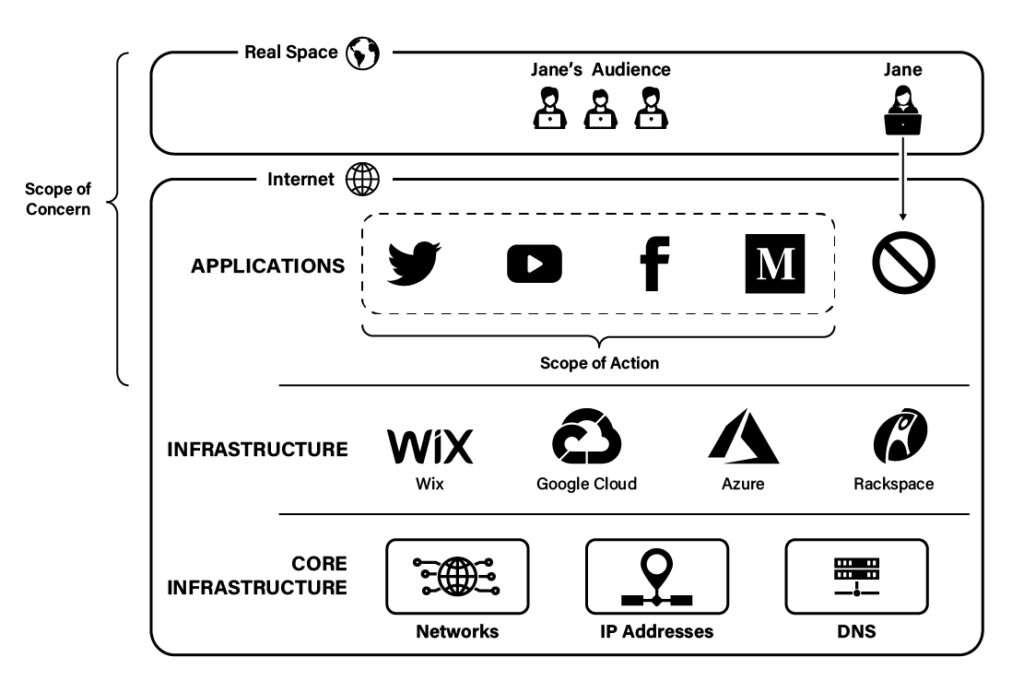

If Jane is an obscure figure, then the actions against her content might stop at classic content moderation. Even if Facebook permanently suspends her account, she can probably jump to another site and start anew. But if Jane is famous enough to create a public backlash, then a campaign against her viewpoints may progress to deplatforming.

As one dictionary put it, to "deplatform" is "to prevent a person who holds views that are not acceptable to many people from contributing to a debate or online forum." Deplatforming thus targets users or groups based on the ideology or viewpoint they hold, even if that viewpoint is not expressed on the platform at issue. For example, in April 2021, Twitch updated its terms of service to reserve the right to ban users "even [for] actions [that] occur entirely off Twitch," such as "membership in a known hate group." Under these terms, mere membership in an ideological group, without any accompanying speech or conduct, could disqualify a person from the service. Other prominent platforms have made similar changes to their terms of service, and enforcement actions have included demonetizing a YouTube channel after its creators encouraged others (outside of the platform) to disregard social distancing, suspending the Facebook and Instagram accounts of those accused (but not yet convicted) of offsite crimes, and banning a political pundit (and her husband) from Airbnb after she spoke at a controversial (offline) conference. Put differently, deplatforming expands the scope of concern beyond the particular website on which a user might express a disfavored opinion to encompass the user's words or actions on any website, or even the user's offline words and actions.

Moreover, in some cases, the scope of action may expand horizontally if deplatforming progresses to "no-platforming." As I use the term, no-platforming occurs when one or more third-party objectors—other users, journalists, civil society groups, for instance—work to marginalize an unpopular speaker by applying public pressure to any application provider that is willing to host the speaker. For example, Jane's detractors might mount a Twitter storm or threaten mass boycotts against any website that welcomes Jane as a user, chasing Jane from site to site to prevent her from having any platform from which to evangelize her views. As depicted below, the practical effect of a successful no-platforming campaign is to deny an unpopular speaker access to the application layer altogether.

Deep Deplatforming

"But Jane couldn't be booted entirely from the application layer," you might say. "Surely some website out there would be willing to host her viewpoints. Or, worst case, she could always stand up her own website."

As long as content moderation remains confined to the application layer, those sentiments are indeed correct. They capture the decentralized nature of the internet and the putative ability of any enterprising speaker to strike out on her own.

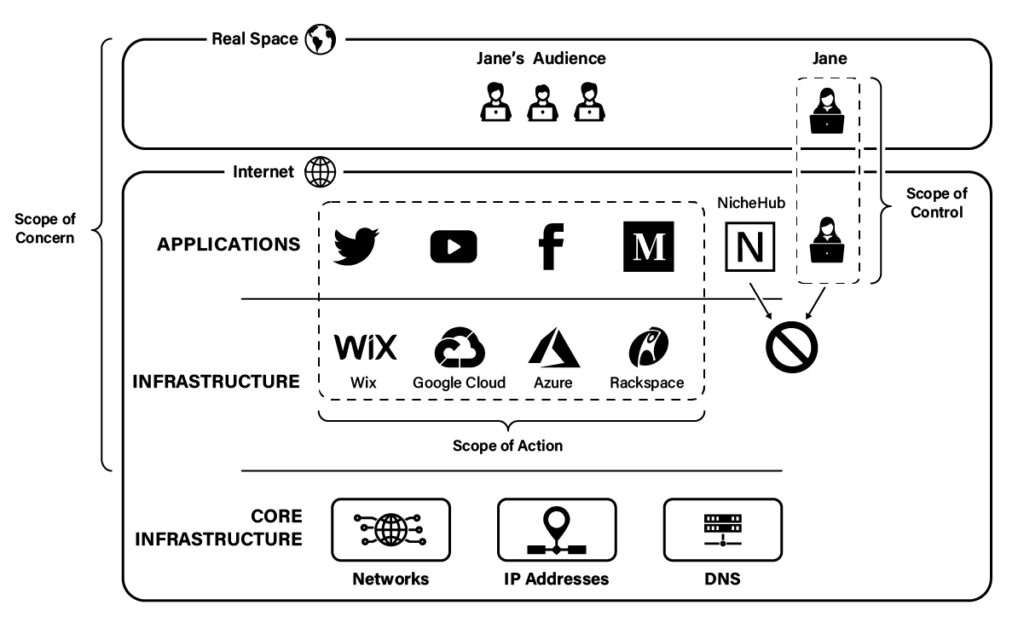

But they become less true as content moderation expands down into the infrastructure layer of the internet. As explained above, that layer contains the computing, storage, and other infrastructural resources on which websites depend. And because websites typically rely on third-party hosting and cloud computing vendors for these resources, a website might be able to operate only if its vendors approve of what it permits its users to say.

Thus, even if Jane migrates to some website that is sympathetic to her viewpoints or that is simply free speech-minded—"nichehub.xyz" for purposes of this case study—NicheHub itself might have dependencies on WiX, Google Cloud, Microsoft Azure, or Rackspace. Any of these providers can threaten to turn the lights out on NicheHub unless it stops hosting Jane or her viewpoints. Similarly, if Jane decides to stand up her own website, she might be stymied in that effort by infrastructural providers that refuse to provide her with the hosting services she needs to stay online.

These developments characterize "deep deplatforming," a more aggressive form of deplatforming that uses second-order cancelation to plug the holes left by conventional techniques. As depicted below, deep deplatforming vertically expands both the scope of concern and the scope of action down to encompass the infrastructure layer of the internet stack. The practical effect is to prevent unpopular speakers from using any websites as platforms—even willing third-party websites or their own websites—by targeting those websites' technical dependencies.

Perhaps the best-known instance of deep deplatforming concerned Parler, the alternative social network that styled itself as a free speech-friendly alternative to Facebook and Twitter. Following the January 6 Capitol riot, attention turned to Parler's alleged role in hosting users who amplified Donald Trump's "Stop the Steal" rhetoric, and pressure mounted against vendors that Parler relied on to stay online. As a result, Amazon Web Services (AWS), a cloud computing provider that Parler used for hosting and other infrastructural resources, terminated Parler's account, taking Parler, along with all its users, offline.

The above figure also introduces a new concept when it comes to evading deplatforming: the scope of control. As long as Jane depends on third-party website operators to provide her with a forum, she controls little. She can participate in the application layer, and thereby speak online, only at the pleasure of others. However, by creating her own website, she can vertically extend her scope of control down into the application layer, thereby protecting herself from the actions of other website operators (though not from the actions of infrastructural providers).

Viewpoint Foreclosure

"Ah, but can't Jane simply vertically integrate down another layer? Can't she just purchase her own web servers and host her own website?"

Maybe. Certainly she can from a technical perspective. And doing so would remove her dependency on other infrastructural providers. But servers aren't cheap. They're also expensive to connect to the internet, since many residential ISPs don't permit subscribers to host websites and don't provide static IP addressing, forcing self-hosted website operators to purchase commercial internet service. And financial resources aside, Jane might not have the expertise to stand up her own web servers. Most speakers in her position likely wouldn't.

But even if Jane has both the money and the technical chops to self-host, she can still be taken offline if content moderation progresses to "viewpoint foreclosure," its terminal stage. As depicted below, viewpoint foreclosure occurs when providers in the core infrastructure layer revoke resources on which infrastructural providers depend.

For example, domain name registrars, such as GoDaddy and Google, have increasingly taken to suspending domain names associated with offensive, albeit lawful, websites. Examples include GoDaddy's suspension of gab.com and ar15.com, Google's suspension of dailystormer.com, and DoMEN's suspension of incels.me.

ISPs have also gotten into the game. In response to a labor strike, Telus, Canada's second-largest internet service provider, blocked subscribers from accessing a website supportive of the strike. And in what could only be described as retaliation for the permanent suspension of Donald Trump's social media accounts, an internet service provider in rural Idaho allegedly blocked its subscribers from accessing Facebook or Twitter.

But most concerning of all was an event in the Parler saga that received little public attention. After getting kicked off AWS, Parler eventually managed to find a host in DDoS-Guard, a Russian cloud provider that has served as a refuge for other exiled websites. Yet in January 2021, Parler again went offline after the DDoS-Guard IP addresses it relied on were revoked by the Latin American and Caribbean Network Information Centre (LACNIC), one of the five regional internet registries responsible for managing the world's IP addresses. That revocation came courtesy of Ron Guilmette, a researcher, who, according to one security expert, "has made it something of a personal mission to de-platform conspiracy theorist and far-right groups."

If no website will host a user like Jane who holds unpopular viewpoints, she can stand up her own website. If no infrastructure provider will host her website, she can vertically integrate by purchasing her own servers and hosting herself. But if she is further denied access to core infrastructural resources like domain names, IP addresses, and network access, she will hit bedrock. The public internet uses a single domain name system and a single IP address space. She cannot create alternative systems to reach her audience unless she essentially creates a new internet and persuades the world to adopt it. Nor can she realistically build her own global fiber network to make her website reachable. If she is denied resources within the core infrastructure layer, she and her viewpoints are, for all intents and purposes, exiled from the internet.
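The dependency chain described above can be sketched in a few lines of Python. This is only an illustrative toy model, not a description of how any real provider or registry works: the layer names follow the three-layer stack used in this post, while the function `is_reachable`, the `SELF_HOSTABLE` set, and the provider counts are all hypothetical. The one substantive assumption, taken from the paragraph above, is that a speaker can run her own website and servers but cannot substitute her own domain name system, IP address space, or global network.

```python
# Hypothetical model of the post's three-layer stack; names and numbers are illustrative.
LAYERS = ("application", "infrastructure", "core")

# Assumption drawn from the post: a speaker can run her own website or servers,
# but cannot recreate domain names, IP address space, or global networks.
SELF_HOSTABLE = frozenset({"application", "infrastructure"})


def is_reachable(willing_providers, self_hosted=frozenset()):
    """Return True if every layer is covered by the speaker or by a willing provider.

    willing_providers: dict mapping layer name -> count of providers willing to serve.
    self_hosted: layers the speaker operates herself (her scope of control).
    """
    for layer in LAYERS:
        covered_by_self = layer in self_hosted and layer in SELF_HOSTABLE
        covered_by_provider = willing_providers.get(layer, 0) > 0
        if not (covered_by_self or covered_by_provider):
            return False
    return True


# No-platforming: no website will host Jane, but the lower layers still serve her.
no_platforming = {"application": 0, "infrastructure": 4, "core": 3}
# Deep deplatforming: hosting and cloud vendors refuse as well.
deep_deplatforming = {"application": 0, "infrastructure": 0, "core": 3}
# Viewpoint foreclosure: core resources are revoked too.
foreclosure = {"application": 0, "infrastructure": 0, "core": 0}

print(is_reachable(no_platforming, {"application"}))
print(is_reachable(deep_deplatforming, {"application", "infrastructure"}))
print(is_reachable(foreclosure, set(LAYERS)))
```

In this sketch, Jane stays reachable in the first two scenarios by extending her scope of control downward, but not in the third: claiming to "self-host" the core layer has no effect, which is the sense in which she hits bedrock.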

While Parler did eventually find its way back online, and even sites like Daily Stormer have managed to evade complete banishment from the internet, there can be little doubt that the appetite to weaponize the core infrastructural resources of the internet is increasing. For example, in 2021, as a form of ideological retribution, certain African ISPs publicly discussed ceasing to route packets to IP addresses belonging to an English colo provider that had sued Africa's regional internet registry. And in March 2022, perhaps inspired by the above examples, Ukraine petitioned ICANN and Europe's regional internet registry to revoke Russia's top-level domains (e.g., .ru, .su), disable DNS root servers situated in Russian territory, and withdraw the right of any Russian ISP to use any European IP addresses.

In sum, while users today are not necessarily getting kicked off the internet for expressing unpopular views, there are technical means to do just that. It also seems that we are moving toward a world in which that kind of exclusion may become routine.
