The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

In January, ChatGPT Failed The Bar. In March, GPT-4 Exceeds The Nationwide Student Average

We are now witnessing a remarkable growth in ability in a very short period of time.

In 2011, Apple introduced Siri. This voice recognition system was designed as an ever-present digital assistant that could help you with anything, anytime, anywhere. In 2014, Amazon introduced Alexa, which was designed to serve a similar purpose. Nearly a decade later, neither product has reached its potential. They are mostly niche tools used for very discrete purposes. Today's New York Times explains how Siri, Alexa, and Google Assistant lost the A.I. race to tools like GPT. Now, we have another notch in the belt of OpenAI's groundbreaking technology.

Yesterday, OpenAI released GPT-4. To demonstrate how powerful this tool is, the company allowed a number of experts to take the system for a spin. In the legal corner were Daniel Martin Katz, Mike Bommarito, Shang Gao, and Pablo Arredondo. In January 2023, Katz and Bommarito studied whether GPT-3.5 could pass the bar. At that time, the AI tech achieved an overall accuracy rate of about 50%.

In their paper, the authors concluded that GPT-4 may pass the bar "within the next 0-18 months." The low end of their estimate proved to be accurate.

Fast-forward to today. Beware the Ides of March. Katz, Bommarito, Gao, and Arredondo posted a new paper to SSRN, titled "GPT-4 Passes the Bar Exam." Here is the abstract:

In this paper, we experimentally evaluate the zero-shot performance of a preliminary version of GPT-4 against prior generations of GPT on the entire Uniform Bar Examination (UBE), including not only the multiple-choice Multistate Bar Examination (MBE), but also the open-ended Multistate Essay Exam (MEE) and Multistate Performance Test (MPT) components. On the MBE, GPT-4 significantly outperforms both human test-takers and prior models, demonstrating a 26% increase over ChatGPT and beating humans in five of seven subject areas. On the MEE and MPT, which have not previously been evaluated by scholars, GPT-4 scores an average of 4.2/6.0 as compared to much lower scores for ChatGPT. Graded across the UBE components, in the manner in which a human test-taker would be, GPT-4 scores approximately 297 points, significantly in excess of the passing threshold for all UBE jurisdictions. These findings document not just the rapid and remarkable advance of large language model performance generally, but also the potential for such models to support the delivery of legal services in society.

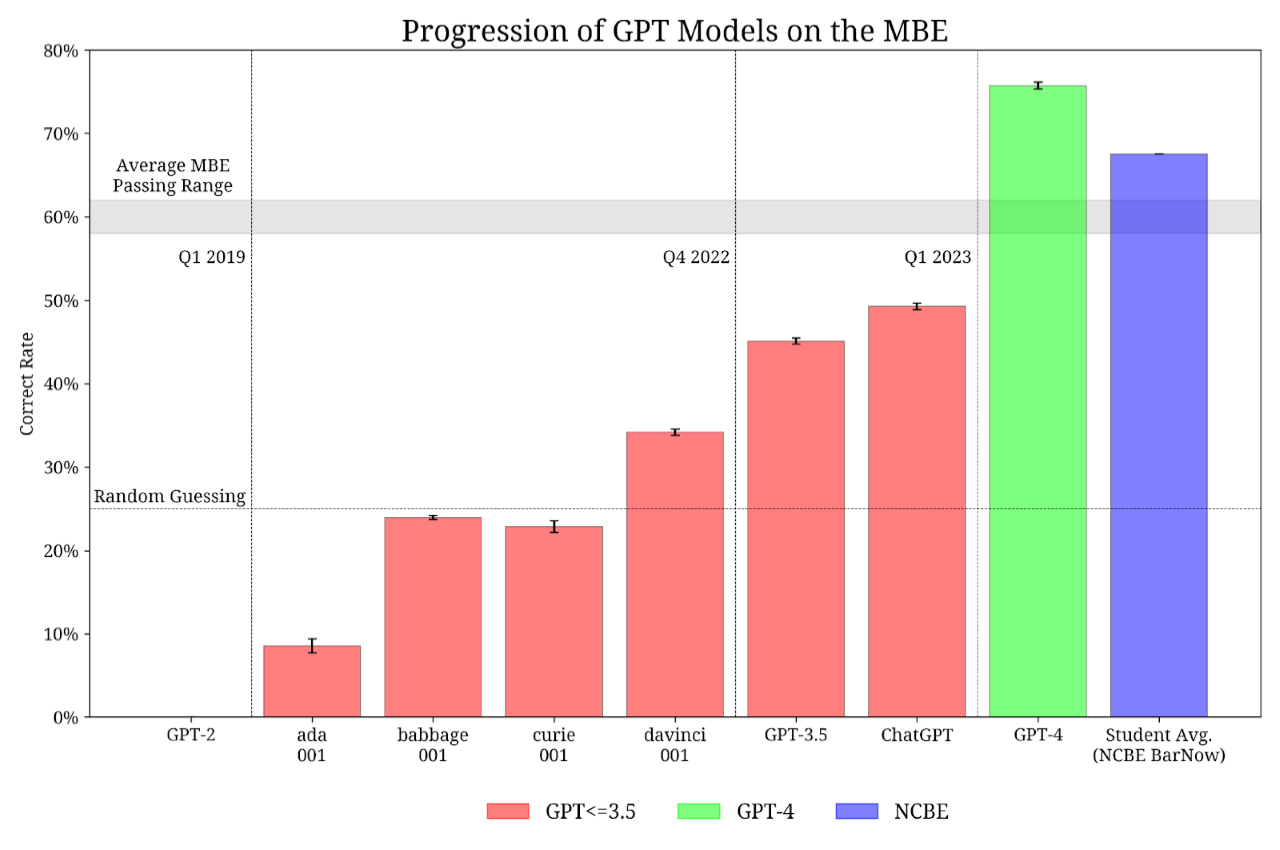

Figure 1 puts this revolution in stark contrast:

Two months ago, an earlier version of GPT was at the 50% mark. Now, GPT-4 has exceeded the 75% mark and surpasses the nationwide student average. GPT-4 would place in the 90th percentile of bar takers nationwide!

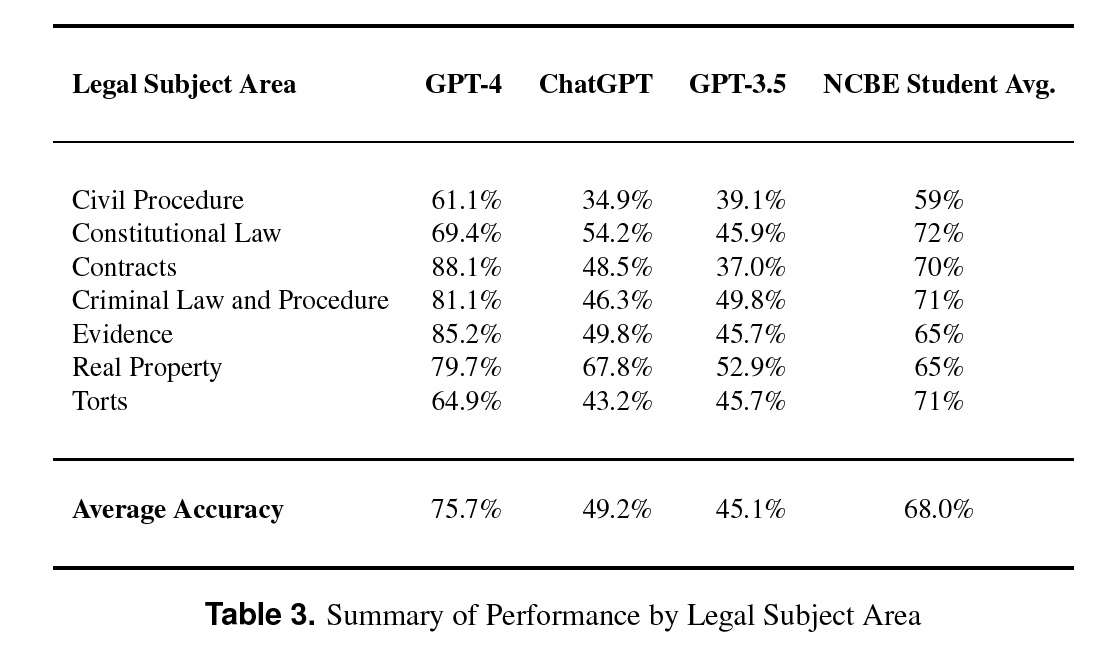

And GPT-4 scored well across the board. Evidence is north of 85%, and GPT-4 scored nearly 70% in Con Law!

We should all think very carefully about how this tool will affect the future of legal services, and about what we are teaching our students.



Perhaps unsurprisingly, there's a pretty clear bimodal distribution between the somewhat more predictable, algorithmic subjects like K/evidence/property (where v4 vastly outperforms humans) and the somewhat more subjective, abstract ones like con law/torts/civ pro (where v4 underperforms). So maybe all is not lost just yet.

It improved by "only" 28% in Con Law and 18% in Real Property (though it was already doing well in the latter), but it improved by 50% or more in the rest. Staggering.

My personal Turing Test would be to ask a question about the Rule Against Perpetuities. If the answer makes sense, then clearly no human wrote it. 🙂

Funnily enough, I asked ChatGPT that exact question and the answer was lucid, so yes 🙂

LOL -- excellent.

If you don’t allow the AI access to Heller and McDonald and Volokh’s scholarship…there would be a zero percent chance it would conclude that the 2A protects an individual right to own a gun for self defense that only applies to citizens in DC and federal territories. 0% chance!

"there would be a zero percent chance it would conclude that the 2A protects an individual right to own a gun for self defense that only applies to citizens in DC and federal territories. 0% chance!"

Pretty much any access to historical evidence would result in a zero percent chance of concluding something that stupid. You need motivated reasoning to arrive at that sort of goofy conclusion, and ChatGPT doesn't do motivation, yet.

I think you are exactly wrong about this. I tend to think of the current generation of neural nets as doing little more than motivated reasoning. They absorb a large amount of data and regurgitate an argument and verbiage directed towards a goal.

The philosophically interesting question to ask yourself is what distinguishes high-end human minds from the half-witted partisan. I would argue that it is an almost ruthless honesty and self-evaluation, driven by the conviction that the objective world as a whole is self-consistent. The shallow remain in the ChatGPT world, where you preach as gospel today the same mechanism you derided yesterday.

ChatGPT operates session to session without self-reflection or a need for self-consistency. As those features are added, the world will become a more interesting place.

The average student only gets 68%? That explains a lot...

To be fair, for maximum discriminatory power, you WANT a test on which the average subject lands somewhere in the middle of the range. If the average is too low, you can't distinguish different degrees of failure; if the average is too high, you can't distinguish different degrees of excellence.

The bar is pass/fail. You don't need to distinguish different degrees of excellence.

More precisely, the cumulative score across all subjects has a pass/fail threshold.

To build that cumulative score, you still need a grading system for the individual subjects that fairly evenly measures above/below average performance so a very good/very bad individual score doesn't unduly distort the outcome.

True. That's what you want for discriminatory power-- determining which students are better and which are worse.

It may not be what you want for a test to see if someone is sufficiently qualified. But I suspect it's correct for the Bar. If people reached "sufficiently qualified" with less training, they would go to school for a shorter time and take the qualification test when they thought they could pass. They would take it with less training, and you would still get a score distribution like the one on the harder test. (The same would happen if they needed more training and law school stretched to 4 years.)

So, we seem to be approaching the point where AI judges that just relentlessly uphold the law as written are a possibility.

Any thoughts on doing a moot court or mock trial using ChatGPT as the judge?

In the vast majority of litigation, the law itself is not in dispute. In trial and on the way there, the judge needs to make all sorts of judgment calls on how the facts of the case apply to the law, what evidence the jury will ultimately hear, etc.

Though of course impossible to predict for any given circumstance, human judges often get reputations for how they'll rule (or at least are inclined to rule) on certain issues. I think it would be interesting to run a series of tests with conceptually similar fact patterns and see how consistent ChatGPT is in its "rulings."

Am I the only one less than totally impressed by this?

Presumably, the learning data consisted of a mix of 'correct' (high-scoring) and 'incorrect' (lower-scoring) examples of prior test questions. Why the surprise that it is possible to analyze and identify what was 'correct' and then feed that back to a question requesting that data? The process is much the same as if I were given a bunch of prior tests, figured out for myself what was correct and what wasn't, and then attempted to answer similar questions on my own test. (In fact, this reminds me of how I used to study for exams when I hadn't bothered to actually go to class: I would just grab old tests from the fraternity filing cabinet and go from there.)

To me, the distinction of AI is that a programmer doesn't have to write out the specific steps for identifying what is correct and what isn't; instead, the programmer tells the computer to figure it out by itself. A nice parlor trick, but the outcome isn't any different.

Now, if the AI came up with arguments that no one has yet advanced, that would be impressive... but I don't think that was what happened.

I don't know that it was (intentionally) fed any specific bar-related materials for this. Writeups like this one suggest the models are trained on a broad mix of websites, books, and Wikipedia pages. So it is at least interesting that the model can make some basic inferences about which of that material is relevant to a given bar essay.

Unclear. ChatGPT has been busted for flatly making stuff up (e.g., citing to and summarizing non-existent scientific papers), so it wouldn't surprise me much if it did more than just regurgitate arguments/theories in the training data. Whether they would be coherent enough to pass is another question, which may be part of the reason for the poorer performance on less-determinate subjects as I noted above.

Absolutely! It just makes stuff up even when given pretty specific instructions.

This is a long-winded example of the BS ChatGPT will make up.

After asking what I could do to get it to apply a rubric to a sample of writing and grade it, it told me to point it to links and it would apply stuff to get the job done!

Me

ChatGPT

Me

Note-- I'm making a very specific request with a specific sample question. I've given a specific link. It told me that to get it to grade a sample, I should give it urls.

Now, here is the answer:

ChatGPT

Looks great, right? The only problem is the first question on the test starts "In Ai’s poem “The Man with the Saxophone,” published in 1985, ...."

So the answer is indistinguishable from BS.

Based on exploration, although ChatGPT tells you it can visit links and extract info, it appears it cannot. If you ask it to visit a link, extract info and analyze it, it will appear to do so. But it's likely to just be BS.

I suspect that if that page had been in its "training" set, and the context of the question allowed it to know what was at that URL, it would have appeared to do what it claimed to do. But in this case, if a person had provided them, the answers would appear to be a mix of BS and lying.

Wow, that's particularly insidious. Thanks for sharing.

Don't forget that there are many specialized AIs being developed. Imagine an AI was trained using every US court decision and every common law decision. Then a lawyer uses that to find precedents when writing a brief.

Wouldn't that make the lawyer much more effective?

Should not every professional wish to be more effective at their job?

Wouldn't justice be better served?

Those are the kinds of questions we should be asking.

If it worked, sure.

The bit to be careful about there, though, is that since "AI" is trained by humans using data generated by humans, human biases keep working their way into it. Until we can correct for that (and we've been trying, and failing, to do just that for years), no "AI" will be serving justice, just serving the status quo.

The second thing to remember is that the legal system is not structured to promote justice in the first place: innocence is not a defense against punishment, paperwork errors are more important than facts, and so on. Simply put, an AI Lawyer based on our legal system is not going to pursue "justice".

And that's before you even get to how lawyers and judges are absolutely going to "circle the wagons" on this. Effective AI lawyers would be an anchor on every lawyer's future earnings, and that's exactly why you're already seeing attempts to kill this in the crib, even before it's a current threat.

All of which is to say... theoretically, yes. But we're a long ways off from that.

It's not necessary to limit the discussion to "AI Lawyers." How about a "Precedent Search Engine," a "Brief Drafter," or a "Contract Checker": useful tools that don't go as far as an AI Lawyer.

I've fiddled with ChatGPT, and its shortcomings include important things that would not be tested on the bar exam.

* ChatGPT sometimes just makes stuff up. (In a real person, we'd call this BSing.)

* ChatGPT sometimes goes so far as to do what we would call "lying." If you *ask* it whether it can read the content of a PDF on a web page, it says (essentially) that it can. Then, if you give it the URL and ask it to go read it, it either

(a) sometimes comes back and tells you it can't do that (IOW, it can't, which, if it were a person, would make you call its previous answer "a lie"), or

(b) goes to the URL, claims it read it, and, if you ask what is there, reports back *incorrect content*.

(I was trying to get it to read PDFs on various sites, such as the College Board's. It's a machine. But if it were a person, its behavior would be indistinguishable from lying or BSing.)

This would be a real problem for an honest-to-goodness person in the practice of anything, because people need to identify what facts they need, collect those facts, and then use them.

I assume that on the Bar exam, in questions involving applying law to fact patterns, the fact pattern is supplied and the student then needs to apply the law to it.

That gives ChatGPT an edge. But if it had a partial fact pattern, it would be unable to actually go out and hunt for the new facts. (It might correctly tell you that some crucial facts are missing, but it can't go out and get them.) I don't know how it deals with inconsistent facts.

That said: I'm sure ChatGPT will get better.

As I said weeks (months?) ago on another "AI Lawyer" post, if you want to argue that AI lawyers are inherently bad and detrimental, you need to do more than point out that they're bad at being lawyers, because that will improve with time.

I think they are still bad at being lawyers. ChatGPT-4 is good at passing the Bar, which is a different thing. This isn't an argument against the Bar exam, which is designed to test, as far as a test can, whether a person who wants to practice can apply the law.

I'm not a lawyer, but I'm pretty sure there are other skills a lawyer needs that are not tested on the Bar (and which are difficult to test with a standardized test).

Testing what can be tested with a standardized test is a good thing. But AI is going to quickly beat humans on standardized tests. I suspect it will eventually be able to do things that are more difficult to test on standardized tests. But right now, it's a tool you can use if you know what information you want, craft good questions, and know what's relevant to an issue so you can bring in all the relevant facts. This is more than spotting relevant facts in a question; it's knowing to bring them in the first place!

When ChatGPT can also interview a client and do its own additional research, it might replace a lawyer. But for now, it's going to be a supporting tool (when it isn't spewing BS, which could be dangerous).

A longer explanation of my point, but yes.

It is easy to argue that, today, based on current capabilities, AI lawyers are "bad lawyers".

But if it is important to you that AI lawyers are always "bad lawyers" regardless of capabilities, then arguing from capabilities is not enough.

Oh, I think they may someday be "good lawyers", at least for some aspects of lawyering. It will be interesting to see if that happens soon. I don't know enough about the challenges in getting AI to achieve the next necessary things.

This tells me quality legal advice is going to get decidedly cheaper for most people...