The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

Noise in Law School Grading

Adam Chilton, Peter Joy, Kyle Rozema, and James Thomas have written an excellent, careful new paper entitled "Improving the Signal Quality of Grades." The central claim is that some law professors are better graders than others, where a better grader is defined as one whose grades correlate more with the law student's final GPA. Some top students thus have the misfortune of having their records tarnished by a professor whose grades are relatively arbitrary. They then miss out on opportunities, such as law review and judicial clerkships, that are granted relatively early, based on a small subset of the students' law school portfolio. The authors then think about various possible remedies for noisy signals, such as kicking bad graders out of the 1L curriculum and adding more gradations in grading levels.

A preliminary question might be whether law schools really want to improve the signal quality of their grades. It may be fair to say that law schools probably don't care all that much. For every student denied a clerkship that a pure meritocracy would have awarded, there is another student who lucks into one. It seems doubtful that a law school that did implement a better grading system would become more attractive to applicants. Indeed, aren't students attracted to the lax grading systems at places like the Yale Law School?

But there is an argument that they should care, at least as to their strongest students. After all, judges and top law firms are in a repeat relationship with the law school, potentially hiring students year after year. If they have a great experience, they will be more apt to return and hire more students. When a student lucks into a job and doesn't perform well, then the employer is less likely to hire students in later years. The stronger the signal, the more employers can rely on it. And so, for employers who are willing to hire only the best students from a school, a strong signal is important.

As one moves lower in the class, the importance of a strong signal for a school may decrease. Some employers may be perfectly happy hiring an average, or somewhat below average to somewhat above average, student from a school. To be sure, the employers might appreciate being able to screen out the worst students at the school, but they also might be satisfied with the degree alone, which serves as a form of certification that a student meets the school's standards. That doesn't mean that grades are irrelevant, but, as the old joke suggests, a school might reasonably conclude that it has a stronger interest in accurately identifying the best graduate than the worst one. The desire to avoid making anyone look especially bad can help explain both grade inflation and an opaque grading system like the University of Chicago Law School's. Employers who need to distinguish the best students can, but many employers can just be happy hiring a graduate of that school without worrying about whether a GPA of 175 is any good.

Still, law schools do want to distinguish at least their best students, and that requires multiple gradations and good graders. The authors base their analyses on data from a top-20 law school over four decades. (It quickly became unmistakable to me which law school they are describing, but because they seem to be preserving some veneer of privacy for the school, I won't name it.) Their data shows that first-year grades are a fairly good but still imperfect predictor of final performance, with 68% of those in the top quartile after the first year remaining there by graduation. They show that with more semesters, the "share of missing students in the top 1 percent"--that is, those who will finish in the 99th percentile but aren't there earlier--drops from 40% after two semesters to 22% after four semesters.

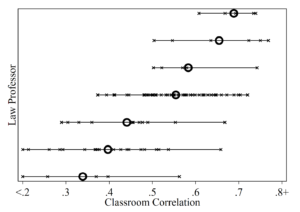

Overall, 1L grades are reasonably predictive, with the mean correlation between grades in a classroom and the student's eventual achievement equaling 0.66. But that figure masks a great deal of variability among professors. The diagram below, for example, shows the predictive value of seven different professors' grades. Each X refers to one time when the professor taught the class, and each O indicates the professor's average classroom correlation. It is striking that even for a single professor, there is a fair degree of variability; maybe some exams work better than others. But even more striking is that some graders are better than others.
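To make the measure concrete, here is a minimal sketch of a "classroom correlation": the Pearson correlation between the grades one professor hands out and the same students' final GPAs. Everything in it is an assumption for illustration. The GPAs are simulated, the two graders are hypothetical, and the noise levels are made up; the paper's exact construction (for example, whether the course itself is excluded from the final GPA) may well differ.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate_class(final_gpas, noise_sd, rng):
    """Hypothetical grader: class grade = final GPA plus Gaussian noise."""
    return [gpa + rng.gauss(0.0, noise_sd) for gpa in final_gpas]


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Made-up final GPAs for 2,000 simulated students (not the paper's data).
final_gpas = [rng.gauss(3.3, 0.3) for _ in range(2000)]

# Two hypothetical professors who differ only in how noisy their grades are.
low_noise_grades = simulate_class(final_gpas, 0.2, rng)
high_noise_grades = simulate_class(final_gpas, 0.6, rng)

r_low = pearson(low_noise_grades, final_gpas)
r_high = pearson(high_noise_grades, final_gpas)

print(f"low-noise grader:  r = {r_low:.2f}")
print(f"high-noise grader: r = {r_high:.2f}")
```

In this toy setup the only difference between the two graders is the amount of random noise, so the lower correlation really does mean "worse grader." Whether that is also true of real professors is exactly the question taken up next.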

Or are they? The authors consider the most obvious counterargument, that different professors are measuring different skills. Maybe the professor at the bottom of the chart above is the best grader, because that professor is focusing on skills that actually matter in the workplace. But the authors attack this possibility in several ways. First, they note that noisy graders tend to be bad at predicting their own future grades of the same students. Second, the noisy graders are not highly correlated with one another; indeed, their grades better predict the high-signal graders' grades than their fellow high-noise graders' grades.

Third, the authors run a Principal Component Analysis of all doctrinal first-year grades. This is a technique that reduces the dimensionality of data, for example converting eight-dimensional data representing first-year grades of many students into four-dimensional data representing the underlying qualities those grades are measuring. (For a nice explanation of Principal Component Analysis and similar techniques in the context of Supreme Court opinions, see this great article by Joshua Fischman and Tonja Jacobi.) They find that the first component of PCA--i.e., the most important thing that grades seem to be measuring--explains 61% of the variation in first-year grades, while the second component explains only 8%.
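For readers who have not seen PCA before, the "share of variation explained by the first component" is the largest eigenvalue of the grades' covariance matrix divided by the sum of all the variances. The sketch below computes that share for simulated data using power iteration, which is one simple way to get the top eigenvalue and is not necessarily what the authors used. The data are an assumption: eight hypothetical courses, each grade equal to a single shared "ability" score plus independent noise, with noise levels chosen arbitrarily rather than calibrated to the paper.

```python
import math
import random


def covariance_matrix(rows):
    """Sample covariance matrix of a list of equal-length observation rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def mat_vec(matrix, vector):
    """Multiply a square matrix by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, vector)) for row in matrix]


def top_eigenvalue(matrix, iterations=500):
    """Largest eigenvalue of a symmetric PSD matrix via power iteration."""
    d = len(matrix)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = mat_vec(matrix, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the converged unit vector.
    return sum(v_i * w_i for v_i, w_i in zip(v, mat_vec(matrix, v)))


rng = random.Random(1)  # fixed seed so the sketch is reproducible
n_students, n_courses = 1000, 8

# Simulated grades: one shared ability factor plus course-specific noise.
grades = []
for _ in range(n_students):
    ability = rng.gauss(0.0, 1.0)
    grades.append([ability + rng.gauss(0.0, 0.8) for _ in range(n_courses)])

cov = covariance_matrix(grades)
total_variance = sum(cov[i][i] for i in range(n_courses))
first_share = top_eigenvalue(cov) / total_variance

print(f"share of variance on the first component: {first_share:.2f}")
```

With one dominant underlying factor, the first component soaks up most of the variance and the remainder is spread thinly across the others, which is the pattern the authors report.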

This is rather convincing, but I'm not entirely sold. After all, 61% is not 100%. And the noisy graders might be grading different portions of the remaining 39%. Moreover, what they are measuring might be harder to grade. There is a reason that law professors love to base their exams on subtle doctrinal points (nothing like an exception to the exception to separate out the best students). It's relatively easy. If the noisy graders are trying hard to discern something that's hard to identify, then one would expect their grades to be noisy predictors even of their own future grades (and their colleagues' grades), but whatever they are identifying might still be important.

But yeah, it could just be that the noisy graders are lazy. (My colleague with the most unusual technique for grading exams is not lazy outside of grading, however.) And maybe if schools identified high-noise graders, they could be coached--or shamed. One question I'm curious about is whether the noisy graders also tend to be the professors with low teaching evaluations. The authors don't provide any information on that. It would slightly strengthen my confidence in the results if we found that less effective teachers are worse graders. But it also might suggest that the real problem is teaching rather than grading. After all, if teachers don't clearly identify what the students need to learn, then different students might focus on different things, and that might add noise to the grading, even if the grading were performed by a third party.

Improving graders is just one thing that law schools could do to make grading fairer and more consistent. For example, law schools could do a better job of making the curve more consistent across classes--and more reflective of the quality of students in the class. The authors note that the 1L students were randomly assigned to classes, but 2L and 3L students are not randomly assigned to classes, and at many schools, students are effectively punished for taking hard classes like federal jurisdiction. Meanwhile, some graders tend to have relatively flat distributions, while others have much more humped distributions. The authors explain why that doesn't affect their measure of correlation, but schools might still do more to reduce the effect of professors' choices on students--unless, of course, it turns out that professors who give a narrower set of grades are also those who are noisy graders.

Sounds like an interesting article. I think most students (at least not those at the top) feel like the system is stupid. But once you've gone through it, and made it to hiring partner, there's not much motivation to change the system. Putting the onus on the schools to grade better is just perpetuating the messed up system.

Let's go back to letting people practice without law school.

I agree, completely.

This would force law schools to prove their value....

I encourage law students to take a half day off for Traffic Court. That is the contact point between the law and the greatest number of people. It is run according to the Rules of Criminal Procedure. Nothing they learned in school will be seen. Nothing they will see in court will have been covered in school.

"Lets go back to letting people practice without law school."

Sure. Let's bring ineffective assistance of counsel back to our appeals processes. Let the appeals courts figure out if you got convicted because the prosecutor was that good or because your lawyer shouldn't have been allowed to take the case. They love doing stuff like that.

1. Law professors like to give only one (or maybe two) monster essay exams per semester, and are too imperious/lazy/insecure to change to more frequent, non-essay testing which has long been shown to encourage retention of learning and more accurate evaluation by the professor. So there will always be a lot of "noise" in grading and some bad graders.

2. The only exam taking ability which actually "matters in the workplace" is issue spotting. The other abilities (writing fast; answering in too much detail or outside the scope of the question; knee-jerk thinking) are big handicaps for any lawyer and should not be encouraged.

As someone who ended up with average-to-poor grades in law school I certainly have a narcissistic interest in downplaying the predictive power of law school grades, but this certainly rings true to me. I've never been a particularly fast typist, and my habit of being an "edit as you go" style of writer rather than a "stream of consciousness, then edit down" style of writer has always served me well in other contexts (particularly in the practice of law), and the way law school exams are given and graded always seemed incredibly unfair to me. The only factor that seemed to correlate whatsoever with my law school grades wasn't how hard I studied, how well I thought I understood the material, or how often I went to class, but whether the final exam included a multiple choice section or not.

Never once has my inability to regurgitate the restatement of property at 160 WPM turned out to be a problem in my legal career.

Law-school exams generally require the student to answer questions from memory, a practice that is highly discouraged in actual practice. So the exam is rewarding skills that aren't actually useful in the real world.

My objection to this is the assumption that all professors should have correlated grades. There are lots of reasons why that may not be the case.

For example, maybe a particularly left-leaning student does well in ConLaw, Property, and Procedure, but doesn't do as well with a law-and-economics Contracts professor. Or maybe the professor uses a type of exam/scoring that other professors generally don't, such as a take-home exam or awarding points on class participation.

In those cases, we'd expect less correlation to overall GPA. Maybe the non-correlated scores mean the exams are being accurately scored.

David, I think the idea is that yes, particular students might have outdone their average or under-performed in a given professor's class. But if year after year, loads of the professor's students earn grades that are substantially above or below their averages, that suggests arbitrariness in the professor's grading.

"if year after year, loads of the professor’s students earn grades that are substantially above or below their averages, that suggests arbitrariness in the professor’s grading."

Maybe. Or the professor is accurately grading on something that others are not measuring. Hard to tell.

I still fail to understand why a curve is used at all. A grade should simply indicate how well the student has mastered the material and their ability to demonstrate that mastery. Other students' performance on those two metrics has nothing to say about how well any given student has performed.

That is also my objection to the curve implied by the Chicago system.

From my understanding some choice opportunities for law students primarily focus on their academic acumen. A curve would help differentiate at the top.

You know what really does that? Getting personal recommendations from people familiar with most or all the candidates.

" A grade should simply indicate how well the student has mastered the material and their ability to demonstrate that mastery"

With a significantly large enough population sample, that will inherently correspond to a bell curve. We can debate where the center will be -- but that depends on how you define "mastery."

That's great and might be the expectation but if it reflects reality there should be no need to calculate grades to make it so. Determine before-hand what constitutes mastery of the material and let the grades reflect student performance against that mark.

By the time you're being tested on legal ethics, different states require different passing scores for law licensure. Mostly, passing scores involve being in the top 85% or so.

"With a significantly large enough population sample, that will inherently correspond to a bell curve."

Not if your admissions are selective.

Ideally, you'd have some kind of grading mechanism that had absolute accuracy in measuring a student's achievement. One that could reach past an instructor's accidental failure to cover a key topic in class, or a student's temporary impairment due to conflicting demands for time or attention, or other challenges (if the student's kid was home sick during study time for finals, their performance on the exam might be altered for reasons other than absolute capability).

I taught IT, which is a much easier subject to assess grading for. Either the student knows how to figure a subnet mask, or they don't, with not a lot of "almost there" to figure into the grading mix. I used to teach a TCP/IP class that had a lot of binary math in it. Some people switch readily from using the decimal-based system to using binary, and some people struggle with it a bit more. The rules of TCP/IP are both simple and straightforward, so getting perfect final exams wasn't all that unusual. The rules are simple and straightforward in binary but seeing all the numbers written as decimal obscures the simplicity, so the final exam wasn't all story problems. We had some questions that were rote memorization, too. What protocol uses TCP port 443? (With fair notice of what to memorize.) Throw in some questions that rely on a general knowledge of TCP/IP (why can't the local computer at address 10.123.47.9 ping Google at http://www.google.com? How about the one at 127.255.255.0? Or the one at 37.265.255.28?). Every one of those questions has an answer that is clearly and unequivocally correct, and the wrong answers are not correct. Law exams do not have this general quality. (Although with the MBE, they try.)
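As a small illustration of how mechanically checkable those three addresses are, here is a sketch using Python's standard `ipaddress` module. The comment above does not give its intended exam answers, so this only shows how each address classifies; the `classify` helper is a hypothetical name introduced for the example.

```python
import ipaddress


def classify(address_text):
    """Label an IPv4/IPv6 address string as invalid, loopback, private, or public."""
    try:
        addr = ipaddress.ip_address(address_text)
    except ValueError:
        # Raised when the text is not a well-formed address,
        # e.g. an IPv4 octet larger than 255.
        return "invalid"
    if addr.is_loopback:
        return "loopback"
    if addr.is_private:
        return "private"
    return "public"


for text in ("10.123.47.9", "127.255.255.0", "37.265.255.28"):
    print(text, "->", classify(text))
```

The first address falls in the private 10.0.0.0/8 range, the second is in the 127.0.0.0/8 loopback block, and the third is not a valid IPv4 address at all because 265 does not fit in one octet.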

The Bar exam has an impact on the student's life and on society. It needs to publish its reliability and validity statistics.

Reliability: inter-rater, test-retest, parallel forms, internal consistency.

Validity: accuracy, construct validity, content validity, face validity, criterion validity, concurrent validity, predictive validity, experimental validity, statistical conclusion validity, internal validity, external validity, ecological validity, relationship to internal validity, diagnostic validity.

Then all the Bars should be sued for their racial and other protected class disparities.

The Supreme Court should cancel the Bar exams as anti-scientific garbage. Then it should cancel them for their discriminatory outcomes with hideous impact on the lives of the candidates and on those of their clients. The Bar exam is the new poll tax and literacy crazy questions to exclude black candidates.

The content of the Bar exam is crap. The format of the Bar exam is garbage. The outcome of the Bar exam discriminates against several protected classes. Why not apply the law of the land to the Bar exam?

Nothing encountered in the Bar exam will ever be relevant or even true in the real world. Nothing in the real world practice of law will have been covered by law school nor by the Bar examination. It is total, anti-scientific, discriminatory garbage.

It is a res ipsa loquitur that the people who passed the Bar in this blog, or in the Comments, don't know shit. Their behavior has resulted in the failure of every self stated goal of every subject, save one, the seeking of the rent. These scumbags take our $trillion at the point of a gun, and return absolutely nothing of value.

It sure sounds like somebody couldn't pass a bar exam.

You hardly sound bitter about it at all.

I don't think the Supreme Court, which has a black man who has admitted a lifelong preoccupation with "proper English," is going to dissolve bar exams on the idea that "black people no speak gud."

I also don't think the bar exam is all that concerned about science, whatever that means in relation to it.

The most validated exam in history, the Stanford Binet IQ test, was struck down in California. The Supreme Court refused to cert a challenge. The purpose of the test was to include, not to exclude. Based on the results, black children could get the extra help of special education class.

The Bar exam crushes the dreams of a career for more blacks than for whites. Its results have an extreme effect on the future of people, and on that of their potential clients. It should have some scientific validity.

To give you an idea, the score on the Stanford-Binet at 7 predicts success at age 57. The lawyer struck down its use. Then allows a highly racist Bar exam outcome. These contradictory results are in California. Go to p. 2.

http://www.calbar.ca.gov/Portals/0/documents/FEB2020-CBX-Statistics.pdf

"I also don’t think the bar exam is all that concerned about science, whatever that means in relation to it."

Daubert.

When I started law school at UCLA over 50 years ago, classes were all graded on the same curve: top 10% got "High," the next 20% got a "High Pass," and everyone else got a "Pass," unless one did so badly that the professor gave a grade of "Inadequate" or "Inadequate---No Credit." You can treat those grades as equivalent to A, B, C, D and F. Not that it mattered, but that curve was tough enough that a tad over a 3.1 grade point average resulted in being awarded Order of the Coif, which is limited to the top 10% of a law school graduating class.

And there was blind grading, meaning that students did not put their names on their exams, just a four-digit number that changed for each class. I loved blind grading, since I know I annoyed some professors and blind grading protected me from intentional or unintentional punishment for my annoying ways.

Decades later, when I taught community property law and family law as an adjunct professor at a different law school, I was required to grade on a curve, and there was blind grading of exams. I loved being required to grade on a curve, since it eliminated needing to figure out the absolute worthiness of students' performances, and I loved grading blinded exams since it protected both me and the students from even the possibility that I could reward or punish particular students for reasons other than their actual exam performance.

In short, requiring grading on a curve and blind grading made the system fairer to students than it otherwise would be. It didn't eliminate the problem that after the first year better students tended to take the same more challenging classes and still had to be graded on a curve.

Answer the question 100% = B minus

Answer the question 110% = A minus

Answer the question 120% = A

There seems to be an unsupported assumption in this article: That people who perform well in law school overall will perform well in 1L classes.

There is variability in exam performance for some people, because sometimes you just have a bad day, on a day that happens to be exam day.

In my 2L year, exam time happened to coincide with some unusual weather. We had a foot of snow followed by an inch of freezing rain, followed by two weeks of subfreezing temperatures in a location that normally gets snow that sticks to the ground about every other year. There wasn't any local infrastructure to clear roads from that level of icing, and the law school happens to be located at the top of a hill.

Wait what? This just assumes that any professor that either (1) grades harder or (2) actually has a harder class, where most students get a grade lower than their overall GPA, is a "bad" grader. Why would that be the case? Or maybe they have a class where students with different skillsets and knowledge bases than the "typical" law school student do well, inverting the "typical" grades?