Review: Rethinking the Stanford Prison Experiment

Did participants exhibit a natural inclination for cruelty, or were they just doing what they thought researchers wanted?

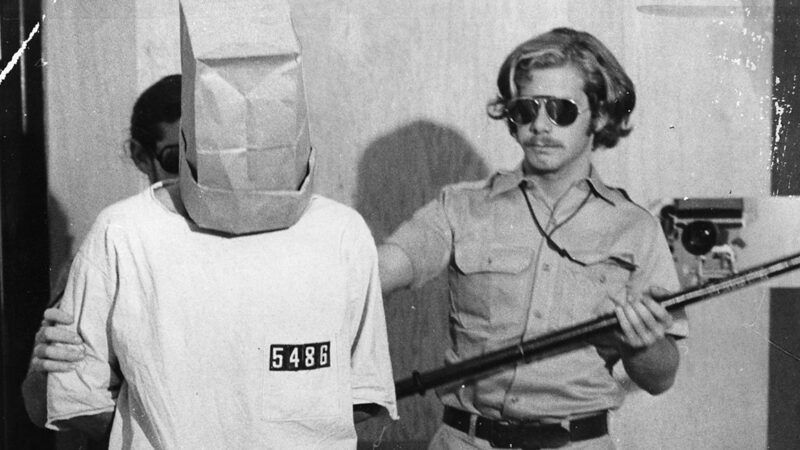

If you took psychology courses in college, you probably remember the "Stanford prison experiment," which monitored the behavior of 18 students assigned to play the roles of guards or inmates in a pretend penitentiary for a week. Stanford University psychologist Philip Zimbardo's 1971 study supposedly demonstrated that decent, ordinary individuals are apt to cruelly mistreat people when given authority over them, even when that authority is imaginary.

The Stanford Prison Experiment: Unlocking the Truth, a three-part National Geographic documentary, casts doubt on that gloss by interviewing academics and former subjects who say Zimbardo misrepresented his methodology and the implications of his results. Although Zimbardo claimed he gave very little direction to the "guards," for example, recordings show that he and his colleagues encouraged harsh treatment of the "prisoners."

The documentary suggests the so-called experiment is better understood as an improv game in which the subjects acted in ways they thought Zimbardo expected. Yet after torture at the Abu Ghraib military prison in Iraq came to light decades later, Zimbardo, a relentless self-promoter, glibly argued in media appearances and in his book The Lucifer Effect that his research anticipated such abuses by showing "how good people turn evil."

Director Juliette Eisner gives Zimbardo (who died last October, after the documentary was completed) ample opportunity for rebuttal. To some extent, his answers reinforce the impression of slipperiness. But his defenses also highlight an ambiguity at the heart of his experiment: Were the subjects just play-acting, as some now claim, or is that description a self-deceiving rationalization for mortifying behavior?

In the end, Zimbardo loses patience with Eisner's inquiry, declining to answer follow-up questions on the grounds that he has already thoroughly refuted his critics' arguments. Viewers can judge for themselves whether that is true.

I thought this had already been debunked.

Debunked is a non-sequitur. Like picking through the wreckage of a plane crash and saying the conclusion that "The wings came off." was debunked.

Debunked is a non-sequitur.

That is, Milgram and others had already demonstrated similar phenomena a decade earlier, under better-controlled conditions and with more variation and durability.

That's true. Milgram's experiment had actual variables and conditions. Milgram's experiment itself was far from perfect, though: it lacked a proper control group, and there are some accusations that he tweaked the data.

It also wasn't exactly measuring the same thing. It was about responses to authority figures, rather than innate human cruelty. To the contrary, some people who continued pressing the button they believed was shocking the victim were extremely distressed, in fact driven to tears, but did it anyway. So it wasn't some inherent cruelty but the way humans respond to hierarchy: they don't take responsibility for their own actions if there's an apparent authority figure involved. It's why Congress naturally cedes so much of its authority to the executive branch; there are too many people involved who don't want to make difficult decisions.

It also wasn't exactly measuring the same thing.

[tilts hand] Right, we're talking soft science, and before double-blind study methodology was widely understood and practiced. Point is, others working around Milgram had already demonstrated, and even begun socially and culturally associating, highly analogous concepts like institutional cruelty, ideological obedience, behavioral expectations and social roles, etc. Notably, they couldn't predict up front precisely who would electrocute people, who would refuse, and who would emotionally break down, if anyone would at all. Zimbardo rather specifically intended for it.

The Milgram experiments were also crap.

What a useless contribution. I don't even disagree with you, but I don't want your opinion, because you apparently can't express it in any less retarded, baiting fashion than that.

If you'd just posted "I'm retarded," I would've thought, "Huh." But after what you posted, the only thing I can think is "What an asshat."

I actually haven't done a deep dive on Milgram. The thing is, there have been other, similar experiments which reinforce Milgram's conclusion. There are accusations he was tweaking the data and it wasn't the perfect test environment, but it was run professionally, especially in comparison to that whack Zimbardo.

It's interesting in that his initial hypothesis was that Germans were, due to whatever cultural reasons, much more likely to submit to an authority figure compared to Americans. What he ended up learning was that the vast majority of people he tested were submissive to perceived authority figures. I give a lot more deference to someone's conclusions when they have to conclude against their hypothesis than someone who is putting their thumb on the scale to affirm their hypothesis. And most psychologists put their thumb on the scale.

Also, fuck Zimbardo.

I actually haven't done a deep dive on Milgram.

It's been a while since I looked, but the statement is itself retarded. As I indicated above, we're talking about an era when double-blind and placebo-controlled studies were still gaining a toehold or momentum. The work simply demonstrating the idea of "confirmation bias" had been done, but not even the people who did that work identified it as such.

It's like saying Tesla or Edison's experiments were crap.

Milgram concealed an entire experiment (circular-filed it and never published it) because it didn't produce the outcome he was expecting.

Milgram's subjects frequently knew what was going on. The ones who did know kept "shocking" people. The ones who didn't stopped early. This was revealed in the post-experiment debriefings, but Milgram concealed and didn't publish that.

His experiments were crap. Sorry that this bothers you. Tesla and Edison produced things that actually worked. Edison didn't lie about the light bulb. Milgram committed fraud.

Debunked is a non-sequitur.

And, to wit, the Acali "Sex Raft" "Experiment" came to pretty much the opposite of Zimbardo's "conclusion" two years later despite an even more insanely "Zimbardo-esque" methodology.

It was. The kids that participated were told to act how they did, and the researchers told them he was going to end it early. Sullum is a subhuman retard

He's only responding to a recent documentary that apparently only now has learned about this. It's been known for a long time that Zimbardo is an absolute sham.

You don't even need accounts from the students involved that he was directing them; if you look into his methodology, you realize that this wasn't an "experiment" of any type. He had no methodology. There was a graduate assistant whom he was telling about his experiment just as it launched. The assistant was interested and inquisitive, so he asked a few basic questions: "So what do you use for a control group?" Zimbardo told him to shut the fuck up.

Without any actual parameters or any type of data to collect, he just set up an extended LARPing session. This wasn't science, and its fame is an indictment of how pathetic the field of psychology is.

About the same time there was a virology experiment at another university that confined college students to separate residential buildings, each with a student who was acutely symptomatic with a cold. One group was told to play card games in groups to while away the time of confinement, while the other was allowed to interact socially but without playing cards. The hypothesis was that colds are not transmitted through the air but, rather, by contact with fomites (i.e., playing cards). Since this was a REAL scientific experiment with proper design, the null hypothesis was rejected and we have had hand sanitizer in most department and grocery stores and medical centers ever since!

Debunked or not, the thing is a red herring.

Just from descriptions many years ago, this is how I always understood it. What I didn't understand was why some people gave it such a dramatic interpretation, or thought it said anything significant at all.

I had always understood it specifically to be intro- or retrospective to the science, not to the conclusions.

Science, esp. psychological science, needs ethical checks, oversight, or transparency specifically because of Tuskegee, Zimbardo(, Wuhan, Tavistock, etc., etc.)...

I don't remember the exact discussion(s), but it was noted more than once that The Lucifer Effect wasn't really any sort of scientific treatise so much as a mea culpa sifting through the pieces.

Because psychologists are desperate. They are not a serious field of science and don't know how to do it, so they like to point to something bold and dramatic as if it signified something. There's already a replication crisis in most scientific fields (where something like 40 or even 50% of studies can't be replicated), but in psychology close to two-thirds of results can't be replicated.

JS;dr

So which part is The Science, the cosplay prison drama or the cosplay 3D psychology deflection?

And is the brave media investigation part of The Journalism?

Milgram was wrong. People would never blindly obey someone in a lab coat *cough*covid*cough* and hurt others.

The libertarian takeaway from the Stanford Prison Experiment is that the whole project itself was unethical.

FWIW - odd little factoid - Zimbardo and Milgram were at HS together.

One wonders what some of the teachers were like.

Always seemed to me that it drew too bright a line between acting in a play and acting in real life. Everybody plays multiple roles in real life -- solo, worker, child, parent, spouse, competitor, and they vary by who else is present or topic under discussion.

To think that telling students what to do is an exact simulacrum of what employees in a real job would do is the height of academic hubris.

Yup! The pseudoscience of Psychology was especially thin at Stanford that year.

"Did participants exhibit a natural inclination for cruelty, or were they just doing what they thought researchers wanted?"

It wasn't about finding if people had a natural inclination for cruelty.

It's about whether people would continue to obey the authority figure even when they believe someone is being hurt.

I was thinking of Milgram, not Stanford.

What it was SUPPOSED to be about and what it was ACTUALLY about were probably two completely different things! When a clueless researcher designs a very imaginative experiment, with no testable hypothesis, and very shaky logic to tie the outcome to any logical conclusions, you better watch out!

The real controversy here both then and now was not about the validity of the study or the validity of the conclusions but rather how a major and highly respected university could allow such a study to be conducted at all. There was major fallout at the time about psychological harm done to the participants and, in retrospect, some serious questions about the researcher!

There was another major psychological experiment conducted at about the same time that put test subjects at the controls of ostensibly painful shock-administering apparatus with instructions to keep turning up the amperage as the restrained recipient of the shocks screamed louder and louder and begged them to stop. Of course, no shocks were actually administered but many of the test subjects at the controls required medical treatment as a result of participating.

One of the reasons prison guards might seem more inclined to abuse or cruelty is that it's a self-selecting job/career. It's a highly unappreciated, non-prestigious career. There are people who respect police officers, but almost nobody appreciates prison guards. The vast majority of people you interact with at your work environment are going to hate you or be innately hostile to you. You don't have a public-facing aspect of your job where you have routine interactions with people outside of the prison system.

Additionally, the pay is garbage. You don't need many special qualifications, other than a degree of physical fitness. So as a job or career, it's unrewarding, both from a social and monetary standpoint. So the only thing you get out of it is the joy of having authority over bad people. Due to attrition, burnout, etc., over time, the job is going to whittle down to the people who are corrupt in some manner, either by taking bribes or by enjoying being assholes. Nobody else has any incentive to work in this environment.

Depends on the prison for the pay. Private prisons - garbage pay. State prisons - decent pay.

And you don't even need physical fitness. The local state prison near me has a guard over 70. Yes, he's on the floor, not in a room monitoring.

Why not both?

I mean, if you have a natural inclination for cruelty and you're put in a situation where that inclination is encouraged to be acted upon, why would you not?

Most people have a natural inclination for cruelty and we have built cultures to suppress and manage that.

Most folks I know would say, "The conceivers of this experiment are showing the same cruel immorality they say they are studying," just like those who study perversion as a guise for enjoying perversion. If it is wrong to mistreat others, IT IS WRONG, and calling it an experiment changes nothing.

Like using aborted baby parts

Test this hypothesis. Mention the 1955 Solomon Asch experiment, which proved that 3 out of 4 U.S. college students will lie simply to blend in with a lying majority. The nearest collectivist instantly changes the subject to prate about the bogus prison experiment. You can make money betting on this outcome.

The Asch experiment has been duplicated many times, with YouTube videos showing the (hilarious & tragic) outcomes. Mention of it is troubling to persons programmed by staged social pressure. https://libertariantranslator.wordpress.com/2016/09/12/the-25-v-social-pressure/

I've always viewed this and the Milgram experiment as studies in the nature of human action. What are the relative perspectives of value upon which the subject's choices were made?

I don't know if anyone else has done praxeological analysis of such contrived situations, where the perception of futility is induced to see how subjects react. Futility flips the perceived value that drives human actions from "Which available action will improve my perception of the situation?" to "I must choose an action which has observable consequences to PROVE my actions are not futile." This perception of futility lies at the root of many things that mind-probers think are mysterious. Pretty obvious in the praxeological view, in my opinion.

This is close in my mind to the experiment that placed an actor pretending to need help on the path of theology students going to deliver a paper on mercy, something like that.

Good Samaritan experiment (Princeton Theological Seminary)

DANIEL BATSON

Well, people need to recognize it as moral at all. If that's what it is though by somebody's definition of moral and definitions of moral change it's a, a principle of right or wrong conduct and you know, so I mean, it's for a, a good Randian Ayn Rand you know, to sort of pursue self fulfillment and aggrandizement is moral.