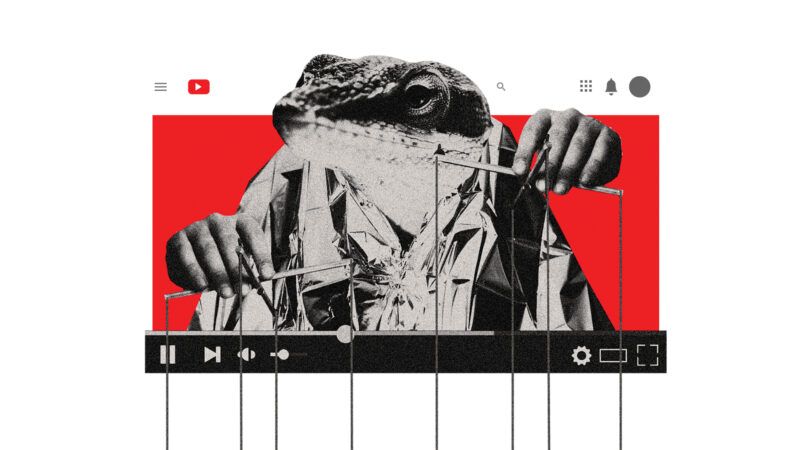

YouTube Algorithms Don't Turn Unsuspecting Masses Into Extremists, New Study Suggests

A new study casts doubt on the most prominent theories about extremism-by-algorithm.

"Over years of reporting on internet culture, I've heard countless versions of [this] story: an aimless young man—usually white, frequently interested in video games—visits YouTube looking for direction or distraction and is seduced by a community of far-right creators," wrote Kevin Roose for The New York Times back in 2019. "Some young men discover far-right videos by accident, while others seek them out. Some travel all the way to neo-Nazism, while others stop at milder forms of bigotry."

Never one to dial back alarmism, The Daily Beast put out a headline in 2018 calling YouTube's algorithm a "far-right radicalization factory" and claimed that an "unofficial network of fringe channels is pulling YouTubers down the rabbit hole of extremism." Even MIT Technology Review sounded the alarm in 2020 about how "YouTube's algorithm seems to be funneling people to alt-right videos."

A new study by City University of New York's Annie Y. Chen, Dartmouth's Brendan Nyhan, University of Exeter's Jason Reifler, Stanford's Ronald E. Robertson, and Northeastern's Christo Wilson complicates these popular narratives. "Using paired behavioral and survey data provided by participants recruited from a representative sample (n=1,181), we show that exposure to alternative and extremist channel videos on YouTube is heavily concentrated among a small group of people with high prior levels of gender and racial resentment," write the researchers. "These viewers typically subscribe to these channels (causing YouTube to recommend their videos more often) and often follow external links to them. Contrary to the 'rabbit holes' narrative, non-subscribers are rarely recommended videos from alternative and extremist channels and seldom follow such recommendations when offered."

The researchers were precise in their definition of what constitutes a "rabbit hole." They also distinguished between "alternative" content—Steven Crowder, Tim Pool, Candace Owens—and "extremist" content—Stefan Molyneux, David Duke, Mike Cernovich. (Methodological information can be found here.)

Less than 1 percent—0.6, to be exact—of those studied by the researchers were responsible for an astonishing 80 percent of the watch time for channels deemed extremist. And only 1.7 percent of participants studied were responsible for 79 percent of the watch time for channels deemed alternative. These study participants typically found those videos by having watched similar ones previously.

But how many people studied by the researchers watched innocuous videos and were led by algorithms from those videos toward extremist content? This occurred in 0.02 percent of the total video visits that were studied, for a grand total of 108 times. If you apply an even stricter definition of rabbit holes and exclude cases where a viewer was subscribed to a similar extremist or alternative channel and followed an algorithmic suggestion to a similar one, rabbit holes become even more rare, comprising 0.012 percent of all video visits.

Basically, the narrative that hordes of unwitting YouTube browsers are suddenly stumbling across far-right extremist content and becoming entranced by it does not hold much water.

Instead, it is people who were already choosing to watch fringe videos who might be fed extremist content by the algorithm. It's the people who were already Alex Jones-curious, per their own browsing habits, who receive content about Pizzagate and how the chemicals in the water supply are turning the frogs gay and who veer toward Cernovich or Duke types. (Duke himself was banned from YouTube two years ago.)

Plenty of tech critics and politicians fearmonger about algorithmic rabbit holes, touting these powerful algorithms as explanations for why people get radicalized and calling for regulatory crackdowns to prevent this problem. But this new study provides decent evidence that the rabbit hole concern is overblown, and possibly that the steps YouTube took to tweak its algorithm circa 2019 have led to the relative absence of rabbit holes. The changes "do appear to have affected the propagation of some of the worst content on the platform, reducing both recommendations to conspiratorial content on the platform and sharing of YouTube conspiracy videos on Twitter and Reddit," write the researchers.

"Our research suggests that the role of algorithms in driving people to potentially harmful content is seemingly overstated in the post-2019 debate about YouTube," Nyhan tells Reason, but "people with extreme views are finding extremist content on the platform. The fact that they are often seeking such content out does not mean YouTube should escape scrutiny for providing free hosting, subscriptions, etc. to the channels in question—these are choices that the company has made."

Algorithm tweaks have not stopped tech journalists and politicians from frequently bloviating about YouTube radicalization, regardless of whether their rabbit hole critique remains true today.



I expect that most of the radicalization relating to YouTube is people on the Left being radicalized by stories of people on the Right being radicalized by YouTube.

Or just redefining what is right wing at all and lumping everything under that new definition as "extreme". Sorry, if you think Joe Rogan is a pipeline to neo-nazis then you have problems with reality.

Everyone knows words and ideas are dangerous. We need to live in a world where all communication is curated and approved. We can only have freedom if our thoughts are controlled.

Sticks and stones may break my bones,

but words are literal violence.

Help, I need puppies and play-doh!

Studying algorithms should have nothing whatsoever to do with particular extremism. It should be focused on exactly how those algorithms manipulate our lizard brain. The process of manipulation has been known for 100 years.

Well, to be fair, everyone needs puppies.

Just to be safe, YouTube should immediately ban dangerous rightwing extremists like shoe0nhead.

#LibertariansForTrustingBigTech

Obviously the solution is licensing people to use social media. This process would involve rigorous training in logic and IT, followed by written testing and psychological evaluation. After a successful probationary period, probably three months, the license would be granted for two years.

Don’t forget a loyalty test and a “donation” to The Party.

Or we could just teach critical thinking in schools. Well, except I don't trust the teachers to teach it.

Most of the teachers can't teach logic because they can't _do_ logic. That's why they majored in education rather than a well-paid specialty.

There are also the breathless assertions that the only sort of radicalization is movement to the political right. Movement to the political left is either ignored or considered proper.

+1

But only by the media, which is itself 90% partisan Democrat. So they naturally see any deviation from the (literal) party line to be a drift to the partisan right.

This wouldn't be so bad, except the whole concept of objective journalism and separating reporting from opinionating has been lost. So it's impossible to get the news without a heaping dose of being told what you're supposed to think. The other side isn't any better, but at least they're honest about their partisan agenda.

Solution: Seek out more neutral journalism outlets, and don't get your news from commentary and opinion pages.

> There are also the breathless assertions that the only sort of radicalization is movement to the political right. Movement to the political left is either ignored or considered proper.

Again, this goes to the thesis that society has always been able to work out when the right goes too far, but we can never seem to work out when the left goes too far.

How can they ever figure out when the left goes too far when they can't even figure out that the National SOCIALIST party was SOCIALIST... LEFT WING!!!

But of course we all know that THEY know this... it's all part of the greater goal of changing definitions... like man can be woman

etc., etc.

> "YouTube's algorithm seems to be funneling people to alt-right videos."

Akshually, I only get one kind of right wing videos on YouTube. They're all Dennis Prager videos whining that YouTube is being mean to Dennis Prager. o_O

And I really don't get most Left wing woke videos either.

So why is my YouTube so partisan-free? The algorithm. I don't watch that stuff so it never recommends more of it to me. Simple as that. That these political types tend to only see political videos means that they are political types that have a history of watching political videos. Duh.

This may interest you...

I used to think that FacePooo must be TERRIBLE about shutting down conservatives! As much as these “victims” yammer all day about it! Turns out that FacePoooo doesn’t shut them down until they are WAAAAY into the red zone… ‘Cause all of the outrage-posters attract like-minded folks to FacePoooo, and generate revenue for FacePooo!

The below opened my eyes about all that…

https://www.theatlantic.com/technology/archive/2022/02/facebook-hate-speech-misinformation-superusers/621617/ Facebook Has a Superuser-Supremacy Problem …. Most public activity on the platform comes from a tiny, hyperactive group of abusive users. Facebook relies on them to decide what everyone sees.

Hey look, sarcasmic the drunken Marxist retard who consumes nothing but radical left wing media realized after reading a propaganda piece on a radical left wing media site that conservatives are bad!

"Everywhere I look I see exactly what I'm looking for!"

MASSIVE projection ya got going on there, Tulpa the Tulpoopy Tulpuppy! Echo chamber much?

(You're ENTIRELY too stupid to refute what I say, and what my links say, Tulpoopy!)

I know sarcasmic! Your links from Salon, The Atlantic, and Mother Jones are utterly irrefutable! You DESTROY the cons with FACTS and LOGIC! You're not at all a sub-literate stupid piece of shit drunk who subsists on taxpayer handouts! Facebook only bans radical right wing Nazis like The New York Post! I know it because it says so right there on Salon, The Atlantic and Mother Jones! QED!

That perfectly explains why visiting the site without an account shows you nothing but boring normie reddit-tier shit and MSDNC political coverage. Or why people who are subscribed to right-leaning content creators get constant recommendations for leftist pseuds like Destiny. Dumbfuck

> "Everywhere I look I see exactly what I'm looking for!"

Why do Youtube and Google pretend they do?

My biggest problem with the “Algorithm” (cue loud stentorian echoes) is that after only a few minutes of watching Texas Hold’em videos I can’t get anything but those videos. If I watch movie trailers I have the same problem. I have to exit my browser and delete all history and cookies (which I have it set to do anyway). The ability to just randomly browse is gone.

I don't use YouTube very often, but I've seemingly cultivated my feed into recommending me almost exclusively Norm MacDonald videos. So, I ain't got any issue with what the Google is pushing on me. I'm already a radical MacDonaldist, I'm willing to follow this rabbit hole to the end.

If you're waiting for new Norm MacDonald content I've got some bad news for you.

Oh goodness! Brother R.G. Stair would say you are too far gone! He went into fits when photos from the mainstream media showed President Little Bush Boy making the "Satanic" Texas Hold 'Em hand sign! 😉

Racist Algorithms!?!

If everything is racist, nothing is racist.

The Schwartz has led me to The One True Pizza Hut! Now that is the ONE and ONLY thing that I need in my life... The One True Pizza Hut gives me EVERYTHING that I might EVER want or need!

(So YES, "Use the Schwartz"... Use the Schwartz!!! May The Schwartz be with you!)

HAHAHAHAHAHAHAHAHAHAHAHAHAHA

HAHAHAHAHAHAHAHAHAHAHAHAHAHA

HAHAHAHAHAHAHAHAHAHAHAHAHAHA

HAHAHAHAHAHAHAHAHAHAHAHAHAHA

JESUS CHRIST SARCASMIC MY SIDES ARE IN ORBIT!!!!!!

HAVE YOU EVER CONSIDERED A CAREER IN PROFESSIONAL COMEDY?

You're too boring! The Russian programmers that write the code that generates you? Tell them to "up their game", a LOT!!!

Lmfao. Yeah, you're the true freethinker, sarcasmic. Copy-pasting the same half dozen 5 paragraph essays every day of your life, 12-16 hours per day, for well over ten years and the same 5 links dating to 2016 from Salon, The Atlantic, and Mother Jones. The real NPCs are the people who point out that you're a neurotic Marxist drunk still seething 20 years later about your wife leaving you and bragging about your job as a fry cook who doesn't know what a Cuban sandwich is.

Fuck bro. Seriously. As much as I love tweaking you and baiting you into posting retarded shit, it's to a point where even I feel pity for you.

Sometimes I am tempted to pity myself for having to put up with PROUDLY wrong and ignorant lie-spreaders like YOU! But I keep on keeping on...

If you REALLY pity me, humor me and GET THE FUCK OFF OF THIS POSTING BOARD and spread your anti-freedom filth elsewhere, where people appreciate your evil and your stupid!

On the one-in-a-billion chance that you GROW UP and seek wisdom, start here...

The intelligent, well-informed, and benevolent members of tribes have ALWAYS been resented by those who are made to look relatively worse (often FAR worse), as compared to the advanced ones. Especially when the advanced ones denigrate tribalism. The advanced ones DARE to openly mock “MY Tribe’s lies leading to violence against your tribe GOOD! Your tribe’s lies leading to violence against MY Tribe BAD! VERY bad!” And then that’s when the Jesus-killers, Mahatma Gandhi-killers, Martin Luther King Jr.-killers, etc., unsheathe their long knives!

“Do-gooder derogation” (look it up) is a socio-biologically programmed instinct. SOME of us are ethically advanced enough to overcome it, using benevolence and free will! For details, see http://www.churchofsqrls.com/Do_Gooders_Bad/ and http://www.churchofsqrls.com/Jesus_Validated/ .

I do tend to be suspicious of studies that require defining which opinions are extremist ahead of time for this. Looking at the methodology we have this paragraph:

> In our typology, alternative channels tend to advocate “reactionary” positions and typically claim to espouse marginalized viewpoints despite the channel owners primarily identifying as White and/or male. This list combines Lewis’ Alternative Influence Network (31), the Intellectual Dark Web and Alt-lite channels from Ribeiro et al. (22), and channels classified by Ledwich and Zaitsev (23) as Men’s Rights Activists or Anti-Social Justice Warriors. Example alternative channels in our typology include those hosted by Steven Crowder, Tim Pool, Laura Loomer, and Candace Owens.

I don't actually know the people since I don't watch Youtube or pay attention to anything other than essays in Commentary, but I think some of the lines there are interesting.

The extremist one is similar: using the SPLC as the judge of extremism is tenuous, even if I'd agree that David Duke is pretty dang extreme and awful.

I'm not going to read the entire white-paper, it's 98 pages, but I just have some gut-level concerns with it. Though, I don't really know how you'd do this study otherwise. I don't know.

> I don't actually know the people since I don't watch Youtube or pay attention to anything other than essays in Commentary, but I think some of the lines there are interesting.

I know who all of those people are, watch a couple of them semi-regularly, others almost never.

One, maybe two of them would be considered standard right, GOP talking-point channels, or channels which are favorable to GOP people. One of them identifies as being left-wing, but like many of the left-wing people I follow, has become horrified by the left's extremism and so gets accused of being right-wing.

When you start your premise based on:

The GOP is an extremist organization

You've jumped the shark. And this is while we've watched the mainstream "center" left become openly racist and Marxist. So... make of that what you will.

There's always going to be issues with identifying extremism. It's not objective, and extremism yesterday is standard today. Things change. This paper seems to very specifically locate extremism as well, but that's a different and political argument and is less interesting than the general problem.

I think there is a pessimism about the value of argument with these people, though. There's always this assumption that "good argument can't win, therefore we must prevent people from ever even seeing bad arguments." I just don't think that's true.

Evidence of the Holocaust does quite well against Holocaust deniers.

The evidence of success and the moral arguments behind markets does quite well.

The pro-life movement is a long process of argumentation that has effected meaningful change in people's minds.

They give a list of examples, but then also say:

> We are not making these lists publicly available to avoid directing attention to potentially harmful channels. We are, however, willing to privately share them with researchers and journalists upon request.

Reason should get us the list. I wonder if they're including broader channels like The Quartering, or people who don't even really do political content like Critical Drinker or Geeks & Gamers in their "alternative" or "extremist" category. Is Viva Frei on their list? Nick Rekieta?

I don't really know Mike Cernovich beyond the name, and I don't know Faith Goldy or Stefan Molyneux. I'm not sure if they're actually dangerous. And without them giving a full list of what qualifies as "Extremist," we don't know how broad the net is. It's not really a good study if they're keeping important information hidden.

I think I read "The MAGA Mindset" by Cernovich when Trump was first elected. He was a fairly straightforward "Alpha Male - Fight Hard" sort of voice. I have no idea how that may have shifted in the last 6 years.

I'm glad to know there is a scientifically valid methodology for identifying extremists.

(((Commentary?))) Oooh! David Duke wouldn't like you either! 😉

Seriously, using the SPLC as a guide to bigotry is worse than tenuous, it is hypocrisy given their internal practices of racism and sexual harassment:

The Southern Poverty Law Center is Everything That Is Wrong With Liberalism

https://www.currentaffairs.org/2019/03/the-southern-poverty-law-center-is-everything-thats-wrong-with-liberalism

It's rather telling they didn't generate the full lists used in the appendices.

youtube is valuable for sending my nephew music from the 70s & 80s he'll never hear otherwise. and Simpsons clips. if you're weak enough to become a nazi or isis because of youtube you were probably fucked anyway.

There seems to be a lot of youtube hate that feels misplaced to me. The people that run Youtube are objectively awful. Yes, youtube DOES "pick on conservatives" more than they do figures on the left-- but even that is highly nuanced as many of the left wing people I listen to are also getting picked on by youtube. This is mainly because youtube isn't just "favorable to the left", they're favorable to a particular narrative, which at this point in history happens to be left-wing.

I'm a little surprised by people (BUCS included) who don't watch youtube much or say "the only thing I get are cat videos".

Youtube, for all its faults is still an amazing service that allows you to get a wide range of views (even if the people I tend to get my views from have all long been demonetized and are teetering on the edge of a second or third hard strike). And say what you will, it doesn't have to be politically motivated content. You can get a goddamned PhD in history from the excellent documentaries that youtube historians and other people produce.

If you're a WWII buff as I am, I strongly recommend Mark Felton Production videos. Some of the best stuff out there. If you're into English history, you can watch a channel such as The History Chap who will give you great historical rundowns of events in British History.

If you're into language and language history and etymology (as I am) you can find a great channel called NativeLang which will teach you the original pronunciation of ancient Mongolian or the proper Latin pronunciation of Veni Vidi Vici. (Hint: yes, it IS "Weni Widi Wici," I'm sorry to say.)

Part of the above is why the Youtube/Google leadership makes me so angry. Youtube IS the promise of the original internet. It's allowed people who are literally unheard of to make excellent, high value, high quality content for free. But Youtube literally can't stand what it's become. Youtube wants to be CBS, NBC, ABC of the 1970s. It's demonetizing creators of all types, pushing them out and trying to create favored content around mainstream corporate channels such as CNN, MSNBC etc. Youtube wants to be a tri-letter television network of the 1970s. It's stupid and incredibly frustrating. Youtube's leadership literally doesn't understand its own success.

cable access for the new millennium.

I hardly watch videos at all. I don't watch TV. It's just a preference. I read widely, and probably read more extreme viewpoints than the average person. I play video games. I just never got into video stuff that much. I don't know why.

Might be an age thing. I do use Youtube for self-help stuff, which it is an excellent resource for. It has fundamentally altered self-learning and home improvement stuff. It's great. (Some old books are still great intros though.)

Do you listen to audio books? I'd say roughly 15-20% of the videos I "watch" don't require eyes on the screen. I listen to the video on wireless headphones while I putter around my house. Most podcast conversations can be taken in this way.

You know, I have considered getting into audio books, but no, not really. I tend to really compartmentalize what I'm doing. So, I work a lot in silence or only with music. I tend to read when I read. I tend to play games when I play games. I've broken a little on podcasts, but even those are mostly a driving or walking thing for me. It's a habit for me.

I was getting into podcasts for a while, but at some point I found it to be too much, so I really cut down to a few I really like and otherwise ignore most. So, Comedy Bang Bang and Mad Dogs and Englishmen. The Reason Roundtable got cut out because they got to be too Reason-y for me.

YouTube sucks ass. The algo is shit. The interface is shit. It's slow. It's probably the most ad-heavy site in the history of the web now (god help you if you try to use it without uBO and SponsorBlock). It's a pale shade of its pre-Google glory. On desktop I use FreeTube, which eliminates ads, the clunky web interface and the algo. On mobile I use NewPipe, which isn't as good as FreeTube but still passable.

though I did see a cool video of teens in lingerie having a pillow fight.

Btw, DTube and Odysee are also pretty good YouTube alternatives that you might want to check out. Odysee in particular allows single-click syndication from YouTube so a lot of YouTube content creators sync their content over there. It's another monetization channel, plus Odysee is very loose with the leash... thus far. They just recently introduced ads, which will likely change all that. It was originally a shitcoin-based service. They use the LBRY protocol and blockchain, but it's a fully-centralized service unlike LBRY. The ads there are less intrusive and easier to block than YouTube though. YouTube ads are a pain in the ass because they serve the ads from the same URLs as the main video content, so DNS-level blocking doesn't work - you need a script blocker like uBO or NoScript.

Dan Carlin isn't prolific, but has real "You Are There", gut-punch, "what was it like?" historical epics. Hardcore History is the overall title.

His six part "Supernova in the East" series about Japan from '31 to '46 was a horrifying revelation about a part of WWII I was wildly uneducated about. Highly recommended. And it's only about 25-30 hours!

Agreed. A lot of the history content is good. Basically in-depth podcasts with graphics.

Mark Felton hits some fascinating subjects. Have you checked out TIK at all on there?

Some of his definitions are a bit Rothbardian absolutist for my taste. But he does revisionist history (in the proper good sense of the term) well. His episodes on the Weimar Bank wars, and his focus on things like supply logistics have been eye opening and game changing for me.

His series on Stalingrad has been going on for almost two years now I think. Literally covering the fighting street by street.

It is increasingly difficult to read any news or commentary from any source without wanting to throw & break things. There is zero reality bar. The internet & social media have fundamentally altered human social relationships. It's more like the leap when we invented and developed language than even the inventions of writing & printing.

The techniques & devices of brainwashing & cultivating true believers are very very old. As old as religion. ISIS, & other radical extremists, Q-anon, & all of the White nativist groups, & pedophiles, work out of the same book. But hey, let's blame it on the algorithms. Instead of the people recruiting. Keep everything as muddy as possible. So the "normies" don't catch on.

Lmfao. Considering Nick Gillespie, Robby Soave and Michael Moynihan all write for The Daily Beast, I'm surprised you got this line to stick. It's a good thing Reason has 45 "editors" who never actually edit anything.

They write for "not-MY-tribe"?!?!? TOTAL PROOF that they are EVIL liars!!!

(Right, right-wing wrong-nut?)

Hey good job, sarcasmic. Most of those letters are from the alphabet!

The Algorithms would have a real problem with me! Back in the Nineties, you know, the Wild West Days of the Internet, I enjoyed a site called The Seventh Seal from Sweden (named after the Ingmar Bergman film) and it had links to Web Sites of every conceivable political persuasion.

From Liberal to what would be called Tankie Communist today; from Conservative to Fascist/Nazi White Supremacist; Other Racist Sites such as Black Nationalist and Eugenics Sites based in China; Green/Localist/"Small Is Beautiful" Sites; Secessionist and National Liberation Sites; all the flavors of Anarchism; and, of course, a separate page for Libertarian/Free Market Capitalism! Too bad this Site is nowhere to be found anymore, not even archived AFAICT.

For general knowledge about both mainstream and off-shoots of World Religions as well as philosophical schools of Unbelief, I also refer to the Sites for:

Ontario Consultants for Religious Tolerance

http://religioustolerance.org

and

Internet Infidels

http://infidels.org

Again, I'm surprised that all the Big Tech companies' servers haven't frozen up and short-circuited over my browsing.

Sites like that were what made the internet fun before the soul-sucking content Nazis took over. The platformization of the internet was and remains a malignant fucking cancer. I really truly can't imagine the type of miserable stick-up-the-ass fucking cunts who would consciously choose Facebook, Twitter and post-Google YouTube over, say, SomethingAwful, 4chan and YTMND.

They have a technique to deal with such eclecticism nowadays: they claim all the "diversity" is a cover for your penchant for sites and damn you anyway.

Dang. Forgot Reason board formatting...

They have a technique to deal with such eclecticism nowadays: they claim all the "diversity" is a cover for your penchant for *Insert Badthink here* sites and damn you anyway.

No, YouTube algorithms don't turn unsuspecting masses into extremists.

That's what we have colleges and universities for.

My own sense is that social media is more homogenizing than radicalizing.

I personally think that groupthink via social media is an explanation behind Trump's rise and the widespread belief in the wisdom of covid-inspired lockdowns.

F'Youtube.... Vimeo is much better.