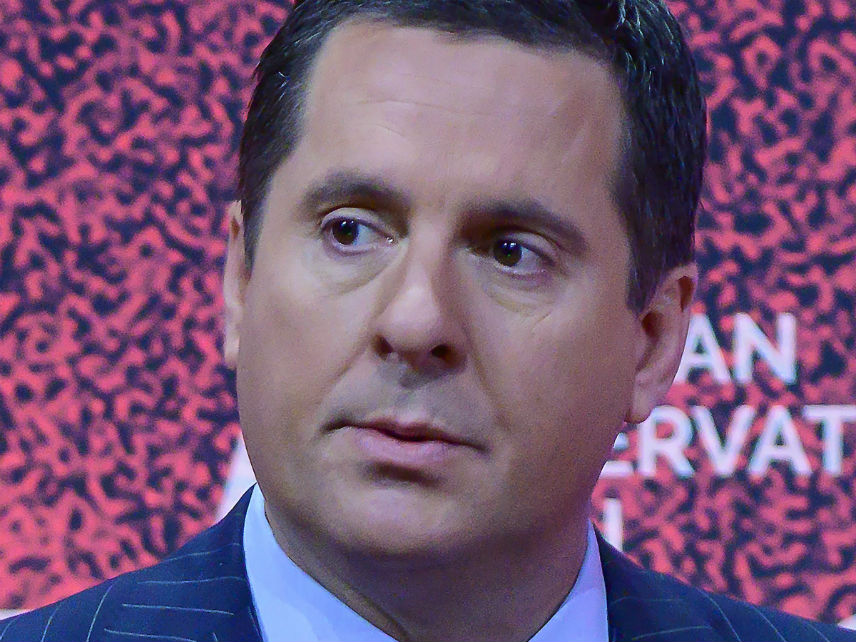

Devin Nunes' Hilarious Twitter Beef

...and its not-so-hilarious implications

The chief difficulty for an internet lawyer who, like me, is trying to soberly assess the legal merits of Rep. Devin Nunes' lawsuit against Twitter is that it's screamingly, impossibly funny. Still, as unintentionally hilarious as the Nunes complaint is, it needs to be taken seriously as a possible harbinger of renewed efforts in Congress and the courts to punish internet companies for (as some critics insist) unfairly censoring conservative thought.

In order to get to the serious issues, though, we should at least take a look at what's funny about the California Republican's complaint. And don't take my word for how funny it is—read it out loud to any group of your friends, and they'll crack up. Heck, read it out loud by yourself and you'll crack up. We can start with the defendants: in addition to naming Twitter itself (the congressman thinks the company surely has the cash to meet his demand for $250 million in reputational damage), Nunes names two pseudonymous Twitter users—"Devin Nunes' Mom" and "Devin Nunes' Cow"—as well as Republican political/communications strategist Liz Mair and her consulting firm, Mair Strategies LLC. Mair, the only actual human being to be targeted by name in the complaint, was a critic of Donald Trump when he ran for president, and is even more of a Trump critic now. Her snarky tweets about Nunes might be taken as merely an extension of her opposition to Trump, since the congressman has been an avid supporter of the president. Except for one thing: when you read her tweets (and other links to her public statements) in Nunes' filing, she seems plainly to dislike (and disapprove of) Nunes as a legislator and as a human being, independent of who happens to be in the White House these days.

You get the feeling when you read the complaint, though, that Mair was added mainly to make the lawsuit seem less…well, whimsical. Otherwise, the complaint's substance would be almost entirely a recitation of Bad Things Tweeted About Nunes by "Devin Nunes' Mom" and "Devin Nunes' Cow." There are, let's face it, a lot of mean tweets there. Read the long complaint straight through, and you find yourself not only wondering what the congressman's relationship with his real mom is like (she eventually does get mentioned in passing) but also whether any of his cows do have, shall we say, a beef with him. In the interests of fairness, I won't recite any of the mean tweets from the defendants—although I really want to, because the ones from "Mom" and "Cow" are so comically over-the-top—but will instead characterize them generally. Mair's tweets (comparatively few of which are included in the document) are fairly standard fare coming from a political opponent; the tweets from "Mom" and "Cow," in contrast, are relentlessly profane, peppered with the occasional pop culture reference that Nunes (or, more precisely, his lawyer, Steven Biss of Charlottesville, Virginia) labors to spell out for the court—although this reader found some of the explanations incorrect or incomplete. Biss explains in a footnote that "Scabbers"—a name "Mom" applies to Nunes—is the name of Ron Weasley's pet rat. That's technically true but so woefully incomplete an explanation of Scabbers's significance that one wonders if Biss read past the first Rowling book. (You Hogwarts alums will note I'm avoiding spoilers here.) Then there's the Human Centipede cartoon, which, perhaps mercifully, the plaintiff doesn't seem to understand, much less correctly explain.

Libel

Most of the tweets in question (and Nunes feels compelled to list other, non-Twitter sources for Mair's alleged calumnies) are so overtly mean-spirited that they seem aimed to sting Nunes personally rather than damage his reputation; they don't come across as serious statements of fact. Although reputational damage is central to claims that the defendants committed libel, it seems unlikely that anyone who had a high opinion of Nunes would suddenly have lowered it due to anything "Mom" or "Cow" tweeted. It also seems clear that Nunes and his lawyer anticipated that the defamation claims might not be strong enough to build a winning case on, especially given that Nunes is a public official. Since the key Supreme Court libel case, New York Times v. Sullivan, in 1964, the American system of libel law has made it harder for public officials and public figures to recover. The theory is that the First Amendment provides stronger protections when people utter (or publish) critical opinions about politicians. This long-standing precedent does not please President Trump, who'd like to make it easier to sue his critics for libel (and win), and it recently has been criticized by Justice Clarence Thomas. It seems likely that Nunes wants to revisit the Sullivan case as well, although his complaint takes pains to state expressly that the defendants acted with the "actual malice" required for a public-official plaintiff to win a libel case. (There remains, of course, the related question of whether "Devin Nunes' Mom" has enough gravitas to damage Nunes' reputation in the real world among those who know and admire him. At the very least, it may be difficult to show that $250 million of reputational damage happened.)

Fighting Words

In addition to the libel/defamation claim in the lawsuit, Nunes also includes a "fighting words" claim, based on a Virginia statute. The theory here is that words designed to hurt someone's sensibilities can be the basis of a legal claim of damages—that they're a narrow exception to the First Amendment's broad protections. It's true that there's a long-standing Supreme Court precedent, Chaplinsky v. New Hampshire (1942), that supports this argument. But that case is more often cited to distinguish it from other cases in which courts are asked to punish offensive speech. Generally speaking, the subsequent cases suggest that the distinction is this: if the speech really led to a fight, then it may truly be a "fighting words" case—otherwise, the offensive words are likely protected speech, even if they cause anger. Nunes fudges this question a bit: "Defendants' insulting words, in the context and under the circumstances in which they were written and tweeted, tend to violence and breach of the peace. Like any reasonable person, Nunes was humiliated, disgusted, angered and provoked by the Defendants' insulting words." Yet there doesn't seem to have been any actual violence or "breach of the peace."

Nunes' defamation and "fighting words" claims aren't the strongest. But even if we allow that the individual defendants, pseudonymous and otherwise, might theoretically have defamed Nunes, the heart of the complaint is the inclusion of Twitter Inc. as a defendant. Suing a big tech company is the real point of this case, and Nunes makes that clear with claims that Twitter somehow intentionally facilitated and orchestrated these efforts by some of his critics to damage his reputation and hurt his feelings. There are some big issues here, and even if Nunes' case itself doesn't meet with success in Virginia's courts, we know from other sources as well that a significant subset of American conservatives is certain that Twitter and other big American internet companies, like Facebook and Google, are engaged in actively censoring or otherwise discouraging conservative voices.

Hate Speech

The evidence that America's tech companies are engaged in any systematic censorship or other content interventions based on ordinary political points of view is slim. Yes, certainly, some tech companies have engaged in targeted interventions against particularly egregious individuals, notably Alex Jones, whose "hate speech" content seems to have been linked to real-world threats ranging from harassment of Sandy Hook victims' families to Pizzagate itself. But for the most part the companies know that, to the extent they engage in any moderating of user-generated content on social media platforms, the invariable response is more dissatisfaction: to wit, "you didn't censor enough" or "you censored too much" or "you censored the wrong stuff." It doesn't help that neither artificial-intelligence-generated algorithms nor the companies' legions of boiler-room human content-standards monitors are good at nuance or context. A common complaint is that a tweet or status update that reports or criticizes "hate speech" may end up being censored for quoting it. Some factions in social media even "weaponize" formal complaint processes to hinder or cause the removal of speakers they dislike.

Nevertheless, some conservatives (and, to be clear, some critics among the progressive left as well) are dead certain that the platforms are actively imposing a political agenda on social media content. As an attendee at the Lincoln Network's "Reboot 2018" event last September, I listened as Jerry Johnson, president and CEO of the National Religious Broadcasters, railed against "Section 230" of the Communications Decency Act, which later became the provision of the 1996 omnibus Telecommunications Act that shields internet platforms from liability for content they didn't develop or originate. Johnson pointed out, correctly, that Section 230 (the only section of 1996's Communications Decency Act to survive a constitutional challenge other civil libertarian lawyers and I brought to the U.S. Supreme Court in 1997) allows platforms like Facebook and Twitter to curate some user-generated content without automatically acquiring vicarious liability for the content they didn't remove (and, given the sheer volume of user content, likely didn't even see). His solution: let's impose common-carrier-like obligations on Facebook, Twitter, and other platforms so that they can never censor any content that happens to have some political dimension to it.

I talked with Johnson after the panel and got a chance to look at the booklet of censorship abuses he was carrying around to show people. In my view, it wasn't compelling evidence—it was anecdotal rather than numerical, and quite often listed instances of temporary takedowns of content that ultimately was restored after criticisms or complaints. I suggested that perhaps his sense that Twitter or Facebook were exhibiting anti-religious (or specifically anti-Christian) prejudice might be instances of confirmation bias. But let's be real—I was never going to persuade the leader of a faith-based organization that confirmation bias can be a real problem.

Shadow Bans

The question of faith brings us back to Nunes' complaint, and you can see that it's informed by what might be called a religious conviction that something nefarious is happening when he accuses Twitter of "shadow-banning." So-called "shadow-banning" is generally understood to have occurred when a user posts content but that user's post is somehow rendered invisible or less likely to be seen—either as the result of a moderator's intervention or as a result of software.

Nunes' complaint asserts, baldly and without evidence, that Twitter deliberately "shadow-banned" his tweets in order to "amplify the abusive and hateful content published and republished" by Mair, "Mom," and "Cow." His proof, such as it is, seems to lie in his having won re-election in his district by a smaller percentage than he normally wins with. It's terrible, of course, to endure a smaller-than-expected victory margin in an election.

Yet the platforms deny having "shadow-banned" anyone, at least not in the way Nunes means, and not on the basis of political persuasion. And, to put the matter plainly, their denials are credible. Most social media platforms are advertising-supported, and it does none of them any good to discourage conservatives from participating in commerce that makes money for them (because conservatives see ads and buy things at least as much as progressives do). I will note, though, that Nunes, now with 405,000 followers, has an order of magnitude more followers than I do, even though he's written only about 2,000 tweets compared to my 38,000. Maybe I've been shadow-banned! Devin Nunes' Cow has left us both in the dust, however: after Nunes' lawsuit was announced, the "Cow" account, which had just a few thousand followers, skyrocketed to (as I write this) 633,000 followers. Use your power wisely, cow. (The "Mom" account has been suspended, apparently due to a complaint from Nunes' actual mom.)

Section 230

As I can't help underscoring, the Nunes case against Twitter is funny, but it nonetheless needs to be taken seriously. Let's compare it to a much more substantively serious case, which is the case that the Feds brought to compel Apple to break iPhone encryption. That case, had it gone to a verdict, arguably would have been a no-lose case for the Department of Justice and FBI. If they'd won it, they would have been able to compel Apple to help them decrypt stuff or provide access to secure devices (and, most likely, to make their crypto and devices less secure overall). If they had lost, they'd have had a decision they could leverage in Congress so as to amend the law and allow the government to compel such cooperation. The reason the Nunes case needs to be taken seriously is that if Nunes is poured out of court (the most likely short-term outcome), it can be adduced as evidence that libel laws or Section 230 (which protects internet services from liability for content they didn't originate) need to be changed in a way that favors plaintiffs. Libel cases are almost invariably brought by rich people against poorer people, so that isn't an outcome one would hope for if one were not, say, Donald Trump or Clarence Thomas.

More important than the question of restructuring libel law, however, is the question of whether it makes sense to further amend Section 230 so as to shoehorn social media platforms into either a common-carrier model (like phone companies) or a traditional publisher model (like the Wall Street Journal or this publication). I think making either choice is a mistake, because society has a third First Amendment model that better fits what social media platforms do. That model, grounded in a 1959 Supreme Court case called Smith v. California and later adapted to the online world in the 1991 case Cubby v. CompuServe, points out that bookstores and newsstands deserve to be protected enclaves of a variety of content in which it's not presumed that, if the proprietor removes some books or magazines, it follows that she has adopted the content of all the other books or magazines she didn't remove. One could insist that a bookstore or newsstand be a common carrier, of course, but that would be a strong incentive to get out of the bookstore or newsstand business. (I wouldn't much enjoy setting up my own science-fiction bookstore if I were also compelled to never exclude romance novels and biographies.)

Section 230 has its critics, but (together with 1998's Digital Millennium Copyright Act), it's generally regarded by those of us who work in internet and technology law as having created the environment that allows countless successful enterprises, from purely commercial enterprises like Google and Facebook and Twitter to purely philanthropic enterprises like Wikipedia, to flourish. The success of the commercial internet companies—in particular the U.S.-based ones—has been so remarkable in recent years because the current market leaders have mostly dusted their competition.

Ironically enough, the tech companies boosted by Section 230 owe some part of their success to conservatives (including religious conservatives) in the 1990s who insisted that online platforms ought to have the right to remove some content (like inappropriate sexual or criminal content) without risking greater legal liability for having done so. The statutory language itself provides that Section 230 protects "any action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected." In short, the idea that brought conservatives and progressives together back in 1996, when Section 230 was passed, was that platform providers ought to have incentives to yank what they see as offensive or disruptive off their systems, without creating legal problems for themselves if they overlooked anything. It's a mistake for today's critics of social-media content moderation to push for incentives to let the worst tweeted or posted garbage pile up rather than just take that rubbish out.
