Would Data Breach Notification Laws Really Improve Cybersecurity?

Responses to top-down federal dictates are hard to predict.

Another month, another major hack. This time, the compromise of consumer credit reporting agency Equifax has exposed the personally identifiable information (PII) of roughly 143 million U.S. consumers (not customers!) to outside groups.

People are understandably furious, and they want solutions. But we should be wary of quick legislative proposals that promise to easily fix our cybersecurity woes. Our problems with security are deep and hairy, and require lasting solutions rather than short-term Band-Aids.

There is no question that Equifax royally botched its handling of the corporate catastrophe. People generally don't have good experiences with credit reporting companies as it is. As a kind of private surveillance body, they collect data on us without our permission to determine what kinds of financial opportunities will be available to us. They often get things wrong, which creates unnecessary headaches for unfairly maligned parties who must prove their financial innocence to a large corporate bureaucracy.

You'd think that a company whose sole purpose is to maintain credible, secure dossiers on people's financial profiles would make security one of its highest priorities and would have a strong mitigation plan in place for the horrible possibility that it did get hacked. You'd be wrong. While the details of what exactly went wrong at Equifax are still being fleshed out, its incident response leaves much to be desired, to say the least. (The fact that the company's Argentine website had a login whose username and password were both simply "admin" does not inspire confidence.)

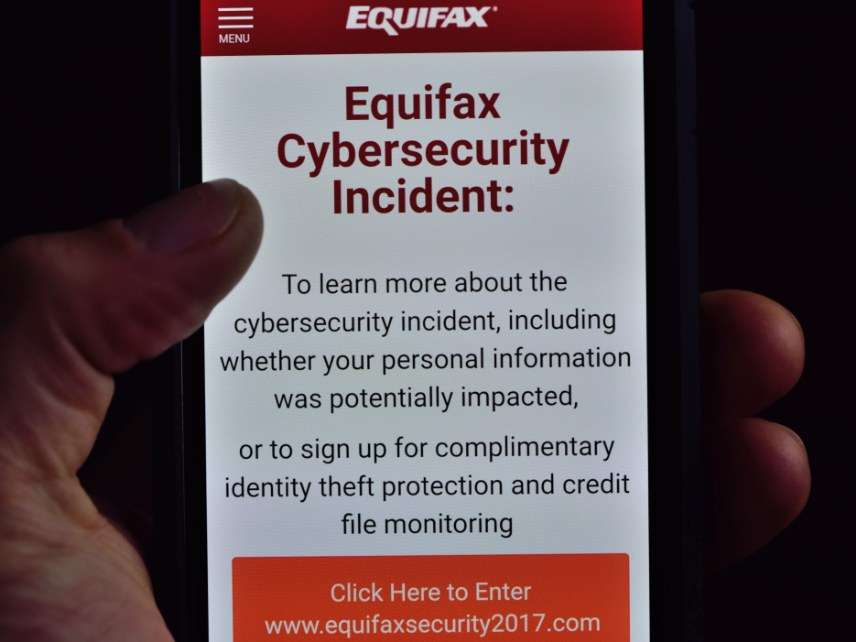

Many people feel that Equifax waited too long to notify affected parties (but Equifax executives made sure to cash out just in time). Even then, Equifax didn't reach out to victims directly; instead, it asked people to visit a sketchy domain and enter more PII to determine whether or not they might be affected, as yours truly apparently was. This kind of arrangement primes people to fall for phishing scams. Rather than hosting the notice somewhere clearly associated with Equifax (say, "equifax.com/securityincident2017"), Equifax directed people to a separate domain called equifaxsecurity2017.com. Illustrating the perils of such a poorly thought-out arrangement, Equifax itself promoted a phishing scam in communications to customers, accidentally sending breach victims to a fake notification site called securityequifax2017.com. You just can't make this stuff up.

Equifax clearly screwed up in a major way and should be held accountable. Already, federal regulators tasked with overseeing consumer safety and credit, namely the Federal Trade Commission (FTC) and the Consumer Financial Protection Bureau (CFPB), are hard at work determining how to proceed.

But some feel that this is not enough. Legislators see the Equifax breach as an opportunity to promote data breach notification bills that had trouble getting passed in the past.

Specifically, Rep. Jim Langevin (D-R.I.) is pushing forward a new version of 2015's failed Personal Data Notification and Protection Act (PDNPA). An updated version of the bill is not available on Congress's legislation website, but the earlier version would have required businesses that collect PII on at least 10,000 individuals to notify affected parties within 30 days of a security breach. The bill outlines what information and resources the companies should make available to victims and designates the FTC as the enforcer. There are a few exemptions, such as for incidents whose disclosure would affect ongoing legal investigations or that are determined to pose no reasonable risk of harm to individuals.

In terms of helping consumers pick up the pieces after a corporate hack, this kind of path forward seems reasonable. The sooner that people know they are affected by a hack, the sooner they can start changing the right passwords and flagging the right accounts. Already, companies are governed by a patchwork of 48 different state and territorial data breach reporting rules. These range from fairly broad, as in Alaska's guidance to notify affected parties "without unreasonable delay," to California's relatively specific requirements for what companies must tell victims and when.

Langevin and supporters frame his bill as a boon to corporations affected by this patchwork. He argues that a single, clear federal standard is much preferable for both consumers and companies, which would otherwise have to wade through a thicket of sometimes contradictory or unnecessarily duplicative reporting requirements. In general, federal pre-emption can be a handy tool for preventing excessive or unproductive state licensing and regulatory schemes, but of course, we'd need to compare the final bill text with the status quo to see just how this shakes out. Interestingly, some consumer advocates oppose measures like the PDNPA because they are actually less stringent than some existing state rules.

Supporters of federal data breach notification laws are on shakier ground when it comes to claims about the effect on overall cybersecurity, however. For example, Langevin told Christopher Mims of the Wall Street Journal that he believes his bill "would have had a direct impact in the case of the Equifax hack."

The reasoning is that if firms knew they had to quickly notify consumers of a breach, they'd proactively invest in better cybersecurity up front. It's very embarrassing to publicly admit that you messed up, and companies usually take a big hit in valuation once they break the bad news.

There's good reason to be skeptical of this claim. First of all, as Langevin himself admits, there already exists a wide array of strong data breach notification laws at the state level. It's possible that a federal-level law would have more of an impact, but his other arguments seem to contradict the claim that the PDNPA would notably improve our cybersecurity position.

More importantly, this kind of legislation could just as easily have the opposite effect of discouraging companies from investing in security. Depending on the final wording of the bill, companies could make the cynical calculation to simply not invest in appropriate monitoring techniques. If they don't know about a breach, they can't be compelled to report it. Think of it as a "Schrödinger's hack" conundrum. It sounds silly, but regulations can sometimes make people behave in silly ways.

The quest to more closely align an organization's financial incentives with its incentives to take cybersecurity seriously is an admirable one. There is still a large gap between executive priorities and those of the information security underlings whose dire warnings too often go ignored. But the problem with top-down regulation is that it can never accurately predict how people will react to its dictates. One unintended consequence begets more regulations, which can create more unintended consequences and more regulations. The kludge builds up, and the underlying problems remain unaddressed.

This has unfortunately been the case with U.S. cybersecurity policy thus far. As I repeatedly emphasize, the federal government has an abysmal track record with its own cybersecurity and is hardly the entity that should be entrusted to singlehandedly solve our nation's security problems.

This does not mean that nothing should be done. On the contrary, there is a lot that the government could do to improve our cybersecurity position. In terms of better aligning financial incentives with security, the federal government should instead take a serious look at how to better encourage the development of a cybersecurity insurance market. And there are a lot of bad things that the government could stop doing as well. For instance, the National Security Agency and other intelligence bodies should stop hoarding security vulnerabilities that end up getting leaked and weaponized against U.S. organizations. Strong encryption should be supported, not undermined. And counterproductive laws like the Computer Fraud and Abuse Act that penalize security research should be reformed or scrapped.

In general, our politicians need to stop applying short-term Band-Aids to problems that require long-term solutions. It may not be sexy, but our security position depends on it.

Editor's Note: As of February 29, 2024, commenting privileges on reason.com posts are limited to Reason Plus subscribers. Past commenters are grandfathered in for a temporary period. Your Reason Plus subscription also gives you an ad-free version of reason.com, along with full access to the digital edition and archives of Reason magazine. We request that comments be civil and on-topic. We do not moderate or assume any responsibility for comments, which are owned by the readers who post them. Comments do not represent the views of reason.com or Reason Foundation. We reserve the right to delete any comment and ban commenters for any reason at any time. Comments may only be edited within 5 minutes of posting.


A credit bureau should be liable for damages if their report contains false information about you. That's a pretty strong incentive to improve security.

I can see how that might improve the accuracy of the info in the report, but how would that improve security?


As others have already pointed out, Equifax is going to handle this issue the way corporate nuclear mishaps are always handled - they'll change their name. Probably to something that directly addresses security concerns like SecuReport.

Congress will of course form an Oversight of High-Security Handling of Information Technology panel to maximize political gains from the breach.

Wouldn't HIPAA be a good model for legislation? I work in the medical business, and the phrase "HIPAA violation" makes people cringe. (It has a large notification element, plus fines.) I know that it's changed how we do things to make them more secure.

My big question is: why is "Identity Theft" even a thing?

Someone goes into a bank and borrows a bunch of money. The bank does a crap job figuring out who this guy is and later realizes they have no idea who it actually was and now can't get repaid. This should be a problem for the bank. They got defrauded.

Since I was not involved in this transaction, this shouldn't actually concern me at all. But for some reason the bank got the legal authority to claim that no, this is actually MY problem, and that I'm responsible for cleaning up their mess for them.

This is precisely right. This problem would be solved pronto if the law said that a consumer isn't responsible for a debt, and the bank cannot report a debt to a credit bureau, unless the bank can prove the consumer actually took out the debt. And the mere fact that a person can present an SS number isn't sufficient. Let people sue banks for false reporting. With that rule in place, banks would come up with systems to verify identities (or be forced to write-off lots of noncollectable debt and damages if they decided it was too expensive to develop/use those systems).

Great idea. Require all barn owners to post a large sign saying "The horse has left the barn"

How about companies cannot use data about me as a product without my full and specific agreement, and compensation for each use of that data? If a silly singer can get money every time someone hums his tune, why don't I get paid every time someone looks at my personal and financial data? If useless legislation is required, how about creating a presumption of negligence when data is leaked, with a base loss of one million dollars per individual affected?

OPM already let the Chinese have all my data, so WTF do I have to worry about from Equifax?

As a security expert in the field for over 16 years, I can tell you that more laws will only create more jobs and will not have an effect on increasing security. HIPAA has generated a cottage industry of businesses and consultants that must be hired to comply. However, we have not seen a significant drop in health records breaches as a result of that law.

Removal of forced arbitration in contracts with companies, which you do not even know you are doing business with, and no ceiling on damages - to be directed at the affected, not a government agency - is a better carrot and stick approach.

The regulations around disclosures and breaches are only one part of HIPAA. I work in healthcare IT and investigate these incidents weekly. Most of the time, they occur because of human error. When companies take the Security Rule seriously and actually put the appropriate safeguards in place, they work, but you can only remove the "human touch" so much. As long as it is up to a person to push the keys on the keyboard, put the PHI/PII in the mail, or be responsible for handling the sensitive information in any way, there will be breaches. If companies and individuals were truly held accountable for their mistakes, though, they would work harder to reduce them.

Strict liability. Risk the existence of the entire company if you screw up this big.

I still have a question: how would this improve security? What it can do is improve the accuracy of the information.