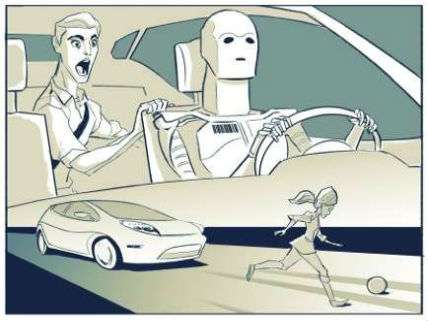

Would You Buy A Self-Driving Car That Would Kill You To Save Others?

The problem of programming ethics into autonomous vehicles

Three researchers are reporting the results of three surveys finding that most people are utilitarians when it comes to installing ethics in self-driving cars. Most of those surveyed believe that cars should be programmed to sacrifice their occupants if doing so would save more lives in situations where harm is unavoidable. This is a version of the famous "trolley problem," in which an onlooker can flip a switch to divert an out-of-control trolley car so that it runs over only one person rather than ten. Faced with that problem, most people say that they would divert the trolley, killing one in order to save ten. In other words, the greatest good for the greatest number.

But in the case of self-driving cars, the researchers ask another question: What happens when there is no onlooker, and the one who gets sacrificed to save ten strangers is you, the passenger in the car? Should cars be programmed to sacrifice you? In fact, most respondents agreed that self-driving cars should be programmed that way, but a significant portion believes that manufacturers will more likely program them to save their passengers no matter the cost.

Would respondents buy cars that they know are programmed to make utilitarian calculations that could sacrifice them? The researchers gave respondents three options: self-sacrifice (swerve), random, or protect the passenger (stay). Taken together, most said that other people should buy self-sacrificing cars, but that they themselves would prefer to own self-driving cars programmed to protect passengers or to make a random choice between self-sacrifice and protection.

Ultimately, the researchers reported:

Three surveys suggested that respondents might be prepared for autonomous vehicles programmed to make utilitarian moral decisions in situations of unavoidable harm. This was even true, to some extent, of situations in which the AV [autonomous vehicle] could sacrifice its owner in order to save the lives of other individuals on the road. Respondents praised the moral value of such a sacrifice the same, whether human or machine made the decision.

Although they were generally unwilling to see self-sacrifices enforced by law, they were more prepared for such legal enforcement if it applied to AVs, than if it applied to humans. Several reasons may underlie this effect: unlike humans, computers can be expected to dispassionately make utilitarian calculations in an instant; computers, unlike humans, can be expected to unerringly comply with the law, rendering moot the thorny issue of punishing non-compliers; and finally, a law requiring people to kill themselves would raise considerable ethical challenges.

Even in the absence of legal enforcement, most respondents agreed that AVs should be programmed for utilitarian self-sacrifice, and to pursue the greater good rather than protect their own passenger. However, they were not as confident that AVs would be programmed that way in reality—and for a good reason: They actually wished others to cruise in utilitarian AVs, more than they wanted to buy utilitarian AVs themselves. What we observe here is the classic signature of a social dilemma: People mostly agree on what should be done for the greater good of everyone, but it is in everybody's self-interest not to do it themselves. This is both a challenge and an opportunity for manufacturers or regulatory agencies wishing to push for utilitarian AVs: even though self-interest may initially work against such AVs, social norms may soon be formed that strongly favor their adoption.

Driver error is responsible for about 94 percent of traffic accidents. Consequently, if the widespread adoption of self-driving cars dramatically reduces road carnage, programming cars to make utilitarian ethical choices might be a trade-off that most of us would be willing to make.
