The End of Doom

Good news! Dire predictions about cancer epidemics, mass extinction, overpopulation, and more turned out to be a bust.

"The human predicament is driven by overpopulation, overconsumption of natural resources, and the use of unnecessarily environmentally damaging technologies and socio-economic-political arrangements to service Homo sapiens' aggregate consumption," declared notorious doomster Paul Ehrlich and his biologist wife Anne Ehrlich in the March 2013 Proceedings of the Royal Society B. They additionally warned, "Another possible threat to the continuation of civilization is global toxification," which has "expos[ed] the human population to myriad subtle poisons."

In 1968, Ehrlich infamously prophesied in The Population Bomb, "The battle to feed all of humanity is over. In the 1970s the world will undergo famines—hundreds of millions of people are going to starve to death in spite of any crash programs embarked upon now." Ehrlich was wrong then, and he and his wife are wrong now. The world is not going to be overpopulated, run out of resources, or see the outbreak of massive cancer epidemics due to exposures to synthetic chemicals. Let's take a close look at five threats that failed to materialize, despite the warnings of 20th century doomsayers.

The Cancer Epidemic

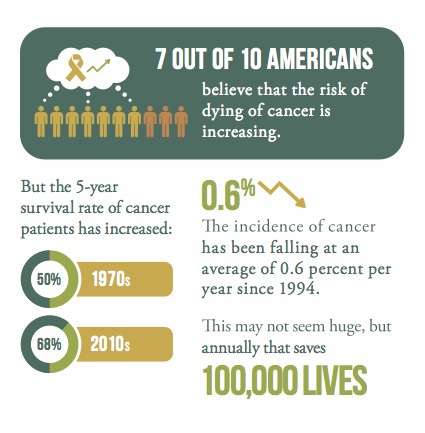

In 2007, an American Cancer Society poll found that 7 out of 10 Americans believed that the risk of dying of cancer is going up. And no wonder, with authorities such as the prestigious President's Cancer Panel ominously reporting that "with nearly 80,000 chemicals on the market in the United States, many of which are used by millions of Americans in their daily lives and are un- or understudied and largely unregulated, exposure to potential environmental carcinogens is widespread." One member of that panel, Howard University surgeon Dr. LaSalle D. Leffall Jr., went so far as to declare in 2010 that "the increasing number of known or suspected environmental carcinogens compels us to action, even though we may currently lack irrefutable proof of harm."

There's just one problem with the panic: There is no growing cancer epidemic. Even as the number of man-made chemicals has proliferated, your chances of dying of the disease have been dropping for more than four decades. And not only have cancer death rates been declining significantly, age-adjusted cancer incidence rates have also been falling for nearly two decades. That is, in nearly every age group, a smaller share of Americans is actually coming down with cancer. What's more, modern medicine has increased the five-year survival rates of cancer patients from 50 percent in the 1970s to 68 percent today.

In fact, the overall incidence of cancer has been falling about 0.6 percent per year since 1994. That may not sound like much, but as Dr. John Seffrin, CEO of the American Cancer Society, explains, "Because the rate continues to drop, it means that in recent years, about 100,000 people each year who would have died had cancer rates not declined are living to celebrate another birthday."

What's going on? According to the Centers for Disease Control and Prevention, age-adjusted cancer incidence rates have been dropping largely because fewer Americans are smoking, more are having colonoscopies in which polyps that might become cancerous are removed, and in the early 2000s many women stopped hormone replacement therapy (which moderately increases the risk of breast cancer).

How did it come to be the conventional wisdom that man-made chemicals are especially toxic and the chief sources of a modern cancer epidemic? It all began with Rachel Carson, the author of the 1962 book Silent Spring.

In Silent Spring, Carson crafted an ardent denunciation of modern technology, hostility to which drives environmentalist ideology to this day. At its heart is this belief: Nature is beneficent, stable, and even a source of moral good; humanity is arrogant, heedless, and often the source of moral evil. Carson, more than any other person, is responsible for the politicization of science that afflicts our contemporary public policy debates.

Rachel Carson surely must have known that cancer is a disease in which the risk goes up as people age. And thanks to vaccines and new antibiotics, Americans in the 1950s were living much longer, long enough to get and die of cancer. In 1900 average life expectancy was 47, and the annual death rate was 1,700 out of 100,000 Americans. By 1960, life expectancy had risen to nearly 70 years, and the annual death rate had fallen to 950 per 100,000 people. Currently, life expectancy is more than 78 years, and the annual death rate is 790 per 100,000 people. Today, although only about 13 percent of Americans are over age 65, they account for 53 percent of new cancer diagnoses and 69 percent of cancer deaths.

Carson realized that even if people didn't worry much about their own health as they aged, they did really care about that of their kids. So to ratchet up the fear factor, she asserted that children were especially vulnerable to the carcinogenic effects of synthetic chemicals. "The situation with respect to children is even more deeply disturbing," she wrote. "A quarter century ago, cancer in children was considered a medical rarity. Today, more American school children die of cancer than from any other disease [her emphasis]." In support of this claim, she reported that "twelve per cent of all deaths in children between the ages of one and fourteen are caused by cancer."

Although it sounds alarming, Carson's statistic is essentially meaningless out of context, which she failed to supply. It turns out that the percentage of children dying of cancer was rising because other causes of death, such as infectious diseases, were drastically declining. The American Cancer Society reports that about 10,450 children in the United States will be diagnosed with cancer in 2014 and that childhood cancers make up less than 1 percent of all cancers diagnosed each year. Childhood cancer incidence has been rising slowly over the past couple of decades at a rate of 0.6 percent per year. Consequently, the incidence rate increased from 13 per 100,000 in the 1970s to 16 per 100,000 now. There is no known cause for this increase. The good news is that 80 percent of kids with cancer now survive five years or more, up from 50 percent in the 1970s.

So did the predicted cancer doom ever arrive? No. Remember: overall incidence rates are down.

With regard to cancer risks posed by synthetic chemicals, the American Cancer Society in its 2014 Cancer Facts and Figures report concludes: "Exposure to carcinogenic agents in occupational, community, and other settings is thought to account for a relatively small percentage of cancer deaths, about 4 percent from occupational exposures and 2 percent from environmental pollutants (man-made and naturally occurring)."

Similarly, the British organization Cancer Research UK observes that for most people "harmful chemicals and pollution pose a very minor risk." How minor? Cancer Research UK notes, "Large organizations like the World Health Organization and the International Agency for Research into Cancer have estimated that pollution and chemicals in our environment only account for about 3 percent of all cancers. Most of these cases are in people who work in certain industries and are exposed to high levels of chemicals in their jobs." Like the American Cancer Society, Cancer Research UK advises, "Lifestyle factors such as smoking, alcohol, obesity, unhealthy diets, inactivity, and heavy sun exposure account for a much larger proportion of cancers."

Overpopulation

"To ecologists who study animals, food and population often seem like sides of the same coin," wrote Paul and Anne Ehrlich in 1990. "If too many animals are devouring it, the food supply declines; too little food, the supply of animals declines." And then the kicker: "Homo sapiens is no exception to that rule."

The Ehrlichs predicted that if the global climate system remained stable "it might take three decades or more for the food-production system to come apart unless its repair became a top priority of all humanity." They added, "One thing seems safe to predict: starvation and epidemic disease will raise death rates over most of the planet." It's 25 years later and instead of going up, the global crude death rate has dropped from 9.7 per 1,000 people in 1990 to 7.9 now.

More than two decades later, despite a distinct lack of apocalypses in the intervening time, the couple was still singing the same tune. For example, during a May 2013 conference at the University of Vermont, Paul Ehrlich asked, "What are the chances a collapse of civilization can be avoided?" His answer: 10 percent.

Did the Ehrlichs just get the timing wrong? Is the threat of overpopulation still looming over us?

In September 2014, demographers working with the United Nations Population Division published an article in Science arguing that world population would grow to around 11 billion by 2100. Nearly all of that increase—4 billion people—was projected in sub-Saharan Africa.

But Wolfgang Lutz and his fellow demographers at the International Institute for Applied Systems Analysis beg to differ. In their November 2014 study, World Population and Human Capital in the Twenty-First Century, Lutz and his colleagues take into account the fact that the education levels of women are rising fast around the world, including in Africa. "In most societies, particularly during the process of demographic transition, women with more education have fewer children, both because they want fewer and because they find better ways to pursue their goals," they note. Given current age, sex, and education trends, they estimate that world population will most likely peak at 9.6 billion by 2070 and then begin falling. If the boosting of education levels is pursued more aggressively, world population could instead top out at 8.9 billion in 2060 before starting to drop.

Falling fertility rates are overdetermined—that is, there is a plethora of mutually reinforcing data and hypotheses that explain the global downward trend. These include the effects of increased economic opportunities, more education, longer lives, greater liberty, and expanding globalization and trade. The crucial point is that all of these explanations reinforce one another and accelerate the trend of falling global fertility. Even more interestingly, they all emphasize how the opportunities afforded women by modernity produce lower fertility.

Insight into how the life prospects of women shape reproductive outcomes is provided in a 2010 article in Human Nature, "Examining the Relationship Between Life Expectancy, Reproduction, and Educational Attainment." In that study, University of Connecticut anthropologists Nicola Bulled and Richard Sosis divvied up 193 countries into five groups by their average life expectancies. In countries where women can expect to live to between 40 and 50 years, they bear an average of 5.5 children. Those with life expectancies between 51 and 61 average 4.8 children. The big drop in fertility occurs at that point. Bulled and Sosis found that when women's life expectancy rises to between 61 and 71 years, their total fertility drops to 2.5 children; between 71 and 75 years, it's 2.2 children; and over 75 years, women average 1.7 children. The United Nations' 2012 Revision notes that global average life expectancy at birth rose from 47 years in 1955 to 70 years in 2010. These findings suggest that it is more than just coincidence that the average global fertility rate has fallen over that time period from 5 to 2.45 children today.

In any case, it is a mistake to decry people as just consumers of resources. With the advent of democratic capitalism, they are also creators of new technologies, services, and ideas that have over the past two centuries enabled billions to rise from humanity's natural state of abject poverty and pervasive ignorance.

Killer Tomatoes

Given the well-established advantages of modern biotech crops, including such benefits to the natural environment as lessening pesticide use, preventing soil erosion, tolerating drought, and boosting yields, why do many leading environmentalist groups so strenuously oppose them?

Much of that answer is historically contingent. Just as biotech crops were being commercialized in the 1990s, they ran into a perfect storm of food and safety scandals in Europe. In order to protect beef farmers and suppliers, British food safety authorities downplayed the dangers of "mad cow" disease, which was spread by feeding cattle the brains of infected sheep. The agency in charge of French public health permitted human transfusions of HIV-contaminated blood, despite the existence of screening technology. And the asbestos industry was revealed to have exercised undue influence over its regulators in evaluating the risks posed by that toxic mineral.

These incidents of fecklessness "led to strong distrust and caused people to think that firms and public authorities sometimes disregard certain health risks in order to protect certain economic or political interests," argues French National Institute of Agricultural Research analyst Sylvie Bonny. Consequently, the events "increased the public's attention to critical voices, and so the principle of precaution became an omnipresent reference." So when biotech crops were being introduced, much of the European public was primed to take seriously any claims that this new technology might carry hidden risks.

Bonny points out that opposition to biotech crops arose first among "ecologist associations," including Greenpeace and Friends of the Earth. In fact, hyping opposition to biotech crops served as a lifeline to these organizations. Bonny notes that in the late 1990s, Greenpeace in France was experiencing a serious falloff in membership and donations, but the genetically modified organism (GMO) issue rescued the group. "Its anti-GMO action was instrumental in strengthening Greenpeace-France which had been in serious financial straits," she reports. It should always be borne in mind that environmentalist organizations raise money to support themselves by scaring people. More generally, Bonny observes, "For some people, especially many activists, biotechnology also symbolizes the negative aspects of globalization and economic liberalism." She adds, "Since the collapse of the communist ideal has made direct opposition to capitalism more difficult today, it seems to have found new forms of expression including, in particular, criticism of globalization, certain aspects of consumption, technical developments, etc."

These concerns obviously go well beyond any scientific considerations regarding the safety of biotech crops for health and the environment, and have had significant negative consequences for the acceptance of this useful technology around the world, especially in poor countries.

No one has ever gotten so much as a cough, sneeze, sniffle, or stomachache from eating foods made with ingredients from modern biotech crops. Every independent scientific body that has ever evaluated the safety of biotech crops has found them to be safe for humans to eat. "We have reviewed the scientific literature on [genetically engineered] crop safety for the last 10 years that catches the scientific consensus that has matured since GE plants became widely cultivated worldwide, and we can conclude that the scientific research conducted so far has not detected any significant hazard directly connected with the use of GM crops," asserted a team of Italian university researchers in September 2013. And they should know, since they conducted the largest ever survey of scientific studies—more than 1,700—that evaluated the safety of such crops.

A statement issued by the board of directors of the American Association for the Advancement of Science (AAAS), the largest scientific organization in the United States, on October 20, 2012, point-blank asserted that "contrary to popular misconceptions, GM crops are the most extensively tested crops ever added to our food supply. There are occasional claims that feeding GM foods to animals causes aberrations ranging from digestive disorders, to sterility, tumors and premature death. Although such claims are often sensationalized and receive a great deal of media attention, none have stood up to rigorous scientific scrutiny." The AAAS board concluded, "Indeed, the science is quite clear: crop improvement by the modern molecular techniques of biotechnology is safe."

In July 2012, the European Commission's chief scientific adviser, Anne Glover, declared, "There is no substantiated case of any adverse impact on human health, animal health, or environmental health, so that's pretty robust evidence, and I would be confident in saying that there is no more risk in eating GMO food than eating conventionally farmed food."

At its annual meeting in June 2012, the American Medical Association endorsed a report from its Council on Science and Public Health arguing against the labeling of bioengineered foods. The report concluded, "Bioengineered foods have been consumed for close to 20 years, and during that time, no overt consequences on human health have been reported and/or substantiated in the peer reviewed literature." In December 2010, a European Commission review of 130 E.U.-funded biotechnology research projects, covering a period of more than 25 years and involving more than 500 independent research groups, found "no scientific evidence associating GMOs with higher risks for the environment or for food and feed safety than conventional plants and organisms."

Catastrophic Climate Change

Let's start by accepting that global warming is real. There are two ways to address concerns about climate change: adaptation and mitigation. In 2014, the United Nations Intergovernmental Panel on Climate Change (IPCC) issued two reports addressing these options: Climate Change 2014: Impacts, Adaptation, and Vulnerability (hereafter the Adaptation report), which describes adaptation as the "process of adjustment to actual or expected climate and its effects"; and Climate Change 2014: Mitigation (hereafter the Mitigation report), which defines mitigation as "a human intervention to reduce the sources or enhance the sinks of greenhouse gases."

The IPCC reports offer cost estimates for both adaptation and mitigation. The Adaptation report reckons that, assuming that the world takes no steps to deal with climate change, "global annual economic losses for additional temperature increases of around 2°C are between 0.2 and 2.0 percent of income." The report adds, "Losses are more likely than not to be greater, rather than smaller, than this range."

In a 2010 Proceedings of the National Academy of Sciences article, Yale economist William Nordhaus assumed that humanity will do nothing to cut greenhouse gas emissions. Nordhaus used an integrated assessment model that combines the scientific and socioeconomic aspects of climate change to assess policy options for climate change control. His RICE-2010 integrated assessment model found that "of the estimated damages in the uncontrolled (baseline) case, those damages in 2095 are $12 trillion, or 2.8% of global output, for a global temperature increase of 3.4°C above 1900 levels." Nordhaus' estimate evidently assumes that the world's economy will grow at about 2.5 percent annually, reaching a total GDP of roughly $450 trillion in 2095.

What might the world's economy look like by 2100 using various policies with the aim of mitigating or adapting to climate change? In 2012, the IPCC asked the economists in the Environment Directorate at the Organisation for Economic Co-operation and Development to peer into the future and devise a plausible set of shared socioeconomic pathways (SSPs) to the year 2100. The OECD economists came up with five baseline scenarios. Let's take a look at a couple of the scenarios to get some idea of how the world's economy might evolve over the remainder of this century. The OECD analysis begins in 2010 with a world population of 6.8 billion and a total world gross product of $67 trillion (in 2005 dollars). This yields a global per capita income just shy of $10,000. For reference, the OECD notes that U.S. per capita income in 2010 averaged $42,000.

The SSP2 scenario is described as the "middle of the road" projection in which "trends typical of recent decades continue, with some progress towards achieving development goals, reductions in resource and energy intensity at historic rates, and slowly decreasing fossil fuel dependency." If economic and demographic history unfolds as that scenario suggests, world population will have peaked at around 9.6 billion in 2065 and fallen to just over 9 billion by 2100. The world's economy will have grown more than eightfold, from $67 trillion to $577 trillion (2005 dollars). Average income per person globally will have increased from around $10,000 today to $60,000 by 2100. U.S. annual incomes would average just over $100,000.

In the SSP5 "conventional development" scenario, the world economy grows flat out, which "leads to an energy system dominated by fossil fuels, resulting in high [greenhouse gas] emissions and challenges to mitigation." Because there is more urbanization and because there are higher levels of education, world population will peak at 8.6 billion in 2055 and will have fallen to 7.4 billion by 2100. The world's economy will grow 15-fold to just over $1 quadrillion, and the average person in 2100 will be earning about $138,000 per year. U.S. annual incomes would exceed $187,000 per capita. It is of more than passing interest that people living in the warmer world of SSP5 are much better off than people in the cooler SSP2 world.

The OECD analysis adds with regard to climate change in this scenario that the much richer and more highly educated people in 2100 will face "lower socio-environmental challenges to adaptation result[ing] from attainment of human development goals, robust economic growth, highly engineered infrastructure with redundancy to minimize disruptions from extreme events, and highly managed ecosystems." In other words, greater wealth and advanced technologies will significantly enhance people's capabilities to deal with whatever the deleterious consequences of climate change turn out to be.

As noted above, the IPCC estimates that failure to adapt to climate change will reduce future incomes by between 0.2 and 2 percent for temperatures exceeding 2°C. Yale's Nordhaus is one of the more accomplished researchers in the area of trying to calculate the costs and benefits of climate change. In his 2013 book The Climate Casino: Risk, Uncertainty, and Economics for a Warming World, Nordhaus notes that a survey of studies that try to estimate the aggregated damages that climate change might inflict at 2.5°C warming comes in at an average of about 1.5 percent of global output. The highest climate damage estimate Nordhaus cites is a 5 percent reduction in income, though the much-criticized 2006 Stern Review: The Economics of Climate Change suggested that the business-as-usual path of economic growth and greenhouse gas emissions could reduce future incomes by as much as 20 percent.

Future temperatures will perhaps exceed these, but transient climate response temperatures over the remainder of the century are likely to be close to the 2.5°C benchmark cited by the IPCC. In the scenarios sketched out above, a 2 percent loss of income would mean that the $60,000 and $138,000 per capita income averages would fall to $58,800 and $135,240, respectively. Stern's more apocalyptic estimate would cut 2100 per capita incomes to $48,000 and $110,400, respectively.
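The arithmetic behind those four figures is simple enough to check by hand, but a short Python sketch makes it explicit. It assumes, as the paragraph above does, that each loss is applied as a flat percentage of the projected 2100 per capita income; the function name and dictionary labels are illustrative only and do not come from the IPCC, the OECD, or the Stern Review.

```python
def income_after_loss(income: float, loss_fraction: float) -> float:
    """Return per capita income after a flat proportional loss."""
    return income * (1.0 - loss_fraction)


# Projected 2100 per capita incomes quoted in the article (2005 dollars).
scenarios = {"SSP2": 60_000, "SSP5": 138_000}

# Loss fractions quoted in the article: the top of the IPCC range
# and the Stern Review's high-end estimate.
losses = {"IPCC upper bound": 0.02, "Stern Review": 0.20}

# Apply every loss to every scenario, rounding to whole dollars.
results = {
    (scenario, label): round(income_after_loss(income, loss))
    for scenario, income in scenarios.items()
    for label, loss in losses.items()
}

for (scenario, label), value in results.items():
    print(f"{scenario}, {label}: ${value:,}")
```

Even under the Stern figure, the poorer of the two scenarios leaves the average person in 2100 with nearly five times today's roughly $10,000.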

So how much should people living now on incomes averaging $10,000 per year spend to make sure that people whose incomes will likely be six to 14 times higher aren't reduced by a couple of percentage points? As Nordhaus observes, "Most philosophers and economists hold that rich generations have a lower ethical claim on resources than poorer generations."

Making the extreme set of assumptions that all countries of the world begin mitigation immediately and adopt a single global carbon price, and widely deploy current versions of low- and no-carbon technologies such as wind, solar, and nuclear power, the IPCC's 2014 Mitigation report estimates that keeping carbon dioxide concentrations below 450 parts per million (ppm) in 2100 would result in "an annualized reduction of consumption growth by 0.04 to 0.14 percentage points over the century relative to annualized consumption growth in the baseline that is between 1.6 percent and 3 percent per year." The median estimate of the reduction in annualized consumption growth is 0.06 percentage points.

The IPCC Mitigation report notes that the optimal scenario that it sketches out for keeping greenhouse gas concentrations below 450 ppm would cut incomes in 2100 by between 3 and 11 percent. How much would that be? As was done with regard to the losses from a lack of adaptation, let's look at how much the worst-case mitigation scenario might reduce future incomes. Without extra mitigation, the increase of global gross product to $577 trillion in the middle-of-the-road scenario implies an economic growth rate of 2.42 percent per year between 2010 and 2100. Cutting that growth rate by 0.14 percentage points to 2.28 percent yields an income of $510 trillion in 2100, reducing per capita incomes from $60,000 to $57,000. Growth in the conventional-development scenario is cut from an implied 3.07 percent to 2.93 percent, reducing overall income from over $1.015 quadrillion to $901 trillion, and cutting average incomes from $138,000 to $122,000.
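For readers who want to retrace the compounding, here is a minimal Python sketch of that calculation. It assumes a constant annual growth rate over the 90 years from 2010 to 2100 and rounds the implied rate to two decimal places of a percent before subtracting the 0.14 points, since that is how the figures above appear to have been derived. The helper names are illustrative, and the $1,015 trillion endpoint for the conventional-development scenario is taken from the "over $1.015 quadrillion" figure in the text.

```python
def implied_growth_rate(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> float:
    """Compound `start` forward at a constant annual `rate` for `years`."""
    return start * (1.0 + rate) ** years


YEARS = 2100 - 2010      # 90 years of growth
START = 67.0             # world gross product in 2010, trillions of 2005 dollars
CUT = 0.0014             # 0.14 percentage points, the top of the IPCC range

# Baseline 2100 world gross product in each scenario, trillions of 2005 dollars.
baselines = {"SSP2": 577.0, "SSP5": 1015.0}

mitigated = {}
for name, end in baselines.items():
    # Round to 4 decimals, i.e. to hundredths of a percent (assumption:
    # this mirrors the rounding used in the article's own figures).
    rate = round(implied_growth_rate(START, end, YEARS), 4)
    mitigated[name] = project(START, rate - CUT, YEARS)
    print(f"{name}: {rate:.2%} baseline growth, "
          f"${mitigated[name]:,.0f} trillion in 2100 with mitigation")
```

Skipping the rounding step shifts the results by a few trillion dollars, which is a reminder of how sensitive 90-year projections are to small changes in the assumed growth rate.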

All of these figures must be taken with a vat of salt since they are projections for economic, demographic, and biophysical events nearly a century from now. That being acknowledged, projected IPCC income losses that would result from doing nothing to adapt to climate change appear to be roughly comparable to the losses in income that would occur following efforts to slow climate change. In other words, it appears that doing nothing about climate change now will cost future generations about the same as doing something now.

If the results of adaptation and mitigation are more or less the same for future generations, how to pick which path to follow? Given what we know about the competence and efficiency of government, it seems likely that what governments try to do to mitigate climate change may well turn out to be worse than climate change. The better path is one that helps future generations deal with climate change by adopting policies that encourage rapid economic growth. This would endow future generations with the wealth and superior technologies necessary to handle whatever comes at them, including climate change.

Mass Extinction

Many biologists and conservationists are urgently warning that humanity is on the verge of wiping out hundreds of thousands of species in this century. "A large fraction of both terrestrial and freshwater species faces increased extinction risk under projected climate change during and beyond the 21st century," states the 2014 IPCC Adaptation report. "Current rates of extinction are about 1000 times the likely background rate of extinction," asserts a May 2014 review article in Science by Duke University biologist Stuart Pimm and his colleagues. "Scientists estimate we're now losing species at 1,000 to 10,000 times the background rate, with literally dozens going extinct every day," warns the Center for Biological Diversity. That group adds, "It could be a scary future indeed, with as many as 30 to 50 percent of all species possibly heading toward extinction by mid-century."

Eminent Harvard University biologist E.O. Wilson agrees. "We're destroying the rest of life in one century. We'll be down to half the species of plants and animals by the end of the century if we keep at this rate." University of California at Berkeley biologist Anthony Barnosky similarly notes, "It looks like modern extinction rates resemble mass extinction rates." Assuming that species loss continues unabated, Barnosky adds, "The sixth mass extinction could arrive within as little as three to 22 centuries."

Let's assume 5 million species. If Wilson is right that half could be gone by the middle of this century, that implies that species are disappearing at a rate of 71,000 per year, or just under 200 per day. Contrast this implied extinction rate with Pimm and his colleagues, who estimate that the background rate of extinction without human influence is about 0.1 species per million species years. This means that if one followed the fates of one million species, one would expect to observe about one species going extinct every 10 years. Their new estimate is 100 species going extinct per million species years. So if the world contains 5 million species, then that suggests that 500 are going extinct every year. Obviously, there is a huge gap between Wilson's off-the-cuff estimate and Pimm's more cautious calculations, though both assessments are troubling.
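The gap between the two estimates is easier to see when the arithmetic is laid out. The Python sketch below assumes 5 million species, as above, and a 35-year horizon to mid-century; that horizon is not stated in the text but is what the 71,000-per-year figure appears to imply. The variable and function names are illustrative.

```python
SPECIES = 5_000_000
YEARS_TO_HALF = 35  # assumption: roughly the span from now to mid-century

# Implied rate if half of all species were lost over that horizon.
wilson_per_year = (SPECIES / 2) / YEARS_TO_HALF
wilson_per_day = wilson_per_year / 365


def extinctions_per_year(rate_per_million_species_years: float, species: int) -> float:
    """Expected extinctions per year for a rate given per million species-years."""
    return rate_per_million_species_years * species / 1_000_000


# Background rate of 0.1 applied to one million species.
background = extinctions_per_year(0.1, 1_000_000)
# Current estimate of 100 applied to 5 million species.
current = extinctions_per_year(100, SPECIES)

print(f"Implied by losing half: {wilson_per_year:,.0f} per year, "
      f"{wilson_per_day:.0f} per day")
print(f"Background: one extinction every {1 / background:.0f} years "
      f"per million species")
print(f"Current estimate: {current:.0f} per year")
print(f"Ratio of the two: {wilson_per_year / current:.0f} to 1")
```

On these assumptions the off-the-cuff figure is more than a hundred times the rate implied by Pimm's estimate.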

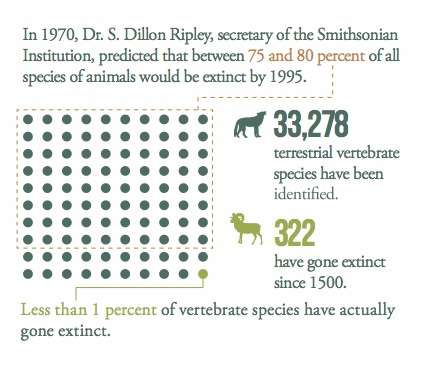

But this is not the first time biologists have sounded the alarm over purportedly accelerated species extinctions. In 1970, Dr. S. Dillon Ripley, secretary of the Smithsonian Institution, predicted that in 25 years, somewhere between 75 and 80 percent of all the species of living animals would be extinct. That is, as many as four out of every five species of animals would be extinct by 1995. Happily, that did not happen. In 1994, biologist Peter Raven predicted that "since more than nine-tenths of the original tropical rainforests will be removed in most areas within the next thirty years or so, it is expected that half of the organisms in these areas will vanish with it." It's now more than 20 years later and nowhere near 90 percent of the rainforests have been cut down, and no one thinks that half of the species inhabiting tropical forests have vanished.

In 1979, Oxford University biologist Norman Myers suggested in his book The Sinking Ark that 40,000 species per year were going extinct and that the world could lose a million species, or "one-quarter of all species by the year 2000." At a 1979 symposium at Brigham Young University, Thomas Lovejoy, a former president of the H. John Heinz III Center for Science, Economics, and the Environment, announced that he had made "an estimate of extinctions that will take place between now and the end of the century. Attempting to be conservative wherever possible, I still came up with a reduction of global diversity between one-seventh and one-fifth." Lovejoy drew up the first projections of global extinction rates for the Global 2000 Report to the President in 1980. If Lovejoy had been right, between 15 and 20 percent of all species alive in 1980 would be extinct right now. No one believes that extinctions of this magnitude have occurred over the last three decades.

What did happen? As of 2013, the International Union for the Conservation of Nature (IUCN) lists 709 known species as having gone extinct since 1500. A study published in Science in July 2014 reported that among terrestrial vertebrates, 322 species have become extinct since 1500. That's not nothing, but those assessments amount to just 1 percent of all vertebrate terrestrial species alive today.

Don't Panic

Why does it matter if the population at large believes these dire predictions about humanity's future? The primary danger is that they may fuel a kind of pathological conservatism that could actually become a self-fulfilling prophecy.

The closest thing to a canonical version of the "precautionary principle" was devised by a group of 32 leading environmental activists meeting in 1998 at the Wingspread Center in Wisconsin. The Wingspread Consensus Statement on the Precautionary Principle reads: "When an activity raises threats of harm to human health or the environment, precautionary measures should be taken even if some cause and effect relationships are not fully established scientifically. In this context the proponent of an activity, rather than the public, should bear the burden of proof. The process of applying the Precautionary Principle must be open, informed and democratic and must include potentially affected parties. It must also involve an examination of the full range of alternatives, including no action."

Why was this new principle needed? Because, the Wingspread conferees asserted, the deployment of modern technologies was spawning "unintended consequences affecting human health and the environment," and "existing environmental regulations and other decisions, particularly those based on risk assessment, have failed to protect adequately human health and the environment."

As a result of these unintended side effects and the supposed regulatory inadequacy, the conferees insisted, "Corporations, government entities, organizations, communities, scientists and other individuals must adopt a precautionary approach to all human endeavors" (emphasis added). Contemplate for a moment this question: Are there any human endeavors at all that some timorous person could not assert raise a "threat" of harm to human health or the environment?

Promoters of the precautionary principle argue that its great advantage is that implementing it will help avoid deleterious unintended consequences of new technologies. Unfortunately, supporters are most often focusing on the seen while ignoring the unseen. In his brilliant essay "What Is Seen and What Is Unseen," 19th century French economist Frederic Bastiat pointed out that the favorable predictable effects of any policy often produce many disastrous later consequences.

Banning nuclear power plants reduces the imagined seen risk of exposure to radiation while boosting the unseen risks associated with man-made global warming. Prohibiting a pesticide aims to diminish the seen risk of cancer, but elevates the unseen risk of malaria. Demanding more drug trials seeks to prevent the seen risks of toxic side effects, but increases the unseen risks of disability and death stemming from delays in getting effective drugs to patients. Mandating the production of biofuels attempts to address the seen risks of dependence on foreign oil, but heightens the unseen risks of starvation.

Electricity, automobiles, antibiotics, oil production, computers, plastics, vaccinations, chlorination, mining, pesticides, paper manufacture, and nearly everything that constitutes the vast enterprise of modern technology all have risks. On the other hand, it should be perfectly obvious that allowing inventors and entrepreneurs to take those risks has enormously lessened others. How do we know? People in modern societies are enjoying much longer and healthier lives than did our ancestors, with greatly reduced risks of disease, debility, and early death.

The precautionary principle is the opposite of the scientific process of trial and error that is the modern engine of knowledge and prosperity. The precautionary principle impossibly demands trials without errors, successes without failures.

"The direct implication of trial without error is obvious: If you can do nothing without knowing first how it will turn out, you cannot do anything at all," explained Aaron Wildavsky in his 1988 book Searching for Safety. "An indirect implication of trial without error is that if trying new things is made more costly, there will be fewer departures from past practice; this very lack of change may itself be dangerous in forgoing chances to reduce existing hazards." Wildavsky added, "Existing hazards will continue to cause harm if we fail to reduce them by taking advantage of the opportunity to benefit from repeated trials."

Should we look before we leap? Sure we should. But every utterance of proverbial wisdom has its counterpart, reflecting both the complexity and the variety of life's situations and the foolishness involved in applying a short list of hard rules to them. Given the manifold challenges of poverty and environmental renewal that technological progress can help us address in this century, the wiser maxim to heed is "He who hesitates is lost."

This article originally appeared in print under the headline "The End of Doom."

I would but i dont want to contribute to AGW, and mass extinction

boobies.

Great.

And the fact that I'm taxed, regulated, debased over 50% of my labor? The fact that we've had 100 years of massive misallocation of resources? That market signals/forces are so damaged that people don't know up from down? That the whole economy is one big bubble on the cusp of bursting?

All the rest of the bullshit "problems" in the article are simply this era's worries of the superstitious. But REAL problems - war, tyranny, pestilence as a product of war, hunger, supply chain breakdowns, refugees, pogroms, death camps, death squads, these things are REAL. They are a burst bubble economy away from our reality. So let's concentrate on that, shall we?

If any of the stuff you mention were real, don't you think our Top.Men would be all over it?

The real threat is Global Warming, which will fall on us any minute now. Obama said "everyone agrees", so it must be true.

But in all seriousness, I thought I'd read more articles on Reason about the debt, regulation, free markets, the FED, etc., but they seem more interested in writing about nonsense clock stories that will have little or no impact when the big bubble bursts.

Mises still does a good article most of the time.

Three centuries ago or thereabouts, humanity began to pull itself out of serfdom, poverty, and misery. Throughout that entire time period there have been groups so stupid, so servile and petty that they have done all they could to stop this advancement of the individual.

The end result of progressive ideology is turning citizens back into serfs.

The Singularity will save us all.

BS. It all ends in zombie apocalypse. Everyone sees it coming.

Haha, yeah, the atheist heaven. They got it all now, just like all the other silly religions.

" Given the manifold challenges of poverty and environmental renewal that technological progress can help us address in this century, the wiser maxim to heed is "He who hesitates is lost.""

That's a rather odd way to end a longish piece that was more about various models of future events than anything else. Since the days of Ehrlich's prediction, technology has progressed to the point where more food is produced than at any other time in history. But at the same time, there are more people suffering malnutrition, about a billion. Technological progress helps us to grow more food certainly. But it doesn't appear to help so much in alleviating these challenges of poverty, putting food into a billion hungry stomachs.

Actually, that's not true. Fewer people are suffering from malnutrition and starvation than ever before in human history. Look it up.

"Fewer people are suffering from malnutrition and starvation than ever before in human history."

Not according to the FAO, the section within the UN which measures such things. They say it's around 15% of the world's population, mostly the very young. One of the worst countries for malnutrition is India, a model of neo-liberal economic and technological development. All this Indian progress takes place in front of a backdrop of countries like Myanmar, Vietnam and Zimbabwe improving their food situation.

You mean a country that endured 40 years of Democratic socialism, one whose transportation networks are run by a sclerotic civil service, with the incredible result that when, a few years ago, they outlawed exports of crops, vast harvests of rice rotted waiting for trains that never came?

Yessss.... the one thing India has is a surfeit of free markets.

It's either profound ignorance or absolute mendacity to claim India is an example of 'neoliberal' economic policies.

"It's either profound ignorance or absolute mendacity"

You underestimate my evil. It is both.

"the one thing India has is a surfeit of free markets"

The neo-liberal reforms of India were instituted some 25 years ago. It's been widely covered in the news media. Indian GDP growth has been pushing 10% in recent years, and has more than tripled since 1990.

And then for a half century prior to that, they were basically socialists. And there was no growth, and the hunger issues were worse. Capitalism is failing because it's not succeeding fast enough. Let's go back to...a regulated welfare state with no wealth to redistribute in the first place...

"Capitalism is failing..."

Who said anything about capitalism failing in India? It's probably thriving now as it was during the glory days of the East India Company. The problem I've been discussing here is malnourished children. There are scads of them. Capitalism is doing fine.

In India? Maybe in a couple of states, but the rest of the country is still a bureaucratic nightmare, almost impossible to do business in.

"Maybe in a couple of states"

Maybe a couple of states is all you need. Tripling an economy of over a billion people over a generation is something pretty rare these days.

Countries with a population over a billion are pretty rare. There are 2. And both of them have become more capitalistic while still retaining their heavy bureaucracy. When you go from dirt farming communism to allowing some "free" market activity, you're going to triple etc. your economy. But if most people aren't allowed to participate in the market economy, like in India, you'll still have huge pockets of poverty.

"But if most people aren't allowed to participate in the market economy"

I think the number of people in India the government prevents from buying food is vanishingly small. Are you seriously telling me that it's 'most people?'

There are more market participants than just consumers. If the producers, distributors, etc. are tightly controlled by the government then it doesn't matter how "free" the consumers are, at least as regards the effectiveness of the market.

I was discussing India. Do you have anything to offer other than these platitudes?

You are a lying piece of shit. I don't know what your measurement of 'malnutrition' is. Based on the UN's figures, world hunger has only continued to decline and sits only a few hundred million shy of the figure you just mentioned.

World Food Summit target. The target set at the 1996 World Food Summit was to halve the number of undernourished people by 2015 from their number in 1990-92. Since 1990-92, the number of hungry people in developing regions has fallen by over 200 million, from 991 million to 790.7 million. However the goal is 497 million (1/2 of 994 million), which means that the target will not be reached.

(Source: FAO et al, 2014b pp 8-12)

http://www.worldhunger.org/articles/Learn/world hunger facts 2002.htm

So, I'll just say again - you are a lying sack of shit. But we all already knew that about you.

You've almost certainly got a better handle on the numbers than I do. Fact remains though, and this was my point, that advances in technology and unprecedented surpluses in food have not fed adequately some 10% of the world's population. In fact, India, which has provided the poorer nations with a model for neo-liberal development, lags behind more traditionally hunger-plagued nations like those in the sub-Sahara in alleviating hunger.

Maybe anti-science retards like your butt buddy Tony should stop fighting GMO crops then?

Even Ron will tell you that it is not for lack of GMO, or any other kind of food, that is the cause of a billion malnourished. There is more food today produced than any other period of history. Or pre-history.

I'm sorry, but you're just flat out wrong. See Google.

on one hand, I hear these radio commercials telling me that 1 in 5 Americans are currently going hungry, while at the same time I'm told that America's poor are suffering from an epidemic of obesity

You guys are wasting your time. Trueman simply makes up 'facts' as his 'argument' requires:

mtrueman|5.4.15 @ 12:59AM|#

"[?] What you haven't fathomed is that I'm so morally depraved that my deserved rep here doesn't bother me or interest me in the least. I post for myself; your feelings about me are of no concern.

He is here to see his name on the screen only. Logic, facts, history? Does not matter.

true

Is the situation in India better or worse than it was before? If they have been genuinely liberalizing their economy then you would expect to see improvement not overnight perfection. Not to mention that "neo-liberal" could mean just about anything coming from you.

"If they have been genuinely liberalizing their economy"

IF? You're not aware of India's economic reforms over the past 25 years but you're still willing to spout off about them. Where is your humility?

It does help, but as a second order effect.

The primary 'cause' of poverty is the state; it diverts production that would increase standards of living towards predatory ends, it stifles production that displeases the elites (either by threatening their aesthetic senses or the rents they extract from the masses).

Technological developments can, on occasion, weaken the state. The invention of semiconductors on the whole has benefited the masses much more than the government officials.

But in the end, nation states, being social constructs, are not going to be done away with by technological developments for the foreseeable future, and as long as they have access to guns, communications gear, and tax revenues, will be impoverishing and grinding the poor for the foreseeable future.

Given the utter shit that econometric and climatological models are, I give their predictions about the same weight I give the crazy nutjob who used to hang out in Harvard Square warning passers-by that Reagan was planning on using nuclear weapons to rid the US of its black population. He had a model too. It was well tuned by hindcasting - explaining the assassinations of the Kennedy brothers and MLK as well as the Vietnam war.

His model was better than the IPCC ones: its predictions were about as reliable, but it was developed on a much lower budget; about $640 for Irish Rose whiskey.

love this

"Technological developments can, on occasion, weaken the state. The invention of semiconductors on the whole has benefited the masses much more than the government officials."

Maybe on occasion they do, but this is exceptional. I've mentioned before that technological developments go hand in hand with intensified hierarchy, bureaucratization, and division of labour which the state thrives on.

I think that the state typically has an interest in seeing its subjects happy and well fed. I'm not sure we can blame the state for the world's billion malnourished. Arguably the world's most repressive state, North Korea, has seen improvements in their food situation over the past few years.

Rather than blaming the state, I'd look to a system which rewards wasting food. Food production has never been higher but more food now is wasted than ever before. That's more of a market decision than one coming from any government.

Sorry but that's just wrong. Improved technology typically goes hand-in-hand with more overall economic efficiency, increased standards of living, and more personal freedom for everyone at all income levels.

I do admit, though, that you do frequently say wrong things.

"Sorry but that's just wrong."

You say it's wrong, but I don't see you disagreeing with me.

Sorry, but it's still wrong.

In many places, governments prevent people from engaging the market for food. That's a government failure, not a market failure.

Technology does not require hierarchy or bureaucracy. See reality.

You might as well say that biological diversity and complex ecosystems require God. Perhaps, if you're very close-minded.

"In many places, governments prevent people from engaging the market for food."

You're still not disagreeing with me. Even here:

"Technology does not require hierarchy or bureaucracy"

No requirement, but they tend to go hand in hand. Those drones at the NSA surveilling us don't organize themselves, you know.

Yes, I am.

The state doesn't thrive on the hierarchy, bureaucracy, and division of labor created by technology.

The state creates hierarchy and bureaucracy, and incorporates technology to accomplish its goals.

NSA surveillance drones didn't bring about a hierarchical NSA or the concept of surveilling the peaceful, civilian public, either. We actually had those already, you know.

"The state doesn't thrive on the hierarchy, bureaucracy, and division of labor created by technology."

Do you think the state would thrive better without hierarchy etc? Make your case. I promise to read whatever you put up here, provided it's not too long.

"NSA surveillance drones didn't bring about a hierarchical NSA"

I see you are still confused about cause and effect.

He's not saying that you fucking retard. He's saying that technology didn't cause the hierarchy.

Science damnit you're stupid.

Sorry, but I don't see any rebuttal to what I've said.

The state sets up hierarchy. I assume it's because it thinks it will thrive better that way, since it does that. However, since I care more about people than bureaucrats, I don't really care very much about that.

If you think that the NSA came about because of drone technology, then I definitely see how you would think I have cause and effect confused.

Can you please tell me which hierarchy the NSA drones created? Because, if the NSA drones are just an example of a technology being used by the hierarchical state, then you're just making my case for me: states create hierarchies and use technology, not the other way around.

The drones call on their owners to employ people to operate and monitor them. They are too busy with the burdens of owning them to do any of the work. Those people who operate them call for supervisors and managers to organize their work. The supervisors and managers call for yet more people to monitor their work. Pretty soon you've got a genuine bureaucracy going. If you have an idea to use unhierarchical drones, lay it out here.

A boy buys a drone and plays with it.

He decides to create a map of his neighborhood and share it with his friends.

I think we've identified the problem: your lack of imagination.

Your story assumes that the drone itself creates operators and monitors and supervisors and managers. This is confusion of cause and effect.

The NSA exists. It wants to establish surveillance over lots of geography and tons of information. It delegates these tasks and creates a hierarchy, with managers, supervisors, etc.

Some managers and supervisors estimate that they can do their jobs better with drones, and use drones to collect information. This hierarchy was not caused by the drone.

They decide to hire more people and expand, and perhaps create a few managers. But the desire to create the hierarchy is purely a management function of the pre-existing hierarchy. It's not caused by the drone. The drone is inert. The drone can't do anything. The drone can't "call" on its owners, ever.

You're engaging in Luddite anthropomorphism.

"Your story assumes that the drone itself creates operators and monitors and supervisors and managers."

No, my story assumes that those who own the drones want to use them effectively. This calls for the creation of the hierarchy. Do you really not get that?

Drones are mechanical equipment for surveillance. I don't mean to imply that the drones created operators etc.

"This hierarchy was not caused by the drone."

Without the drones, that hierarchy wouldn't exist. All those people would be employed examining satellite photos and whatever else NSA employees get up to.

"The drone can't "call" on its owners, ever."

It's called a figure of speech. I had assumed you wouldn't need to have that explained to you. You are becoming tedious. You can make sensible interesting comments which I promise to respond to or you can bore me and be ignored like our friend Sevo.

And without books, authors wouldn't exist.

This does not imply that books create authors, or that books cause authors. Authors create books.

Without food, people don't exist.

This doesn't imply that food creates and causes people. People create people. People create and cultivate food.

States create hierarchies. States use drones. States create hierarchies in which to use the drones. The drones don't cause any of that, your figures of speech notwithstanding.

You don't understand cause and effect, and you don't understand the difference between alive and inert.

is mtrueman as retarded as his comments make him appear to be?

Serious question.

"is mtrueman as retarded as his comments make him appear to be?"

Yes, and evil too. Pure evil.

"You don't understand cause and effect, and you don't understand the difference between alive and inert."

I'm not talking about cause and effect, alive and inert. Read my first post on the matter again before you lead yourself further from my meaning.

As far as I can tell from your vague musings, you think we should avoid technology because it goes "hand in hand" with hierarchy and bureaucracy.

You cite examples of state hierarchies and bureaucracies.

I'm pointing out that technology doesn't organize people; people organize themselves and each other. As much as you may think that technology and hierarchies and bureaucracy go hand in hand, state power and hierarchies and bureaucracies go hand in hand even more. In fact, there's a direct, causal relationship between state power and hierarchies and bureaucracies, including the ones you associate with technology. If you confuse correlation with causation, and avoid technology in some ill-fated attempt to avoid hierarchy and bureaucracy, while ignoring the state, you won't succeed. You'll simply avoid efficient tools, while suffering the same costs.

If you want to remind me that you think it's still perfectly valid to notice that technology and hierarchies and bureaucracies go hand in hand, fine. It's your point. However, I think that your point is so insignificant and immaterial to my point that I really don't care. Noticing correlations and reacting with absurd, Luddite solutions doesn't really interest me.

If you wish to clarify your point, please do. Because vaguely claiming you're being misunderstood doesn't exactly explain your point, whatever it is.

"you think we should avoid technology because it goes "hand in hand" with hierarchy and bureaucracy"

I don't think I ever said we should avoid it. I am only asking we acknowledge that we have to pay. That price is autonomy, self-reliance and even freedom. A high price for Libertarians, I would have thought.

Otherwise I pretty much agree with you. But you misconstrue me, reading what you think I say, rather than what I say. You assume at least twice stuff of me I disagree with. Avoid? No. More like approach with caution. And be aware of the price to be paid.

Please prove that the price of technology is autonomy, self-reliance, and freedom.

Also, please show me where you said that the price of technology is autonomy, self-reliance, and freedom in this thread, instead of hierarchies and bureaucracies.

Because, really, if you're not actually going to take credit and claim what you're actually saying, there's no point in complaining about being misunderstood.

"Please prove that the price of technology is autonomy, self-reliance, and freedom."

Has a deskilling effect separating the cognoscenti from the operators. Think McDonald's cash registers.

Collectivizing us as a result of the impulse to economies of scale. Think Facebook.

These aren't really proofs, but I'm not sure I know what you are asking for. I just gave a few thoughts for you to disagree with and if you want more, maybe I can come up with some.

"Also, please show me where you said that the price of technology is autonomy, self-reliance, and freedom in this thread,"

I said it right here:

"I don't think I ever said we should avoid it. I am only asking we acknowledge that we have to pay. That price is autonomy, self-reliance and even freedom. A high price for Libertarians, I would have thought."

"there's no point in complaining about being misunderstood."

Only meant to correct your mistaken assumptions. A more generous man would be thankful.

In the land of my little pony, perhaps yes. On Earth, no.

Human agricultural activity grows enough food to comfortably feed all of humanity. And free markets, by balancing supply with demand, and by rewarding people who identify the optimal way to distribute supply to those who demand it would ensure that all but a small fraction of that billion (guys like Namanamo hunter gatherers in Brazil) would have access to that food.

Every time crops rot unconsumed, fields are left fallow rather than actively farmed, where ships don't make it to their ports of call, there is a government (or some warlord) squatting in the way preventing it.

Given that 15 years ago they flooded something like 30% of their arable land for a giant hydroelectric project, the fact that they are regressing to the mean is in no way evidence that nation states are not responsible for malnourishment or starvation. It merely means that the North Korean dictator is for now grinding the masses a little less under his boot.

You mean the state that prevents charities from using leftovers to feed the needy, that state?

Gosh, I wonder why that cheap food is being thrown away rather than sold to hungry people who are motivated to buy it? Gosh, those greedy capitalists are sure idiotic for leaving money on the table like that.

"You mean the state that prevents charities from using leftovers to feed the needy, that state?"

Are you suggesting that the state is preventing charities from distributing table scraps to a billion malnourished, and that is the challenge of hunger? Believe it or not, but I'm skeptical about government's role in our lives, but I think you are barking up the wrong tree in this case. Governments want stability. Food riots interfere with that. Therefore governments have an interest in a well fed population, not a malnourished one.

"Gosh, I wonder why that cheap food is being thrown away rather than sold to hungry people who are motivated to buy it? Gosh, those greedy capitalists are sure idiotic for leaving money on the table like that."

It's not idiocy, I assure you. Sometimes it makes more economic sense to leave food to rot in the fields than to take it to market. This is due to market forces rather than government dictate.

I'm sure sometimes it does. But *most* of the time - I would say upward of 99% of the time - when food is rotting, it's not because those lazy farmers decided that taking it to market was tooo hard.

It's because someone is preventing the food from getting to customers. And unless it's a local warlord, that someone is almost universally drawing his paycheck from a nation state's treasury.

"it's not because those lazy farmers decided that taking it to market was tooo hard"

It's because the value of what's in the fields is less than or not appreciably greater than the effort to get it to market. You don't need the presence of a local warlord for this to happen. It happens even in places where there are no local warlords. I really think you are wrong headed in putting so much stock in the notion that governments use hunger to control us. Governments subsidize the fuel necessary to grow crops. They have campaigns to urge the population to eat more agricultural products.

The transportation cost of getting something to market is part of the end price of the good to the consumer. If those goods cost less than the price of getting them to market then either transportation costs are artificially high or in any case the market price of those goods is not high enough. The only reason why prices across the entire market would be so out of proportion, is some kind of political interference. Another problem to lay at your ideological doorstep.

"The only reason why prices across the entire market would be so out of proportion, is some kind of political interference"

Have you considered the possibility that a bumper crop will drive down the prices?

"Have you considered the possibility that a bumper crop will drive down the prices?"

How will the bumper crop drive down the prices if no one is bothering to carry it to the market?

In order for the bumper crop to drive down prices, it has to be available for the consumer to buy, which means that people are benefiting from the lower prices and thus getting fed.

"which means that people are benefiting from the lower prices and thus getting fed."

I was talking about food wastage.

No, you were talking about how super-smart you are, while blatantly demonstrating otherwise.

"No, you were talking about how super-smart you are, while blatantly demonstrating otherwise."

Actually it was food wastage.

I already countered your argument you diminutive halfwit, so either respond to it meaningfully or stop whining.

I think I understand now. The great mind of mtrueman can't follow a logical chain of arguments more than a couple levels deep.

You said... people are starving

Because... food is rotting in the fields

Because... it's too expensive to take to market

Because... prices are too low

Because... there's a glut of food

You're like the unfunny version of Yogi Berra. In order for prices to go down due to a glut, the product has to make it to market. In which case, it's available for people to buy. Nobody starves because the price of food is too low.

Now you pretend it's all about "food wastage" but you haven't actually shown that any food which could plausibly end up in an actual starving person's belly is being wasted. The hypothetical tomato rotting on the vine in the US is not going to end up in the hypothetical starving African child's belly even if the farmer gave it away for free.

The problems with that distribution channel are not a result of market economics.

"Now you pretend it's all about "food wastage""

You've misunderstood me. My advice: go back to the beginning and start re-reading. I'll make it easy for you:

mtrueman|9.22.15 @ 1:08PM|#

" Given the manifold challenges of poverty and environmental renewal that technological progress can help us address in this century, the wiser maxim to heed is "He who hesitates is lost.""

That's a rather odd way to end a longish piece that was more about various models of future events than anything else. Since the days of Ehrlich's prediction, technology has progressed to the point where more food is produced than at any other time in history. But at the same time, there are more people suffering malnutrition, about a billion. Technological progress helps us to grow more food certainly. But it doesn't appear to help so much in alleviating these challenges of poverty, putting food into a billion hungry stomachs.

(The numbers I use here frankly don't rise to my usual levels of accuracy, but the argument stands in any case.)

The mere existence of more corn doesn't drive down the price of corn. The amount of corn available to consumers can, however. Meaning it has to be able to reach the market before it can affect prices, as kbolino said.

were you?

You were making claims about prices and the factors of production that make no sense whatsoever. Don't be a slippery little turd.

"Meaning it has to be able to reach the market before it can effect prices, as kbolino said."

Some of it reaches the market. Some of it rots in the fields. You've never lived on a farm? You're not telling me anything terribly interesting, I'm afraid.

You're forgetting that starving people are easy to control. See Cambodia.

Governments frequently use starvation as a means of control. That's not markets.

Just assuming that governments want a well-fed population to avoid food riots is simply an ignorant mistake, which ignores the history of the subject.

Thanks, server squirrels. 😉

"Governments frequently use starvation as a means of control."

You typically over-estimate the competence of a government like that of Democratic Kampuchea. They had no intention of starving the population. In fact their plans were to transform their nation into a rich rice bowl that would develop the people like never before. Cambodia's starvation was unplanned and a result of a lack of government control.

If you think your government is using hunger to control you, then make your case. You don't know enough about Cambodia, that's obvious.

Sorry, but Cambodia was exporting food under a centrally planned economy, while their population starved.

If you want to attribute that to an unintended consequence, then, that's fine.

However, the problem is your own naivety, and a rather rosy and revisionist history of Cambodia.

"Sorry, but Cambodia was exporting food under a centrally planned economy, while their population starved."

Again, you are not disagreeing with anything I wrote.

There's nothing rosy in the death of hundreds of thousands of Cambodians. The naivete is attributing these deaths to some central planning. What caused these deaths was a combination of incompetence, negligence, cruelty and bad luck, weather-wise. Those who died were city dwellers from Phnom Penh. The government had transferred the population of the entire city, overnight, to the countryside where they were to work in the fields, and with any luck, shed their bourgeois ways. If they died, so be it.

Where's the "lack of government control" in this situation?

A significant percentage of the people executed in the Killing Fields by "Brother #2" were peasants who had been designated enemies of the state. Brother #1 had decreed that they were to be smashed, and his subordinates did just that to the skulls of the unfortunates.

It's a superlative signpost to Mr. Trueman's lack of a moral compass; he thinks facilely denying those peasant victims ever existed will establish him as a superior intellectual.

His parents must be so proud.

"A significant percentage of the people executed in the Killing Fields"

I see you've changed your tune. Gone are those who starved to death. Now you want to talk about peasants being executed in the killing fields.

"Where's the "lack of government control" in this situation?"

The government's intention was to put these people to work in various agricultural-related projects. They really did envision a nation propelled to riches from the purity of their revolutionary spirit. Irrigation, massive collective farms etc. Nothing came of these schemes. Nothing at all except death and suffering.

Sorry, but blaming the evil of central planners on incompetence, negligence, and bad weather is the height of naivety. And, "bad luck" is just question begging: I declare it an accident, by assuming so.

Historically speaking, human beings are fairly robust to bad weather.

"Bad luck" is always the excuse for central planning failures. Sorry, but completely foreseeable consequences are always intended.

Still, I see what you mean: they really just didn't have enough control. When I think about the Khmer Rouge emptying every city in Kampuchea, all I can think is, "Wow: Pol Pot was really hands-off."

-Robert A. Heinlein

"Sorry, but blaming the evil of central planners on incompetence, negligence, and bad weather is the height of naivety."

You are over-estimating the role of the central plan in DK. Things were done on an ad hoc basis. The evacuation of the capital was not planned, but according to those who were there to witness it and participate in it, the evacuation was done seemingly on the spur of the moment, with a great deal of confusion, even on the part of the KR cadres. As I said before, you overestimate the competence and foresight of the KR. There is absolutely no evidence that the famine was centrally planned.

Sorry, but you're just attributing great evil to incompetence and confusion and moving too quickly.

There's a great deal of evidence that starvation of people was intentionally planned, as well as executions and murders.

The evacuation of the cities was explicitly planned. The fact that you find people who think it was done "spur of the moment" does not refute the historical facts: that the Khmer Rouge implemented the evacuation of cities and mass starvation intentionally. There is a great amount of historical evidence for this.

Your views are just revisionist history. "Hey: I see an account describing the evacuation of Phnom Penh as 'haphazard' and 'confused': therefore, I conclude that it was just a big accident."

Pure naive, revisionist history.

" I conclude that it was just a big accident."

Not centrally planned is all. Is that so difficult to comprehend?

Oh, it's easy to comprehend. The evacuation of the cities was intentional, and not an accident, but it wasn't centrally planned, by which you mean the execution was delegated to certain underlings in a haphazard way.

In other words, you're arguing about quibbling details, instead of the actual point anyone is making.

Got it.

"The evacuation of the cities was intentional, and not an accident, but it wasn't centrally planned"

It's the starvation of large numbers of Cambodians that you claim was centrally planned but are unable to provide any evidence of these central plans.

There's great historical evidence that it was planned.

And, you're not providing any evidence that it wasn't, except your assurances that you know the minds of the leaders of the Khmer Rouge, and that it was all just a big misunderstanding.

I am now realizing that Mr Trueman is one of that most depraved portions of humanity, worse than child molesters, worse than mass murderers like Ted Bundy.

He's the piece of shit who will support Konrad Adenauer one day, Adolf Hitler the next, and Stalin on day three, will - with not a single twinge of his conscience - not hesitate to rat out his neighbors knowing that they will never be seen alive again, and wheedle his way to becoming a REMF because blood is icky, and he's too talented to be wasted spilling it.

"I am now realizing that Mr Trueman..."

... knows a little more about Cambodia during the 70's than I do. Pure evil!

If you know anything about what happened in Cambodia, and claim that the problem wasn't caused by deliberate Khmer Rouge policy to starve a significant portion of the population, then yes, you are evil and depraved.

"To Keep You Is No Benefit. To Destroy You Is No Loss"

"If you know anything about what happened in Cambodia..."

But I do. I don't see any deliberate policy. In fact, while taking over the country, starting in the eastern provinces, where peasant parents would hand children over to the KR for military training and a full stomach, the KR had carried out policies of city evacuation previously, with smaller towns and cities, without the disastrous results of mass starvation that followed the forced evacuation of the capital. Evacuating the capital and the multiplying complications overwhelmed the regime's capacity to deal with it. I think that's the reason and not any deliberately planned starvation.

I don't see any deliberate policy

Yes, lots of people create armies and sic them on the population by accident. Why, my neighbor just stumbled into being a warlord the other day.

What a fucking joke.

I think you are wrong to suggest the state has an interest in seeing its subjects starve to death. At times, yes, but these are exceptional. When North Korea was faced with a combo of flood, drought and socialist planning, and a massive die-off was in the offing, they demanded, pleaded and begged for food aid wherever they could get it, even from nations like Japan, South Korea and America.

I didn't say starve to death.

Remember, we're talking about starving and malnutrition.

People who starve to death die, and skip the starving and living with malnutrition statistics entirely.

And there are far fewer people starving to death now than at any other time in history. Yet another way that the modern world is far superior to the past.

"Remember, we're talking about starving and malnutrition."

I don't agree that the state has an interest in seeing its subjects suffering from malnutrition.

Since the world has had lots of states, and currently has many states, I'm not sure what you're talking about when you refer to "the state".

Whatever it is, it's definitely nothing I'm talking about.

"Whatever it is, it's definitely nothing I'm talking about."

Let me know as soon as you figure out what you're talking about.

Revisionists gotta revise, I guess.

More evidence for ad hoc muddling. No evidence for centrally planned extermination offered. You even concede that the KR gave food to the city evacuees.

By that logic, I could grab you, drop you off in the wilderness, hand you two bowls of rice soup, and say "Hey: I gave you food. If you starve, it's just an accident. I muddled." I'm not sure you'd understand or give me the benefit of the doubt, however.

If you can only see history through a rosy, revisionist history lens, then I'm not sure much can be done for you.

After all, I was just trying to build utopia in the middle of the desert, to avoid those meddlesome capitalists.

If a few people starve to death or die of exposure: eh, I muddled. Bad luck.

Bad luck is the excuse of the evil, the ignorant, and the incompetent. It's an appeal to luck as a force, like "god" or "spirituality".

What collectivists typically refer to as "bad luck" is really just noticing the effects of freedom, or a lack thereof. They don't understand that. So, it's just some amorphous "luck" that ruins their plans.

For example: the founders of the USA set out to create the most limited government they can. Nothing else. They end up creating the most powerful nation on the planet, and the largest economy the world's ever seen. That was caused by freedom. However, it was unintended. So it's ascribed to "luck."

The Chinese, the Soviets, the Khmer Rouge, etc., seek to create socialtopia on the planet. They rule over people like dictators. The results are starvation and stagnation and death, and failure. We assume it wasn't planned, and ascribe it to "luck."

However, the very intentional preservation of freedom, or a lack thereof, was the cause. But the ignorant can't understand that.

It's similar to the way a medieval farmer would think that our technology was magic: it helps him make sense of it, but it's really just the way he handles ignorance.

Foreseeable consequences are always intended. There's no luck, but it may appear that way to the stupid.

"There's no luck..."

... too bad we can't say the same thing for smugness. Still no sign of that evidence I was asking for....

"By that logic, I could grab you"

Rather than your speculating on grabbing me and taking me into the wilderness, I had hoped for some evidence that the famine was centrally planned.

But it's a historical fact that people had food rationed to them by state dictate, and had their rations reduced to starvation levels. That's evil. That's not an accident.

It's also a historical fact that they extracted rice from the population for exports, while they were starving. That's evil. That's not an accident.

The evidence is out there. I suggest google.

However, if you find the excuses and revisionist history of the Khmer Rouge to be very convincing, then you go ahead and embrace that. It's very brave and unique, if somewhat foolish.

For example, when you look at historical accounts of the people, and the translations of their language, they refer to the period of the Killing Fields as a period of "starvation" and "withholding of food", but they never refer to it as a period of "famine."

I don't really have time to correct your ignorance here, though. But if you think that the deaths of a few million people were a big "whoopsie" of a state which was actually trying to maximize human well-being with central planning, then I really don't know what to tell you, other than you win the "most naive person I've ever talked to" award.