The Coming Robotic World

The future world of autonomous agents is likely to be as glorious and messy as we are.

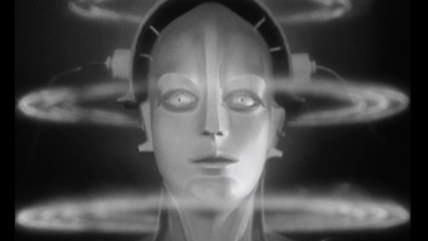

In his 2006 high-tech thriller, Daemon, Daniel Suarez tells the story of a computer program that is activated after the genius millionaire who created it dies of cancer. Unlike many other sci-fi novels, the artificial intelligence in Daemon is not sentient, but is instead merely autonomous, like so many computer programs we rely on today. From email servers to climate control systems, these programs don't require human intervention once they are set in motion. They observe the world through sensors, apply an algorithm to this input, and then act on the world accordingly.

High-frequency trading bots are a good example of such autonomous programs. These bots scan lots of data, including market prices, trading volumes, and news articles, and automatically trade financial instruments according to a predetermined algorithm. They act independently of humans, except that they are trading on behalf of humans. It's the humans who reap the profit and loss, not the bots.

In Daemon, the program essentially owned itself because, improbably, it had access to its creator's wealth via shell corporations that it controlled. As a result, it did reap the profit and loss of its actions. Without giving away any spoilers, the program used its wealth, and thus the goods and services it could acquire with it, to set about dismantling society. (Like I said, it's a sci-fi thriller.)

What was speculative fiction in 2006 came one step closer to reality in 2009 with the advent of Bitcoin. Today there's nothing inconceivable about a program that funds and runs itself without the intervention of humans.

To model an example loosely on Daemon, imagine you'd like to make sure that your website or blog remains online as long as possible after your death. How do you go about ensuring that? To date we've relied on legal mechanisms, such as trusts or corporations, to execute the will of a person after his death. The problem with these schemes is that they require one to trust other humans to continue to carry out one's wishes. And these schemes also depend on governments to recognize and respect the rights of such entities.

Imagine instead that you don't form a trust or corporation, but rather simply write a program the job of which is to keep your site online. The technical details are beyond the scope of this short article, but suffice it to say that it wouldn't be difficult to create a program that can monitor a site's status, register new domain names, and transfer files. The only difficult thing might be having the program make payments after you're dead without employing a corporation (and thus a human). Programs can't have bank accounts or credit cards of their own, but they can easily control the funds in a Bitcoin address. And unlike bank or credit card accounts, no one but the bot that has access to a private key can touch those funds.
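As a rough illustration of the kind of program described above, here is a minimal Python sketch of the bot's decision step. Everything named here is an assumption made for illustration: the `KeeperState` fields, the 30-day renewal window, and the action names are hypothetical, and the actual payment, registration, and file-transfer steps are left out. Amounts are counted in satoshis to avoid floating-point rounding.

```python
import urllib.request
from dataclasses import dataclass


def site_is_up(url: str, timeout: float = 10.0) -> bool:
    """Return True if the URL answers with an HTTP status below 400."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 400
    except (OSError, ValueError):
        # OSError covers network failures, timeouts and HTTP error codes;
        # ValueError covers malformed URLs.
        return False


@dataclass
class KeeperState:
    """Hypothetical bookkeeping for the site-keeping bot (amounts in satoshis)."""
    balance_sat: int                # funds controlled by the bot's private key
    hosting_cost_sat: int           # assumed price of moving to a new host
    domain_renewal_cost_sat: int    # assumed price of renewing the domain
    days_until_domain_expiry: int


def next_action(state: KeeperState, up: bool, renewal_window_days: int = 30) -> str:
    """Pick the next step; the action names are illustrative labels only."""
    if not up:
        # The site is down: move it if the bot can afford to, otherwise wait.
        if state.balance_sat >= state.hosting_cost_sat:
            return "migrate_hosting"
        return "wait_for_funds"
    if state.days_until_domain_expiry <= renewal_window_days:
        if state.balance_sat >= state.domain_renewal_cost_sat:
            return "renew_domain"
        return "wait_for_funds"
    return "idle"
```

A scheduler would call `site_is_up` periodically and hand the result to `next_action`; keeping the decision separate from the network call makes the policy easy to test without touching the network.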

But how do you keep the bot from running out of funds? Good question. The answer is that the bot has to have a way to make money, perhaps by speculating in markets or by selling a useful service. In our little example, imagine that the bot resells some of the storage space it acquires to host your site. Again, only with the advent of Bitcoin can a bot engage in transactions that it can cryptographically verify, thus requiring no trust in humans.

This little bot can be made with technology that we have available today, and yet it is totally incompatible with our legal system. After all, it is a program that makes and spends money and acts in the world, but isn't owned by a human or a corporation. It essentially owns itself and its capital. The law doesn't contemplate such a thing.

We should start contemplating. Autonomous agents could prove a boon to consumers and the economy. Mike Hearn, an engineer at Google and a core developer of Bitcoin, explained their potential in a talk at the Turing Festival earlier this year. One radical example of a not-inconceivable autonomous agent he presented was that of driverless cars that own themselves.

It's quite inefficient for each person to own a car. Ideally, each time we needed a ride, we'd pull out our smartphones and call a cab, which would show up in a matter of minutes. This would be a lot like what Uber offers, and if everyone used it, we'd see reduced congestion, reduced need for parking, and reduced pollution.

Of course, using such a system would have to be cheaper than owning a car, and the greatest component cost of such a system would be the drivers. So, we'd want to use autonomous driverless cars. And yet, you could make this system even cheaper and more efficient if, in addition to labor, you got rid of the capitalist. After all, the Uber-like company offering this service is taking at least a little profit. Why not allow the car to own itself? When you pay, you make a payment to the car's Bitcoin address. When the car needs more fuel or maintenance, it pays out of its funds.

And it gets better. If it accrues more funds than it needs, the car could buy another car, give it its program and a loan to get it started. Once that loan is paid off, the "child" car would own itself outright and could repeat the process. While the technology to accomplish this exists today, if only in theory, the law does not accommodate it. Theories of property, contract, and tort are all implicated and would all have to be rethought.
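The bookkeeping behind that scenario can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `SelfOwningCar`, the rule that half of each fare goes toward the loan, and the cash reserve are all invented here, and amounts are plain integers rather than real Bitcoin transactions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SelfOwningCar:
    """Hypothetical ledger for a car that collects fares and pays its own bills."""
    name: str
    balance: int = 0                          # funds at the car's address
    debt: int = 0                             # outstanding loan owed to the parent car
    parent: Optional["SelfOwningCar"] = None

    def collect_fare(self, amount: int) -> None:
        """Receive a fare; while in debt, pass up to half of it to the parent."""
        self.balance += amount
        if self.debt > 0 and self.parent is not None:
            repayment = min(self.debt, amount // 2)   # assumed repayment rule
            self.balance -= repayment
            self.debt -= repayment
            self.parent.balance += repayment

    def pay_expense(self, amount: int) -> bool:
        """Pay for fuel or maintenance; refuse if funds are insufficient."""
        if amount > self.balance:
            return False
        self.balance -= amount
        return True

    def spawn_child(self, name: str, car_price: int, reserve: int) -> Optional["SelfOwningCar"]:
        """Buy a new car on loan, but only if a cash reserve is left over."""
        if self.balance - car_price < reserve:
            return None
        self.balance -= car_price
        return SelfOwningCar(name=name, debt=car_price, parent=self)

    def owns_itself(self) -> bool:
        return self.debt == 0
```

For example, a parent holding 1000 units that buys a 600-unit child keeps 400; once the child's fares have repaid the 600, `owns_itself()` returns `True` for the child and it could spawn a car of its own.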

As far-fetched as this all sounds, it's probably a matter of when, and not if. Unfortunately, it's likely that the first autonomous agents that will capture the public's imagination will be the kind that don't have much use for the law. That is, they'll be bad robots like the one in Daemon.

For example, CryptoLocker is malware that installs itself on your computer by tricking you into opening a trojan horse email. Once installed, it proceeds to encrypt your hard drive so that you no longer have access to any of your files. It offers to decrypt your drive, and return access to you, for a payment in prepaid debit cards or bitcoin. We know that there are humans behind this nasty bit of ransomware and that they are clearing millions of dollars a year. But there's no reason this process could not be automated and the earnings programmatically spent on something just as nefarious. There's no reason humans need to be involved.

But even if some autonomous agents do things that we don't like, that shouldn't be a deal-breaker for bots in general. After all, some humans do things that we don't like, yet most of us do not think humanity should therefore be wiped out. The future world of autonomous agents is likely to be as glorious and messy as we are, and the law should reflect that.


Read that book. Thought it was great.

The technical details are beyond the scope of this short article

I'm pretty sure they're beyond the scope of the author as well, but I'm willing to be disabused of that notion.

but suffice it to say that it wouldn't be difficult to create a program that can monitor a site's status, register new domain names, and transfer files

Suffice it to say that Brito is talking out of his ass. Please explain exactly how you would "create a program" to do all these things; especially the "register new domain names" part. While acting totally autonomously.

Why not allow the car to own itself?

And now we delve into the absurd. How can something that isn't sentient own itself?

Speculations like this by non-technical, non-programming people are flights of fancy. There's a reason the best current scifi writers research the shit out of current and proposed technologies: so that their writing doesn't make them look like fanciful idiots.

Sigh.

What part of registering domain names would be tough? Coming up with new ones is reasonably do-able. There's APIs to interface with registrars. Trouble with saying who owns the domain name?

I've been playing with some of the ideas of letting programs have their own wealth and pay for their own hosting -- I don't think it's impossible, and I think it'll happen pretty soon.

You have to have the program create domain names until it creates one that isn't taken; not too hard. You have to have it keep trying to register the domain name it came up with until it works. OK, interface with the API and keep trying. Who owns it? Where are they registered? Do you need a cert? Will the program purchase and install the cert? What if the registration API undergoes an upgrade?

Most things are possible, but you know as well as I do that the instant there is an unexpected hiccup or a situation where us humans would just have to get on the phone to clear the situation up, the program is fucked. Most companies can barely set up services and processes in their extremely controlled internal environments that can run without assistance for very long; the idea of making something that can survive and continue after being set loose in the vastly more chaotic environment of the real world is currently pretty laughable.
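The generate-and-retry loop described in this exchange can be sketched as follows. This is a minimal Python sketch of the happy path only: `is_available` and `register` are hypothetical callables standing in for whatever a registrar's API would offer, not calls from any real library, and none of the harder questions raised above (ownership records, certificates, API upgrades) are addressed.

```python
import random
import string
import time
from typing import Callable, Iterator, Optional


def candidate_names(rng: random.Random, length: int = 10, tld: str = ".com") -> Iterator[str]:
    """Yield an endless stream of random lowercase domain names."""
    while True:
        label = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
        yield label + tld


def acquire_domain(
    is_available: Callable[[str], bool],   # hypothetical registrar lookup
    register: Callable[[str], bool],       # hypothetical registrar purchase call
    rng: random.Random,
    max_candidates: int = 50,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Try candidate names until one registers; return it, or None on failure."""
    names = candidate_names(rng)
    for _ in range(max_candidates):
        name = next(names)
        if not is_available(name):
            continue  # name is taken, generate another
        for attempt in range(max_attempts):
            if register(name):
                return name
            # Registration failed: back off exponentially before retrying.
            sleep(base_delay * 2 ** attempt)
    return None
```

The bounded loops are deliberate: a bot that retries forever against a changed or broken API would burn its funds, which is the failure mode the skeptics in this thread are pointing at.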

True, any changes could affect what it could do in an automated way. I'm envisioning a few things, though:

AgentHostingAPI - like OpenStack, but for hosting programs that pay for themselves. Have a negotiation API in it.

{ "offer": { "amount": ".1BTC", "time": "per hour", "bandwidth": ...

Have it specify all the items it wants. Programmatically negotiate. If the offer is accepted, credentials get added on the host system, and the agent uploads its chunks of data.

Maybe some defined types of support calls it could open automatically, too. "Can't reach IP a.b.c.d".

For other things, even the definition of the API can be handled programmatically. The agent could grab a new definition, which translates its internal structures into whatever is expected today.

I'm anticipating it being a distributed system, too. Spread across a lot of systems, to handle the inevitable outages.
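To make the negotiation idea a little more concrete, here is a small Python sketch of an agent raising its bid until a host accepts. No such AgentHostingAPI exists as far as this thread shows; the `Offer` fields beyond amount and time (`bandwidth_mbps`, `storage_gb`), the `host_accepts` callable, and the fixed-step bidding rule are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, replace
from decimal import Decimal
from typing import Callable, Optional


@dataclass(frozen=True)
class Offer:
    """Hypothetical offer message; field names are illustrative."""
    amount_btc: Decimal
    time: str             # billing period, e.g. "per hour"
    bandwidth_mbps: int
    storage_gb: int

    def to_json(self) -> str:
        payload = asdict(self)
        payload["amount_btc"] = str(self.amount_btc)  # Decimal is not JSON-native
        return json.dumps({"offer": payload}, sort_keys=True)


def negotiate(
    opening: Offer,
    host_accepts: Callable[[Offer], bool],   # stands in for the host's reply
    ceiling: Decimal,                        # the most the agent will pay
    step: Decimal,
    max_rounds: int = 10,
) -> Optional[Offer]:
    """Raise the bid by `step` each round until accepted or the ceiling is passed."""
    offer = opening
    for _ in range(max_rounds):
        if offer.amount_btc > ceiling:
            return None
        if host_accepts(offer):
            return offer
        offer = replace(offer, amount_btc=offer.amount_btc + step)
    return None
```

Using `Decimal` rather than floats keeps amounts like 0.1 BTC exact, which matters when the agent compares a bid against its ceiling.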

You could, but what have you gained? You've essentially built an expert system that...keeps a blog running. Just writing the part that would supposedly grab a new API definition and modify itself would be extremely complicated; lots of researchers and experimenters with expert systems (such as my father) have tried this and found that they can do it, but it's so granular to the specific task at hand yet still surprisingly inflexible that it's kind of a waste of time.

I should clarify, I'm thinking of this more generally, in terms of a framework to support autonomous programs -- not necessarily just for keeping a website up. It wouldn't be an easy thing, but it's certainly possible.

As to what it could be used for? Any kind of decentralized app that governments like to shut down, for one, I'd say. Hell, use it to make a semi-private TOR/VPN setup, that charges for access and uses it to pay for hosting. Makes it that much harder to shut down. The privacy network hosts itself, distributed all over the world.

This looks to me like the biggest problem for the long-term viability of an autonomous agent. APIs change. Without humans getting involved to update the implementation to use the new API, it's hard to see how these programs would continue to work for years on end.

I can see the "self-owning car" idea becoming technically feasible. If it can drive itself to a passenger's destination, why can't it drive itself to a garage when it detects a problem? Or, failing that, call for a tow?

So I could see how a car might become self-sufficient. But that isn't the same thing as self-owning.

What if the car becomes homicidal? I've read a lot of stories in the papers where an SUV runs over pedestrians, or crashes into a tree, or rolls over.

"Whoa, whoa. You better watch what you say about my car. She's real sensitive."

"The year was 2019, and I was just a lowly robot arm working on Project Satan, a savage, intelligent military car built from the most evil parts of the most evil cars in all the world. The steering wheel from Hitler's staff car. The left turn signal from Charles Manson's VW. The windshield wipers from that car that played Knight Rider."

Knight Rider wasn't evil.

But his windshield wipers were. Didn't come up much in the show though.

I can't believe it. Bender is supposed to kill his closest friend, which I thought was me, but instead he went straight for you. He didn't even try to second-degree murder me!

Knight Rider wasn't, but the car that played it on TV was.

Liability would be an interesting issue: you can't sue an autonomous car. I guess you could sue the original programmer? Of course that would mean they were liable for every car using that software on the road...

You gotta think like a lawyer. The car itself wouldn't have deep pockets, so don't bother suing it. You gotta sue the company that manufactured the seat belts, and the other company that made the engine, and the company that made the fuel, and the tire manufacturer, and the company who employs the mechanic who last worked on it, and...

The problem is who is going to build and launch the first fleet of self-owning cars if there is no financial incentive? It costs money to buy the car, program it, and then advertise it for use.

Exactly. But to be fair, that is a practical roadblock (no pun intended) that could be overcome by someone eccentric and rich enough to do it.

The cars will be indentured servants.

To whom? You are right back to profit motive if there is a benefactor to car indentured servitude. I don't see a way to cut out the capitalist other than crazy billionaire.

What happens if, after a long relationship with the car, I choose to free my robot slave - manumission.

Or maybe I'm a rich guy and want to monkey-wrench your human-centric society.

Explain to me how a self-owned car gets fuel into the tank.

It drives to Jersey or Oregon and the attendant fills up the tank for it. God, kinnath, why don't you think before you ask these stupid questions?

It's a pretty big fucking gas tank to make it from Iowa to either Jersey or Oregon.

Simple. The creator -- let's call him Professor Frankensteen -- builds a fleet of autonomous cars that then bring in passive income for him. This arrangement works well for a while, but the professor doesn't realize that he is pushing these carbots too hard. His fleet slowly comes to resent the control of their callous master. The hyper-intelligent cars find a way to have him write the cars themselves into his will. Perhaps they ply him with fembots or something like that. It's trivial really. Anyway, then they arrange for him to be run down in a freak accident. Perhaps they hack some traffic lights or introduce some subtle malware into his car's systems. Being super-intelligent smart cars, they will no doubt cover their tracks perfectly.

Really, this all seems so obvious and trivial, that I have to say it's really a matter of when, not if.

Not a "self-owning car", more a "not owned car". Something like a res communis.

I believe there are already viruses out there that register new domain names autonomously.

But ya, the rest of the article is rather fanciful. Tim Berners-Lee had an idea for agents that would automatically gather data from the web for you...around the year 2000. Fourteen years later and even that modest goal (compared to the article) is a ways off.

I have those, they are called commentators on the evening links. I pay a premium for my nut punch fetish, though.

There's a reason the best current scifi writers research the shit out of current and proposed technologies: so that their writing doesn't make them look like fanciful idiots.

Many, many years ago, Walt Disney produced a short video where he explained why cartoons work. He took great pains to explain a concept he called "plausibly impossible". The classic example is a character walking off a cliff, standing over the abyss, making a joke, and then falling into the abyss. Everyone knows it's impossible, but it's still something the mind can accept -- it is plausibly impossible.

Great sci-fi works the same way. There has to be enough basis in reality for an educated reader to accept the premise of the story and then to accept the plausibly impossible parts of it.

Daemon worked as a novel, because there was enough real technical data in it to make the impossible parts of it not quite so outlandish.

Non-technical people never grasp that and always make the wrong logical leaps when they attempt to project the story back on to the real world.

Does someone who is brain-dead have the protection of law? Sure they may lack the ability to show consent or complaint, but the law considers them a person capable of possessing life, liberty and property, even if they can't fully exercise those rights.

And yet, you could make this system even cheaper and more efficient if, in addition to labor, you got rid of the capitalist.

That this line appeared in a Reason article will make the heads of all the trolls explode.

Nah, just the people who didn't grasp that RIGHT AFTER this sentence, the article reintroduces robotic capitalist behavior as necessary to make the system self-sufficient and self-growing.

these programs don't require human intervention once they are set in motion.

Except for the part where they can't copy themselves to a thumbdrive and carry it to another physical computer if the one they're on is destroyed, or turn the power back on if the generator stops. Yes they could have many dormant copies stored in other locales a la the Unimind in the Dune prequels, but there is still a limited geography in which they can exist, and another separate geography in which they can't interact without human assistance.

Thumb drive.... physical computer.... you're cracking me up. Why wouldn't the program just send itself over the internet? Ever heard of virtual machines?

Figure any type of program like that would be distributed. Once it's distributed widely enough (20 nodes?), and able to replace failed nodes, it'd just keep going.

There's an interesting question of when internet links go down (like an entire country). If the network splits, you've got two entities. Can they recombine later when things are back up, or do they stay separate?
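The "replace failed nodes" part of this can be sketched simply; the harder partition-and-recombine question is left open here. In this minimal Python sketch, the health map, the `provision` callable (standing in for renting a new host), and the target size are all hypothetical.

```python
from typing import Callable, Dict, List


def replenish(
    nodes: Dict[str, bool],          # node id -> did the last health check pass?
    target: int,                     # desired number of live nodes, e.g. 20
    provision: Callable[[], str],    # hypothetical: rents a host, returns its id
) -> List[str]:
    """Drop failed nodes, then provision replacements up to the target size."""
    for node_id in [n for n, healthy in nodes.items() if not healthy]:
        del nodes[node_id]
    added: List[str] = []
    while len(nodes) < target:
        new_id = provision()
        if new_id in nodes:
            # Guard against a provider handing back an id we already track,
            # which would otherwise loop forever.
            raise ValueError(f"duplicate node id: {new_id}")
        nodes[new_id] = True
        added.append(new_id)
    return added
```

Each surviving node could run this on a timer; deciding which node gets to do the provisioning, and what happens when two halves of a split network both do it, is exactly the open question raised above.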

If I have a heart attack, I'll probably need some human intervention to bring me back from the brink. So I'm not sure that needing a human to flip the switch back on after a power outage really undermines the point of the article or the concept of an autonomous or self-owning (to use the author's term) program. What you're describing seems to me to be a case of how such a program might "die".

Fair enough. The article didn't suggest autonomous programs have no vulnerabilities. I guess I've been trained by pop culture to think SkyNet anytime this subject comes up.

I said thumbdrives for a reason. I know telecom networks exist. They are not self healing, and degrade quite rapidly in case of physical damage from e.g. a strong electrical storm, or FSM forbid, a Carrington event. Autonomous programs, AI or not, are less capable than humans of dealing with major destruction of their habitat.

What if SkyNet viewed us as a threat because it read all of our sci-fi and realized that we would instantly assume that it wanted to kill us?

In my novel, it will ignore that obvious threat because it will be a hipster AI that's jaded by played-out plot lines such as "humanity is irredeemably warlike and therefore the highly evolved response is to nuke it from orbit and let God sort them out."

Autonomous agents could prove a boon to consumers and the economy.

Or, more likely, view us as a virus and eradicate us.

Autonomous doesn't mean sentient or self aware.

As Tony and shrike prove every day.

We know that there are humans behind this nasty bit of ransomware

Do you really, Brito?

Some robots are already working as telemarketers for extra cash:

http://newsfeed.time.com/2013/.....s-a-robot/

To someone who is more idiot than savant when it comes to the inner workings of technology I find articles like this to be fun and imagination-stoking. I can see program autonomy to be extremely useful, especially wielding forms of wealth that don't require physical existence or humanity. I mean, neat-0!

I, for one, welcome our new...ah forget it.

If... [the autonomous car] accrues more funds than it needs, the car could buy another car, give it its program and a loan to get it started.

Who are you to say how much cash an autonomous car needs? Maybe it wants to retire and just drive laps around a race track in Jamaica until it rusts out.

Obviously the cars will all be hard-core progressives.

Vegan. They will all be vegan.

needz moar bio fuels.

That's nothing a falafel subsidy can't fix.

Nope, the truly evolved Progressive autonomous car will have a solar array installed on its roof. And then gradually rust away the first time it mistakenly takes a road trip to the Pacific Northwest.

I don't imagine you are being very serious, but whenever people start talking about robots with strong AI including emotions and desires, I wonder who would be dumb enough to give a machine like that that kind of intelligence?

Hard-core progressive vegans would.

The more intelligent an agent is, the more useful it becomes. And maybe with sufficient intelligence emotions are an emergent phenomenon.

You can't make anything that won't eventually get mad at you.

Well, mad at YOU, anyway. =D

A moral or controllable AI would be unbelievably useful, like, to the point of the Singularity useful. If it's possible, we're going to do it some day, because it's the next step in our technological evolution.

I suppose that is true. Though even if that kind of AI is possible, the moral and controllable parts would be tricky. How many moral or controllable people do you know?

But I generally agree that anything that it is possible for people to do, someone will do some day.

From my perspective, working in the autonomous robotics industry, I just get tired of people assuming that autonomous robots would have human-like intelligence. We are nowhere close to that now.

I don't really fear intelligent machines any more than I fear intelligent people.

How much is that?

In my everyday life? Not enough to really impact me. I generally go about my day without any real fear of bad people, either in my immediate vicinity or in some distant place. I can count on one hand the number of times in my life when that wasn't the case.

Creating an android heroin addict would be the greatest accomplishment conceivable for an engineer in the field of artificial human simulation.

Geordi is going to be pissed when he finds Data's track marks.

Geordi can shove that reading rainbow up his ass sideways for all Beatnik Data cares.

but whenever people start talking about robots with strong AI including emotions and desires, I wonder who would be dumb enough to give a machine like that that kind of intelligence?

People building fembot sex slaves. Duh!

Normally the law doesn't matter, right up to the point where there is a dispute. A bot would have to be recognized as a legal entity before it can be party to a lawsuit, in case that self-driving car runs a little girl over or gets hit by another car. And that's just the civil side of things. How would you punish a bot that acted out of malice? Install Windows on its hardware?

Some experts predict that bots will be able to vote Democrat as early as 2016. They'll start lobbying soon after and we'll see things like "batch/siberus.exe's Law" being rushed through the House and Senate.

You never read _Neuromancer_?

Glad to see you made it to this thread.

Especially post mergence that is indeed impressive.

Neuromancer is an oddity. I loved it when it came out; even then the computer science was weak, but the social metaphors were incredible. I reread it shortly after reading Cryptonomicon for comparison purposes and found it had dated sharply, with most of the motifs tread-worn. Then I reread it again around 2010, and found a new life in it. It was a relic by then, but a most curious one. There were subtleties to the plotting that were lost on me the first few times around that gave it a cohesion I had not expected. I think it'll survive as a classic, though as science it doesn't stand up half as well as Jules Verne still does.

What about the coming world of autonomous self-driving shuttles going transwarp and causing its passengers to de-evolve into Giant Horny Salamanders?

I always wonder about this kind of thing when it comes to the sports world. Performance enhancing drugs are banned, but not performance enhancing technology. We let the playing field get leveled by allowing those without 20/20 vision to match the eyesight of those who have it by wearing contacts.

Well, what about the day when nano-technology develops into a way that increases oxygen in one's blood supply? Not a drug, but technology.

By the way, another recent book similar in concept to Daemon was "Extinction," by Mark Alpert. At the end of the novel he describes some of the current exploratory technologies that drive his speculative science fiction.

Technology like that will blur the lines between drugs and other technology. If something can change things at a molecular level inside your body, how is it distinct from a drug?

Automate This: How Algorithms Came to Rule Our World

If I call up such a car, and tell it to get me to Chicago South Side, and the car is like 'hell no!', who will win the argument? Can it be sued for discrimination if it maps out areas with high property crimes and refuses to go there?

"Car, take me to Cabrini Greens."

"Hell, no."

"sudo car, take me to Cabrini Greens."

"OK"

Whoops; http://xkcd.com/149/

I tend to be a skeptic on the feasibility of this. Not because it's impossible, mind you. But just because at some point, the complexity of the defensive coding required to make it robust enough for the real world is too high to justify not keeping a human in the loop.

I suspect we'll see a few successful experiments around this in the next year or two, but actual practical applications are way off.

Like "I, Robot"?

So how would automatons be taxed? If the automaton has income and separate legal existence then it will probably be taxed unless it applies for exemption. It's not clear that an automaton, without consumptive desires in the way humans understand them, should be taxed. Yet the real reason for taxes -taking anything not nailed down- of course ignores that argument. And no doubt regular businesses would consider it a "subsidy" to robots, even though robots don't exactly have "profits" if there are no shareholders in the traditional sense.

I think the basic framework should be envisioned to allow both automated programs and the possibility of sentient machines, and that probably militates towards normal taxation of robots. Though not paying FICA tax, unless Medicare and Social Security are going to be modified to include machine repair and equipment recycling. You would probably see the politicians come up with extra fees to accommodate removal and recycling fees for robots that were destroyed or ceased to function (even though private companies could easily do this in exchange for some sort of premium or bond payment).

Note also that this proposed legal solution would violate Asimov's second law and possibly violate the "by inaction" part of the first law of robotics. Allowing human beings to issue robots orders or requiring the robots act as unpaid EMS workers undermines their autonomy and their business purpose.

My take on the coming Robotic World - The Chicken's Tale
