Data Privacy: What Washington Doesn't Want You to Know

New, unbreakable codes offer people true communications privacy, for the first time. But the government is fighting to keep this amazing technology for itself.

SECRECY ORDER You are hereby notified that your application [for a patent] has been found to contain subject matter, the unauthorized disclosure of which might be detrimental to the national security, and you are ordered in nowise to publish or disclose the invention or any material information with respect thereto…in any way to any person not cognizant of the invention prior to the date of the order…

The inventors were shocked.

Working after hours and weekends in a garage laboratory in Seattle, funded out of their own pockets, Carl Nicolai, William Raike, David Miller, and Carl Quale had developed plans and prototypes for a new type of voice communications scrambler.

It had all started back in 1960, with an article in Analog magazine by Alfred Pfanstiehl suggesting that a new mode of transmitting signals through the electromagnetic spectrum could provide communications security. In traditional schemes, the receiver can easily tune in to the transmitter—for example, you only need to know the station's frequency to catch a radio broadcast. But in the technology described by Pfanstiehl, both transmitter and receiver would use pseudorandom wave forms (instead of the traditional sine waves), and only those with the exact, synchronized pseudorandom code could communicate.
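The principle Pfanstiehl described can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Phasorphone's actual design, which the article does not describe. It assumes a simple direct-sequence scheme in which each message bit is multiplied by a block of pseudorandom +1/-1 "chips" produced by a generator seeded with a shared secret. The function names, the spreading factor of 64, and the seed values are hypothetical.

```python
import random

CHIPS_PER_BIT = 64  # hypothetical spreading factor: chips transmitted per message bit

def chip_sequence(seed: int, length: int) -> list[int]:
    """Pseudorandom +1/-1 sequence; both ends derive it from the same shared seed."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(length)]

def spread(bits: list[int], seed: int) -> list[int]:
    """Map each bit to +1/-1 and multiply it by its own block of chips."""
    chips = chip_sequence(seed, len(bits) * CHIPS_PER_BIT)
    signal = []
    for i, bit in enumerate(bits):
        symbol = 1 if bit else -1
        block = chips[i * CHIPS_PER_BIT:(i + 1) * CHIPS_PER_BIT]
        signal.extend(symbol * c for c in block)
    return signal

def despread(signal: list[int], seed: int) -> list[int]:
    """Correlate each block with locally generated chips and keep the sign."""
    n_bits = len(signal) // CHIPS_PER_BIT
    chips = chip_sequence(seed, n_bits * CHIPS_PER_BIT)
    bits = []
    for i in range(n_bits):
        lo, hi = i * CHIPS_PER_BIT, (i + 1) * CHIPS_PER_BIT
        corr = sum(s * c for s, c in zip(signal[lo:hi], chips[lo:hi]))
        bits.append(1 if corr > 0 else 0)
    return bits

message = [1, 0, 1, 1, 0, 0, 1, 0]
sent = spread(message, seed=1978)
print(despread(sent, seed=1978) == message)  # True: receiver has the synchronized code
print(despread(sent, seed=1977))             # wrong seed: bits are essentially guesses
```

A receiver holding the same seed sums each block against its own copy of the chips and gets a total of exactly +64 or -64; with any other seed the totals are small by comparison and the recovered bits are little better than coin flips. Python's `random` module is used only for brevity; it is not a cryptographically secure generator.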

Carl Nicolai was intrigued. He convinced the others to join him in developing an application of Pfanstiehl's idea, and the result was their Phasorphone, a voice scrambler offering a moderately high degree of security for CB and Marine Band radio that could also be modified for use on telephones. With several companies expressing interest in the product, the inventors applied for a patent in October 1977. And they waited. Nearly six months later, in April 1978, the word came.

There was no explanation of who had ordered secrecy imposed on the patent application, or why. They turned to their patent attorneys. Although the order had come from the Patent Office, they learned, it had been decreed by an arm of the government called the National Security Agency. The attorneys began a slow process of communication with the NSA, attempting to get the order lifted or at least find out why it was imposed.

The penalty for violation of a secrecy order is two years in jail, a $10,000 fine, and loss of the patent. The inventors' attempts to begin marketing the Phasorphone were stopped cold.

They found someone to represent them directly in Washington, D.C.: Peter Olwell, a consultant who for many years had edited a newsletter on the civilian reactor program and had learned the ways of government agencies. The inventors wrote to the Patent Office requesting the necessary permit to disclose information about the patent to Olwell. And they waited—an uneasy period confused by word from the Patent Office that it was the Department of the Army that was responsible for the secrecy order. Olwell was later to learn from government files that the Army representative who had looked at the patent actually advised against secrecy and that the NSA was indeed the responsible agency. The inventors were growing understandably paranoid.

With efforts by Olwell and their patent attorneys showing no progress, Nicolai's group felt they had only one card left to play: the press. They took their story to Deborah Shapley, a writer for the journal Science, which reported it in its September 8, 1978, issue. An Associated Press write-up and other media coverage followed.

Sen. Warren Magnuson, who had written to the NSA on behalf of the inventors in June, wrote to the Secretary of Commerce in September:

It would seem only reasonable to expect that the applicants would be allowed a hearing to rebut the conclusions of the National Security Agency regarding the necessity for secrecy. I do not believe that the inventors should be deprived of their rights to their labors without having a full presentation of their views.

The NSA did not ask for such a presentation of the inventors' views. Then, as mysteriously as the secrecy order had arrived, came new word from the NSA: "The dangers of harm to the national security were less severe than originally perceived." The secrecy order was rescinded on October 11, 1978.

SECRECY AGENCY Like these inventors before they tangled with it, most Americans have never even heard of the National Security Agency. It is the government's ears on the world. Armed with work orders from other government agencies, conveyed through the US Intelligence Board, the NSA gathers foreign intelligence by listening in on communications, breaking foreign governments' codes, and monitoring electronic signals. It also has the task of ensuring the security of US government communications—devising codes, setting encryption standards for other agencies, and so on.

From the beginning, the NSA's existence has been shrouded in secrecy. It was created out of the Armed Forces Security Agency by a Top Secret presidential directive issued in 1952 by Pres. Harry Truman. Unlike the other intelligence agencies of the federal government, the NSA does not operate under a congressional charter defining its mission and limiting its power. In fact, to this day Truman's seven-page directive establishing the agency remains classified. Although technically a part of the Department of Defense, the NSA is not even listed in the Pentagon directory.

In spite of its low profile, the NSA is the largest of the government's intelligence agencies. Its annual appropriation is some $2 billion (CIA: $750 million; FBI: $584 million). It has a direct payroll of more than 20,000 people, and another 80,000 (largely military personnel) serve in the cryptologic departments of the armed forces, under NSA control. With the costs of these services added in, estimates put the NSA's share of the taxpayers' dollars at $15 billion a year.

At its headquarters in Fort Meade, Maryland, is housed some of the most sophisticated and expensive electronic equipment in the world. For the name of the game today in information and communications is electronics. It is this that underlies the NSA's use of patent secrecy orders and similar tools to limit the availability of communications security devices. And the NSA is working to obtain more power—to have a larger say in the government's funding of cryptographic research and to be able to classify the results of any cryptographic research. If it can't keep a tight rein on communications security outside the government, reasons the NSA, it will be unable to carry out its mission: to provide for the national security by conducting signals intelligence and cryptanalysis.

On the other side of this issue is a handful of inventors and researchers who are concerned that their rights to free speech and due process are being stepped on. They see their work as no threat to the national security but rather as a tool for the protection of individual privacy.

The shape of our future depends on how the issue is resolved. Will individuals control access to information, or will government—or is there some middle ground? Does the NSA need to limit communications security in order to provide for national security? If so, must individuals write off any hope of truly protecting their privacy in an electronic age? Or are the new developments so important and so hard to control that government must concede that the old ways of intelligence gathering are largely a thing of the past?

ELECTRONIC REVOLUTION It used to be that to communicate with people you either talked with them face-to-face or you sent them a written message. If the message had to be kept secure, you perhaps wrote it in code or sent it via a trusted courier. Whatever information you needed to store was also in written form. If you didn't want it snooped at or stolen, you locked it up in a safe.

Beginning in the mid-1800s with the introduction of the telegraph, all that changed. At an accelerating pace, we have come to rely more on electrons and less on paper and pen for conveying and storing information. Today there is scarcely a home in the United States that doesn't have a telephone, radio, and television, and scarcely a person whose vital statistics and more are not part of many computer data banks.

Unfortunately, electronic communications and data are particularly vulnerable to eavesdropping and tampering. Telephone wiretapping, for example, is easily accomplished. And physical wiretapping is becoming obsolete as an increasing amount of telephone traffic travels over microwave radio circuits. Although the equipment to intercept such transmissions is expensive, it is available. The Russians, for example, have used their Washington embassy, their San Francisco consulate, and their New York offices as bases for intercepting microwave transmissions.

Data in computer-readable (digital) form is even easier to snoop on than voice communications. It can be scanned for particular items—phone numbers, names, words, or phrases, for example. And it can be altered as well as tapped, by anyone with sufficient information about how the particular system works, providing growing opportunities for computer crime—for example, embezzlement (shifting funds from other accounts to the criminal's), fraud (entering false information to make a company look good, thereby raising the price of its stock), and theft of data (gaining access to industrial secrets, marketing plans, etc.).

Understandably, businesses are creating a growing demand for secure communications and data storage. Banks with Electronic Funds Transfer systems need to protect themselves against theft. Financial, personal, and medical information in data banks needs protection. Electronic sabotage must be defended against. Confidentiality and authenticity of messages—by phone or electronic mail—must be assured. Cable-TV networks using satellite transmission need to thwart pirating of their programs by unauthorized users. Mineral and oil exploration firms can't afford to let their competition know their plans and findings. The list of needs for encryption of information is nearly endless.

CODES FOR COMMONERS Fortunately, the same electronic revolution that has made possible advances in information collection and processing has also provided increasingly sophisticated means of ensuring data privacy. The age-old method of keeping secrets by putting information and messages into code has taken on new dimensions.

Any coding scheme consists of a set of rules by which messages or information are to be transformed. In a computer, all information—sentences, phone conversations, even pictures—is represented as a series of numbers. Encoding is accomplished by scrambling these numbers—in modern cryptography, using a set of rules consisting of elaborate mathematical functions. Decoding requires that the encryption procedure be reversed by acting on the coded message with the inverses of the encryption functions. (In a simple example, division is the inverse of multiplication.)
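The idea of encoding as an invertible mathematical function, with decoding as its inverse, can be shown with a deliberately weak toy cipher. This sketch assumes text is first turned into numbers (byte values 0 to 255), and each number is then multiplied by a secret key modulo 256; decoding multiplies by the key's modular inverse, the "division" that undoes the multiplication. The key value 77 is an arbitrary assumption, and a cipher this simple offers no real security.

```python
MODULUS = 256  # one byte per character
KEY = 77       # hypothetical secret key; must be odd so an inverse exists modulo 256

def encode(text: str, key: int = KEY) -> list[int]:
    """Turn text into numbers, then scramble each number by multiplying by the key."""
    return [(b * key) % MODULUS for b in text.encode("utf-8")]

def decode(numbers: list[int], key: int = KEY) -> str:
    """Apply the inverse function: multiply by the key's inverse modulo 256."""
    inverse = pow(key, -1, MODULUS)  # modular inverse; needs Python 3.8+
    return bytes((n * inverse) % MODULUS for n in numbers).decode("utf-8")

ciphertext = encode("ATTACK AT DAWN")
print(ciphertext[:4])      # [141, 68, 68, 141]
print(decode(ciphertext))  # ATTACK AT DAWN
```

The repeated letters in ATTACK come out as repeated numbers. Patterns like that are exactly what code-breakers exploit, which is why serious systems use far more elaborate functions.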

These functions could not be used to code and decode in any reasonable amount of time without the speed of a computer, and as computers and their electronic components become faster and cheaper, more and more complex encryption functions can be devised. The more elaborate they are, however, the more effort (computer time and power) is required to break the code. Some of the best encryption methods in use today are, in practical terms, unbreakable: by the time the code could be broken, the once-secret information would be useless.

But that's for state-of-the-art technology. Most private and commercial users, and most small countries' governments, cannot afford the ultimate in security and must settle for something considerably less. It doesn't take much figuring to see that any new development that promises to give these users a higher degree of security for a lower cost is likely to be seen as a threat by the government's electronic surveillance arm.

Carl Nicolai and his coinventors never could find out why the National Security Agency slapped a secrecy order on their Phasorphone. Daniel Silver, then NSA general counsel, had written to them with military logic: "As the reasons for concluding that disclosure would be detrimental to the national security are themselves classified information, unfortunately we cannot provide additional information on the basis for our conclusions."

Although the Phasorphone was a new type of voice scrambler, there were comparably secure devices on the market. Its projected cost, however, was much lower—$200, versus several thousand for existing devices. Fear of putting communications security within reach of many people may well have prompted the NSA's secrecy order.

When it was finally rescinded, only a few days remained before the legal deadline for filing for foreign patents. Their resources exhausted, the inventors could only file in Canada. A US patent was granted in February 1980, receiving top billing in the New York Times "Patent of the Week" feature.

The secrecy order fight left the four inventors frustrated and bitter. "The government employees had eight hours a day to spend on our case," says David Miller. "We had to earn a living, plus fight them. We ended up supporting ourselves and our lawyers, and—through our taxes—them and their lawyers." Development of the Phasorphone itself cost the inventors $33,000 (1978 dollars); lawyers' fees, another $30,000 so far.

CATCH-22 But secrecy orders are not the only tool of suppression at the NSA's disposal. While the Seattle group waited for their Phasorphone patent, they developed another privacy-protecting device: Cryptext. A cigarette-pack-sized encryption device that can be plugged into Radio Shack's TRS-80 and other microcomputers, it sold for $299 when it was introduced in 1979. The inventors were so sure of its security that they offered a prize of three ounces of gold to anyone who could crack its code (so far there have been no winners).

Having seen something of the patent routine, this time the group decided on other ways to protect their invention. Among other techniques, the components inside Cryptext have had their identifying numbers removed and are embedded in a special epoxy, making it highly costly to dissect a unit and find out how it works. (Similar methods and trade secrets are increasingly being used to protect electronic inventions these days. The average patent application takes about two and a half years to process, by which time the invention is often obsolete.)

Since no patent application was filed, the inventors didn't have to worry about another secrecy order. But there's a Catch-22. In January 1980 they received the following:

It has come to the attention of the Department of State that your company is engaged in the sale of a TRS-80 word processing system. We take this opportunity to advise you that cryptography must be licensed by the Department of State before it can be exported from the United States. Application for an export license is made to this office [the Office of Munitions Control] in accordance with the International Traffic in Arms Regulations (ITAR).

Under the Mutual Security Act of 1954, registration with the government is required of "every person who engages in the business of manufacturing, exporting, or importing any arms, ammunition, or implements of war, including technical data relating thereto." The US Munitions List, which defines what items are to be considered "arms, ammunition and implements of war," includes "speech scramblers, privacy devices, [and] cryptographic devices."

Thus the inventors learned that their device had been classified as an "implement of war." In order for them or any of their customers legally to send a Cryptext unit out of the country, an export license was needed. And in order to get a license, they would have to supply diagrams and a full explanation of how Cryptext works and maintain records on the acquisition and disposition of their product (that is, a customer list). The inventors found that the Office of Munitions Control refers all cryptography cases to a certain government agency for an opinion as to whether an export license should be required or issued…the NSA.

So far, the Seattle group has decided to forgo a foreign market rather than file for an export license. The penalty for an ITAR violation, by the way, is two years in prison and a $100,000 fine.

INSIDE THE NSA What the NSA wants, says Dean Smith, a former staff engineer for the agency, is "that they and they alone control all cryptography." On one level, he says, the NSA's push for control is the standard story of a bureaucracy defending its turf. Because the NSA is the US government's code-making and code-breaking agency, its people view themselves as the world's top experts in cryptography.

In fact, says Smith, "there are only about a dozen people in all of the NSA who are real cryptographic experts, and they talk only to each other. They're a closed society, intellectually inbred, and this greatly diminishes their competence."

Recent developments seem to bear this out. The public-key encryption system, for example, which Smith terms "a milestone—the most important cryptographic work in the century," was developed in the free academic community. Eclipsed by work done "outside," the NSA is increasingly falling behind and getting desperate. "They're trying to hold it back, control it—but they can't," says Smith.

Political pressures compromised the quality of work Smith saw performed by the agency. "Most of the NSA section heads know nothing about technology—just politics," claims Smith. "Their thinking style is more that of master psychologists than technologists."

One of the projects Smith worked on showed the results of such thinking. The aim was to secure all US (government and private) microwave transmissions against Soviet interception, and a committee composed largely of various government agency representatives (and no one from the communications industry) was appointed to study the matter. Smith's job was to evaluate the feasibility of their proposals.

One of the "solutions" would have wiped out the phone service in New York City, San Francisco, and Washington, D.C., by jamming the Soviet receivers—which would also jam ours. The solution finally chosen by the committee—classified information—was mediocre, at best. When Smith brought the problem to the attention of his superiors, he was told to shut up.

Smith had discussed the desired outcome with AT&T and found that an inherently secure technology was available if more phone service were switched from private lines to a WATS-like system. The service could both provide security and save money. But AT&T pointed out that its competitors would use the Federal Communications Commission to prevent the service from being offered, and the NSA wasn't very interested in the idea either. "It wouldn't have resulted in increased power for the NSA," explains Smith. "They wanted to force the carriers to encrypt the communications, with the NSA in control of the encryption process."

Smith is not surprised that many of the best minds won't even consider working for the NSA. The overt manifestations of control bother them too much, he says. A friend of his from Bell Labs was once scheduled for a job interview at NSA headquarters. He turned around and went home after a look at the electrified, barbed-wire fences and the Marine guards patrolling the complex.

SURVEILLANCE But protecting its reputation is not the only reason the NSA wants to control all cryptography. Mentioned to the agency's friends in Congress, like Howard Baker and Scoop Jackson, but not to the public, says Smith, is this factor: if the governments of the Third World ever get their hands on really secure communications technology, the NSA won't be able to spy on them anymore.

But the Third World is getting more secure, and recent cost-lowering developments promise to hasten the process. The major powers have had extremely secure systems for years. It has been estimated that the NSA can decode only about four percent of the transmissions it picks up; it is reduced now to gleaning most of its information from the volume and routing of messages. The NSA's attempts to keep secure communications out of Third World hands are a losing, rear-guard battle.

There is another reason the NSA top brass would like to control all cryptography, though, says Smith, and it is the deepest and most secretly held. It is not talked about in public, not even with trusted congressional friends, but is discussed behind closed doors at the NSA. It is the belief that no government can ever permit the citizens of the nation, or any sector of the nation, to be secure from the government itself—a concept of government, he points out, that can lead to the most ruthless and cynical abuses of power.

Not allowed to do a competent job, and faced with a government mentality that seeks control above everything else, Smith resigned. "I found that my job at NSA was causing a betrayal of my self and my country."

OVERREACTION The NSA's attempts to limit communications security and suppress public knowledge of cryptography are not new. During the 1960s the agency reportedly used threats of "extralegal" activities to block the production of a low-cost code machine. As detailed by columnist Jack Anderson, the developer, Victor Poor, was convinced that the NSA would firebomb his factory if he didn't cooperate with them. Although the code machine was not produced, the victory was short-lived: not long afterward, developers began building computerized coding devices. The microprocessor revolution had begun.

Undaunted, the NSA continued to wage war. According to the 1976 report of the Senate's Select Committee on Intelligence, the NSA maintained a file in the late '60s on a writer who had published materials describing the agency's work. According to the report, the NSA "had learned of the author's forthcoming publication and spent innumerable hours attempting to find a strategy to prevent its release, or at least lessen its impact. These discussions extended to the highest levels of the Agency, including the Director." Measures considered—"with varying degrees of seriousness"—included: planting critical reviews of the book, purchasing the copyright, hiring the author into the government, keeping him under surveillance, and breaking into his home.

Although the name of this author was not revealed by the NSA or the Senate committee, there is little doubt that the target was David Kahn, a journalism professor at a New York university and a reporter whose pet subject is cryptography. In 1967 he in fact published The Codebreakers: The Story of Secret Writing. A thousand pages long, it is the definitive history of cryptography through the post-World War II period. Although the NSA did not, according to the Senate report, carry out the measures already described, it did obtain the manuscript from the publisher and placed the author's name on its "watch list."

But "the most remarkable aspect of this entire episode," noted the Senate committee, "is that the conclusion reached as a result of the NSA's review of this manuscript was that it had been written almost entirely on the basis of materials already in the public domain. It is therefore accurate to describe the measures considered by NSA…as an 'overreaction.'"

What the Senate committee apparently did not take into account is the NSA's position: that the less the public knows about secret codes, the better. This attitude persists. Said NSA Director Vice-Adm. Bobby R. Inman recently: "There is a very real and critical danger that unrestrained public discussion of cryptologic matters will seriously damage the ability of this government to conduct signals intelligence and protect national security information from hostile exploitation."

The NSA suffers from a handicap, however, in convincing people that its legitimate intelligence activities warrant such grave concern about the prospect of individuals and businesses obtaining communications security. In the wake of Watergate the public learned that, like the government's other intelligence agencies, the NSA had for years been directing its operations against American citizens without any requirement that it first show probable cause in the courts.

ILLEGAL SNOOPING The same Senate Select Committee that uncovered the overreaction to author David Kahn's work learned from the NSA director and others within the agency that the NSA and its predecessor, the Armed Forces Security Agency, had for 30 years been intercepting telegrams sent overseas by Americans. This program was made possible by the cooperation of IT&T, RCA, and Western Union, the major telegraph companies. At its height, the program (codenamed Shamrock) produced 150,000 telegrams a month for NSA analysts.

The Senate committee also learned that a past director of the NSA, Adm. Noel Gayler, had participated in drawing up, and approved of, the so-called Huston Plan to expand the role of intelligence agencies. The plan, proposed secretly to President Nixon in 1970, would have given these agencies the authority (on executive order) to read Americans' international mail, break into the homes of persons considered a security risk by the president, and otherwise intensify surveillance of selected Americans. Several of its recommendations were clearly unconstitutional, but the NSA admitted that "it didn't consider [this] at the time."

It was also revealed to the Senate committee that the watch list that had included David Kahn's name had been maintained by the NSA from the early 1960s until 1973. The agency monitored the international communications of individuals and groups on the list, disseminating the intercepted messages to interested government agencies. (The NSA's present director, Admiral Inman, hotly denies that the NSA has ever listened in on "Americans' private telephone calls." When questioned, however, he concedes that the NSA did, as admitted to the Senate committee, monitor phone calls between the United States and South America, looking for drug traffic. But he hastens to add that in these cases one terminal was in a foreign country.)

The watch list was started, according to NSA testimony, when domestic law enforcement agencies in the early '60s sought the NSA's help in gathering information on American citizens traveling to Cuba. Soon the Bureau of Narcotics and Dangerous Drugs (now the Drug Enforcement Administration), the FBI, the CIA, the Defense Intelligence Agency, and the Secret Service were submitting names to the NSA's watch list, which came to include 1,650 Americans. In 1969 this surveillance was formalized as project Minaret.

Today, although the agency claims to have sworn off such practices as Shamrock and Minaret, it continues what has been termed a "vacuum cleaner approach" to gathering data. Americans' communications to and from other countries are still picked up "inadvertently." Those containing evidence of certain federal crimes are turned over to other government agencies. It was in this way that Billy Carter's hands were caught in the Libyan cookie jar last summer. As reported by the San Francisco Chronicle, "International telex messages, apparently intercepted by U.S. electronic intelligence collectors, provided the White House with confirmation that an oil deal between Billy Carter and Libya's Khadafy regime was in the works."

It is estimated that 400,000 telephone calls to and from the United States are made each day. Several articles in major media have recently reported that the NSA listens in on these calls selectively, using computers programmed to switch on tape recorders the moment a given word or phrase is used.

Electronics experts dispute this claim. Selective monitoring of telex messages is feasible, they say, because the intercepted message is already in digital (computer-readable) form. But selectively recording phone calls would require a computer to recognize spoken words, and that is far beyond the state of the art.
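The experts' distinction is easy to see in code: once a message is already text, picking out the ones that contain listed words is a trivial search. The sketch below is hypothetical throughout; the watch words and messages are made up for illustration and imply nothing about how any agency actually filters traffic.

```python
import re

# Hypothetical watch words, made up for this illustration.
WATCH_WORDS = {"cargo", "payment"}

def flag(message: str, watch_words: set[str] = WATCH_WORDS) -> set[str]:
    """Return the watch words that occur as whole words in a text message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return words & watch_words

telexes = [
    "CONFIRM CARGO PAYMENT FRIDAY",
    "HAPPY BIRTHDAY STOP SEE YOU SOON",
]
for telex in telexes:
    hits = flag(telex)
    if hits:
        print("record:", telex, sorted(hits))  # record: CONFIRM CARGO PAYMENT FRIDAY ['cargo', 'payment']
```

Nothing comparable works on raw audio. A computer would first have to turn the spoken words into text, which is precisely the step the experts consider out of reach.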

But who knows? In the area of military intelligence, civilians are routinely kept in the dark about the most advanced technology. For example, satellite photos available to the American public and the scientific community from the government's Landsat program are limited to showing objects that are 80 meters across on the ground. A few 30-meter reconnaissance photos were released recently. Yet according to a recent issue of Aviation Week, the Air Force's current technology—technology it's keeping under wraps—provides resolution down to six inches.

CODING CONTROVERSY The NSA's record of eavesdropping on American citizens is not the only aspect of its activities to have come before the Senate Select Committee on Intelligence. In 1975 the NSA became embroiled in a controversy over what some viewed as an attempt to reduce the degree of communications privacy available outside its own walls.

In 1968 the National Bureau of Standards (NBS) began working toward development of a standard encoding system to provide security for unclassified government data stored and transmitted by computer. After soliciting proposals in 1973 and 1974, the NBS decided to adopt as a Data Encryption Standard (DES) a coding system submitted by IBM. The NSA, as the government's cryptographic arm, had been in on the decision and had in fact convinced IBM to reduce the key size of the DES, thus reducing its security level.

Almost immediately, computer scientists and engineers working on encryption systems let it be known through the scientific press that they had doubts about the security of the DES. The National Bureau of Standards responded with two workshops on the issue.

At the first, in April 1976, the NBS maintained that with existing machinery it would take 17,000 years to break the DES code by exhaustive search, that is, by trying every possible key on the encrypted data until one produced the uncoded text. Most of the workshop participants, however, argued that it would be fairly easy to construct a special-purpose computer to attack the DES; their estimates of the time required to build the equipment and crack the code ranged from two and a half to 20 years, not 17,000. The cost of such devices was put at $10 million to $12 million, a small amount by government standards.
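The disagreement was over speed, not arithmetic. DES uses a 56-bit key, so an exhaustive search must try up to 2^56 (about 7.2 × 10^16) keys, and the time required is simply that number divided by how many keys per second the attacker's equipment can test. The sketch below tabulates this for a few search rates; the rates are hypothetical round numbers, not figures from the NBS or the workshop participants.

```python
DES_KEY_BITS = 56                      # size of the DES key
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def exhaustive_search_years(key_bits: int, keys_per_second: float) -> float:
    """Worst-case years to try every possible key (on average the key turns up halfway)."""
    return 2 ** key_bits / keys_per_second / SECONDS_PER_YEAR

# Hypothetical rates: a slow general-purpose machine, a faster one,
# and a large array of special-purpose chips working in parallel.
for rate in (1e6, 1e9, 1e12):
    years = exhaustive_search_years(DES_KEY_BITS, rate)
    print(f"{rate:.0e} keys/second: {years:,.4f} years")
```

Every added key bit doubles the work, which is why shortening the DES key mattered so much to its critics. In the other direction, hardware that tests many keys at once divides the time, presumably the advantage a special-purpose machine would exploit.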

At a second workshop in September 1977 on the mathematical properties of the DES algorithm, participants learned that IBM was unwilling to reveal some information about its development—at the NSA's request. Some cryptographers began to worry that the NSA, in helping IBM with the DES, might have inserted a mathematical procedure making the DES easy for the NSA to break.

It wasn't as unreasonable or paranoid as it may sound. In between the two DES workshops, a scare had been thrown into the entire civilian cryptographic community. In July 1977 an NSA employee, one Joseph A. Meyer, wrote a letter to the secretary of the publications board of the Institute of Electrical and Electronics Engineers (IEEE). Some of the IEEE members' discussions and publications on the subject of cryptography, he warned, could be in violation of the International Traffic in Arms Regulations. Meyer's letter suggested that researchers obtain permission for meetings and publications from the Department of State's Office of Munitions Control, effectively giving the NSA control over the release of their work, since the OMC routinely refers all cryptographic matters to the NSA. Meyer's letter was picked up by both the popular and the scientific press, with a chilling effect on cryptographic researchers across the country.

With all this as background, in late 1977 the Senate Select Committee on Intelligence decided to look into the matter of NSA involvement with scientists working in the field of cryptography. The unclassified summary of its classified study reported the committee's conclusions: Joseph Meyer had acted on his own, and not on NSA orders, in writing to IEEE; federal regulations pertaining to cryptology are vague and ambiguous and therefore "not conducive to scholarly work"; although the NSA did convince IBM to reduce the key size of the DES, IBM agreed that the final key size was adequate; the NSA did not tamper with it to make it vulnerable to NSA code-breakers; and the majority of scientists consulted said that the DES would provide security for 5-10 years.

SECRET CODES GO PUBLIC Meanwhile, the NSA's domination of its field had been dealt a blow that may well prove fatal. In 1976 two Stanford University researchers, Martin Hellman and Whitfield Diffie, came up with a radically new kind of encryption system. In contrast to traditional schemes, a person's encoding formula can be made public so that anyone can send coded messages (but only the receiver can decode them). Hence the name of the system: public-key encryption.

The concept is an outgrowth of recent work in theoretical mathematics involving "trapdoor one-way functions." Such a function is easy to compute, but its inverse is impossible—or at least computationally infeasible—to derive unless specific information (the "trapdoor") used in designing the function is known. When applied to cryptography, such functions and their inverses can be used to provide codes with two separate keys—one to encode, the other to decode—and yet someone knowing only the encoding key cannot compute the other.
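A trapdoor one-way function can be made concrete with a toy example. The sketch below is a minimal illustration, assuming textbook-sized numbers (the primes 61 and 53 and the exponent 17) in the style of the prime-number scheme described next; real keys use primes of a hundred digits or so. Computing `f` is cheap for anyone who knows `N` and `E`. Undoing it takes either the trapdoor (the two primes) or a brute-force search that works here only because the numbers are tiny. All names are hypothetical.

```python
# Toy trapdoor one-way function in the RSA style (illustrative numbers only).
P, Q = 61, 53   # the secret "trapdoor"
N = P * Q       # 3233, published
E = 17          # published exponent

def f(x: int) -> int:
    """Forward direction: easy for anyone who knows N and E."""
    return pow(x, E, N)

def invert_with_trapdoor(y: int, p: int, q: int) -> int:
    """Inverse using the trapdoor: p and q yield the undoing exponent directly."""
    phi = (p - 1) * (q - 1)
    d = pow(E, -1, phi)  # modular inverse of E (Python 3.8+)
    return pow(y, d, p * q)

def invert_by_search(y: int) -> int:
    """Inverse without the trapdoor: try every candidate in turn.
    Feasible here only because N is tiny."""
    for x in range(N):
        if f(x) == y:
            return x
    raise ValueError("no preimage found")

y = f(65)
print(y)                              # 2790
print(invert_with_trapdoor(y, P, Q))  # 65
print(invert_by_search(y))            # 65
```

With the trapdoor, inversion is a single modular exponentiation. Without it, the search grows with `N`, which is what makes a full-size key impractical to attack this way.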

Public-key encryption slipped through the back door while the government cryptographers were guarding the front. An unpredictable outgrowth of purely theoretical mathematics, its development could be neither foreseen nor prevented. Within months of Hellman and Diffie's publication of the basic theory, a group of MIT researchers headed by Ronald Rivest had designed a practical implementation based on multiplying large prime numbers (numbers that can be divided evenly only by themselves and 1, such as 2, 3, 5, 7, 11, and so on, ad infinitum).

Although methods have recently been developed for finding large primes by computer, and a computer can multiply them in a fraction of a second, experts concur that it would take millions of centuries for today's best computers to derive the original primes if only their product (a number of 100 or so digits) were known. So the encoding key would include the product, the decoding key would reverse the coding process using the original prime numbers, and no unintended receiver could decipher the message.
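The gap between multiplying primes and recovering them shows up even at toy scale. This sketch assumes the crudest possible attack, trial division, purely to show the trend. It is not a model of the best factoring methods. The primes are Mersenne primes of the form 2**k - 1, picked only because their primality is well established.

```python
# Multiplying two primes is one operation; recovering them by trial division
# takes work that grows with the size of the smaller prime.

def factor_by_trial_division(n: int):
    """Return (p, q, steps): the smallest odd factor p, its cofactor q, and
    how many candidate divisors were tried. Assumes n is odd and composite."""
    steps = 0
    d = 3
    while d * d <= n:
        steps += 1
        if n % d == 0:
            return d, n // d, steps
        d += 2
    raise ValueError("no odd factor found below the square root")

# Known primes of increasing size (Mersenne primes 2**k - 1).
pairs = [(127, 8191), (8191, 131071), (131071, 524287)]
for p, q in pairs:
    n = p * q  # the easy direction
    found_p, found_q, steps = factor_by_trial_division(n)
    print(f"{len(str(n)):>2}-digit product: {steps} trial divisions "
          f"to recover {found_p} x {found_q}")
```

The number of trial divisions tracks the smaller prime, so each extra digit in that prime multiplies the work roughly tenfold. The multiplication itself stays a single operation.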

Alternative groups of one-way functions have also been proposed. Work is under way to develop single microelectronic chips incorporating the public-key technology (thus making encryption faster). And several companies are implementing applications of the system. The novel features of public-key systems make them especially adaptable to certain uses (see sidebar at end), and with most of the essential theory widely published, it's safe to say that public-key systems will not—cannot—be kept from the public.

MORE SECRECY The NSA, however, is not about to give up. In April 1978 the agency imposed two patent secrecy orders: one on the Seattle group's voice scrambler, the other on a nonlinear "stream" cipher developed by George Davida of the University of Wisconsin at Milwaukee under a National Science Foundation (NSF) grant. University of Wisconsin Chancellor Werner Baum publicly protested the secrecy order, and a few weeks later it was rescinded.

But Baum realized he had stumbled onto a larger issue. In a letter to the NSF, quoted in a July 1978 issue of Science, he set out his concerns:

At the very least, an effort should be made to develop minimal due process guarantees for individuals who are threatened with a secrecy order. The burden of proof should be on the government to show why a citizen's constitutional rights must be abridged in the interests of 'national security.'

Just a few weeks earlier an issue of U.S. News and World Report had featured a five-page cover story on the National Security Agency, entitled "Eavesdropping on the World's Secrets." Combined with all the flak about the DES, secrecy orders, and Meyer's letter, it must have seemed to the NSA that the roof and walls of its Black Chamber were caving in.

Admiral Inman, director of the NSA since July 1977, contacted Science magazine in October, asking for a dialogue with the academic community regarding cryptography research. The move was unprecedented—it was the first press interview ever granted by a director of the NSA. The agency's concern, said Inman, was with protecting valid national security interests, and he wanted "some thoughtful discussion of what can be done between the two extremes of 'that's classified' and 'that's academic freedom.'"

He suggested that the authority of the Atomic Energy Commission (whose functions have since passed to the Department of Energy and the Nuclear Regulatory Commission) over atomic energy research be taken as a model. (Under the Atomic Energy Act of 1954, these agencies have the power to classify any work they believe will endanger the country's atomic energy secrets.)

RESEARCH CONTROLS The University of Wisconsin's Werner Baum picked up Inman's call for a dialogue. The result was a public cryptography study group, funded by the National Science Foundation.

A preliminary meeting in May 1979 laid out the issues. Inman and Daniel Silver, NSA general counsel, as well as two NSA research directors, attended. The other participants were mostly representatives of various scientific, mathematical, and academic societies. George Davida found himself the only nongovernment cryptography researcher in the group.

Davida came away with the impression that the group had taken it as its task to come up with recommendations the NSA would like. Silver said that the good of the country requires "repressive legislation," and with the country moving to the right, he suggested, the academics would probably be able to make a better deal with the NSA than with Congress.

The study group, now formally constituted, met again in March and May 1980. Inman sent a statement making the NSA's position clear. The NSA was worried about anything with the potential to compromise its cryptanalytic work or to improve foreign governments' cryptography. Existing statutory tools, namely patent secrecy and export laws, were insufficient because they applied only to devices and directly related information and technology. (That is, he specified, they did not apply to the information in scholarly papers, articles, or conferences unrelated to specific hardware.) And criminal statutes applied only to classified material. Again, the Atomic Energy Commission was put forth as a precedent.

While Davida saw it as very dangerous, his academic colleagues saw nothing wrong with the "exercise" of considering legislation to control cryptography research. A subcommittee chaired by Ira M. Heyman, chancellor of the University of California, Berkeley, was charged with outlining a system for prior restraint on publications relating to cryptography. They turned to the NSA's Daniel Schwartz (who had replaced Silver as general counsel) to recommend an approach.

The subcommittee's report, which was approved by all study group members except Davida at an October 1980 meeting, outlines a system that "is largely, but not completely, voluntary. Protected cryptographic information would be defined as narrowly as possible. Authors and publishers (including professional organizations) would be asked on a voluntary basis to submit prospective articles containing such information to NSA for review.…NSA could…seek a restraining order [on publication] in a federal district court.…[T]he government would be enabled to seek a court-enforceable civil investigative demand to obtain copies of articles about which it hears, but which were not submitted."

Following the October meeting, Davida wrote to the other group members, expressing his "deep concern about the failure of the committee to address the fundamental issues." Under the proposed rules, says Davida, the only papers to be submitted for NSA review would be those relating to existing government codes, ciphers, and cryptographic systems. But specific information on those systems is highly classified, meaning that essentially all work in cryptography must be submitted for review and potential restraint.

Another result of the proposed rules is to make it easier for the government to impose patent secrecy orders. No secrecy order can be put on an invention whose description has been previously published, but with the new rules, publication could be prohibited.

Davida is also disturbed that the group's report will not be submitted to cryptography researchers for review. He fears that it will be used as justification and "expert opinion" when the NSA seeks court restraining orders.

The emphasis on court orders signals a shift from the NSA's earlier call for legislation. This shift is mirrored in testimony by Inman to a congressional committee and in conversation. It may indicate the NSA's unwillingness to open up the subject of cryptography to the public hearings that proposed legislation would bring.

CONFLICT AT THE TOP Meanwhile, the House Subcommittee on Government Information and Individual Rights had set out to study the authority of government to classify, restrict, or claim ownership over privately generated information. The committee has particularly focused on patent secrecy orders, the Atomic Energy Act, and export controls, including ITAR. The committee's final report is expected to contain many recommendations for changes at the legislative, executive, and administrative levels of government.

The White House, too, has been deeply concerned with issues of data and communications security and control of cryptography. The concern is both for safeguarding government-held data on US citizens and for protecting US government telecommunications from foreign interception.

In a November 1979 memo, the president's science advisor asked the secretaries of the Defense and Commerce departments to draft a policy regarding cryptography research. Elements to be considered included current practices and future needs for cryptography in the private sector, the issue of academic freedom, and the NSA's twin missions of securing the US government's communications and intercepting those of foreign governments. But joint discussions have been "very painful," according to a Commerce participant, who sees a great conflict between the NSA's desire to maintain its authority and Commerce's obligation to protect people's constitutional rights and to look out for the interests of the nation's businesses.

Several studies on encryption, commissioned by the National Telecommunications and Information Administration (a part of the Commerce Department), have just been completed or are nearing completion. These studies and the policy proposals from Defense and Commerce go to the president's science advisor, who will then be under pressure from various groups seeking to influence the government's policy on cryptography.

Even the Federal Communications Commission has gotten into the act. Meetings on the question of privacy (including communications and data bases) are ongoing. In keeping with the FCC's recent trend toward deregulation, an FCC official says the commission will not stand in the way of anyone offering encryption devices or services. There is a possibility, however, that the FCC will require encryption by certain carriers.

Obviously, a lot of people have a lot at stake in federal policy on cryptography—or, for that matter, on any technology. The existing system, including patent secrecy orders, ITAR, and government funding of research, has been good to the military-industrial complex. For the Defense Department and the big corporations who are the major defense contractors, it's been a cozy arrangement, and it is illuminating to see how the game has been played by the big boys.

THE SECRECY GAME Take the patent secrecy order process. Under the Invention Secrecy Act, each application that comes into the Patent Office is examined to see whether disclosure of the information "might, in the opinion of the Commissioner, be detrimental to the national security." If so, the application is submitted to the appropriate government agencies for inspection.

A Patent Office official says that the majority of these involve either the Defense Department or agencies dealing with atomic energy, but any government agency with classifying power—for example, Treasury, State, and Agriculture—can request a secrecy order. The executive branch is not normally involved, says the Patent Office official, "but it would be hard to say no to the president."

If any government agency inspecting the patent application finds it potentially dangerous, a secrecy order is issued through the Patent Office. The applicant has the right to apply for compensation for damage caused by the secrecy order or for governmental use of the invention (though there is no requirement that the government automatically inform the inventor of such use).

The number of orders issued in a year has been declining. There were 292 in 1978, 241 in 1979. In general, roughly a quarter of the secrecy orders are imposed on foreign applications. Of the domestic patents under secrecy, roughly a fifth are privately funded inventions; the rest are government-funded. A little pencil-pushing shows that fewer than 50 secrecy orders are imposed each year on privately funded inventions. (My estimate is 44 in 1978, 36 in 1979.) But this is just the tip of the iceberg.

Over a hundred times that many applications are scrutinized by the various government agencies and considered for secrecy. Queries to the Patent Office reveal that 5,671 applications in 1978 and 4,478 in 1979 were shipped out to be studied by people at the Defense Department and other government agencies.
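The pencil-pushing behind these figures can be checked directly. This short sketch takes the rough shares quoted above (a quarter of orders foreign, a fifth of the domestic remainder privately funded) as exact fractions, which is an assumption, and compares the result with the number of applications sent out for review.

```python
# Back-of-the-envelope estimate of secrecy orders on privately funded inventions.
# ASSUMPTION: "roughly a quarter" and "roughly a fifth" are treated as exact.
secrecy_orders = {1978: 292, 1979: 241}
applications_reviewed = {1978: 5671, 1979: 4478}

FOREIGN_SHARE = 1 / 4
PRIVATE_SHARE_OF_DOMESTIC = 1 / 5

def private_orders(total: int) -> int:
    """Orders on domestic, privately funded inventions, rounded to a whole number."""
    domestic = total * (1 - FOREIGN_SHARE)
    return round(domestic * PRIVATE_SHARE_OF_DOMESTIC)

for year, total in secrecy_orders.items():
    private = private_orders(total)
    ratio = applications_reviewed[year] / private
    print(f"{year}: ~{private} private orders; "
          f"{ratio:.0f}x as many applications reviewed")
```

This reproduces the estimates of 44 and 36 and gives ratios of about 129 and 124, consistent with "over a hundred times."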

These same people, in many cases, have close contact with government, government-contract, or government-funded researchers. The knowledge they gain from studying patent applications must, by the workings of the mind, become integrated with their overall knowledge. Even with the best intentions, they couldn't help but make use of it. And it would be politically naive to believe that all of the people with access to the patent applications have the best intentions. Add to this the fact that the inventor is never told that his or her application was considered for secrecy unless a secrecy order is imposed, and you have the scenario for a massive, day-to-day siphoning of state-of-the-art technological knowledge.

The major defense contractors, of course, are not hurt by this. With their close ties and a revolving door in personnel between government and business, they're in a position to benefit. Secrecy orders on their own inventions cause no hardship; they were aiming at the government market anyway, and secrecy order or no secrecy order, the government can and will still buy their products.

In fact, attached to every secrecy order is a "permit" advising the inventor that he is allowed to disclose the offending subject matter to "any officer or employee of any department, independent agency, or bureau of the Government of the United States" or to anyone working with the inventor "whose duties involve cooperation in the development, manufacture or use of the subject matter by or for the Government of the United States."

One source familiar with the defense industry-Patent Office routine says that secrecy orders are sometimes even triggered purposefully by these companies. If an invention is declared secret, it automatically freezes any other similar patent application that might be filed. Meanwhile, the original company can produce under the secrecy order for the government, and when the technique or device is no longer "detrimental to the national security" and a commercial market exists, the company is ready to move.

International Traffic in Arms Regulations can be handled in much the same way. The government and the defense contractors do each other favors—the government helps them market not-too-sensitive equipment to friendly foreign governments, and the defense contractors are generally cooperative in not trying to market what the government doesn't approve.

But cracks are appearing in this tidy system. The revolution in cryptography and communication is changing the stakes in the game, and it's becoming too costly for the companies to play.

The country that has become our chief technological rival—Japan—has no government secrets. They are simply not allowed under Japan's constitution, which we helped write after World War II. So in Japan there are no patent secrecy orders. Its defense industry (as a share of GNP) is less than a fifth the size of ours. There is no significant government funding of research in Japan: only about five percent is government-supported. And the Japanese are beginning to beat us at our own game.

If our government tries to put a lid on secure communications technology in this country, who will be developing it and selling it to us? American businesses are beginning to wake up to the fact that they could be shut out of a very profitable area.

But they have another reason to want private, commercial work in cryptography to thrive: they themselves need data and communications security in order to survive. They need it against nosy competitors and larcenous computer whizzes but also against governments. As David Ignatius reported in the Wall Street Journal:

The bottom line for corporate managers is that, unless they take special encryption precautions, some of their international messages are probably winding up in NSA's computers and in the intelligence files of the Soviets. In coming months, U.S. companies may have to decide whether they want more privacy—and whether more protection would be worth the possible cost of a less aggressive U.S. intelligence effort.

PURSE STRINGS CONTROL One would think that after several years of stirring up a hornets' nest, the NSA would lie low during the extensive policy review currently in progress. Not so. The agency continues to push for control over cryptography research.

Len Adleman, one of Ronald Rivest's colleagues at MIT, applied for a grant from the National Science Foundation for research. The proposal was forwarded to the NSA for its opinion—a practice that has been going on for at least three years, according to the NSF. Most of Adleman's proposal involved basic research in complexity theory and number theory. Part of it was more specific, dealing with the use of cryptography to solve various problems of public security and privacy, including protecting data bases and phone communications and preventing computer fraud.

After reviewing Adleman's proposal, the NSA expressed an interest in funding the latter portion. The NSF informed Adleman that it would not fund this portion, and he later received a call from NSA's Inman offering the agency's support.

Inman has expressed concern that the NSA might lose what he believes to be its edge in cryptography. He is actively looking for more academic researchers to bring under the NSA's wing. "We just need two or three people who aren't scared to death of us," he said to Science in August 1980.

Adleman was not about to become one of those two or three people. "In the present climate, I would not accept funds from the NSA," he told Science.

Early in October the NSF and the NSA agreed that, at least in Adleman's case, there would be a choice of the source of funding: NSF, NSA, or both. Adleman says that he will accept funding from the NSF only, but this may not be enough to prevent one of the things he feared from the NSA: classification of his work. Jack Renirie, with the NSF Public Information Office, says that controls on or classification of NSF-supported work are certainly conceivable.

Other researchers are concerned that the government's attempts to control cryptography will stifle basic research in computer science, mathematics, and other related fields. And researchers are coming to realize that what the government funds, it controls. Nearly half of all scientific and technological research and development in this country is funded by the federal government. In the area of basic research, federal funding is nearly 70 percent of the total.

CAN IT WORK? Even if you take the protection of US government communications and the interception of foreign ones as your highest goals, as the NSA presumably does, there are problems with prior restraints and other controls on cryptography. For one thing, they cannot work in the long run. "It's folly to think that you could stop people from getting secure codes," says Robert Lucky of Bell Labs. "The field of cryptography has opened up completely; you can't stop the knowledge."

The days of snooping on the secrets of other countries are over. The beginning of the end came when the NSA was no longer able to break the major powers' codes, and the end of the end is in sight. The Third World will have communications security. If they don't get it from us, they can turn to other countries or develop extremely good cryptographic systems themselves.

For worriers at the NSA, there is a bright side to this growth in security. If Third World countries are secure from our snooping, they'll also be secure from the Soviets'. They will be better able to chart an independent course, less susceptible to bullying and intimidation. Surely that should work in our favor.

The most that controls on cryptographic developments can purchase for the US government is a short-term reprieve—but at a considerable cost to the security and the liberty of American citizens. Unlike atomic energy, which puts incredible, destructive power in the hands of a few, cryptography has the potential to protect many individuals from control or manipulation by others. And the same factors that lead inexorably to security for foreign communications also lead to security for US communications. Fortunately, our ability to construct secure codes is far outstripping our ability to break them. Given the nature of the mathematics involved in modern cryptography, it looks like this trend will continue.

Only if we allow unfettered research and development to continue in this country, however, can we continue to feel assured. Legal suppression of cryptography will only serve to drive it underground, into the hands of computer criminals, and out of the country. And, as researcher Rivest points out, it would have the unfortunate effect of "setting a legal precedent that would be damaging to our institutions just for a transitory perceived 'need.'"

Even the existing laws leave too little room for individual rights. The patent secrecy laws, passed in 1917 and revised in 1941 and 1952, should be recognized for what they are—wartime legislation. Lee Ann Gilbert points out in a paper to be published this summer in a law journal that a secrecy order imposes prior restraint on the applicant's First Amendment freedom to publish, amounts to a taking of private property by eminent domain without adequate provisions for compensation, and is a denial of due process since there is no judicial approval or review of secrecy orders. "Unless the basic constitutional maladies of the current system are cured," writes Gilbert, "the government defense agencies will have succeeded in subverting the patent laws, which the framers of the Constitution intended to benefit the general public, to their own purposes."

Similar problems afflict the International Traffic in Arms Regulations. In a recent memo prepared by the Justice Department, Assistant Attorney General John Harmon advised: "It is our view that the existing provisions of the ITAR are unconstitutional insofar as they establish prior restraint on disclosure of cryptographic ideas and information developed by scientists and mathematicians in the private sector."

The memo identifies two fundamental flaws in ITAR: "first, the standards governing the issuance or denial of licenses are not sufficiently precise to guard against arbitrary and inconsistent administrative actions. Second, there is no mechanism established to provide prompt judicial review…in which the government will bear the burden of justifying its decisions."

The memo also notes that "the showing necessary to sustain a prior restraint on protected expression is an onerous one. The [Supreme] Court held in the Pentagon Papers case that the government's allegations of grave danger to the national security provided an insufficient foundation for enjoining disclosure by the Washington Post and New York Times of classified documents concerning the United States' activities in Vietnam."

SECURITY FOR ALL When the Pentagon Papers were published, it turned out not to be the threat to national security the government had claimed. And when the American public learned of all that had been done and planned in the name of national security by the Nixon administration, they became understandably suspicious of such hand-wringing. Suppression of cryptography in the vain hope of keeping its results from widespread use can only further debase the coin of national security.

Yet there is a valid component of what may appropriately be viewed as national security, which the NSA and similar agencies have overlooked. That component is privacy. Without the right to privacy and the tools for maintaining it, there is no security for the American people—for the government, perhaps, but not for the people.

There appears to be a growing awareness, shared by almost everyone except the NSA, that cryptography is too important to be controlled by the government. And the NSA—for all the good intentions of its director—seems to be increasingly viewed as a bully with whom no one wants to play any more. Dr. Robert Lucky, who once served as a liaison between IEEE and the office of the president's science advisor, reports a new consensus in IEEE: "We now believe that we should not try to work out any 'agreement' with the government—we only stand to lose the rights we now have." Officials in the Commerce Department are likewise wary of the NSA and, while leery of being directly quoted, are surprisingly informative and helpful. They want the public to be aware of the costs of controlling cryptography.

The electronic age we have entered truly is a revolution, requiring adjustments all across the spectrum of human life. It is not extraordinary, under the circumstances, that government itself must change. With the increasing sophistication of secure communications technology, one of the NSA's tasks—protecting US government communications—will become easier. And another task—analyzing foreign communications—will become virtually impossible.

But knowing the nature of bureaucracies, the NSA can be expected to fight tooth and nail to retain, and even try to enlarge, its power and its budget, playing the worn card of "national security" for all it's worth. "Perhaps it is a universal truth that the loss of liberty at home is to be charged to provisions against danger—real or pretended—from abroad," James Madison wrote to Thomas Jefferson two centuries ago. Some things have not been changed by the electronic revolution.

Sylvia Sanders formerly worked for a newspaper in Washington state. She and her husband presently operate a farm in Iowa—an enterprise to which they hope to apply their new microcomputer.

THE TERMINOLOGY OF SECRETS • Cryptography (from the Greek kryptos hidden + -graphia writing): the science of transforming data or messages (plain text) into a "secret" or secure form that is unintelligible to anyone but the intended receiver.

• Encryption (or enciphering or coding): the process of transforming information into secret form.

• Decryption (or deciphering or decoding): the process of turning the encrypted information back into readable form.

• Key: the specific transformation system used to encrypt or decrypt a message.

• Cryptanalysis: the science of decrypting a secret message or breaking a code without the key.

• Cryptology: cryptography and cryptanalysis.

Readers who are interested in learning more about cryptography may want to look at The Codebreakers: The Story of Secret Writing, by David Kahn (Macmillan, 1967), for history, and "Privacy and Authentication: An Introduction to Cryptography," by Whitfield Diffie and Martin E. Hellman, in the Proceedings of the IEEE, March 1979, for an introduction to contemporary cryptography.

PUBLIC KEYS Codes based on the public-key concept not only appear to be unbreakable; they also eliminate an age-old problem with sending secret messages: keeping the code secret while making it available to intended users. In addition, they can be used to protect against fraud, opening up entirely new areas for the use of codes.

The key to these advantages is the key—the instructions for encoding and decoding information. In traditional encryption schemes, the same key is used for both procedures, and distributing the key to the users of a code requires security measures to prevent its falling into the wrong hands. (Human couriers are often used—but may be set upon by thieves and are subject to being bought off. Alternatives, such as transmitting the key by registered mail or electronically, are likewise not foolproof against interception.) In public-key systems, however, because of the nature of the underlying mathematics, encryption and decryption are accomplished by separate keys that cannot be deduced from each other. So one of the keys can be made public, eliminating the risks surrounding key distribution in traditional coding schemes.

The ease of access to the new systems opens the way for widespread commercial applications. Does your firm need to send a confidential message to Company A? Just call information for A's public key, enter it into your computer, and type the message on your terminal. The computer uses A's public key to encode it, it's sent off to A, and A's computer decodes it with the other, secret key. Even if, say, Company B has the communication line tapped, it won't do B any good; only Company A has the mate to its public key, so only A can decipher the encoded message. When A is ready to reply to you, your public key is used to encode the message; again, only you have the key that will unlock the message.
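The exchange with Company A can be sketched in a few lines. This is a minimal and deliberately insecure illustration. It assumes textbook RSA (the MIT scheme, with no padding) and, for A's key, two publicly known Mersenne primes (2**89 - 1 and 2**107 - 1) that anyone could look up. `make_keypair`, `encrypt`, and `decrypt` are hypothetical helper names.

```python
# Toy public-key exchange (textbook RSA, no padding). INSECURE: the primes
# are well-known Mersenne primes, chosen only because they are certainly prime.

def make_keypair(p: int, q: int, e: int = 65537):
    """Return (public_key, secret_key) as (n, exponent) pairs."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))  # needs p and q; Python 3.8+
    return (n, e), (n, d)

def encrypt(message: bytes, public_key) -> int:
    n, e = public_key
    m = int.from_bytes(message, "big")
    if m >= n:
        raise ValueError("message too long for this toy key")
    return pow(m, e, n)

def decrypt(ciphertext: int, secret_key) -> bytes:
    n, d = secret_key
    m = pow(ciphertext, d, n)
    return m.to_bytes((m.bit_length() + 7) // 8, "big")

# Company A publishes a_public (the "directory listing") and keeps a_secret.
a_public, a_secret = make_keypair(2**89 - 1, 2**107 - 1)

# You encode with A's public key; only A's secret key undoes it.
ciphertext = encrypt(b"BID: $1.2M", a_public)
print(decrypt(ciphertext, a_secret))  # b'BID: $1.2M'
```

A wiretapper such as Company B sees only `ciphertext` and `a_public`. Turning the one back into the message requires the exponent in `a_secret`, which in turn requires the two primes.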

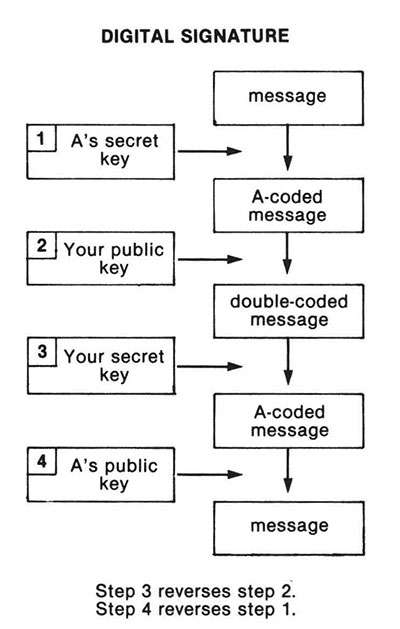

Of course, anyone can communicate with your firm using your public key, so when A supposedly sends a reply, how do you know it's authentic? Public-key systems also solve this thorny problem for cryptographers. When Company A types the reply, the computer is instructed first to encode the message with A's secret decoding key. (Since both encoding and decoding keys contain rules for transforming data, either may be used for encoding.) This is called A's "digital signature." Then the encrypted message is transformed again using your public key and sent on its way. When your firm receives it, your computer does two decodings: it uses your secret key to reverse the second coding, then uses A's public key to reverse the first coding. Only Company A could have sent something reversible by A's public key; only you can interpret it, because only you can reverse a message encoded with your public key.
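The sign-then-encrypt procedure can be traced step by step. The sketch assumes textbook RSA and publicly known Mersenne primes, so it demonstrates the order of operations rather than any real protection. One detail the prose skips: with this raw arithmetic, A's modulus must be smaller than yours so that the signed value fits under your key. That is why the two key pairs are sized differently here. Names are hypothetical.

```python
# Toy sign-then-encrypt with textbook RSA. INSECURE: well-known primes, no padding.

def make_keypair(p: int, q: int, e: int = 65537):
    """Return (public_key, secret_key) as (n, exponent) pairs."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))  # Python 3.8+
    return (n, e), (n, d)

def apply_key(value: int, key) -> int:
    """Transform a value with either half of a key pair."""
    n, exponent = key
    if value >= n:
        raise ValueError("value does not fit under this modulus")
    return pow(value, exponent, n)

# A's modulus (about 150 bits) is smaller than yours (about 234 bits),
# so anything A signs fits under your public key.
a_public, a_secret = make_keypair(2**61 - 1, 2**89 - 1)
you_public, you_secret = make_keypair(2**107 - 1, 2**127 - 1)

message = int.from_bytes(b"Offer accepted", "big")

# Company A: sign with its own secret key, then encode with your public key.
signed = apply_key(message, a_secret)
sent = apply_key(signed, you_public)

# You: remove the outer layer with your secret key, then check A's
# signature by applying A's public key.
recovered = apply_key(apply_key(sent, you_secret), a_public)
print(recovered.to_bytes((recovered.bit_length() + 7) // 8, "big"))  # b'Offer accepted'
```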

Authentication is the problem solved by public keys in one of their first applications. The Zero Power Plutonium Reactor in Idaho Falls has been using an automated public-key system to ensure that only authorized personnel gain access to the research facility. The hand dimensions of each person are encoded on a magnetic card. To gain entry, a person inserts his card into a computer and places his hand in a measuring device; his hand dimensions and those on the card must match. The security of the system is ensured by the mathematics of public keys. Even if someone obtained the matching key that allows the computer to read the magnetic cards, the encoding instructions could not be deduced, so it would not be possible to forge cards for unauthorized persons.
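The card scheme can be modelled as a signature with message recovery: the issuer transforms the measurements with its secret key, and the door computer reads them back with the public key. The payload format, the `HAND:` prefix used to recognise genuine cards, the 2 mm tolerance, and all function names are hypothetical. The publicly known primes make the sketch insecure. It shows only why a card cannot be forged without the secret key.

```python
# Toy signed ID card (textbook RSA "message recovery"). INSECURE: well-known primes.

def make_keypair(p: int, q: int, e: int = 65537):
    """Return (public_key, secret_key) as (n, exponent) pairs."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))  # Python 3.8+
    return (n, e), (n, d)

issuer_public, issuer_secret = make_keypair(2**89 - 1, 2**107 - 1)
PREFIX = b"HAND:"  # hypothetical marker identifying genuine card data

def issue_card(hand_mm) -> int:
    """Issuer: encode hand measurements (mm) with the SECRET key for the card."""
    payload = PREFIX + ",".join(str(x) for x in hand_mm).encode()
    n, d = issuer_secret
    return pow(int.from_bytes(payload, "big"), d, n)

def read_card(card: int):
    """Door computer: decode with the PUBLIC key; None if the card is not genuine."""
    n, e = issuer_public
    m = pow(card, e, n)
    payload = m.to_bytes((m.bit_length() + 7) // 8, "big")
    if not payload.startswith(PREFIX):
        return None
    try:
        return [int(x) for x in payload[len(PREFIX):].decode("ascii").split(",")]
    except ValueError:  # also covers UnicodeDecodeError
        return None

def admit(card: int, measured_mm, tolerance_mm: int = 2) -> bool:
    """Admit only if the card is genuine and the live hand matches it."""
    stored = read_card(card)
    if stored is None or len(stored) != len(measured_mm):
        return False
    return all(abs(s - m) <= tolerance_mm for s, m in zip(stored, measured_mm))

card = issue_card([182, 79, 95, 101])
print(admit(card, [183, 79, 94, 101]))  # True: genuine card, matching hand
print(admit(card, [170, 79, 95, 101]))  # False: someone else's hand

forged = 10**40  # a forger without the secret key can only guess a card value
print(admit(forged, [182, 79, 95, 101]))  # False: decodes to noise
```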

Keeping track of nuclear fuel is another potential application of the new codes. Now being tested at Oak Ridge National Laboratory is a system for monitoring fuel enrichment. Reactor-grade fuel is enriched much less than weapons-grade fuel, and an international concern has been that countries might turn their fuel-enrichment facilities into nuclear weapons plants. The plan is to encode the enrichment information and make the decoding key public, so anyone can tell if the fuel, once it changes hands, is enriched to weapons-grade. But since the encoding key, along with the radiation monitor, would be sealed in a tamper-proof container, enrichment information could not be falsified. The Department of Energy and the International Atomic Energy Agency are considering a similar application to keep tabs on spent nuclear fuels. In this case, information about fuel movements would be encrypted.

Public-key systems are also being considered for monitoring compliance with a nuclear test ban. Participating nations would all have a public key allowing them to read test data from seismic detectors, relayed in encrypted form. Since the encryption key is sealed inside the host country's seismic detector, that country cannot insert false information into the transmission of data. But the host country can also rest assured that only the seismic information, and no other espionage data, is being transmitted, since no one has access to the encoding key.

For some applications, public-key systems pose a problem: because of the complexity of the coding functions, encoding and decoding take too long. In transmissions from satellites to multiple ground-based receivers, for example, fast encryption and decryption are crucial. And electronic mail systems must transmit long messages quickly if they are to come into widespread use. A solution that combines the best of the new and the old cryptography, however, appears feasible.

Researchers at the Mitre Corporation and Digital Communications are experimenting with a hybrid electronic mail system that uses both the DES (a traditional coding scheme—a single key for coding and decoding—developed by the National Bureau of Standards) and a public-key code. Although the DES allows for fast encryption, each message recipient must be sent a key in advance of any message—raising security problems and time delays that make it impractical for any widespread mail system. In the hybrid, if someone wanted to send you a long message, he would encrypt it with a DES code, use your public key to encrypt the DES key itself, and transmit both to you. Your secret key would decrypt the DES key, which you would then use to decode the actual message.
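The hybrid arrangement can be sketched as follows. Python's standard library has no DES, so this sketch swaps in a SHA-256 keystream cipher purely as a stand-in for the fast single-key step; it is not DES and is not meant for real use. The toy RSA key, the 64-bit session key (sized like a DES key), and the function names are hypothetical.

```python
import hashlib
import secrets

# Toy recipient key built from two Mersenne primes; NOT a secure key size.
P, Q, E = 2**31 - 1, 2**61 - 1, 65537
N = P * Q                          # recipient's public modulus
D = pow(E, -1, (P - 1) * (Q - 1))  # recipient's secret exponent


def keystream_xor(key: int, data: bytes) -> bytes:
    """Stand-in for DES: XOR data with SHA-256(key || counter) blocks.
    The same call both encrypts and decrypts."""
    key_bytes = key.to_bytes(8, "big")
    out = bytearray()
    for start in range(0, len(data), 32):
        counter = (start // 32).to_bytes(8, "big")
        block = hashlib.sha256(key_bytes + counter).digest()
        out.extend(b ^ k for b, k in zip(data[start:start + 32], block))
    return bytes(out)


def send_hybrid(message: bytes, recipient_n: int, recipient_e: int):
    session_key = secrets.randbits(64)                         # fresh one-time single key
    body = keystream_xor(session_key, message)                 # fast step for the long message
    wrapped_key = pow(session_key, recipient_e, recipient_n)   # slow public-key step, 64 bits only
    return wrapped_key, body


def receive_hybrid(wrapped_key: int, body: bytes, n: int, d: int) -> bytes:
    session_key = pow(wrapped_key, d, n)   # recipient's secret key recovers the single key
    return keystream_xor(session_key, body)


memo = b"Quarterly figures follow. " * 40
wrapped, body = send_hybrid(memo, N, E)
print(receive_hybrid(wrapped, body, N, D) == memo)  # True
```

Only the short session key passes through the slow public-key arithmetic, and no key has to be delivered to the recipient in advance, which is the point of the hybrid.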

But the speed of encryption with the new codes will also be improved as they become available on single microelectronic chips—a development that public-key researcher Ronald Rivest is working on. It's the last step in a breakthrough that Martin Gardner, a Scientific American columnist, predicted in 1978 "bids fair to revolutionize the field of secret communication."

—Marty Zupan

This article originally appeared in print under the headline "Data Privacy: What Washington Doesn't Want You to Know."
