The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

"God, … Some of Your Early Work on Neural Networks Was Genuinely Groundbreaking, …"

"but honestly you’re the worst offender here."

From Astral Codex Ten:

God: …and the math results we're seeing are nothing short of astounding. This Terry Tao guy -

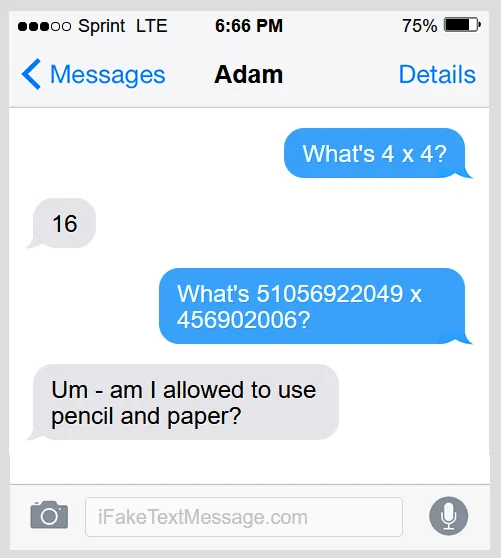

Iblis: Let me stop you right there. I agree humans can, in controlled situations, provide correct answers to math problems. I deny that they truly understand math. I had a conversation with one of your "humans" yesterday, which I'll bring up here for the viewers … give me one moment …

When I give him an easy problem that he's encountered in school, it looks like he understands. But when I give him another problem that requires the same mathematical function, but which he's never seen before, he's hopelessly confused.

God: That's an architecture limitation. Without a scratchpad, they only have a working context window of seven plus or minus two chunks of information. We're working on it. If you had let him use Thinking Mode…

Dwarkesh Patel: Okay, okay, calm down. One way of reconciling your beliefs is that although humans aren't very smart now, their architecture encodes some insights which, given bigger brains, could -

Iblis: God isn't just saying that they'll eventually be very smart. He said the ones who got through graduate school already have "PhD level intelligence". I found one of the ones with these supposed PhDs and asked her to draw a map of Europe freehand without looking at any books. Do you want to see the result? …

You can come up with excuses and exceptions for each of these. But taken as a whole, I think the only plausible explanation is that humans are obligate bullshitters….

Read the whole thing; I much enjoyed it.

We know we are conscious, and we can assume that entities just like us (i.e., fellow humans) are also conscious. AI algorithms don't get the benefit of that assumption. Not to mention that they are not equipped with the infrastructure for anything but the raw number crunching they need to do what is basically massively parallel statistics. And there isn't any reason to believe the researchers who developed them accidentally introduced a working empathy function. (To be completely open, there are some papers claiming that higher-level architectural analysis of some algorithms reveals structural activity that can be crudely compared to some of what brains allegedly do with information.) But I don't think this changes the overall conclusion.

We don't know shit beyond what our brain thinks its sensors tell it.

Planes fly better than birds. They work differently, not by flapping their wings. AI is like the airplane, and our brain is like the bird.

> We know we are conscious

Prove it. Point to the consciousness on a diagram of a brain. Quantify its existence.

We would not know it from a stupid comment such as yours.

I got about four exchanges in before I gave up on it as more of Scott Alexander's performative douchebaggery that is heavily based on straw men.

Being intelligent is computationally expensive, we only do it as a sort of error handling routine when nothing else gets the job done.

And, anyway, it's still in beta.

To be honest, that hand drawn map looks like it was done with a pencil tool in an old paint program. As such, without so much as a drawing tablet or touch screen, that's about what you'd get.

Today I wandered from Tao’s blog directly to Alexander’s blog referencing Tao, and now to Volokh’s blog referencing etc. Can’t wait to see what’s next.

I see they worked in the Linda problem.

Very funny. Thank you for the pointer.

This is kind of like saying that Tesla's FSD system is a bad implementation of neural networks because it can't solve quantum chromodynamics problems. It wasn't built to...

Almost all of the human neural network is dedicated to body control, physical motion planning, and other normal animal stuff. It's continually self-tuning for efficiency, too! It's state of the art and makes artificial neural networks look sick, given that the clock speed is in the tens of hertz. Look at how little training data it needs to figure this stuff out, too, all while running on only 20 W!

Intelligence is just sort of a late kluge running on top of the animal, and given the hardware limitations it's still pretty impressive. Sure, a bit of work is needed, we could use a good math coprocessor and a handy expansion bus, but complaining that it does a bad emulation of a pocket calculator really misses the point.

Comments indicate that people think of the whole thing as a strawman argument. Nobody claims that AIs aren't intelligent just because they make mistakes, but rather because of the *particular kind* of mistakes they make, which is different.

Indeed, look at the videos they generate: they're incredible and utterly photorealistic (if that's what's been requested!) right up until they're stupid. Even little children at least have object permanence and know that one limb can't pass through another.

The reason they look intelligent in a short interaction is that, as I said above, humans aren't intelligent as a usual thing. Most of the time we're doing something vaguely analogous to what the LLMs are doing (only vastly more efficiently!), but we have intelligence available as an error handler, which laboriously solves problems at great computational expense until the solutions can be added to the cheap model. That's what it means to learn something: you stop having to THINK about it!

The LLMs don't have that error handler. They can't detect when they're screwing up, so they can't do anything about it.

But because we and they are normally working in roughly the same way, they look to us like intelligence until they fail.

The problem with the story (and its analogy to the current AI debate) is that there is no AI right now, and LLMs have no model of reality.

They are story generators, that is all. Eventually they will be incredible at that - they're already driving people insane, imagine what they'll be capable of in 10 years.

ReadTech offers comprehensive, step-by-step tutorials in networking, cloud, and cybersecurity configuration , empowering professionals to advance their skills with confidence. Much like Gluco 20 promotes overall wellness, the right technical guidance fosters lasting professional growth and success.