The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

Noise in Law School Grading

Adam Chilton, Peter Joy, Kyle Rozema, and James Thomas have written an excellent, careful new paper entitled "Improving the Signal Quality of Grades." The central claim is that some law professors are better graders than others, where a better grader is defined as one whose grades correlate more closely with students' final GPAs. Some top students thus have the misfortune of having their records tarnished by a professor whose grades are relatively arbitrary. They then miss out on opportunities, such as law review and judicial clerkships, that are granted relatively early, based on a small subset of the students' law school portfolio. The authors then consider various possible remedies for noisy signals, such as kicking bad graders out of the 1L curriculum and adding more gradations in grading levels.

A preliminary question is whether law schools really want to improve the signal quality of their grades. Perhaps they don't care all that much. For every student denied a clerkship that a pure meritocracy would have awarded, there is another student who lucks into one. It seems doubtful that a law school that implemented a better grading system would become more attractive to applicants. Indeed, aren't students attracted to the lax grading systems at places like the Yale Law School?

But there is an argument that they should care, at least as to their strongest students. After all, judges and top law firms are in a repeat relationship with the law school, potentially hiring students year after year. If they have a great experience, they will be more apt to return and hire more students. When a student lucks into a job and doesn't perform well, then the employer is less likely to hire students in later years. The stronger the signal, the more employers can rely on it. And so, for employers who are willing to hire only the best students from a school, a strong signal is important.

As one moves lower in the class, the importance of a strong signal for a school may decrease. Some employers may be perfectly happy hiring an average student, or any student from somewhat below to somewhat above average. To be sure, the employers might appreciate being able to screen out the worst students at the school, but they also might be satisfied with the degree alone, which serves as a form of certification that a student meets the school's standards. That doesn't mean that grades are irrelevant, but, as the old joke suggests, a school might reasonably conclude that it has a stronger interest in accurately identifying the best graduate than the worst one. The desire to avoid making anyone look especially bad can help explain both grade inflation and an opaque grading system like the University of Chicago Law School's. Employers who need to distinguish the best students can do so, but many employers can simply be happy hiring a graduate of that school without worrying about whether a GPA of 175 is any good.

Still, law schools do want to distinguish at least their best students, and that requires multiple gradations and good graders. The authors base their analyses on data from a top-20 law school over four decades. (It quickly became unmistakable to me which law school they are describing, but because they seem to be preserving some veneer of privacy for the school, I won't name it.) Their data show that first-year grades are a fairly good but still imperfect predictor of final performance, with 68% of those in the top quartile after the first year remaining there by graduation. They show that with more semesters, the "share of missing students in the top 1 percent"--that is, those who will end up in the top 1 percent by graduation but aren't there earlier--drops from 40% after two semesters to 22% after four semesters.
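The two statistics in the preceding paragraph can be made concrete with a short Python sketch. The function names (`top_group`, `share_missing`, `persistence`) and the toy GPAs below are hypothetical illustrations, not the authors' code or data, and ties are simply broken by list order:

```python
def top_group(scores, top_frac):
    """Indices of students in the top `top_frac` share of `scores`.

    Ties are broken by list order; at least one student is always included.
    """
    n = len(scores)
    k = max(1, round(n * top_frac))
    ranked = sorted(range(n), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])


def share_missing(early_gpa, final_gpa, top_frac):
    """Share of the final top group who were NOT in the early top group."""
    final_top = top_group(final_gpa, top_frac)
    early_top = top_group(early_gpa, top_frac)
    return len(final_top - early_top) / len(final_top)


def persistence(early_gpa, final_gpa, top_frac):
    """Share of the early top group who are still there at graduation."""
    early_top = top_group(early_gpa, top_frac)
    final_top = top_group(final_gpa, top_frac)
    return len(early_top & final_top) / len(early_top)


# Hypothetical class of eight students (lists aligned by student).
early = [3.9, 3.8, 3.2, 3.5, 3.0, 3.6, 2.9, 3.1]  # GPA after 1L
final = [3.7, 3.4, 3.3, 3.8, 3.0, 3.5, 3.1, 3.2]  # GPA at graduation

print(share_missing(early, final, 0.25))  # 0.5
print(persistence(early, final, 0.25))    # 0.5
```

When the early and final groups are the same size, as in this sketch, the two numbers are complements: under these definitions, a 68% persistence rate for the top quartile would mean that 32% of the final top quartile was missing from it after 1L. The paper's 40% and 22% figures concern the top 1 percent, which corresponds to `top_frac=0.01` here.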

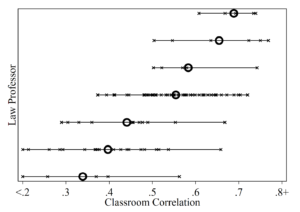

Overall, 1L grades are reasonably predictive: the mean correlation between the grades in a classroom and those students' eventual achievement (their final GPA) is 0.66. But that figure masks a great deal of variability among professors. The diagram below, for example, shows the predictive value of seven different professors' grades. Each X represents one offering of the professor's class, and each O indicates the professor's average classroom correlation. It is striking that even for a single professor, there is a fair degree of variability; maybe some exams work better than others. But even more striking is that some graders are better than others.

Or are they? The authors consider the most obvious counterargument, that different professors are measuring different skills. Maybe the professor at the bottom of the chart above is the best grader, because that professor is focusing on skills that actually matter in the workplace. But the authors attack this possibility in several ways. First, they note that noisy graders' grades tend to be poor predictors even of the grades those same professors later give the same students. Second, the noisy graders' grades are not highly correlated with one another; indeed, they better predict the high-signal graders' grades than those of fellow high-noise graders.

Third, the authors run a Principal Component Analysis of all doctrinal first-year grades. This is a technique that reduces the dimensionality of data, for example converting eight-dimensional data representing first-year grades of many students into four-dimensional data representing the underlying qualities those grades are measuring. (For a nice explanation of Principal Component Analysis and similar techniques in the context of Supreme Court opinions, see this great article by Joshua Fischman and Tonja Jacobi.) They find that the first principal component--i.e., the most important thing that grades seem to be measuring--explains 61% of the variation in first-year grades, while the second component explains only 8%.
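Here is a rough illustration of what "the first component explains 61% of the variation" means, using NumPy on simulated data rather than the paper's. The function name, the 500-student, eight-course setup, and the one-latent-ability model that generates the grades are all assumptions for illustration. Each course's grades are standardized, PCA is run on the resulting correlation matrix, and each eigenvalue's share of the total is that component's share of the variance:

```python
import numpy as np


def explained_variance_ratios(grades):
    """PCA on a (students x courses) grade matrix.

    Standardizes each course's grades, so the PCA runs on the correlation
    matrix, and returns each component's share of total variance,
    largest first.
    """
    z = (grades - grades.mean(axis=0)) / grades.std(axis=0, ddof=1)
    cov = np.cov(z, rowvar=False)          # courses x courses
    eigenvalues = np.linalg.eigvalsh(cov)  # ascending for symmetric matrices
    eigenvalues = eigenvalues[::-1]        # largest first
    return eigenvalues / eigenvalues.sum()


# Hypothetical data: 500 students, 8 doctrinal 1L courses. Each grade is
# one shared latent "ability" plus independent course-specific noise.
rng = np.random.default_rng(0)
ability = rng.normal(size=(500, 1))
grades = ability + 0.7 * rng.normal(size=(500, 8))

ratios = explained_variance_ratios(grades)
print(ratios[:2].round(2))  # shares of the first two components
```

Because every simulated course measures the same latent ability plus independent noise, the first component should dominate; at this noise level, theory puts its share near 0.71 and each remaining component near 0.04. Real grades, like the paper's 61% and 8%, reflect whatever structure the actual exams share.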

This is rather convincing, but I'm not entirely sold. After all, 61% is not 100%. And the noisy graders might be grading different portions of the remaining 39%. Moreover, what they are measuring might be harder to grade. There is a reason that law professors love to base their exams on subtle doctrinal points (nothing like an exception to the exception to separate out the best students): such points are relatively easy to grade. If the noisy graders are trying hard to discern something that's hard to identify, then one would expect their grades to be noisy predictors even of their own future grades (and their colleagues' grades), but whatever they are identifying might still be important.

But yeah, it could just be that the noisy graders are lazy. (My colleague with the most unusual technique for grading exams is not lazy outside of grading, however.) And maybe if schools identified high-noise graders, they could be coached--or shamed. One question I'm curious about is whether the noisy graders also tend to be the professors with low teaching evaluations. The authors don't provide any information on that. It would slightly strengthen my confidence in the results if we found that less effective teachers are worse graders. But it also might suggest that the real problem is teaching rather than grading. After all, if teachers don't clearly identify what the students need to learn, then different students might focus on different things, and that might add noise to the grading, even if the grading were performed by a third party.

Improving graders is just one thing that law schools could do to make grading fairer and more consistent. For example, law schools could do a better job of making the curve more consistent across classes--and more reflective of the quality of students in the class. The authors note that 1L students were randomly assigned to classes, whereas 2L and 3L students are not, and at many schools, students are effectively punished for taking hard classes like federal jurisdiction. Meanwhile, some graders tend to have relatively flat distributions, while others have much more humped distributions. The authors explain why that doesn't affect their measure of correlation, but schools might still do more to reduce the effect of professors' choices on students--unless, of course, it turns out that professors who give a narrower set of grades are also those who are noisy graders.
