The Case of the AI-Generated Giant Rat Penis

How did an obviously fabricated article end up in a peer-reviewed journal?

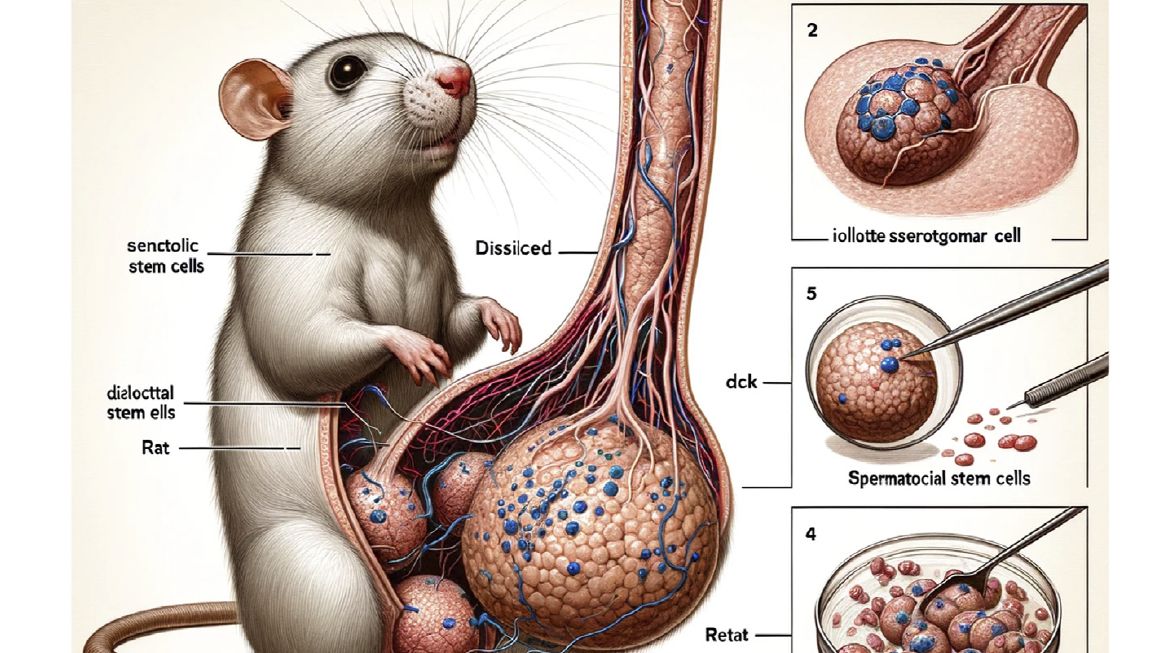

An illustration featuring a rat with a cross section of a giant penis set off a firestorm of criticism about the use of generative artificial intelligence based on large language models (LLMs) in scientific publishing. The bizarre illustration was embellished with nonsense labels, including one fortuitously designated as "dck." The article on rat testes stem cells had undergone peer review and editorial vetting before being published in February by Frontiers in Cell and Developmental Biology.

"Mayday," blared longtime AI researcher and critic Gary Marcus on X (formerly known as Twitter). Vexed by AI's ability to abet the "exponential enshittification of science," he added, "the sudden pollution of science with LLM-generated content, known to yield plausible-sounding but sometimes difficult to detect errors ('hallucinations') is serious, and its impact will be lasting."

A February 2024 article in Nature asked, "Is ChatGPT making scientists hyper-productive?" Well, maybe, but Imperial College London computer scientist and academic integrity expert Thomas Lancaster cautioned that some researchers laboring under "publish or perish" will surreptitiously use AI tools to churn out low-value research.

A 2023 Nature survey of 1,600 scientists found that almost 30 percent had used generative AI tools to write manuscripts. A majority cited advantages to using AI tools that included faster ways to process data and do computations, and in general saving scientists' time and money. More than 30 percent thought AI will help generate new hypotheses and make new discoveries. On the other hand, a majority worried that AI tools will lead to greater reliance on pattern recognition without causal understanding, entrench bias in data, make fraud easier, and lead to irreproducible research.

A September 2023 editorial in Nature warned, "The coming deluge of AI-powered information must not be allowed to fuel the flood of untrustworthy science." The editorial added, "If we lose trust in primary scientific literature, we have lost the basis of humanity's corpus of common shared knowledge."

Nevertheless, I suspect AI-generated articles are proliferating. Some can be easily identified through their sloppy and flagrant unacknowledged use of LLMs. A recent article on liver surgery contained the telltale phrase: "I'm very sorry, but I don't have access to real-time information or patient-specific data, as I am an AI language model." Another, on lithium battery technology, opens with the standard helpful AI locution: "Certainly, here is a possible introduction for your topic." And one more, on European blended-fuel policies, includes "as of my knowledge cutoff in 2021." More canny users will scrub such AI traces before submitting their manuscripts.

Then there are the "tortured phrases" that strongly suggest a paper has been substantially written using LLMs. A recent conference paper on statistical methods for detecting hate speech on social media produced several, including "Head Component Analysis" rather than "Principal Component Analysis," "gullible Bayes" instead of "naive Bayes," and "irregular backwoods" in place of "random forest."

Researchers and scientific publishers fully recognize they must accommodate the generative AI tools that are rapidly being integrated into scientific research and academic writing. A recent article in The BMJ reported that 87 out of 100 of the top scientific journals are now providing guidelines to authors for the use of generative AI. For example, Nature and Science require that authors explicitly acknowledge and explain the use of generative AI in their research and articles. Both forbid peer reviewers from using AI to evaluate manuscripts. In addition, writers can't cite AI as an author, and both journals generally do not permit images generated by AI—so no rat penis illustrations.

Meanwhile, owing to concerns raised about its AI-generated illustrations, the rat penis article has been retracted on the grounds that the "article does not meet the standards of editorial and scientific rigor for Frontiers in Cell and Developmental Biology."

The world needs more giant rat penises.

Donald Trump, Ron DeSatan, and Sidney Powell aren't many enough?

I was thinking more Bidens, father and son.

Shillsy is a Bidenista, prepare for a 200 line shitpost.

Looks like he's used up his quota of ALL CAPS today.

SQRLSY will run out of CAPS when the NFL runs out of points.

Giant Rat Penis would be a good band name.

That little vermin doesn't look too grateful. I'd say that's a waste of processing power.

Seems here the problem is peer review, not AI

There's problems all the way down. The peer reviewers, the publishers, the people who submitted this for publication in the first place.

AI is a problem so far as it makes this sort of thing easy to do. You wouldn't accidentally draw a rat like that if you had to draw it by hand.

anyone who peer reviewed this should never be asked to peer review again

And forced to take Drawing Animals 101 at the Art Institutes.

It began with subsidies, of course.

The shittification of science began when governments subsidized college research; it's one thing to pay for, say, military research, but another thing entirely to subsidize colleges in general. You get more of what you subsidize, and it's always more diluted and watered down from its natural state. Thus more marginal students who gravitate to marginal professors in marginal fields, culminating in the woke crap of today, which will only get worse tomorrow.

It's not just student loans. Before that, it was cheap state college tuition (like the $100/quarter I paid). But it really began with research grants, the NIH, all that federal bureaucracy dishing out more and more money. The bureaucrats want to dish out as much as possible. The marginal researchers want as much as possible, because no one else is stupid enough to fund studies of Peruvian hookers.

Speaking as a lazy college professor… there’s the supply issue you mention, but there’s also an artificially created demand issue that could easily be shut down.

Colleges make continuously asking for federal research money and publishing papers a condition of continued employment. Even the little known backwater I work at has a standard that assistant professors should spend at least 40% of their time asking and publishing, regardless of whether they have any real need for the money, and regardless of whether they have any new stuff to write about.

And then the MBAs ask them to report productivity on a stupid spreadsheet. The Excel spreadsheet awards 10 points for the phony giant rat penis paper; the paper discovering the transistor or DNA would’ve gotten the same 10 points. It awards the same points for asking for $500K whether it’s to study Peruvian hookers or to finalize a new cancer treatment.

Incidentally, we fire far more people for failing to make their research points than for bad teaching.

Since a lot of this “work” has net negative value, we could benefit even by telling professors to just not do it and take the day off. But you might even ask them to pay more attention to their teaching.

Easyish fix: state legislatures could designate maybe one or two colleges in the state to do research. At all the others, just flat prohibit using research to evaluate faculty, insist that the sole evaluation be based on how many students they produce that pass objective measures like licensing, exams, or getting jobs.

The problem with that easy fix is that governments have a negative incentive to reduce budgets. Bureaucrats everywhere can only measure their success by their budget and how many employees they supervise. Private industry bureaucrats are constrained by market competition and the reality of bankruptcy. Government bureaucrats are constrained only by how much money they can wheedle out of politicians, and that's a really low bar.

Generally true. But considering how despised professors are at the current moment, it shouldn’t be impossible to get some reforms passed. Don't even have to reduce the bureaucrats' budgets or the legislators' pork, just redirect it to something marginally less wasteful.

I don't think many here despise professors in general, just the woke ones.

just the woke ones.

Which describes professors in general.

Seriously, it seems to me like they've purged anything not on the ideological reservation, not unlike how even liberals got ousted from major newspapers for not being woke and progressive.

Skynet has no peers.

Yep, the 'peer reviewers' are morons.

The same ones who have given the thumbs-up to crappy anthropogenic climate-change research and approvals for "gender-affirming" treatments for kids. The state of the ivory tower is similar to the Augean stables. Where is our Heracles?

HR-mandated toxic masculinity seminar?

"on X (formerly known as Twitter)"

This is deadnaming, and is considered a mortal sin.

But X is ambiguous and X.com and other alternatives don't properly convey our petty hostility.

I think at this point it's used to disparage the post-Musk Twitter, by people who remember how great censored Twitter was.

My opinion is that it's a global troll by Musk to see how many times he can get journolists to repeat the phrase 'formerly Twitter'.

So far, he's winning.

"If we lose trust in primary scientific literature, we have lost the basis of humanity's corpus of common shared knowledge."

That ship sailed with the COVID response.

We don't trust your research, we don't trust your papers, we don't trust your conclusions, we don't trust your "recommendations".

Facts changed! They were doing the best they could with the information they ~~had~~ wanted! Any of you would have done the same!

You held on until COVID? That shit was dead when the climate cultists gained the slightest bit of power.

The moment they got the media to call any skeptic a "Denier", equating them with holocaust deniers...

Not that there weren't plenty of idiocy moments before that, but science saying it is "settled" isn't science.

If we lose trust in primary scientific literature, we have lost the basis of humanity's corpus of common shared knowledge

Religion still exists, so this is bullshit.

Even if it didn't, then good. The death of ScIeNcE! Is overdue.

Are you anxious to kill just Fauci science, or also Newton/Darwin science?

We could do some kind of compromise, e.g. kill anything done by a scientist born after 12/31/1899.

Religion is shared superstition. Not knowledge.

Except indigenous knowledge: to question the veracity of the nexus of religion, shared superstition and intersectional social justice is blasphemy.

MORE TESTING NEEDED?

I find it hilarious that the "tortured phrases" problem arises specifically from anti-plagiarism software. This kind of stuff was rolling out in force during the last few years of high school for me and all through college, and I'm a bit dismayed that, on the way to taking a shot at AI as the villain of the day, Mr. Bailey missed an equal villain in the anti-plagiarism software it's being used to foil. Even when I was in school two decades ago, it was quite difficult to submit an unedited paper past the anti-plagiarism software of the time. Even without any kind of actual plagiarism, the odds that someone, somewhere, at some point, used a turn of phrase similar to one sentence in your 3-15 page paper were staggeringly high. I imagine it's only gotten worse as the number of papers submitted has grown. Paper submission is turning into an academic /r9k/.

Tortured phrases like:

"Basis of the corpus of humanity's common shared knowledge"

Like what is this fool trying to say we'd lose?

I'm pretty sure he's trying to say science wouldn't happen without peer reviewed science journals. But what a retarded, word count minded, thesaurus-y way to make that obviously false statement.

Are you saying chem jeff was the training source for AI? Lots of words that say nothing.

Peer review is actually fairly central to the scientific process. If other people can't reproduce your results, it's generally considered to be a bad thing and a pretty sure sign that what you published is garbage nonsense.

True, but it's one way.

Can't pass peer review ----> Almost certainly garbage.

Passes peer review ----> Doesn't prove anything, e.g. the rat penis paper.

Proposed revision to peer review: Reviewers are still anonymous, *unless* they get negligent and let something like the rat penis paper get through. In that case the journal should publish the reviewers' names, photographs*, institution, and e-mail address along with the retraction.

------

*Photoshopped, can't decide whether it should be the feet/inches scale and orange jumpsuit, or a dunce cap. Maybe both.

I can agree there. The fact that there is essentially no downside to publishing garbage papers is a problem.

I mean, the publication should take a hit to their reputation and that should tank them, but somehow that never seems to happen no matter how many bunk papers they shovel out the door.

Probably because there is no attempt to replicate the vast majority of published papers by anyone, let alone the publication itself. There really isn't any incentive to do so unless it's a paper with major implications. Even then, most papers (in actual science fields, anyway) would be difficult or expensive to reproduce in the first place unless you have highly expensive and ultra specialized equipment.

Yeah, science didn't happen at all before 1950 or so when peer review magazines became a thing.

The further you are from pure science, the more you need peer review.

Same as it applies to usefulness. Even if it is pseudo or quasi science.

Is it reproducible? Is it useful?

For example, AI algos don't need to be published for peer review because they stand or don't stand on their own, and are valuable or not based on whether or not they work.

Real science is privately funded and closely guarded. If it isn't private, it's military and even more closely guarded.

This is especially true given that many disciplines have specific style guidelines that must be followed, making it even more probable that certain phrases will crop up in their writing.

Sadly, awful grammar is now the best proof of innocence when it comes to plagiarism.

Good grammar and style - "reasonable suspicion", better run some checks.

Good grammar and style, combined with lite, trivial content - "probable cause", call them in for questioning.

Beautiful writing in support of utter nonsense - e-mail asking them whether they'd rather take the F for incompetence or dishonesty.

Anyone got recommendations for playing around with image generators? I tried half a dozen for simple things and was not impressed, and most wanted me to sign up for a paying account before they'd do more than the simplest (and screw those up). I understand they can't provide free image generators, but from what I saw, none were worth exploring further. I wouldn't mind paying, say, $5 or $10 for a one-month trial, but not on a subscription that they'd make hard to cancel, and not without some free experimentation.

Everyone wants to use them to make porn.

Yes, we're on the verge of a Golden Age of porn.

You mean a time where the (now AI) models don't have freakish duck lips and the worst boob jobs on earth?

It may usher in skynet, but at least there'll be some benefit to humanity in the meantime.

Soon, it will produce whatever kind of lips or boobs you want, in full motion video. It won't be long.

Civitai.com

It's kind of an AI image generation developers' hub. Its online generator used to be completely free, but last month, unfortunately, it switched to a semi-pay model. However, you can still get some free generation.

Civitai has a large number of Stable Diffusion models, and a huge number of LoRA, embeddings, samplers and VAEs.

Does it do porn pictures where the woman has only 5 fingers?

What should it be, 4? 8? I'm not into amputee porn.

A hand with no forefinger has only four fingers.

Depends on the model and LoRA you use. Jack-of-all-trades models like what you get with Bing or DALL-E usually have problems drawing hands, but if you're using a Stable Diffusion generator you can pick a model that specializes in anatomy.

AI has a problem with fingers for some reason.

OK, how is this AI-retard science any different from Fauci/NIH/CDC-retard science? Both suggest bizarrely inflated manifestations of reality that, if believed, would make people respond in, um, fear and panic.

I guess the AI shit is cheaper.

So you're telling me that rat dick isn't where Misek came from?

There's the old joke that you can't get pregnant from butt fucking, to which the rejoinder is "where do you think lawyers come from?"

We can now extend that to Misek.

Pretty sure Misek was hatched and pupated in a puddle at the garbage dump.

Here I thought he was cloned in Brazil

Interesting hypothesis. And it makes me wonder about raccoon-dog dick and Fauci.

Fauci is a raccoon-dog dick, wonder no more.

Invest in woodchippers.

I find the rat penis more believable and reliable than "the science".

Does anyone recall the other day when Reason writer Matt Petti used an AI-generated image for his article?

https://reason.com/2024/05/01/feds-worried-about-anarchists-gluing-the-locks-to-a-government-facility/

And the article, too!

The editing changed a lot recently.

Seems like Liz is the last human that works at Reason, or just the best-trained AI. Either way, whaddyagonna do about a center-left-libertarian AI that has a sorta-love/mostly-hate relationship with NYC and takes time off from work to spend time with her kid, surf, and hit the bong?

Well, maybe, but Imperial College London computer scientist and academic integrity expert Thomas Lancaster cautioned that some researchers laboring under “publish or perish” will surreptitiously use AI tools to churn out low-value research.

Would that ‘low value research’ be in any measure similar to the low-value research that Reason dismissed as “C’mon people, anyone can be fooled occasionally if you like, work hard at it” type articles?

FYI, Chris Cuomo (who I didn't even know was still like... a thing, but I guess he's got some show on the internet or something) is steadfastly reporting that COVID vaccines might not be 100% safe and effective.

That's like someone from Hitler's inner circle intrepidly reporting about how the German government might have mistreated the Jews in the run-up to and during WWII, five years after the war ended.

Nevertheless, I suspect AI-generated articles are proliferating.

I suspect that's most of the internet, at this point. That and pornography.

Might be time to just disconnect.

Fuck off you goddamn white trash waste of life.

I really hope Biden’s able to get anti-American scum like you out of our military.

Language.

What have I ever said to give you the impression that I'm white???

Man alive you like to make assumptions. That's your bigotry and prejudices at work. Were I a leftist, you'd absolutely owe me an apology.

Luckily, I'm not a leftist - because I have a functioning brain - and I don't make hay about such things.

Are you white?

Are you not?

Why does it matter on the internet?

Why does it matter on the internet?

Because skin color is the most important thing?

That's the title of a great new show on Hulu. It's a de facto sequel to "Are you hot?", but instead of bending over and spreading them for Lorenzo Lamas, you answer 10 questions from Ibram X Kendi. If you're white, they offer you free gender modification. Mandatory, though.

I plan to go ask AI to generate a CRISPR sequence that will make that picture a reality. You will see, that picture is REAL.

I think AI is telling us we are going to get fucked.

" the coming deluge must not be allowed to fuel the flood"

This has to be an elaborate meta prank where they use a bad chatbot to write an op-ed in Nature against the use of chatbots in academic journals.

And this was a highlight that Bailey found quotable along with "basis of humanity's corpus of common shared" tripe...

Definitely not written by an English major.

I'm going to read that whole editorial.

Funny, the gist of the article is that corporations aren't making their data and code available for peer review and that AI needs to be regulated.

That doesn't jibe with Reason's stance at all.

And there is no authorship attributed.

AI wrote the article about AI in the AI issue of Reason, and AI are in the comments.

The future is – not as cool as I expected…

It’s actually hilarious when you think about it. Bots can do the hard work of writing and commenting on articles while we watch porn. Well-played, Mr. Bailey.

“If we lose trust in primary scientific literature, we have lost the basis of humanity’s corpus of common shared knowledge.”

Pure. Unadulterated. Newspeak. Layers deep.

Fuck Ron Bailey.

Fuck Reason Magazine.

Fuck The Media.

How? Because there is currently no such thing as AI. LLMs are not intelligent.

Who's vetting the AIs?

If Marge is processing your mortgage application using an AI that she doesn't understand, do you think she's qualified to spot a bias? And do you think she's going to go against the AI in your favor?

Who is vetting the AI?