Keep Testing Self-Driving Cars, Even If They Kill People

Self-driving cars are likely to save lives. One tragic, accidental death should not stop that from happening. Keep testing.

If March 18 was a typical day on America's roadways, about 90 people lost their lives in car accidents during that 24-hour span.

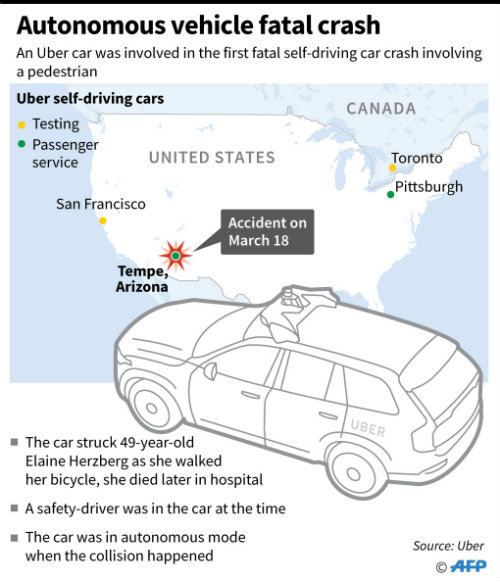

Unless you were unfortunate enough to know one of them, you probably didn't hear about the vast majority of those crashes. But you've probably heard about the accident that killed Elaine Herzberg, a 49-year-old Arizona woman who was struck by an autonomous Uber vehicle undergoing testing in Tempe. Video of the incident released by police shows that Herzberg was crossing the street outside of designated crosswalks, and that neither the self-driving car nor the human back-up driver stationed in it had time to detect Herzberg before the collision took place. Police determined that the pedestrian was at fault.

It was an unfortunate accident, the kind that happens every day on roads all over America. The fact that the vehicle was a self-driving car has made the accident a national news story, but policy makers should try not to overreact. Self-driving cars have an incredible potential to save lives, and officials should not put that prospect at risk.

Already, Arizona Gov. Doug Ducey, a Republican, has suspended Uber's self-driving car testing privileges in his state, citing concerns about public safety. That move is "a major step back from his embrace of self-driving vehicles," notes Melissa Daniels of the Associated Press. Ducey had previously welcomed autonomous driving tests to the state after they were subjected to strict regulation in California.

Meanwhile, four state senators introduced a bill this week to ban autonomous vehicles from being tested in Minnesota. "Arizona confirmed my concerns," state Sen. Jim Abeler (R-Anoka) told Minnesota Public Radio. "I've been hearing about this and am very worried about it. And very frankly the idea of driving home while you ride in the back seat is just a recipe for trouble."

Want to hear an even bigger recipe for trouble? Driving home while he rides in the driver's seat.

To err is human, and human error is the cause of 90 percent of car crashes. "We should be concerned about automated vehicles," University of South Carolina law professor Bryant Walker Smith told the Associated Press in 2016. "But we should be terrified about today's drivers."

If driverless cars can amass a better safety record than that, they will literally save lives. Unfortunately, those future lives saved are invisible relative to lives lost in the present, which carry significantly more weight for governors, legislators, and regulators.

In aggregate, car accidents are a massive public health problem. And I don't just mean the deaths they cause. Americans spend $230 billion annually to cover the costs of accidents, accounting for approximately 2 to 3 percent of the country's GDP.

Driverless cars will not be perfect. Certainly, they won't be perfect when human beings are darting out into traffic on dimly lit streets where there's no crosswalk, as Herzberg apparently did. But we shouldn't expect perfection. If they can be better than human drivers, and that's a low bar, then they should continue to be tested and developed. They will only get better.

And even if you're skeptical of the assumption that computers can drive better than human beings, the only way to find out for sure is to allow more testing. As Megan McArdle of The Washington Post points out, Americans drove 3.2 trillion miles in 2016, with 1.18 fatalities for every 100 million miles driven. How far have driverless cars driven since they began being tested? Fewer than 100 million miles. It sounds like a lot, but it's a very small sample size. To get a better perspective, we need a bigger sample.
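
To put the sample-size point in numbers, here is a rough sketch that uses only the figures quoted above: 3.2 trillion miles, 1.18 fatalities per 100 million miles, and 100 million miles as an upper bound on driverless mileage. Treating fatalities as a Poisson process with a constant per-mile rate is a simplifying assumption made for illustration, not something the sources cited here claim, and the function names are hypothetical.

```python
import math

# Figures quoted in the paragraph above (2016, human drivers).
HUMAN_RATE_PER_100M_MILES = 1.18
HUMAN_MILES_2016 = 3.2e12
# Upper bound on total driverless test mileage, per the paragraph above.
DRIVERLESS_MILES_UPPER_BOUND = 1e8


def expected_fatalities(miles, rate_per_100m=HUMAN_RATE_PER_100M_MILES):
    """Expected number of fatalities over `miles` at a constant per-mile rate."""
    return miles / 1e8 * rate_per_100m


def prob_at_least_one(miles, rate_per_100m=HUMAN_RATE_PER_100M_MILES):
    """Chance of one or more fatalities, ASSUMING a Poisson model (illustrative)."""
    return 1.0 - math.exp(-expected_fatalities(miles, rate_per_100m))


if __name__ == "__main__":
    print("Implied human-driver fatalities in 2016:",
          round(expected_fatalities(HUMAN_MILES_2016)))
    print("Expected fatalities in 100M miles at the human rate:",
          expected_fatalities(DRIVERLESS_MILES_UPPER_BOUND))
    print("P(at least one fatality) in 100M miles at the human rate:",
          round(prob_at_least_one(DRIVERLESS_MILES_UPPER_BOUND), 2))
```

Under these assumptions, a fleet driving exactly as safely as the average human would be expected to be involved in roughly one fatal crash over 100 million miles. A single death therefore cannot tell us whether the technology is safer or more dangerous than human drivers, which is why more miles are needed.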

Prior to the accident earlier this month, Arizonans seemed willing to give autonomous vehicles that chance. A February poll sponsored by the Consumer Choice Center found that 51 percent of Arizonans favored testing self-driving cars in the state, while 42 percent were opposed. Residents aged 18 to 44 were far more likely to support self-driving cars than older residents were.

Those feelings may shift in the wake of Herzberg's death, but officials should keep the long-term perspective in mind.

As Reason's Ron Bailey wrote in a prescient July 2016 article, driverless cars have the power to make us richer, less stressed, more independent, and safer. "Unless," he added, "lawmakers and regulators manage to screw everything up."

Do children feel unsafe around self-driving cars? If yes, they should be banned.

I fully support the banning of children.

Chipper / Chinny 2024!

Btw, this site is not mobile friendly at all. Nick, I'll send you $50 in Freelancer.com credits to spare us all the goofy operation.

Some homeless woman jaywalking is no reason to ban new technology that will save lives of people who are worth something to someone. Pushing a bike ... across a dark street, without paying attention to traffic. Awesome decision making that underscores the fallibility of humans, not machines.

Herzberg wasn't homeless (as if that matters) and if it's OK to kill jaywalkers then we're all in trouble because we've all jaywalked.

Straw man.

The difference is I've never been so stupid when I'm jaywalking that I walk straight into a car!

Certainly, they won't be perfect when human beings are darting out into traffic on dimly lit streets where there's no crosswalk, as Hertzberg apparently did.

I agree with Eric Broham. Self-driving cars will never be perfect until they introduce self-walking shoes.

The world won't be truly safe until it looks like the space station from Wall-E.

"self-walking shoes"

Movable sidewalks are more realistic. Do you even Jetsons, brah?

The future is a 500-year-old, 400lb Ron Bailey in one of those levitating chairs from WALL-E. Now try and tell me death isn't such a bad option.

Well, that's certainly going to replace the Hillary with a strap-on in my nightmares.

Your nightmares sound like my kind of fantasy.

Eric is either lying in his description of the victim's actions or sloppy in his writing. She certainly didn't "dart into traffic." An attentive driver on a well-lit road would have been able to see her with enough time to avoid her, and it's not at all clear why the AV's LIDAR didn't detect her or cause the AV to respond.

Or why the backup safety driver failed to respond either.

From the first headline, I've suspected there is more to this story than us voyeurs will ever know.

Let's assume the human backup driver was dead of a heart attack. Would the robocar have just continued on after it collided and thus become a hit-and-run? How is 'hit-and-run' programmed differently than 'hit-a-garbage-can' or 'narrowly missed a pedestrian who then died after hitting their head on the pavement'?

Especially if the vehicle is as oblivious to physical contact as it was to sensors/response - and the 'passenger'/'owner' is drunk, asleep, or surfing porn?

it's not at all clear why the AV's LIDAR didn't detect her or cause the AV to respond.

Or why the default response of a car when any of those two failures do happen (which seem more 'catastrophic failure' than 'normal mileage/usage-based failure') isn't 'Stop'.

the car just didn't like her looks...simple as that

That makes little sense.

The question isn't "what happens if the LIDAR is broken". If it were broken, then there likely would be a different response.

But in this case the pedestrian was invisible to both human and apparently robotic visual systems.

But the LIDAR system does not work on ambient light, nor does it rely on the headlights. It is a laser scanner that maps the terrain. The LIDAR should have been able to detect the pedestrian, one would suspect. But for some reason the car did not react as if it detected the pedestrian.

Hence the question. What happened? Was there a weird effect where the LIDAR was fooled? Was there some other obstruction? Was the software fooled by the discrepancy between the LIDAR and the cameras?

If you watch the videos, it is clear that no human driver was going to stop in time. Because of the combination of the path of the road and the lighting, the pedestrian seems to magically appear about a half-second before the collision, moving directly into the path of the car. Far too quickly for any human to react.

Of course, the 'too fast for a human to react' is literally one of the things touted as something these autonomous vehicles are better at.

Guess not. Not that I expect a computer to really make good decisions, but if they can't predict shitty human behavior then what are they really worth?

Not much, but of course that's why humans will be banned from driving to make way for our robot Ubers.

Having failed to sell trains to the vast majority of Americans, I think our 'betters' have decided to simply turn our cars into trains.

Someone looking to fix the problem at their leisure might want to ponder and address all that. My point is - why doesn't the car - by default - come to an absolute stop - immediately? If you suffer sudden 'catastrophic blindness' (or say headlight bulbs blow out) while driving, you stop the car. You don't keep driving. Robocar was not aware of its failures nor was it structured to react to its failures. That is the Johari window - or Rumsfeld's known unknowns.

If you watch the videos, it is clear that no human driver was going to stop in time...the pedestrian seems to magically appear about a half-second before the collision, moving directly into the path of the car.

I have and any human who was driving at that speed at that time in that place - without high beams - would've either been drunk or incompetent. That 'magical appearance' is, literally, not being able to see the next lane over. The pedestrian did not dart into the road. Most human drivers don't give a fuck about peds because peds can't hurt them. A vehicle in a large blind spot in the next lane at night can kill you - which is why a COMPETENT driver would've put on their highs (and thus seen the pedestrian in the next lane over - and made their own car more visible) or slowed down. That excuse is illegitimate. Robocar is useless if it is the same as a drunk self-absorbed incompetent.

Did the woman killed in that accident consent to be the subject of a tech industry beta test that would cost her her life? I don't think so. So, where do the tech companies get the right to test these cars on the public? You say they will someday be safer but unless you have magic powers to see the future, there is no guarantee of that. Moreover, until you do a lot more testing than this, there is no way to say for certain they are safer now. So, what gives the tech companies the right to test them in public when the risk is unknown and the people who are being placed at risk haven't consented to be so?

They are already safer than a car driven by a human.

You don't know that. If you did, there would be no reason to test them.

The data on all accidents suggests that self-driving cars are already much safer than human driven cars under the conditions they have been used in so far. The number of "fender bender" accidents per mile is much lower in self-driven cars - so far. In fact, most collisions with self-driven cars have been shown to be the fault of the other driver.

But this particular case is about fatalities, which are much more rare. So far we have one incident. It may be an outlier, or it may be a hint at a more spectacular failure mode for autonomous vehicles that is less common in human driven vehicles. That is what we don't know.

There was a human in the car when the accident occurred.

Either the human was responsible for this, in which case it isn't a self-driving car or she wasn't, in which case her presence is irrelevant.

The police report says that the pedestrian is at fault.

Even if the car were at fault, the fact that this is a test and the human is supposed to be the backup safety device means that the human or the engineers who designed the test/protocol would be liable.

The link in the article does not fault the pedestrian. There is much speculation, then a statement that the Tempe PD does not determine fault.

Exactly. Eric's conclusion is crap. Looking at the video, the car is clearly at fault. The sensors should have had no trouble detecting her and yet the car did nothing. Uber is also a distant also-ran in self-driving cars. Some AI genius fucked up.

My understanding is that we are nowhere near what true AI is meant to be. This isn't AI. This is the 2-ton equivalent of that chat-bot that eventually steered every conversation toward Hitler when you talked with it. Calling it "AI" is an attempt to make it seem more sciency than it really is.

She appears to be crossing in a section of the street that is not illuminated. There's about 1 second between when she becomes visible in the video and when the collision occurs.

The only thing that makes this noteworthy is the car failed to see her. A human would absolutely have failed. I seriously doubt I could have stopped my car in time given how quickly it happens.

If you had been driving at that speed with that visibility, then you would probably also have been drunk. That pedestrian-obstacle could just as easily have been a truck-that-died-without-lights-obstacle or a big-boulder-obstacle. Or in a perverse anti-driver world, a big-building-that-suddenly-moved-into-the-road obstacle

For the 15th time, THE. CAR. HAS. MORE. THAN. HEADLIGHTS.

The LIDAR is good out to at least 100m. The radar should have had no issue with her cross section. She couldn't have been moving at any significant speed since she was walking her bike.

Uber fucked up. The only question is how. This corner case can be solved for all future vehicles. Humans can never be.

For the 15th time, THE. CAR. HAS. MORE. THAN. HEADLIGHTS.

For the 20th time - THAT. DOESN'T. MATTER.

IF its LIDAR is working, then its maximum velocity can be the velocity that allows it to come to a complete STOP within THAT range. IF its LIDAR isn't working, then that maximum velocity is entirely irrelevant and actual maximum velocity is the STOP range of a WORKING sensor that is known to be working.
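
The stopping-range argument in this comment can be sketched numerically. The 100 m range is the LIDAR figure cited earlier in the thread; the braking deceleration and system latency below are assumed values chosen only for illustration, not measurements from the Uber vehicle, and both function names are hypothetical.

```python
import math


def stopping_distance(speed_mps, decel_mps2=7.0, latency_s=0.5):
    """Distance covered before a full stop: travel during latency plus braking.

    decel_mps2 and latency_s are ASSUMED illustrative values.
    """
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_safe_speed(sensor_range_m, decel_mps2=7.0, latency_s=0.5):
    """Largest speed (m/s) whose stopping distance fits inside the sensor range.

    Solves v*latency + v**2 / (2*decel) = range for v (positive root).
    With no working sensor range, the only safe speed is zero.
    """
    if sensor_range_m <= 0:
        return 0.0
    at = decel_mps2 * latency_s
    return -at + math.sqrt(at * at + 2.0 * decel_mps2 * sensor_range_m)


if __name__ == "__main__":
    for range_m in (0.0, 25.0, 50.0, 100.0):
        v = max_safe_speed(range_m)
        print(f"range {range_m:5.1f} m -> max speed {v:5.1f} m/s "
              f"({v * 2.23694:5.1f} mph)")
```

The design choice worth noting is the zero-range branch: it encodes the commenter's claim that a car which cannot trust its sensors should default to a stop rather than keep driving at its previous speed.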

What is obvious here is that the car was both a)unable to stop within those headlights AND b)unaware that those headlights themselves were not working as a sensor. That you can't seem to understand this - AND that you pose as a tech expert - tells me that the entire robo industry ignores this too.

And if that is the case, then the notion that the car should not only react to its own sensors - but should also signal HUMANS outside the car as to the car's impairment - who will also forever be human - is completely alien.

And you have offered ZERO evidence that the sensors failed. All you keep going back to is "muh headlights."

"b)unaware that those headlights themselves were not working as a sensor."

Based on... the bumps on your head? How do you know they weren't working? For that matter, the headlights aren't even the damn sensor! The CAMERA is. Again, there are THREE SENSORS that should have detected her:

1) LIDAR

2) RADAR

3) a) Far field camera

3) b) Near field camera

Now in the real world, where Occam's Razor applies, the ONLY hypothesis that you can think of is that ALL 3 sensors failed. Another possibility is that the algorithms failed. And given that the pattern rec is different for those three sensors, it is FAR, FAR more likely that the computer made a bad decision.

The fact that you're too stupid to realize this possibility tells me that you are the poster child for why we need self-driving cars. The fact that someone with your incoherence can operate a motor vehicle should be terrifying to every sane person.

The car has to avoid obstacles, not Vulcan mind-meld with them.

Another possibility is that the algorithms failed.

And? You can't even recognize that the video itself - before the collision - proves that the car was malfunctioning even before then. Because it should have been programmed to have already come to a complete stop already. And you're the one who is supposedly 'expert enough' to develop these systems?

The car has to avoid obstacles, not Vulcan mind-meld with them.

So basically your standard of success for a robocar is a psychotic deaf invisible hi-speed MasterBlaster from Thunderdome - as long as Master has no control.

From other articles I've seen, I speculate the camera was either messed up or the video has been processed. The actual area is reportedly much better lighted than the video shows. Also, the car was travelling at about 60 fps, so it must have had pretty crappy headlights.

I conclude that Eric is crap...let's strap him to the hood of a self driving car and let the chips fall where they may...

the Tempe PD does not determine fault.

Get real. The responsibility of every PD in a collision is to assess the circumstances and present its interpretation of who is at fault (and how the PD will testify) to the DA. Obviously only a court determines who is legally at fault.

What the PD is at least implying here is that the dead ped will be blamed and Uber will get off scot free because the PD will 'advise' the DA that the police will be testifying that Uber ISN'T at fault here. It's Corruption 101.

The human (and what a specimen it is) appears to be looking at her phone in the video, so if she's the backup and not just a warm body in the driver seat to comply with some regulation, she was shirking her duty.

Never do anything for the first time.

Do whatever you want for the first time. But don't do it on people who haven't consented to the risk. Maybe we should just go out and inject unwilling people with experimental vaccines? Hey, it will be good for humanity in the long run and they have the risk of getting the disease anyway? That is the same logic here.

This is why logic will NEVER be able to resolve questions that involve a risk spectrum.

While you are not making an unreasonable argument, others who are diametrically opposed to your position are also making reasonable arguments.

This phenomenon pops up frequently in sundry contexts, so it should at least give you pause before advancing your own arguments so vehemently.

When these situations arise, the most likely outcome is regulation, which, from an individual perspective, is likely to be suboptimal.

As far as I'm aware, driverless cars are and will be regulated by elected or appointed officials. It will necessarily become a political issue. When this happens, your consent is irrelevant.

This is why you will need to look at the issue more closely. Luckily, driverless cars will slowly win out, your type will die out, and society will be better off for it.

Luckily, driverless cars will come right after you get your jet pack. Sadly, your dream of losing your freedom and privacy in the name of safety will likely have to be fulfilled some other way.

NO, NO, NO, I want my flying car first and then my robot girl friend...then my jet pack...how many times must I tell you this!

Any pedestrian who crosses a roadway at night without lights or reflective clothing has already consented to the risk of being struck by a human-piloted vehicle (particularly if they refuse to use a lighted and marked crosswalk). How is that any different?

If one fatality is enough to ban self-driving cars, all cars should be banned tomorrow.

Autonomous cars don't have to drive better than humans to have a better safety record. They're never distracted, can see 360 degrees and have a very much smaller decision envelope than humans have.

Besides I've never consented to be subjected to all those learning-to-drive teenagers on the road.

"Besides I've never consented to be subjected to all those learning-to-drive teenagers on the road."

I don't believe that's true, it's one of the many things you (admittedly, implicitly) consent to when you get a driver's license and agree to abide by the rules of the road.

Not that I necessarily agree with John, I think driverless cars will be safer in my lifetime, if not immediately.

And that is different from self-driving cars how?

It isn't, and I wasn't suggesting it was, he was.

just get Eric off the road for a couple of hours and watch the fatality numbers improve dramatically!

So, where do the tech companies get the right to test these cars on the public?

In this case, the state literally gave them the right to test the car on public streets.

Glad you're on the bandwagon of common sense curtailment of liberty and free markets.

With that attitude, everyone would still be living in caves. There are always risks associated with progress. Every new thing, every single thing, introduces risk.

It isn't quite accurate to say the human condition is to seek, and to try new things, because we know that certain individuals fall to the extreme end of the 'open to new experiences' personality trait. Thankfully, that is a small minority.

Kneel before self-driving Zod

Nice wooden shoes you got there, Hans.

What right do humans have to drive when we know for a fact that they cause 90% of all car crashes?

Philosophical question:

If a self driving car fails (for whatever reason) to see a person crossing the road, despite its advanced sensor array, and the person behind the wheel is looking in their lap, texting on their phone, was the accident avoidable?

Accidents by definition are unavoidable.

That's not really true.

Actually it is true, since events are only designated accidents once they have occurred, and you can't avoid something that has already happened.

So everything is unavoidable.

Or maybe more strictly, everything either does not exist or is unavoidable. So, determinism basically.

This thread was unavoidable...

Eventually.

Car collisions are avoidable, accidents are not.

And I'm saying that's not even technically correct. Say I'm reading my phone while driving, and hit someone. That could be avoidable, but it is still an accident because it wasn't done intentionally, which is the definition of an accident.

An event isn't called an accident until after it has happened. If someone intentionally steers their car into oncoming traffic and causes a crash, it wasn't an accident. If someone nods off behind the wheel, swerves into oncoming traffic, and causes a crash, it was an accident. Many cars these days are coming equipped with collision detection systems, not accident detection systems.

So your issue is based on an argument that all events that have already happened, cannot be changed, and so therefore accidents are unavoidable because they are not classified until after the fact?

accidents are unavoidable because they are not classified until after the fact

Correct, an accident isn't an accident until it has happened.

"Correct, an accident isn't an accident until it has happened."

Which seems to imply that a collision is a collision before it occurs. Or is it that even speaking of an accident, or a potential accident (god forbid), is verboten until such time as it occurs?

You'll have to provide a fuller treatment of your ontology. I'm sure it is dizzying.

Wouldn't it be easier to simply say that all (car) accidents are collisions but that not all collisions are accidents? Now all we have to establish is whether the collision was intentional or not to determine if it was also an 'accident'.

No need to get into paradoxical discussions of temporal semantic assignment.

"Accident' is just the euphemism we use because we don't want to admit our fallibility and to assuage our guilt..

But, when a self driving auto is involved, of course we wish to assign blame to an inanimate object.

Car collisions are avoidable, accidents are not.

Relevant.

That's not true. "Accident" also refers to a potential future harm to someone from a specific course of action with a preventable negligence factor. So at least some accidents are certainly avoidable.

Can you plan to have an accident? If you can't, then how can you plan to not have an accident?

Of course. Just walk around blindfolded.

Then we should stop calling crashes "accidents."

What is it when the engine compartments (most likely the batteries) explode and crater the front end like on 101 yesterday?

Poor maintenance.

Traffic collision. Accident implies there's no one to blame.

Here's a better video of the crash site - https://youtu.be/CRW0q8i3u6E - crash location at 0:34

The actual site is far better lit than the Uber video hints at. No question in my mind - the human 'monitor' is partially to blame here if they actually have real intervention responsibility/capability. It wasn't an accident. There was time and light for human to see and stop.

The video could have better lighting than the person in the car sees though. Someone at night in dark clothing with no light is virtually invisible. I would expect the robo-car to have better sensors and faster reaction time though -- they need to improve them if not. Pedestrians need to use lighted crosswalks.

Pedestrians need to use lighted crosswalks.

I've seen the street view of that intersection/'crosswalk'. Ignoring the legal right of way, which doesn't mean shit to most drivers when the lights turn, it was actually safer to cross where she did. Where she crossed - two lanes - looking in one direction - where there's a large safety median. At the intersection - seven lanes - looking in four directions - no median at all - no markings on road that it is a pedestrian crosswalk - lights timed for cars not peds.

Just one more 'sprint dodge or die' type crossing that is designed by drivers for drivers where peds get hit all the time.

Oh - and the streetlights at that intersection are entirely for the drivers to see where the corners of the intersection are - NOT to see pedestrians already in the crosswalk crossing seven lanes of traffic at night. Once she gets two lanes into that 'crosswalk', she is less illuminated than where she did cross.

Rough daytime street view of actual collision location

daytime view of that intersection/'crosswalk'

"Keep Testing Self-Driving Cars, Even if They Kill People"

Should read: "especially if they kill people, particularly bicyclists"

If these things start killing cyclists, even I might have to reconsider my aversion to them.

Increased cycling deaths is almost a certainty, given the way cycling infrastructure is designed in this country.

I like the graph, because it subtly reminds people that the death happened in the Valley of the Sun! Come Visit Beautiful Tempe! Enjoy the many world class golf courses of Scottsdale. Go get a Sonoran Hot Dog at the Phoenix location of the Tucson-Based, James Beard Award Winning, Guero Canelo. Live it up.

It really should have another graphic for context that lists the leading causes of death in Arizona:

1) Heatstroke

2) Exposure

3) Eaten by a Gila Monster

4) Old

5) Spontaneous Human Combustion

You missed melanoma.

It's definitely on the list, along with starving to death in a Maricopa prison camp.

Where does "meth lab explosion" fit in? Or is that more a New Mexico thing?

Don't hear about it as much anymore.

They run those out of tents on city property mostly these days.

5) Didn't kill John McCain, but 4) may yet get the job done.

Not until someone finds his phylactery.

I keep telling people that it's buried in the Vietnamese camp where he was a POW. His first death was in that camp.

Guero Canelo?

Is Tempe where Calexico is from?

No, Calexico is from Tucson. As is Guero Canelo. Phoenix just stole one of our most famous restaurants.

Omelettes, eggs, you do the math.

So you're saying Ducey is a shitbag politician?

Eh, he's okay. He has a lot of dumb shit, but not particularly worse than Napolitano or Brewer. Also he was CEO of Cold Stone.

Can't say I've followed his shit since I left though.

We hold them to such high standards...

At least he's not Hillary.

Self Driving cars are not going to be safe much less safer than human drivers outside of very controlled conditions anytime soon. AI is being way oversold.

http://www.atimes.com/article/.....oadblocks/

These promotions rely on public ignorance of what the new technology can actually do and what it can't. This allows the imagination to run free about its future benefits. The beneficiaries of the hype are promoters of a brand or company, or ambitious entrepreneurs looking for the next big thing to raise money.

This is where the awareness and quick response of a human driver comes into play and where the response of a computer making the decisions is quite another matter. And this is the skill that differentiates race-car drivers from the rest of us - and computers from all of us.

The more potential events that a computer has to react to, the more difficult the ability to program becomes. Just imagine city traffic in most countries of the world where road conditions can physically change hourly, not to speak of traffic patterns.

These issues limiting truly self-driving cars are lost in the hyped hope for wonderful things like reducing traffic deaths, allowing incapacitated people to use cars, and saving on the cost of professional drivers.

Though I can also tell by their usage of "program" that they also misunderstand AI pretty significantly.

In the case of driving a car, for example, the driver responds to traffic conditions based on reactions that are conditioned by previous experience and calculation of least risk moves.

...

The computer has none independent of what it has been programmed to do. So in human terms, it is like having an idiot where every move is determined by a human contact.

This also shows that he does not have any understanding of what ML is. My guess is he probably thinks that self-driving cars use hand written rule systems. This does not prove or disprove the current efficacy of self-driving cars either way, but it does show his article to be founded on a misunderstanding.

Self driving cars use an elaborate rule system. That makes them more elaborate but it doesn't make them fundamentally different than a handwritten set of rules. They are dumb machines. They don't think for themselves and don't do anything their program doesn't tell them to do. To the extent they "learn" it is by following set rules about how to store and react to situations they have encountered. They will never on their own figure out a solution to a situation. At best they will see a situation, fuck it up and then learn not to do that again should that situation ever arise.

Machine learning is not just learning a big set of rules and building up a large repository. It can be, but in this case they are using a different system. And in this case they are able to generalize to previously unseen events based on situations that have been seen already. This has been shown and done successfully many times at this point. And has led to situations where machines discover things humans had not even previously noticed.

They are not as smart as some people say they are, but many people misunderstand it in general.

But that is not the same as driving. It saw things in a confined set of parameters. Learning in a restricted environment is not the same as doing it in the entire world.

That's true, but the difference between creating driving algorithms vs machine learning is huge. In the case of true ML, you cannot tie specific results to a well-defined algorithm that can be tweaked. Just like with humans, ML results are evident, but how the decision was made to react in one manner versus another isn't known.

Take how Google taught a computer to recognize pictures of cats: not by trying to directly program things like the general size and shape of cats, but by providing a great number of cat pics and telling the ML program which ones have cats and where the cats are.

They then test it by giving it pics of cats and ones with no cats and seeing if it finds them.

I forget the actual results, but the point is that when the results correctly identified a cat or lack thereof, one can say it's correct X% of the time, but you can never know nor really find out exactly which details from training and from test were used in any prediction.
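To make the train-then-test workflow described above concrete, here is a minimal sketch. It is not Google's method: the "photos" are synthetic two-number feature vectors invented for illustration, and the model is a simple nearest-centroid classifier rather than a neural network. All names (`make_examples`, `train`, `predict`, `accuracy`) are hypothetical. What it shows is only the shape of the process: nobody writes a rule for what a cat looks like, the program is handed labeled examples, and its quality is reported as "correct X% of the time" on examples it never saw.

```python
import random

def make_examples(n, seed):
    """Synthetic stand-in for labeled photos: two made-up numeric features each."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        is_cat = rng.random() < 0.5
        center = (2.0, 2.0) if is_cat else (-2.0, -2.0)
        features = (rng.gauss(center[0], 1.0), rng.gauss(center[1], 1.0))
        data.append((features, is_cat))
    return data

def train(examples):
    """Learn one average feature vector (centroid) per label from labeled data."""
    sums = {True: [0.0, 0.0, 0], False: [0.0, 0.0, 0]}
    for (x, y), label in examples:
        total = sums[label]
        total[0] += x
        total[1] += y
        total[2] += 1
    return {label: (t[0] / t[2], t[1] / t[2]) for label, t in sums.items()}

def predict(model, features):
    """Pick the label whose learned centroid is closest to the features."""
    def squared_distance(centroid):
        return (features[0] - centroid[0]) ** 2 + (features[1] - centroid[1]) ** 2
    return min(model, key=lambda label: squared_distance(model[label]))

def accuracy(model, examples):
    """Fraction of examples where the prediction matches the true label."""
    correct = sum(predict(model, f) == label for f, label in examples)
    return correct / len(examples)

train_set = make_examples(500, seed=1)   # labeled examples the model learns from
test_set = make_examples(200, seed=2)    # separate examples it has never seen
model = train(train_set)
test_accuracy = accuracy(model, test_set)
print(f"correct on {test_accuracy:.0%} of unseen examples")
```

A toy model this small is easy to inspect, since it is just two averaged points. The interpretability problem raised above shows up with large neural networks, where the learned parameters number in the millions and no single one maps to a human-readable rule.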

In short, what people are saying is that because these vehicles rely on machine learning, no one is actually at fault; the object is at fault. Since it wasn't 'programmed', its self-learned rules are at fault, so in situations where the machine decides to kill someone it's a no-fault situation.

Sounds ideal if you're the company that put these on the road.

John, you're not exactly the most cognizant person when it comes to AI. In this thread and before you've expressed clear animosity to delegating any duty, let alone driving. Forgive me for saying so, but you give off a paranoid aura. You don't trust others to drive, but you don't trust a computer to do it either. I'm fairly certain you're not the best driver on Earth and probably not even in the top 1% of drivers in America. You've just avoided having an accident so far. Or you have had one, and are being disingenuous regarding your own driving ability.

Furthermore, there are differences between the self-driving cars and how they approach the problem of autonomy. Some use an elaborate rule system that seeks to avoid accidents by sharply delineating what the car may do. And some, like Tesla and comma.ai, use machine learning, which is fundamentally different. They don't have a rigid playbook: they are making decisions in a game that has rules.

My driving ability has nothing to do with it. Maybe I am a terrible driver. But it is a risk I happily take. And the risk of other people being bad is a risk I take as well. Why? Because my freedom is worth more than the small risk to my safety that comes with driving. If you think safety is more important than freedom, go become a totalitarian because if it is more important here, it is more important in every other context.

Once self-driving cars become measurably safer than you, if you take the wheel yourself, you will statistically be putting everyone else on the road at a greater risk, endangering their liberty. Today, do you feel it is your right to drive while stoned or intoxicated, because ... freedom? That will be the proper analogy at some point in the future.

Depending on how old you are you might not need to worry about it. Due to difficulties with machine learning of anticipating what others will do, self-driving cars will take much longer than most popular media people predict. But it will happen. The benefits to cities are just too great to ignore.

I am a safer driver than an old person. Should old people be prohibited from driving? Sorry but marginal improvements to safety do not justify taking away people's freedom.

Genetic algorithms and other machine learning come up with novel solutions to problems all the damn time. They even learn how to cheat.

I remember one time an algorithm turned an FPGA (digital device) into an analog controller by exploiting the non-linearities inherent in the underlying transistors. That's something no human would ever think of.

The AlphaGo saga is worth reading. There's plenty of breakdown about what happened for the person who doesn't play Go. But essentially, it performed novel moves in a game that is thousands of years old, surprising the best player in the world (Lee Sedol) with its unpredictability. It also made some very poor moves when he challenged what he thought the computer's decision-making process was. In the end the computer won 4 out of 5 games.

It will never cease to amaze me that a group of people who ostensibly understand the point of emergent order in I, Pencil, are completely convinced that that same emergent order cannot work with the simpler task of driving.

Go is still a set of parameters.

Like driving. Do you believe missile defense can ever work? That's a helluva lot harder problem than driving.

The number of parameters is infinitely larger than a chess game. And I think missile defense can work but will never be foolproof or effective enough that I would want to bet my life on it. I would want it because it is better than nothing. Well, self driving cars have to be better than me driving.

Go has more than 10^172 combinations. What was that you were saying about infinitely larger?
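For anyone who wants to check that figure, here is a quick sketch. It assumes the number comes from the usual back-of-the-envelope upper bound: each of the 361 points on a 19x19 board is empty, black, or white, giving 3^361 board configurations. That bound includes illegal positions, so the count of legal positions is smaller, but either way the number is huge and finite.

```python
# Upper bound on Go board configurations: 3 states per point on a 19x19 board.
BOARD_POINTS = 19 * 19            # 361 intersections
upper_bound = 3 ** BOARD_POINTS   # Python integers are arbitrary precision

digits = len(str(upper_bound))
print(f"3^{BOARD_POINTS} has {digits} digits, so it is on the order of 10^{digits - 1}")
```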

And this is who the author is

Kressel's parents and sister died in The Holocaust during World War II, after which Kressel emigrated to the United States. He entered Chaim Berlin High School in 1947 and graduated in 1951.[4]

Kressel earned a bachelor's degree in physics from Yeshiva College, a master's in applied physics from Harvard University, an MBA from The Wharton School at the University of Pennsylvania and a Ph.D. in material science, also from the University of Pennsylvania.[1]

Career

Kressel joined RCA Laboratories in 1959, and spent 23 years there.[3][5] He was in charge of development and commercialization of research developments in a variety of fields including light sources, light detectors, and integrated circuits. The development of the first practical laser diodes and the first epitaxial silicon solar cell are also attributed to him.[3] He eventually became vice president of solid-state electronic research and development.[1][3]

Kressel joined Warburg Pincus in 1983.[1]

I doubt he is as ignorant of the basic rules of programming as you are claiming.

This is not the basic rules of programming. ML is a paradigm that people frequently misunderstand. In his article he used an example of machines not learning from experience. Learning from experience is the definition of Machine Learning.

Also, I have no doubt that he is an intelligent person, but he makes statements here that seem to fundamentally misunderstand machine learning.

I disagree. I think you are overselling machine language. Just because it is intricate doesn't get rid of the fundamental limitations of it.

What are the fundamental limitations of it, and what unique quality do humans possess that a computer does not? Because I can prove to you that everything computable is capable of being represented using this system. Whether we are there or not yet is a good question, but that capability is known.

What is the situation that falls outside the realm of computability here?

What are the fundamental limitations of it, and what unique quality do humans possess that a computer does not?

To see outside the rules of its program. Go read Penrose's book "The Emperor's New Mind" sometime. Penrose destroyed the strong AI position over 20 years ago. Human intelligence is not machine intelligence. Human intelligence is boundless. A machine intelligence is bounded by the rules it has because it is impossible for a system to demonstrate its own consistency or prove all its elements. (Godel's Incompleteness Theorems) That is a fancy way of saying machine logic is not self-aware. It can't step outside itself and break its own rules the way a human brain can.

I will go read that book. Until then, here is a page giving many years of argumentation for and against the idea in that book

You can find more if you wish. But Penrose did not definitively prove that the human mind is fundamentally unmodelable. In particular, he did not prove in any rigorous sense that the human brain is capable of breaking outside of the limits of Computability.

Also, "That is a fancy way of saying machine logic is not self-aware" is not an accurate summation of Godel's Incompleteness Theorem.

He absolutely did. You can keep believing in strong AI all you want. But until it happens, you are just believing in the tooth fairy.

And you're believing in a 20 year old, debunked paper.

You're confusing strong AI with something that matters. We're trying to build a task oriented machine here, not a sexbot who can also fulfill your ontological needs.

Strong AI is the only thing that can fulfill this task, Skippy. Read the link below about the failure rates and how the rate of improvement has flattened out. They are hitting the limits of machine language here.

Bullshit. This is an expert system task. Don't go throwing around terms that you do not understand.

You are using Machine Language again, and now I'm confused whether you think that's what ML is. Or if you're confusing Machine Language, which is just the low level language used to specify commands to a processor, and Machine Learning which is a family of algorithms.

The facts are what they are, Skippy. The improvement rates have stopped. It is not that hard to get a car to drive around a perfectly mapped course in great weather. They have done that. Now they are hitting the hard problem of getting a car to drive in all conditions. And they are not solving it. And they won't solve it. You people might as well believe in angels coming down to drive all of our cars because that is just as likely as a robotic car that is going to perform as you claim.

And you've got a citation to back up that claim, right? Yeah, didn't think so.

What is the situation that falls outside the realm of computability here?

My guess? Signalling to the world of humans that the vehicle was clearly and provably impaired here (well before the 'collision'). Obviously can't 'get feedback' from the dead pedestrian - but why did she step into the street to cross it? She was within two feet of crossing the multi-lane street -- NOT suddenly appearing from the median/offstreet. She wasn't drunk or impaired herself - even though that was deliberately imo insinuated into the story by mentioning her as 'homeless'.

How 'different' does a robocar look from a human-driven car - at night - to a pedestrian - if the robocar doesn't 'need its headlights to limit its speed'? If there is a difference, does the difference make it more likely that the pedestrian will step out and try to cross the road believing it is safe?

Of course, this issue can be tested for computability by positioning techies of all ages IN the street at various positions and seeing how effectively they can get out of the way of a robocar travelling at various speeds and with different ways of those robocars signalling their presence to notify the techies as to when to begin their own collision avoidance.

Or maybe the whole point is to test whether this is possible by using everyone EXCEPT those who understand the experiment.

As long as I get to position you an equal number of times with a car driven by a person. Deal?

You STILL don't even think hitting pedestrians is an issue here do you?

Do you actually work in that industry? Because if you do, you should be fired.

Ridiculous straw man.

Nobody wants pedestrians to die. There is a difference between noting that the pedestrian is ultimately responsible for their own safety by not crossing the road in front of a car, and wanting pedestrians to die.

No there isn't a difference. Americans have created a transport system that is uniquely deadly to pedestrians. Blaming peds for their death - while refusing to even contemplate who is ACTUALLY responsible in aggregate - is exactly the same as wanting peds to die.

And finally, an obvious question: Is the average consumer actually interested in programming his car before every trip?

I don't even understand his point here.

Or being reliant on a computer connected wirelessly to the "cloud" with its potential reliability issues?

This is a very relevant question though. The big fear we should all have is government mandating this. That's the fear. I like driving my car damn it.

A self driving car is placing your life in the hands of the reliability of the code that drives it. Would you bet your physical safety and possibly your life on the code in your desktop computer working properly every time, every day for years on end? That is what you are doing with a fully robotic car.

We already do that with our cars. They are complex computers and we rely on them functioning to keep us alive every single day. This is true of many machines in modern life.

Does the technology we currently have scale to the complex task of driving? I don't know. But your point does not provide any meaningful criticism.

No. We bet the reliability of our cars. If the code in my car fails, it won't start. Code failing is not going to cause it to kill me in most cases.

That's untrue. The code can fail and prevent deceleration. The code can fail and the anti-skid technology breaks and you spin off and die. The code can fail and the power steering suddenly has an issue, causing you to not turn as much as you thought and crash.

This isn't even counting mechanical failure, which is something people seem to find tremendously less frightening than computer failure, even though it tends to be quite catastrophic.

Sure, one piece of code doing one simple thing can fail. In a robotic car any one of millions of lines of code can fail, causing the car to do real harm. Stop pretending that having a car with a fly by wire accelerator is anything like having a car that drives by code. They are orders of magnitude different. And you know it.

I do, and you can find in this article alone many examples of me expressing skepticism at the technology being ready quite yet.

My issue is you present everything as absolute, to the point that you contradict yourself. You have a mentality that things are possible to Point A, but no further. Without giving any particular justification that further improvement is impossible other than emotional arguments.

Your entire argument is predicated on the emotional argument that humans are completely distinct, and in all ways are unemulatable.

Your entire argument is predicated on the emotional argument that humans are completely distinct, and in all ways are unemulatable.

It is not an emotional argument. It is a conclusion based on observation. They have never recreated human consciousness or even explained it. Until they do, I am skeptical of anyone who claims they will.

You are the one who is emotional here. You are convinced that something that has never been done and has never been explained how it could be done both can be done and will be done. Get back to me when it happens. Until then, you are just telling yourself stories.

We should program it in Rust then. Then it will be 100% super safe.

And race condition free.

Which do you consider to be a more difficult task based on the number of inputs to be evaluated and acted upon, driving a car at 35 miles an hour, or landing a jet plane at a speed between 150 and 200 miles an hour?

Guess which one computers currently do safely hundreds of times a day?

The car all day long. The jet plane involves a set of variables that are the product of the laws of nature and can be predicted. Driving the car involves interacting with human beings who can never be predicted in the same way the forces of nature are. Moreover, the number of possible circumstances in which you land a jet plane is infinitely smaller than the number of circumstances you can drive in. The runway is always straight. The runway never has potholes. There is never a wild animal on the runway or another plane trying to cut you off or a pedestrian who has wandered out on it. You don't land on the runway if the weather is not within a given set of parameters. The list goes on and on.

The problem is external conditions, which driving a car on a street has far more than a plane landing.

Driving the car, obviously. Presuming of course that the plane is landing on an FAA approved landing strip with tight aircraft/traffic controls and the airstrip isn't surrounded by homeless camps with drunks wandering out onto the runway at all hours.

Wait, was this a trick question?

Not everything needs to be about Seattle, Paul.

Everything is about homelessness.

Society gets more out of development of self-driving cars than just the prospect of self-driving cars themselves. Much of the modern safety and convenience technologies that are in production today are part of the autonomous development. Adaptive cruise control, forward collision warning systems, automated parallel parking algorithms, etc all have been developed with self-driving or increased automation in mind.

I'm certainly no expert on the subject, but I suspect various other industries have benefited from the technology development that has gone into autonomous car development.

There is a place for this technology. But its place is for cars to help humans be better drivers. The future is a car that is easier to drive because it steps in when you screw up. That is a real improvement. The future is not a robotic car. Humans are great at judgement and adapting but terrible at monitoring. Machines are terrible at judgement and adapting but great at monitoring. A robotic car gets things exactly backward and expects the machine to judge and adapt and the human to monitor or, if not, accept the consequences when the machine fucks up. Things like adaptive cruise get it right. They leave the judgment and adapting to the human and let the machine monitor and step in when the human screws up.

If humans are so great at judgement, why do we have so many DUIs?

When compared to the total number of drivers, there are not.

About 1% every year. Is that an impressive performance in your mind?

99% sounds very safe. Driving is a very safe activity. If it were not, people would be afraid to do it. Notice, other activities like hang gliding and riding a motorcycle are much less common than driving. Why? Because they really are risky. Driving isn't. There are 300 million people in this country who drive over a trillion miles every year. And less than 50,000 of them are killed in an automobile accident. Your chances of dying in an automobile accident are very small. They are just "large" in relation to your chances of dying of anything else, which are even smaller. We live in an incredibly safe world. Reason often notes how safe we are today compared to the past. But then totally forgets that fact when talking about shiny new robotic cars. Then they act like everyone is destined to die young in a car accident.
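For a rough sense of scale, here is the arithmetic behind the round numbers in the comment above, as a quick sketch. The inputs are the commenter's own bounds ("less than 50,000" deaths, "over a trillion" miles), not official statistics, so the result is a worst case under those figures. The last line converts the article's "about 90 people" per day into a yearly count for comparison.

```python
# Round figures taken from the comment above; treat them as assumptions, not official data.
DEATHS_PER_YEAR = 50_000               # upper bound: "less than 50,000"
MILES_PER_YEAR = 1_000_000_000_000     # lower bound: "over a trillion miles"

# Worst-case fatality rate under those bounds, per 100 million miles driven.
deaths_per_100m_miles = DEATHS_PER_YEAR * 100_000_000 / MILES_PER_YEAR

# Equivalent view: how many miles are driven per death, at minimum.
miles_per_death = MILES_PER_YEAR / DEATHS_PER_YEAR

# The article's figure of about 90 deaths per day, annualized.
article_deaths_per_year = 90 * 365

print(f"at most {deaths_per_100m_miles:g} deaths per 100 million miles")
print(f"at least {miles_per_death:,.0f} miles driven per death")
print(f"article's daily figure implies about {article_deaths_per_year:,} deaths per year")
```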

So you would be satisfied if pilots got you to your destination 99/100 times? That's safe, right?

Nice try, but 1% DUI does not equate to a passenger jet pilot failing to land safely 1% of the time.

That article makes me think of a guy with a dialup modem explaining why we'll never have video over the internet.

The sensors will change. The amount of information will change. The quality of information will change. The ability to anticipate will change. At some point, the cars will be much much safer than the average driver. Because the average driver is also still learning from their experiences as well!

The discussion below of game-playing algorithms is interesting. When do self-driving cars become viable? When they are better than the average driver? Drivers in the 90th percentile? The best race-car drivers in the world?

AI based algorithms have historically taken a long time to evolve from beating average players to beating grand masters. Do self-driving cars have to go that far before going into widespread use?

Playing a game is not the same as driving on public roads. Even something like chess that has trillions of positions is a small problem when compared to the number of variables involved in driving. Moreover, machines that play games are given perfect perception of the game. A self driving car not only has to know how to play the game, it has to know how to see the pieces. And that is a very difficult problem.

Teaching a computer to play chess is not the same as teaching it to drive.

Based on... Right, you got nuthin.

Based on driving. The world is a lot bigger and more complex than a chessboard

They can follow "the rules of the road" much better than the human pilots I encounter in commute traffic every morning. That's a 90% improvement right there.

If we used today's methods of approval we would have never crossed the Atlantic, let alone left Africa.

You sailing across the Atlantic is your choice. You are assuming the risk. You testing your self driving car on public roads is forcing other people to assume the risk. Sorry, but you should not be able to do that. You can put yourself at any risk you like, or at least you should be able to do so. But you should not be able to put other people at risk without their consent.

Many incompetent drivers get on the road every day and cause fatal accidents. They too are making others assume risk. And yet, there's no issue. I think this incident is the unfortunate collision (ayyy) of the victim doing something stupid by crossing that awfully lit road and Uber's questionable deployment of their tech.

Sure they do. And that is why things like reckless driving are crimes even if it doesn't cause a wreck. You are putting people at risks they didn't consent to. Testing a self-driving car is no different. And don't tell me you know it is safer than humans because if you knew that for sure, you wouldn't have to test it.

Someone driving on a road doesn't consent to being rear-ended, whether it's by human or machine. I think Uber might be culpable; indeed, if one of their cars causes an accident, Uber should be punished just like any human would.

And don't tell me you know it is safer than humans because if you knew that for sure, you wouldn't have to test it.

I don't. Why would you think I would?

Someone driving doesn't consent to someone creating a risk beyond what a normal, safe driver does. So when I drive recklessly or drunk or whatever, I have committed a crime even if I haven't yet caused a wreck. Why? Because I exposed people to risks they did not consent to taking. The same logic applies here.

We don't know if self-driving cars create more risk than the norm. Perhaps Uber's implementation does.

Well that's certainly what the miles between intervention metric is saying.

Yeah, and just think of all the civilization-destroying and genocide that would never have happened, huh?

Naturally they'll never just directly admit it, but you know that most of these fake-ass "libertarians" at Reason believe everyone should eventually be forced to use a self-driving car and not be allowed to operate their car themselves.

Nothing says freedom like turning control over your movement over to a tech corporation and the government that regulates it in the name of safety.

How the fuck do the same people who rightfully say security and safety are no justification for violating people's privacy and autonomy turn around and claim everyone needs to give up their privacy and control of their cars because the roads will be safer? These things are an enormous threat to privacy and civil rights. And these dumb asses don't care because it will make things safer. How does one engage in that kind of cognitive dissonance?

Because the Free Market wants self-driven cars, and you pesky Luddites are holding back Progress. We'll force you to accept the freedom of not being able to drive your own vehicle for the greater good of society.

Worship the Market. Submit to the Tech Companies. Their rule over you will be just, because they are not the government.

The security state is way cool as long as it is run by corporations and there is lots of free porn and pot!!

And diverse food from around the globe. Gotta have our food trucks.

You know that the tech companies wouldn't be the one stopping me from driving my car. Your situation presupposes a law coming into existence.

Because there is no such thing as regulatory capture and money and political power has never combined to take away our freedom. In what universe do you live?

And I'm saying that's still the issue of government and that we need to focus our actions on destroying them. Because your situation is that you're sarcastically blaming the free market while contriving a situation with tremendous amount of government force.

You are just telling me to believe in something that is contrary to all past experience. Sure, this time money and desire for safety and uniformity won't prevail unlike every other time in human history. Moreover, you won't be able to stop it because you have already given away the argument. The moment you say that these things are justified because of safety, then you have lost the argument. If safety is more important than freedom, then there is no way to say these things shouldn't be mandated.

Yup. Companies totally don't use money and power to influence laws. Go on with your dream.

Even without a law, it seems likely that they'll just attempt to stop producing cars that allow me to drive myself. When that day comes, you'll be there, BUCS, screaming about how I'm a Luddite for having a preference different to your own, and you'll demand that I be made a serf by these companies. And you'll dance with glee, because, for libertarians serfdom is fine as long as it's not the government subjugating the masses.

No, he and I will be laughing at you screaming that your purchase preferences must be provided for. So much for that free market.

Yes. We should be free to buy whatever car we like. And you hate that but we are the ones who are against freedom.

No, you think that the carmakers have to provide you with the car you want. Sorry, I couldn't see your freedom behind all that coercion.

No I don't. I am quite sure someone will build the car I want provided I can pay for it and the law will allow me to buy it. The only thing that will stop that is people like you making it illegal for me to drive.

You're going to have to show where I've ever called for outlawing your right to drive. Feel free to come back and admit your failure.

No you are not calling for that. But, the people behind this are and will if they can. I really don't give a shit if you want a robotic car. They are not going to work well and the novelty will wear off very quickly. So, absent government mandates, they are not replacing human drivers anytime soon. The danger is the government will step in to ensure they do in the name of safety and cash for the people making them.

So you were making a bullshit claim.

"for libertarians serfdom is fine as long as it's not the government subjugating the masses."

This is actually probably true for a lot of libers, although I don't think it's intentional. Big corporations are the wrench in my dream of libertopia. In a totally free market, the same types of assholes who exploit governmental powers now would just go into business and exploit them there. A corporation and a government are the same in that they're pieces of paper that shield the flawed humans running them from liability. They both need to have internal checks and balances that do not depend on one-upping each other like they do now.

A corporation and a government are the same in that they're pieces of paper that shield the flawed humans running them from liability.

This.

"A corporation and a government are the same in that they're pieces of paper that shield the flawed humans running them from liability."

That's the best sentence I've read on the internet in a long time.

Because for the masses, getting to work in 90% less time will be far more important to them than giving up control over driving! You won't be allowed to drive because you, as a human, don't have the processing or motor skills to drive like the automated cars managing busy intersections without slowing down. You want to try and drive with the cars at the 6:47 mark of that video?

It's the exact same reason you give up substantial freedom and control if you want to fly somewhere at high speed. Because you are not good enough to do it on your own. It's also the same reason we have speed limits.

If the demands of driving and keeping up with auto-driving traffic don't overwhelm human abilities, then there won't be any reason to take away your freedom. So if you are correct, and the whole thing is a sham and untenable, then you have nothing to worry about! Seriously - your driving freedom will only ever be at risk if the self-driving cars work really, really well. And in the best case, that is still many years away.

You are not getting to work in 90% less time. That is an absurd dream. It is going to take you longer to get to work, because these things are going to drive as your betters think they should and that won't be fast. You are going to trade your freedom for safety and end up with neither.

If the demands of driving and keeping up with auto-driving traffic don't overwhelm human abilities, then there won't be any reason to take away your freedom.

I own my freedom, not you, asshole. Technology really can turn Libertarians into full on totalitarian statists.

If they believe people should be forced to do anything they aren't libertarians at all.

It must be exhausting for autonomous vehicle opponents to spend so much time calling for a ban on all moving vehicles because of the deaths they cause every day.

GIVE ME BACK MY PM LINKS YOU FUCKS

FUCK YOU ROBBY

FUCK YOU BRITCHES

KMW: The commentariat seems rather upset that the PM links have been discontinued. Maybe we should bring them back

Robbie: I'm not doing it. They always mock me for not being able to change a tire- so they can go fuck themselves. Besides it's just some guy named "yellow tony" who's upset

Welch: It's fine. "Yellow Tony" is actually Gillespie's sock puppet

"Yellow Tony": I'm increasingly drawn to the conclusion that those who discontinue PM links are intrinsically opposed to modernity. All old divides between "left" and "right" are meaningless, as the real divide is between those who support PM links and those who don't

I'm all for a link war. Let the threads run red with the blood of the anti-linkers!

Shouldn't you or your Red version have been able to warn us that this was going to happen? Do they have PM links in your alternate reality/future or wherever you are from?

I'm not sure why I play along with you Tonies anyway...

Links are ubiquitous in my home dimension since the Libertarian Moment™ occurred. You should be asking this dimension's future me if he's been lying about the reason he's here. After all, why would this dimension's future me let such a calamity befall the innocent if he were here in good faith?

Police determined that the pedestrian was at fault.

[...]

Already, Arizona Gov. Doug Ducey, a Republican, has suspended Uber's self-driving car testing privileges in his state, citing concerns about public safety. That move is "a major step back from his embrace of self-driving vehicles," notes Melissa Daniels of the Associated Press.

While I agree it's unfortunate that politicians immediately swing into action on something they don't understand, this is the Way It Is.

My town has seen a rash of pedestrians hit by vehicles-- where the pedestrians are almost always at fault. Politicians swung into action and made it only legal to turn when pedestrians were in the crosswalk.

Yes, you read that right, their plan to save lives was to make it only legal to turn when pedestrians were in the way.

It makes a certain amount of Darwinian sense.

Good point. Maybe the city council is doing something about the homeless.

If you want the roads to be safer, raise the driving age to 21, require an actual content-based course on the relevant physics, and charge a few hundred dollars for the license. These are already proven common-sense measures to prevent deaths. After all, fewer cars and fewer drivers means fewer deaths.

Driving is a privilege not a right.

and charge a few hundred dollars for the license.

Racist.

*drops microphone*

If you want a gun, raise the gun-buying age to 21, require an actual content-based course on the relevant physics and safety features, and charge a few hundred dollars for the license to own one. After all, fewer guns and fewer gun-owners mean fewer school-shootings.

A gun is a privilege, not a right.

I see a rather large flaw in that analogy.

I still say I'm waiting for self-driving technology to be successfully deployed on vehicles that are locked to two steel rails.

http://spectrum.ieee.org/cars-.....ing-better

The two remaining companies, Waymo and GM (with its Cruise subsidiary), accounted for over 95 percent of the autonomous miles travelled in 2017, tallying more than 480,000 miles. Their average disengagement rate was once every 2,900 miles, although the most recent data for GM suggests that it is approaching Waymo's level of competence.

While a single disengagement every 5,000 or 6,000 miles appears to be thousands of times better than some startups, both Waymo and GM have announced the imminent start of commercial autonomous ride-sharing services. Waymo recently put in an order for "thousands" of self-driving Pacifica minivans with Chrysler, to start operations later this year, while GM said it will mass-produce autonomous Chevrolet Bolts without steering wheels or manual controls by 2019.

In a fleet of a thousand driverless vehicles, each driving a few hundred miles a day, even Waymo's best ever performance (a single disengagement in over 30,000 miles of driving last November) would correspond to multiple failures daily.

Waymo needs to improve its average disengagement rate by two or three orders of magnitude to avoid having a crowd of disappointed customers. However, even by its own figures, Waymo's performance barely improved year on year. In 2016, it had a disengagement every 5,130 miles. In 2017, it averaged 5,600 miles. And remember that by Waymo's definition, each of those disengagements represents a failure of its system to cope with real world conditions. (Unlike last year, Waymo did not report how many of its 63 disengagements in 2017 might have led to an actual accident).

It is possible that Waymo put its technology into more challenging scenarios in 2017, thus generating a higher level of disengagements. But it is also conceivable that, about every 6,000 miles or so, today's technology encounters a situation or combination of problems that it simply cannot handle without human assistance.

One solution could be to deploy a teleoperation system such as the one being developed by Phantom Auto, where a remote human driver can take control for a short time to navigate an unexpected obstacle or assist passengers. Another possibility is that the road to our driverless future is going to be bumpier than expected.
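The fleet arithmetic in the excerpt above can be checked with a rough sketch. The fleet size (1,000 vehicles) and the mileage intervals (30,000 miles best case, 5,600 miles as the 2017 average) come from the excerpt; the 200 miles per vehicle per day is an assumed stand-in for "a few hundred miles", and the function name is made up for illustration.

```python
def expected_daily_disengagements(fleet_size, miles_per_vehicle_per_day,
                                  miles_per_disengagement):
    """Expected disengagements per day, assuming a constant rate per mile."""
    fleet_miles_per_day = fleet_size * miles_per_vehicle_per_day
    return fleet_miles_per_day / miles_per_disengagement


FLEET_SIZE = 1_000    # "a fleet of a thousand driverless vehicles"
MILES_PER_DAY = 200   # assumption: low end of "a few hundred miles a day"

# Waymo's best reported stretch: one disengagement in over 30,000 miles.
best_case = expected_daily_disengagements(FLEET_SIZE, MILES_PER_DAY, 30_000)

# Waymo's reported 2017 average: one disengagement every 5,600 miles.
average_2017 = expected_daily_disengagements(FLEET_SIZE, MILES_PER_DAY, 5_600)

print(f"best case:    {best_case:.1f} disengagements per day")
print(f"2017 average: {average_2017:.1f} disengagements per day")
```

Under these assumptions the best-case interval works out to roughly 6.7 disengagements a day across the fleet, and the 2017 average to roughly 36, which is consistent with the excerpt's "multiple failures daily".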

These things are going to be the jetpack of the 21st century. In 2030, Ron Bailey will still be writing about the wonders of the fleet of robotic cars that are coming soon.

It would be nice if Prius drivers would "engage" at least once per block.

It was an unfortunate accident,

No it was NOT an unfortunate accident. It is a direct outcome of poor road/street/grid design, complete domination of all transportation grid/network decisions by 'cars' to the exclusion of any competitive 'mobility', and increasingly aggressive/distracted/crappy drivers (which is what robocar is emulating in order to 'fit in').

'Unfortunate accident' is just what one says when one doesn't give a shit and one doesn't want to see any of that as a problem.

Nothing to see here. Move along.

It is a direct outcome of poor road/street/grid design, complete domination of all transportation grid/network decisions by 'cars' to the exclusion of any competitive 'mobility', and increasingly aggressive/distracted/crappy drivers (which is what robocar is emulating in order to 'fit in').

Are you being sarcastic here?

Not at all.

Roads are built for cars. To the extent they are unsafe for other means of movement, it is because they are not built enough for cars and we try and pretend those other modes belong on roads with cars.

"JFree" should be posting as "JSerf". That's what he is, and that's what he wants all the rest of us to be.

Roads are built for cars.

Roads are PUBLICLY OWNED LAND. Yes obviously they are diverted entirely for the private benefit of cars here in the US. But that is merely the cronyism that leads to poor design of those roads and the entire grid.

The PURPOSE behind why so much land is kept in the public sphere rather than privatized is because MOBILITY is deemed (correctly) a natural inalienable right of humans - and that is prohibited on private land as 'trespassing'. Once we deem only one form of mobility to be realistically exercisable, then we have infringed on that natural right of mobility.

There is no upper limit to how much land 'cars' need for their own use. Jack up the speed and the 'designed' traffic - and the laws of physics say that the amount of land they will need goes up exponentially with it. That's not a problem with rails or with air - because of the way they are designed to use land. It's a problem with cars - and we refuse to think about that.

Aren't you just making his point? If roads aren't built to accommodate any mode of transportation other than driving, doesn't that just mean that planners have chosen to prioritize car traffic to the exclusion of all else?

In a thread some months ago someone argued that the self-driving car couldn't 'see' what a human could see. I (somewhat skeptical of the technology in that I think its practical implementation is much further off than the proponents claim) argued in favor of the technology, pointing out that the sensor array on a robot car should be far superior to that of a human driver. It should see in various spectra of light, have radar, proximity sensors, and even environmental sensors that might predict things like ice, slippery roads etc.

The fact that the self-driving car in this case couldn't "see" an object moving across its path on an obvious collision course-- beyond the visual cone of the low-beam headlights-- should give everyone pause.

The fact that the self-driving car in this case couldn't "see" an object moving across its path on an obvious collision course-- beyond the visual cone of the low-beam headlights-- should give everyone pause.

Agreed. I don't know if it's a sensor issue, a bandwidth issue, or something else. It could easily be the underlying Machine Vision, which is still a very complex issue and still nowhere near solved, no matter what evangelists tell you.

That inability to 'see' is less concerning to me than inability to even know IF one is able to see or how far one is actually able to see.

As a human, what is your reaction as a driver when the kids in the back seat decide to play 'let's cover daddy's eyes'?

It is pretty obvious with this Tempe homicide that the robocar's reaction is to keep driving blind. Presumably because that is deemed a problem that (like fog/night/etc) only applies to human drivers and hence needn't be incorporated in constant on-road testing/feedback of all the hardware/sensors/software/etc. Not end-of-day testing.

As for warning other humans (like pedestrians) that 'hey I'm impaired' - it's pretty clear to me that the entire purpose of robocars is to ignore ALL consideration of humans outside the vehicle entirely. Everything outside the car is merely an obstacle - and it's pointless to use flashing lights or high beams or horn honk or anything else like that when everything else is basically a garbage can.

BTW - for those of you who think 'the market' will actually work at figuring out which robodrive technology succeeds. Tell me this - let's say the technology itself diverges into - oh - a predominantly 'Driving Miss Daisy' type error vs a predominantly 'Grand Theft Auto' type error. Which it will since in the real world tradeoffs have to occur and designs always have to tilt one direction or the other.

Is that going to be up to purchasers of a vehicle to decide? What about pedestrians or cyclists or pretty much everyone other than the driver? No choice for them? Whatever drivers decide is the world they will have to live and die in?

Given the way tort and product liability law work, there is no way on earth any company will sell a self-driving car that can violate any traffic law absent the need to avoid killing someone. All of them will drive like Driving Miss Daisy whether you want them to or not.

A self-driving car takes away your freedom to determine how you get somewhere and how much risk you are willing to assume. Maybe you really need to be somewhere and consider the risk associated with speeding worth taking. With self-driving cars you don't get to make that decision. The car will. If that means you are late to something really important and suffer great harm, fuck you, you gave up your freedom to make that choice when you gave up your ability to drive.

That is a huge loss of autonomy and freedom. Yet, a lot of Libertarians are so in love with technology and shiny objects that they don't even see it let alone object to it.

no way on earth any company will sell a self-driving car that can violate any traffic law absent the need to avoid killing someone.

They have already blamed the pedestrian here. And bluntly - that is EXACTLY what happens most of the time. The pedestrian gets blamed. Because 'overdriving your headlights' (which is what caused the accident) - 'slowing immediately when your vehicle can no longer see beyond its sensors' (the robobehavior that caused the accident) - is not a behavior where a 'law' can be written.

So we will instead force humans OUTSIDE the robocars to obey laws that are written for the robocars - which will of course be able to comply with those programming instructions.

That is not the point. The first time a robo car speeds and causes an accident, there will be hell to pay. There is no way the government or the public is going to allow people to sell cars that can be programmed to break traffic laws. Understand, to the fucking weirdos who are promoting this, the idea of every car driving like Miss Daisy in a totally uniform and "safe" manner is most of the appeal. Allowing these cars to deviate from the traffic laws would totally ruin it for most of the people who support them. So it won't happen.

The first time a robo car speeds and causes an accident, there will be hell to pay.

Ya sure ya betcha. The Tempe car was going 38 in a 35 mph zone. The pedestrian has already been blamed. No hell to pay anywhere. BET on that getting pushed more and more in the car direction - because there is no fucking way human drivers want to be held accountable either for '3mph over the limit'. And they got the power to make that happen. They've already made that happen here in the US. It is 3-5x more fatal to be a pedestrian here than in other developed countries - and we don't even walk.

And the even bigger point is - WHATEVER limit the cars are programmed to can easily BECOME law. And only humans outside the car will be expected to and forced to comply. Robocars that are programmed to 'fit in' with a universe of crappy/aggressive/distracted drivers will turn those drivers into exactly the self-interested bloc that can make sure the laws get changed in that direction. That's actually what I meant by the Grand Theft Auto type error - where robodriver IS the law and pedestrians/others are merely - well - obstacles to a high score.

Yes, the cars' programming will be whatever the law is. That is my point. And that will be Driving Miss Daisy.

The law will be whatever the cars are programmed to. That will be Grand Theft Auto. It works both ways.

As long as we are more concerned about 'collisions'/'crashes' than about 'fatalities', then it will more likely be the Grand Theft Auto scenario that plays out.

In urban areas, pedestrians are involved in 2% of crashes but account for 25-40% of traffic fatalities.
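As a rough illustration of how lopsided those figures are, the ratio of fatality share to crash share can be computed directly. The percentages are the ones quoted above and are not independently verified here; the function name and the "over-representation" framing are only illustrative.

```python
def overrepresentation(fatality_share, crash_share):
    """How many times larger a group's share of fatalities is than its share of crashes."""
    return fatality_share / crash_share


CRASH_SHARE = 0.02  # pedestrians: 2% of urban crashes (figure quoted above)

low = overrepresentation(0.25, CRASH_SHARE)   # using 25% of fatalities
high = overrepresentation(0.40, CRASH_SHARE)  # using 40% of fatalities

print(f"Fatality share is {low:.1f}x to {high:.1f}x the crash share")
```

On those numbers, a crash involving a pedestrian is over-represented among fatalities by a factor of roughly 12 to 20 compared with crashes overall.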