The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

How to Make Friends and Influence Judges

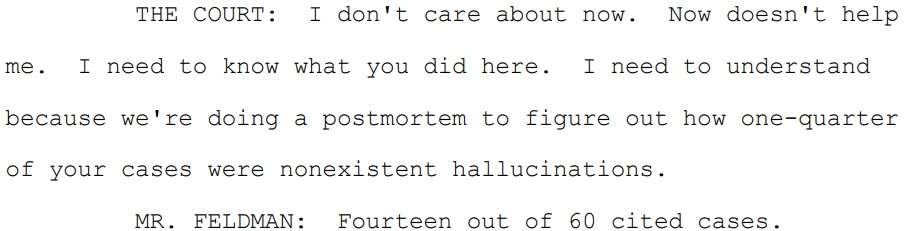

From the transcript of a hearing in Flycatcher Corp. Ltd. v. Affable Avenue LLC:

Unsurprisingly, the judge (Judge Katherine Polk Failla [S.D.N.Y.]) viewed this not as an important correction, but as Mr. Feldman's "attempt[] to minimize his responsibility." For more on what happened in the case, see the post below, Lawyer's Repeated Filings with AI Hallucinations Lead to Default Judgment Against Client.

Nope, still don't understand why anyone, when using an AI to compose something like this, would not check each and every citation before submitting/publishing.

How can you not know that the technology is fallible? If it saved you that much time up front, is it really all that time-consuming to manually check those 60 citations? Hope is not a plan. In this case, the hope was that no one else would double-check those citations.

He even admits that he has access to free Westlaw/Lexis through a library but he just decided not to use it and thought running it through three separate AI programs was somehow a better solution.

If I were in his position, I don't think it would even have occurred to me to quibble about the difference between 14 and a quarter of 60. I'd have been too busy groveling.

Of course, I'd never have been in his position, because I don't trust LLM output further than I could throw a server farm.

Well... ackshually, Judge, it was 23 and 1/3 percent, not a quarter.

That's a bold strategy, Cotton. Let's see how it pays off for him.

Same.

The thing about "vibe coding" is that it ignores the fact that only 5-10% of development time is spent writing original code. The rest is verifying, integrating, documenting, and distributing. That is what scares me about this sudden embrace of AI: if you don't know how it works, how do you even know it's broken, let alone begin to fix it? In this case, the lawyer had a clear, reasonably foolproof verification path to avoid rank humiliation but chose an easier one. Sure, even if the AI cites a real case, the context might be wrong. But at least then you've only made a stupid argument, rather than facing fraud sanctions.

Using AI for initial code generation has forced me to really focus on how to validate what it generates. When I think of all the time I've spent coding up command-line switches, it's a godsend, letting me focus on true functionality. I still worry about how best to test, but at least I can also use AI to take on the initial drudgery of that.

Is Feldman a Reason commenter?

Well your honor, 14/60 is really only 7/30 when you reduce the fraction.

Isn't that what you are supposed to do in court? Correct factual errors and minimize wrongdoing? If the lawyer said, "your honor, my client only killed four people, not five", would the judge accuse him of minimizing responsibility?