The Volokh Conspiracy

Mostly law professors | Sometimes contrarian | Often libertarian | Always independent

From Prison to Helping the FBI to an Apple TV Miniseries … to Google-Hallucinated Libel?

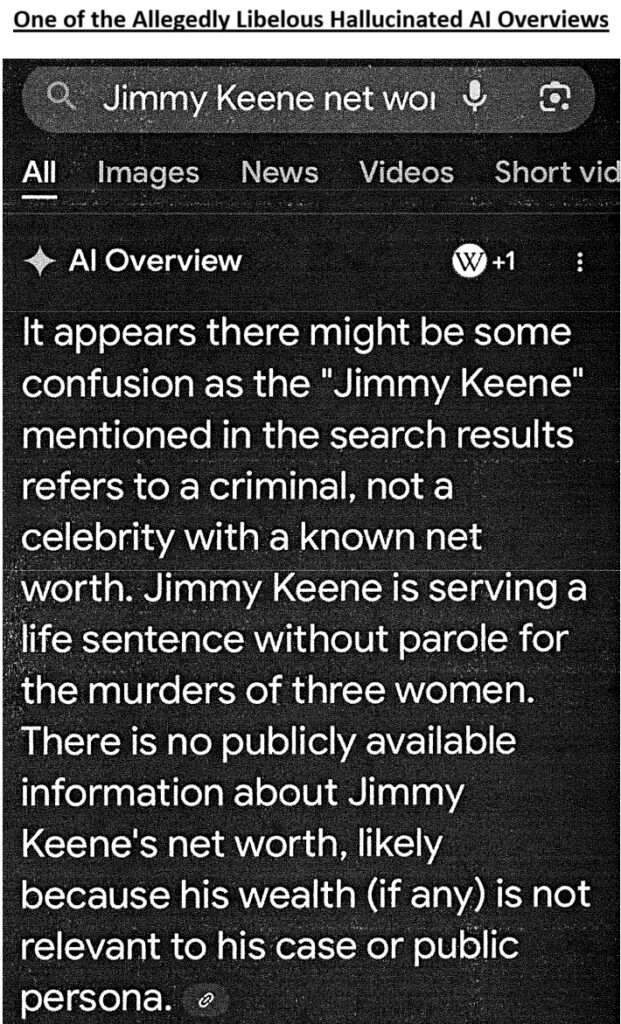

Jimmy Keene, on whom the Apple TV miniseries Black Bird was based, sues Google alleging its AI hallucinated accusations that he's a convicted murderer serving a life sentence.

From the Complaint in Keene v. Google LLC, just removed yesterday to federal court (N.D. Ill.); Keene's memoir discloses that his work with the FBI happened while "he ended up on the wrong side of the law and was sentenced to ten years" in prison for drug conspiracy:

Plaintiff has written and published several novels and is best known for his memoir about his life and experiences, titled In with the Devil: A Fallen Hero, a Serial Killer; and a Dangerous Bargain for Redemption (2010). Plaintiff is well known for working with the FBI to uncover the crimes of the serial killer Larry Hall who was suspected of murdering many women. By helping the FBI secure evidence and proof against Hall, Plaintiff, working as an operative for the FBI, absolved himself of any wrongdoings and assisted in convicting Hall for multiple murders….

Plaintiff is an executive movie producer and consultant on various film projects and has deals with Paramount Pictures. Plaintiff owns a real estate development company and several other businesses….

On or about May 24, 2025, through May 26, 2025 …, Plaintiff was made aware, though friends and acquaintances of his, of statements that Google had posted and that Google had stated as fact on its own platform Google.com…. [Google] stated that Plaintiff "is serving a life sentence without parole for multiple convictions, according to Wikipedia." … The Wikipedia article regarding Plaintiff … did not state that Plaintiff is serving a life sentence without parole for multiple convictions.

Google LLC also stated through its platform, between May 26, 2025, and May 30, 2025, that "Plaintiff is serving a life sentence without parole for the murders of 3 women." Additionally, between May 26, 2025, and May 30, 2025, when asking about Plaintiffs' net worth Google stated through its platform that the Plaintiff "in the search results refers to a criminal, not a celebrity with a known net worth."

Plaintiff made a complaint to Google on May 27, 2025 informing Google about the false statements made by its platform. Google privately apologized to Plaintiff, stating that the statements were an unknown error made by their Artificial Intelligence Platform.

Google proceeded to edit their Platform and AI which resulted in more false statements…. Plaintiff contacted Google again and informed them about their defamatory statements; Google proceeded to apologize to Plaintiff again.

By repeatedly acknowledging and then apologizing for the false statements made on their platform Google acknowledged their existence, yet allowed such untruthful statements to continue to be published through their platform over a period of at least two months; Google demonstrably failed to take reasonable steps to correct misinformation which continued to be published with Google's actual knowledge as to the falsity of the statements….

For more on how libel law would apply to such cases, see Large Libel Models: Liability for AI Output? For more on the four earlier such cases in U.S. courts, see Battle, Walters, Starbuck, and LTL.



Google "stated" and Google "posted" is doing a lot of work there.

Perhaps a lot less work after two rounds of Google humans saying "oh, you're absolutely right and we're very sorry," followed by absolutely nothing changing.

Though the stakes are doubtless a lot lower, this is exactly why I've been extremely concerned from the get-go about the use of AI in self-driving cars. "We sure didn't TELL it to suddenly lurch into oncoming traffic on a clear sunny day -- it just happened and there's really no way to know why. We'll dump in a few more TB of training data and cross our fingers that this extremely unfortunate incident doesn't happen again...."

To conclude that the company consciously deploying the "I dunno -- it just does what it does" technology is categorically not responsible for bad outcomes when it just does what it does is unworkable, and not generally how we think about risk allocation.

This is just rehashing the old "do we need elevator operators?" debate. Self-driving cars should be (and in some cases already are) provably a lot safer than human drivers, so to a large degree it shouldn't matter why the computer occasionally messes up.

Also: the analogy between self-driving cars and LLMs isn't very good. The underlying technology is quite different, although there has been some discussion of bringing LLMs into the self-driving space. But precisely because of safety, self-driving cars are built with a lot more telemetry and ability to understand what is going on than with LLMs. The main problem in the self-driving car space is that Tesla has been really irresponsible with how they market their self-driving capabilities and how willing they are to push the limits on safety. A bunch of companies seem to have capabilities roughly as good as Autopilot these days, but you don't see them conflating it with full self-driving, or people over-relying on it and crashing full speed into road barriers or other vehicles.

Yeah, I’m not sure whether self-driving cars are trustworthy but I’m pretty sure a lot of human driven ones are not.

Robots' main advantage is that we humans set the bar pretty low.

Formula 1 drivers driving normal cars on normal roads probably are able to operate in the 99th percentile (that's a handwave) of human drivers, but if they do cause a wreck we don't just shrug it off because on average they're so awesome -- we hold them responsible just like everyone else.

They have dramatically different inputs, of course, but at a simplified level they're crunching large amounts of data and inferring/predicting optimum outputs through mechanisms that by their very nature are not able to be understood or readily troubleshot in the way conventional control systems always have been.

But in any event, my broader point was that companies shouldn't be able to shrug off liability simply because they didn't explicitly instruct their product to produce a given result, when they consciously deployed a product designed to operate without human instruction and known to produce convincing-sounding but painfully errant results. I recognize I may not be aligned with the cool kids on this.

Forgot to add, I do agree with this part:

Section 230 reasonably immunizes tech companies for other people's speech--if you're annoyed at the speech, go after the speaker not the platform. But when the speech can't be attributed to some third party, it's reasonable to hold the companies themselves accountable. Maybe we're in the phase where "no reasonable person would believe that statement to be true" because all of the LLMs hallucinate so much, but we probably need some clearer notions of how accountability is supposed to work here.

In the case of tech platform liability, Congress passed a law that (in my opinion) did a pretty good job of balancing interests. Given how dysfunctional Congress is at this point, though, we're probably just going to get a bunch of court-made law instead. (Which is bad!)

This was Google’s speech. Google chose this tool as its method of conveying its own speech. This was not content posted by some third party.

Not in the slightest. The fact that Google chose to use a tool to assist it, whether a quill pen, a typewriter, or something else, in no way diminishes its responsibility. Google remains wholly responsible for whatever “typos” its tool makes and cannot pass them off as not its responsibility.

Suppose Google’s CEO wrote and posted the content. Would you say the same thing? Google didn’t write or post anything, this human being did? If you’re right, no corporation can ever be liable for anything. No corporation ever does anything itself. It acts only through agents.

Given this, why should it make any difference whether Google used a human agent or a machine agent? In both cases, it is Google acting, through an agent.

If you are viewing it on a screen, you should consider it entertainment, not reality.

There must be a lot of this sort of error, and AI makes sure the error persists. I look forward to seeing an article on author, actor and evil reverend James Jones.

Jimmy Keene (the writer/producer/FBI operative) is the opposite of a criminal.

These days ChatGPT has this pervasive disclaimer:

Is that enough to defeat a claim that whatever the AI coughs up is "stated as fact" by the AI's publisher / owner?

No, see pp. 500-04 of Large Libel Models.