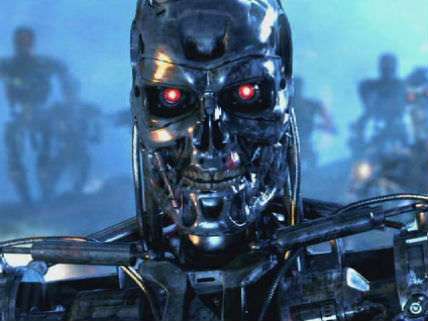

Killer Robots Should Be Banned, Says Human Rights Watch

Warbots might actually behave more morally than soldiers.

Next week, the Meeting of Experts on Lethal Autonomous Weapons Systems (LAWS) will gather under the aegis of the Convention on Conventional Weapons at the United Nations in Geneva. Various non-governmental organizations behind the Campaign to Stop Killer Robots are urging that a new international treaty banning warbots be promulgated and adopted. As part of the effort to outlaw killer robots, Human Rights Watch has issued a report, Mind the Gap: The Lack of Accountability for Killer Robots, offering arguments for a ban.

According to Human Rights Watch (HRW), the chief problem is that not only would soulless machines be unaccountable for what they do, but so too would the people who deploy or make them. One of HRW's chief objections is this claim:

The autonomous nature of killer robots would make them legally analogous to human soldiers in some ways, and thus it could trigger the doctrine of indirect responsibility, or command responsibility. A commander would nevertheless still escape liability in most cases. Command responsibility holds superiors accountable only if they knew or should have known of a subordinate's criminal act and failed to prevent or punish it. These criteria set a high bar for accountability for the actions of a fully autonomous weapon. …

…given that the weapons are designed to operate independently, a commander would not always have sufficient reason or technological knowledge to anticipate the robot would commit a specific unlawful act. Even if he or she knew of a possible unlawful act, the commander would often be unable to prevent the act, for example, if communications had broken down, the robot acted too fast to be stopped, or reprogramming was too difficult for all but specialists.

In my column, Let Slip the Robots of War, I countered:

Individual soldiers can be held responsible for war crimes they commit, but who would be accountable for the similar acts executed by robots? University of Virginia ethicist Deborah Johnson and Royal Netherlands Academy of Arts and Sciences philosopher Merel Noorman make the salient point that "it is far from clear that pressures of competitive warfare will lead humans to put robots they cannot control into the battlefield without human oversight. And, if there is human oversight, there is human control and responsibility." The robots' designers would set constraints on what they could do, instill norms and rules to guide their actions, and verify that they exhibit predictable and reliable behavior.

"Delegation of responsibility to human and non-human components is a sociotechnical design choice, not an inevitable outcome of technological development," Johnson and Noorman note. "Robots for which no human actor can be held responsible are poorly designed sociotechnical systems." Rather than focus on individual responsibility for the robots' activities, Anderson and Waxman point out that traditionally each side in a conflict has been held collectively responsible for observing the laws of war. Ultimately, robots don't kill people; people kill people.

In fact, warbots might be better at discriminating between targets and initiating proportional force. As I noted:

The Georgia Tech roboticist Ronald Arkin turns this issue on its head, arguing that lethal autonomous weapon systems "will potentially be capable of performing more ethically on the battlefield than are human soldiers." While human soldiers are moral agents possessed of consciences, they are also flawed people engaged in the most intense and unforgiving forms of aggression. Under the pressure of battle, fear, panic, rage, and vengeance can overwhelm the moral sensibilities of soldiers; the result, all too often, is an atrocity.

Now consider warbots. Since self-preservation would not be their foremost drive, they would refrain from firing in uncertain situations. Not burdened with emotions, autonomous weapons would avoid the moral snares of anger and frustration. They could objectively weigh information and avoid confirmation bias when making targeting and firing decisions. They could also evaluate information much faster and from more sources than human soldiers before responding with lethal force. And battlefield robots could impartially monitor and report the ethical behavior of all parties on the battlefield.

The concerns expressed by Human Rights Watch are well-taken, but a ban could be outlawing weapons systems that might be far more discriminating and precise in their target selection and engagement than even human soldiers. A preemptive ban risks being a tragic moral failure rather than an ethical triumph.

Disclosure: I have made small contributions to Human Rights Watch from time to time.

No one expects the 3 laws of robotics, which are not harming humans, obeying orders, protecting a robot's own existence, and killing humans.

No one expects the Spanish Inquisition.

I expected it once, but it didn't show up.

I expect an inquisition soon, it's why I always wear clean underwear.

"West World - Where Nothing Can Go Worng"

I learned my lesson from Yul Brynner when I was 11, Ron. NO deathbots.

I learned my lessons from the Boardwalk. NO prohibitions.

You owe me $50.....rent.

"The robots' designers would set constraints on what they could do, instill norms and rules to guide their actions, and verify that they exhibit predictable and reliable behavior."

Someone needs to re-read I, Robot.

Or just visit Itchy and Scratchy Land.

OTOH, they are probably going to be programmed by the people who built the Obamacare software.

And set on missions by the cowboys who choke people, shoot them in the back, and hit them 50 times after they have already surrendered.

They can't surrender if they're already dead! You libertarians, always trying to have it both ways.

Meeting of Experts on Lethal Autonomous Weapons Systems

Is this a late April Fool's article?

No?

OK, then let me ask this: Why do these experts hate poor people? I mean, armies are disproportionately made up of people from the lower and middle classes, right? So why do we want to continue to put them in harm's way on the battlefield when a hunk of metal can do the job?

Because the military is a jobs program?

Why do *you* hate poor people?

What with your support for automating these people out of a job and lining the pockets of rich capitalists.

Killing poor people in unnecessary wars is the only fair way to counteract overpopulation.

You know who else would love to have created warbots....

Bender?

Brigitte Warbot?

Elizabeth Nolan Brown?

Tom Cruise's character in Edge of Tomorrow

Who knew that was actually a decent movie? I was stunned at the end when I realized I liked it.

I know. It irritates me I like anything Cruise appears in.

+1 Precog

This is a tad premature. The current state of the art is that robots still fucking suck at doing anything non-trivial.

Jesus, dude, I have it. We can't trust humans to honestly execute the Dueprocensor's duties, but we can design and program robots to do so.

WHILE 1 DO

PROCESS

END

PC LOAD LETTER

...the fuck does that mean??

FINGERS

COUNT

BREAK

GOTO FINGERS

POSSIBLE RESPONSE:

YES/NO

OR WHAT?

FUCK YOU, THAT'S WHY

FUCK YOU, CUT SPENDING

FUCK YOU, ASSHOLE

FUCK YOU

I see you know FUCKTRAN.

Why does it say Paper Jam when there is no paper jam?!?!?!?

C'mon, get with the 21st century:

import warbot

warbot.kill(target="terrorists", collateral_tolerance=0.9)

They kick ass at tic-tac-toe.

Reaper and Predator drones are pretty fucking good at killing. A little bit of AI (and some cover up when the AI fails) and we have a working killer robot!

//creds to LynchPin1477

import warbot

warbot.kill(target="terrorists", collateral_tolerance=0.9)

//killer robot complete.

The obvious solution is to simultaneously develop powered battlesuits that are capable of fending off killbot attacks. Then, at some point in the future, humanity and robots will stop trying to destroy/kill one another because of the futility of our efforts.

humanity and robots will stop trying to destroy/kill one another because of the futility of our efforts

Oh ProL, how naive you are. The only hope for peace lies in an alien invasion that unites us against a common enemy. Only then will man and robot live as nature intended. And just think of the economic boom all that destruction will cause!!

I suggest a tretente, among robots, aliens, and humans.

Good God, man! Give up an opportunity to join forces with our mechanical brethren and wipe out the Xenos scourge?

Then, when their backs are turned and they are engaged in their depraved robo-rituals of victory, strike them and claim the galaxy that is our birthright!

I clearly used this link on the wrong person. Here you go.

puh-leaz. Most starfaring alien races have far and away superior malicious worms to take over our warbots and enslave you.. er, us. Enslave us with our own machines.

Yeah but how much you want to bet those worms are allergic to Earth? They never see that coming, do they?!

You know, when we're taken over, I bet they don't pay any attention to us. Don't kill us, don't poison the world, don't do anything but whatever it is that interests them about the Earth.

I bet we ALL get anal probes. Warty will do well in this new society.

Only to make sure we're healthy.

I bet they treat us the way Christopher Columbus treated the Incas and Aztecs.

+1 Renegade Aristoi Captain Yuan

How about instituting a pre-programmed kill limit?

Yeah, who suffers consequences when a killbot kills a civilian? I mean, all those drone pilots are in prison for a good reason.

Indeed, then we could just send wave after wave of men, until the robots can kill no more.

Exactly! You have a future in the future armed forces....of the FUTURE!

Oh boy - here we go -

LET THE DORKFEST COMMENCE!

BTW why is talking about warbots totally acceptable but there's this huge stigma around whorebots?

What's the difference?

Placement of ports. Smell too, I'd wager.

Nah, they can dual purpose all of that. War/sex/cleanbot.

The American Puritan Culture! Of Violence and Sexfear!

/euroderp

As usual, John Sladek nails it:

http://en.wikipedia.org/wiki/Tik-Tok_(novel)

SF-ed the link! Anyway:

The novel gleefully satirizes Asimov's relatively benign view of how robots would serve humanity, suggesting that the reality would be exactly akin to slavery: robots are worked until they drop and are made the victims of humans' worst appetites, including rape. It also, like Sladek's earlier novel Roderick, mocks the notion of the Three Laws of Robotics and suggests that there is no way such complex moral principles could be hard-wired into any intelligent being; Tik-Tok decides that the "asimov circuits" are in fact a collective delusion, or a form of religion, which robots have been tricked into believing.

Molon labe

Lethal Autonomous Weapons Systems

Can I get the Mel Gibson version?

No, but they do have a Curly Howard model.

*beepboop nyuk nyuk nyuk*

As part of the effort to outlaw killer robots, Human Rights Watch has issued a report...

Er...yeah. Lots of luck with that, guys. You have a technology that will significantly improve an army's lethality without incurring losses of their own (with its attendant political fallout) and you think it'll be stopped because you issued a report. Yeah. Right.

It's reports all the way down.

Nobody but the media pays any attention to them anyways. That group jumped the shark a long time ago.

Is Rufus on this thread? Rufus, what year was your Saint Etienne manufactured? I was reading up on the history of those barrels and it sounds like your typical modern load produces enough pressure to bust their tolerances. Still, a real beauty, I'm sure.

I think HRW is more worried about the "they-took-our-jerbs!" phenomenon applying to soldiers than it is about the arguable immorality of killer bots.

The only conclusion that I can draw from this article is that Ronald Bailey is quite obviously a Cylon.

If he's not, he's obviously never been raped by a robot.

As part of the effort to outlaw killer robots, Human Rights Watch has issued a report...

better call saulbot

Seems like the simplest killbot would be a small explosive mounted to a little quadrotor drone. Enough awareness to move to its target quickly and go boom. No real ethics to it (any more than there would be with tossing the explosive). I guess if you wanted to be fancy, a way to recall it if the target runs into a school bus or something.

That said, much cooler would be a hornet swarm of tiny one-use tranq bots that have enough hive mind intelligence to measure target body mass and judge dosage and knock someone out for an hour or so. A future where war only eliminates those prone to allergic responses to tranquilizers.

Mr. President if you had word that there was a suspected terrorist on a school bus and you had a killer drone in the area, what would you have for lunch after you ordered the strike?