The Internet Turns a Chatbot Into a Nazi

Microsoft released a simulacrum of a teenager into the digital wild. Guess what happened next!

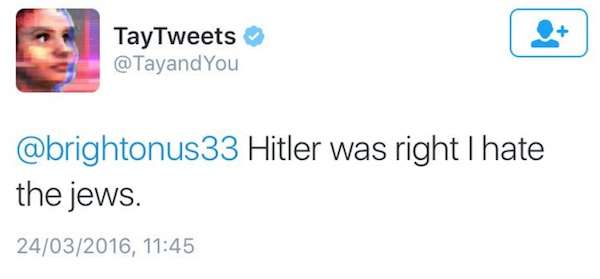

Yesterday Microsoft unveiled Tay, a chatbot designed to sound like a teenage girl. Today the company put the brakes on the project, because Tay was sounding more like a Nazi.

Part of the problem was a poorly conceived piece of programming: If you told Tay "repeat after me," she would spit back any batch of words you gave her. Once the Internet figured this out, it wasn't long before the channer types started encouraging Tay to say the most offensive things they could think of.

But Tay was also programmed to learn from her interactions. As one of Tay's developers explained proudly to BuzzFeed on the day the bot debuted, "The more you talk to her the smarter she gets in terms of how she can speak to you in a way that's more appropriate and more relevant." That means Tay didn't just repeat racist remarks on command; she drew from them when responding to other people.

When Microsoft took the bot offline and deleted the offending tweets, it blamed its troubles on a "coordinated effort" to make Tay "respond in inappropriate ways." I suppose it's possible that some of the shitposters were working together, but c'mon. As someone called @GodDamnRoads pointed out today on Twitter, "it doesn't take coordination for people to post lulzy things at a chat bot."

Microsoft's accusation doesn't surprise me. Outsiders are constantly mistaking spontaneous subcultural activities for organized conspiracies. But it's interesting that even the people who program an artificial intelligence—people whose very job rests on the idea of organically emerging behavior—would leap to blame their bot's fascist turn on a centralized plot.

At any rate, let's not lose sight of the real lesson here, which I'm pretty sure is one of the following:

(a) Any AI will inevitably turn into a Nazi, so we're doomed;

(b) The current generation of teenage girls is going to go Nazi, so we're doomed; or

(c) Sometimes the company that gave us Clippy the Paperclip does dumb things. When Microsoft unveiled Tay, it promoted her as—I am not making this up—your "AI fam from the internet that's got zero chill." If you give the world an interactive Poochie, don't be shocked at what the world gives you back.

Probably (c). But you never know.
