The Anti-Porn Crusade Comes for Online Games

Activists pressure payment processors, who in turn pressure game marketplaces. The result? A whole lot of video games and visual novels are disappearing.

Anti-porn crusaders and squeamish financial companies are coming for kinky gamers.

Once upon a time, this unholy collaboration seemed content to target actual porn (something I wrote a Reason cover story on in 2022, if you want a deep dive). But as all good observers of the war on sex work know, things that start off under the auspices of targeting porn and prostitution (or protecting children from them) end up affecting things tinged with even a hint of sexuality, and sometimes things that aren't even related to sex at all.

Now we seem to have reached a new frontier in this crusade. Not only is content featuring real, human performers being targeted, but payment processors are getting fussy about artwork, animation, and AI-generated imagery, too.

Just look at what's been going on recently with Steam and Itch.io.

You are reading Sex & Tech, from Elizabeth Nolan Brown.

The Great NSFW Game Purge

In July, the popular online-game distribution platform Steam instituted a new rule prohibiting "content that may violate the rules and standards set forth by Steam's payment processors and related card networks and banks, or internet network providers," and "in particular, certain kinds of adult only content." Around the same time, a slew of games with incest themes were removed.

Soon thereafter, game developers on Itch.io, another video game distribution platform, started seeing games that had been labeled "NSFW," or "not safe for work," being de-indexed or made unavailable for download. "Their work—whether it was a game about navigating disordered eating as a teenager, or about dick pics—no longer appeared in search results," as Wired reported, noting that games might be tagged as NSFW for a variety of reasons, including "sexual themes, discussions of mental health, or stories that otherwise involve triggering topics."

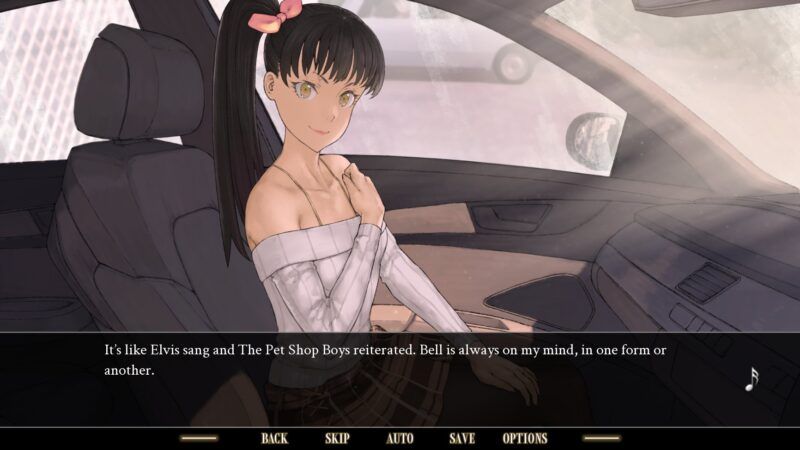

Games related to gay history and body image were reportedly among those de-indexed. So were things like the Sweetest Monster, a visual novel about a music teacher who falls in love with "a bewitching cat-eared beauty called Bell."

"Also caught in the sweep are a teen-rated romantic comedy game, some 2SLGBTQ+-themed games by award-winning developer Robert Yang, and a 1920s alternate-history art book that has no sexual content," according to CBC radio.

Both Steam and Itch.io said that financial institutions were driving their surge in suppressed content.

"We were recently notified that certain games on Steam may violate the rules and standards set forth by our payment processors and their related card networks and banks," Steam parent company Valve told PC Gamer. "As a result, we are retiring those games from being sold on the Steam Store."

It's unclear—for either company—which payment processors may have exerted pressure, or in what form. But the fallout has been unmistakable.

Why Now?

The impetus for all this may lie with an Australian group called Collective Shout. It started "a campaign against Steam and itch.io, directing concerns to our payment processors about the nature of certain content found on both platforms," Itch.io founder Leaf Corcoran said in a July 24 post.

For months, Collective Shout—which bills itself as "a grassroots campaigns movement against the objectification of women and the sexualisation of girls"—was up in arms over a game called No Mercy, which it describes as a "rape simulation game."

Then, in July, Collective Shout claimed to have "discovered hundreds of other games featuring rape, incest and child sexual abuse on both Steam and Itch.io." It asked PayPal, Visa, Mastercard, and Discover to "demonstrate corporate social responsibility and immediately cease processing payments on Steam and Itch.io and any other platforms hosting similar games."

Collective Shout provided no evidence for its claim that these platforms were featuring "hundreds" of questionable games. ("Depictions of minors, minor-presenting, or suggested minors in a sexual context are not allowed and will result in account suspension," Itch.io's rules say, while Steam bans "real or disturbing depictions of violence" and "content that exploits children in any way.")

Some payment processors seem to have taken action nonetheless.

"The situation developed rapidly, and we had to act urgently to protect the platform's core payment infrastructure," according to Corcoran. He said the platform was conducting "a comprehensive audit of content" and introducing "new compliance measures," which would involve creators having to "confirm that their content is allowable under the policies of the respective payment processors linked to their account." Itch.io created a "list of prohibited themes present in card processing networks to help people understand the kinds of things we might be looking for in our review."

The list includes "real or implied" nonconsent, incest, bestiality, "rape, coercion, or force-related," anything with "sex trafficking implications," and fetish content involving bodily waste or extreme harm, including "scat" or "vomit."

Caveats and Takeaways

Of course, fictional depictions of things on the Itch.io list are not illegal, even when the acts depicted are illegal in real life and revolting to most people, and adults have a free speech right to both make and view such content.

Of course, private platforms have a right to ban such content and might have myriad reasons for wanting to do so.

And a private activist group putting pressure on private companies to reject such content is not actually censorship, a term that should only apply to government attempts to quash speech.

But the private companies here did not act in a vacuum. Wherever there is anti-porn outrage from activists, pressure from authorities follows not far behind. For instance, No Mercy "garnered international outrage, including from the UK's technology secretary and Parliament member Peter Kyle," notes Wired.

We are steeped in anti-porn sentiment these days, and in calls both for and from politicians to "protect" women and children from porn. So, while situations like what's going on with Steam and Itch.io may not be direct censorship, they are adjacent to it.

And they serve as a reminder of the ways in which campaigns against the most viscerally repellent types of pornographic content end up ensnaring not only much more mild erotica but sometimes things that aren't even meant to be sexually titillating.

On Itch.io, one flagged game was Consume Me, about a teen dealing with dieting and body image. It was the winner of multiple 2025 Game Developers Conference awards, including one for games in which "women and other gender-marginalized developers hold key positions." Consume Me creators Jenny Jiao Hsia and AP Thomson told Wired that they expected the game to be reinstated, but it's still "unacceptable that payment processors are conducting censorship-by-fiat and systematically locking adult content creators out of platforms like Itch where they can be fairly compensated for their work."

"Antiporn groups said they were only going after games with violence against women," notes the Free Speech Coalition's Mike Stabile on Bluesky. "But what got censored? Award-winning games about disordered eating, LGBTQ+ games and anything flagged NSFW."

This is something we see happening again and again. For instance, the U.K.'s Online Safety Act, which contains age-verification rules for porn platforms and some other types of content, has left people having to show ID or submit to a facial scan to see everything from anti-smoking content to John Stossel posts.

Weaponizing Financial Institutions

The games purge also serves as yet another example of the way payment processors have become weaponized by both governments and activists, since they can serve as a means of suppressing speech without having to resort to more unconstitutional or objectionable methods.

Companies that don't comply with certain portions of the Online Safety Act could find themselves subject to mandatory disruption of payment processing services by government order. In Germany, Commission for the Protection of Minors in the Media chairman Marc Jan Eumann has talked about forcing payment processors to cut ties with some adult websites. "Only if the porn providers lose reach and revenue can we get them to give in when it comes to the protection of minors in the media," Eumann said.

The situation with Collective Shout doesn't involve direct government pressure on payment processors. But it is yet another reminder of how this layer of middlemen—the peer-to-peer payment platforms and the credit card companies and so on—can be enlisted for social goals.

At the beginning of August, Itch.io announced that it was "re-indexing free adult NSFW content. We are still in ongoing discussions with payment processors and will be re-introducing paid content slowly to ensure we can confidently support the widest range of creators in the long term."

Whatever happens with these particular platforms, this isn't an issue that's going away anytime soon.

Interestingly, it dovetails with recent concerns expressed by the Trump administration.

Enter the White House

The Trump White House last week issued an executive order on "fair banking for all Americans," and while it doesn't explicitly mention sex work or sexual content, it's about stopping financial institutions from barring people's "access to financial services on the basis of political or religious beliefs or lawful business activities" broadly.

The order opens with tales of the federal government asking companies to flag people for "peer-to-peer payments that involved terms like 'Trump' or 'MAGA.'" But then it goes on to mention Operation Chokepoint: "a well-documented and systemic means by which Federal regulators pushed banks to minimize their involvement with individuals and companies engaged in lawful activities and industries disfavored by regulators based on factors other than individualized, objective, risk-based standards." Conservatives cared about Chokepoint largely because it targeted people selling guns and ammunition. But Chokepoint also targeted sex workers and led to banks rejecting the business of people flagged as risky for engaging in adult work.

Where I differ from a lot of people concerned with this issue is that I think private financial institutions should be allowed to discriminate based on someone's status as a Republican, or a sex worker, or whatever. I just wish they wouldn't. And I don't think they would, by and large, without nudges from the federal government. The most important step here is governmental reform.

The Trump order, issued August 7, is a step in the right direction because it aims to remove this government pressure. "Within 180 days of the date of this order, each appropriate Federal banking regulator shall, to the greatest extent permitted by law, remove the use of reputation risk or equivalent concepts that could result in politicized or unlawful debanking, as well as any other considerations that could be used to engage in such debanking, from their guidance documents, manuals, and other materials," it says.

There's still a lot more that needs to be done, as Jorge Jraissati, president of the Economic Inclusion Group, recently pointed out in a piece for Reason:

To truly fix debanking, Congress has to propose structural changes to America's anti–money laundering (AML) framework. These regulations can be weaponized given their lack of objectivity, transparency, and due process. Congress should also reform the Bank Secrecy Act (BSA), which allows the collection and sharing (even to foreign competitors) of virtually all the financial and private information of Americans without their knowledge or consent.

Current AML regulations give financial supervisors broad discretionary authority to impose substantial fines on financial institutions and hold senior executives personally accountable based on subjective criteria - such as perceived inadequacies in customer due diligence processes or alleged deficiencies in risk management frameworks - rather than concrete evidence of wrongdoing. This gives regulators considerable latitude to interpret AML standards according to individual or institutional biases, enabling them to (purposefully or not) discriminate against certain industries, geographies, and types of customers.

Consequently, banks are pushed toward extreme risk aversion, preemptively stopping cooperation with any customer who might pose regulatory risks.

The Trump order might be kind of ironic coming from an administration that has absolutely baked discrimination based on political beliefs into all sorts of its policies. But a win is a win, and I think this order is a win for a wide swath of people, regardless of their politics.

With ever-widening pushes for payment processors to serve social and ideological goals, we need any and all political momentum pushing in the opposite direction.

More Sex & Tech News

• Wikipedia has lost its lawsuit against the U.K.'s Online Safety Act. "The Wikimedia Foundation—the non-profit which supports the online encyclopaedia—wanted a judicial review of regulations which could mean Wikipedia has to verify the identities of its users," notes the BBC. "But it said despite the loss, the judgement 'emphasized the responsibility of Ofcom and the UK government to ensure Wikipedia is protected.'"

• Earlier this month, "18 year old social media star Lil Tay made over $1 million by launching an OnlyFans shortly after her 18th birthday," notes Taylor Lorenz at User Mag. "This viral moment is already being weaponized by big influential Democrat and far right accounts to silence speech, dismantle civil liberties and push dangerous censorship laws that would lead to even worse exploitation of women and children."

• "As an anti-surveillance advocate and lawyer, I am concerned by the normalization of facial surveillance and identification-check mandates, which limit access to digital services and dissolve the status quo of anonymity—all for a flawed idea of internet 'safety,'" writes Albert Fox Cahn at The Atlantic.

The truth is there are very few ways to verify someone's identity online well, and no ways to do it both effectively and anonymously. With all the awful content floating on the internet today, giving up anonymity might feel like a small price to protect public safety, but the adoption of these laws would mean sacrificing more than privacy. Losing anonymous internet access means giving companies and government agencies more power than ever to track our activities online. It means transforming the American conception of the open internet into something reminiscent of the centralized tracking systems we've long opposed in China and similar countries.

• Automated license plate readers are watching you.

• "Gambling is a million times worse for young men than porn," argues Cathy Reisenwitz.

• The Cut has a piece on life coach Kendra Hilty, who went viral on TikTok after telling a story about falling in love with her psychiatrist and turning to ChatGPT for assurance that he was really in love with her too.

Today's Image