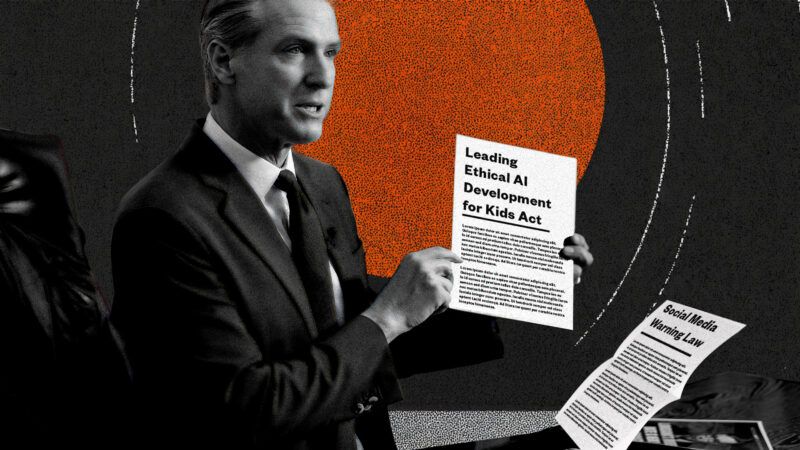

These 2 Terrible Tech Bills Are on Gavin Newsom's Desk

One limits children’s access to mental health services, the other mandates a black box warning, and both undermine users’ digital privacy.

The California state Senate recently sent two tech bills to Democratic Gov. Gavin Newsom's desk. If signed, one could make it harder for children to access mental health resources, and the other would create the most annoying Instagram experience imaginable.

The Leading Ethical AI Development (LEAD) for Kids Act prohibits "making a companion chatbot available to a child unless the companion chatbot is not foreseeably capable of doing certain things that could harm a child." The bill's introduction specifies the "things" that could harm a child as genuinely bad stuff: self-harm, suicidal ideation, violence, consumption of drugs or alcohol, and disordered eating.

Unfortunately, the bill's ambiguous language leaves unclear which outputs from a companion chatbot would meet these criteria. The verb attached to these categories is not "telling," "directing," "mandating," or some other directive, but "encouraging."

Taylor Barkley, director of public policy for the Abundance Institute, tells Reason that, "by hinging liability on whether an AI 'encourages' harm—a word left dangerously vague—the law risks punishing companies not for urging bad behavior, but for failing to block it in just the right way." Notably, the bill does not merely bar operators from making available to children chatbots that encourage self-harm; it also bars chatbots that are merely "foreseeably capable" of doing so.

Ambiguity aside, the bill also bars companion chatbots from "offering mental health therapy to the child without the direct supervision of a licensed or credentialed professional." While traditional psychotherapy performed by a credentialed professional is associated with better mental health outcomes than chatbot therapy, it is also expensive: nearly $140 per session on average in the U.S., according to wellness platform SimplePractice. A ChatGPT Plus subscription, by contrast, costs only $20 per month. Beyond their much lower cost, AI therapy chatbots have also been associated with positive mental health outcomes.

While California has passed a bill that may reduce access to potential mental health resources, it has also passed one that stands to make residents' experiences on social media much more annoying. California's Social Media Warning Law would require social media platforms to show users under 17 years old a warning that reads, "the Surgeon General has warned that while social media may have benefits for some young users, social media is associated with significant mental health harms and has not been proven safe for young users," for 10 seconds when they first open a social media app each day. Once a user has spent three hours on a given platform over the course of a day, the warning must be displayed again for at least 30 seconds, without the option to minimize it, "in a manner that occupies at least 75 percent of the screen."

It is doubtful that this vague warning would discourage many teens from doomscrolling; warning labels rarely change consumers' behavior drastically. For example, a 2018 Harvard Business School study found that graphic warnings on soda decreased the share of sugary drinks purchased by students over two weeks by only 3.2 percentage points, and a 2019 RAND Corporation study found that graphic warning labels had no effect in discouraging regular smokers from purchasing cigarettes.

But "platforms aren't cigarettes," writes Clay Calvert, a technology fellow at the American Enterprise Institute, "[they] carry multiple expressive benefits for minors." Because social media warning labels "don't convey uncontroversial, measurable pure facts," compelling them likely violates the First Amendment's protections against compelled speech, he explains.

Shoshana Weissmann, digital director and fellow at the R Street Institute, tells Reason that both bills "would probably encourage platforms to verify user age before they can access the services, which would lead to all the same security problems we've seen again and again." These security problems include the leak of driver's licenses from AU10TIX, the identity verification company used by tech giants X, TikTok, and Uber, as reported by 404 Media in June 2024.

Both bills could well exacerbate the very problems they seek to address, poor mental health among minors and unhealthy social media habits, while making users' privacy less secure. Newsom, who has not signaled whether he will sign these measures, has the opportunity to veto both bills. Only time will tell whether he does.